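    from langchain_core.output_parsers import StrOutputParser
    from langchain_core.runnables import RunnableParallel, RunnablePassthrough

    def run_rag_chain(question_text, retriever_obj, prompt_template):
        ...
        rag_chain = (
            # Fan the question out into cleaned, retrieved context plus the raw query.
            # The "context" key is inferred here; it must match the prompt's placeholder.
            RunnableParallel({
                "context": retriever_obj | clean_context,
                "query": RunnablePassthrough(),
            })
            | prompt  # presumably built from prompt_template in the elided setup
            | llm
            | StrOutputParser()
        )
        return rag_chain.invoke(question_text)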

The RAG chain handles everything: retrieving the relevant context, formatting the prompt, generating a response, and parsing the output.
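To make the data flow through those four stages concrete, here is a minimal plain-Python sketch. It is not LangChain code: `DOCS`, `retrieve`, `build_inputs`, `format_prompt`, `fake_llm`, `parse_output`, and `run_pipeline` are hypothetical stand-ins, and the keyword-matching retriever and echoing "model" exist only so the example runs without any external service.

```python
# Hypothetical stand-ins for the four stages; none of this is the article's code.
DOCS = [
    "Cosine similarity measures the angle between two vectors.",
    "LangChain composes runnables with the | operator.",
]

def retrieve(question: str) -> list[str]:
    """Stand-in retriever: keep documents that mention a longer word from the question."""
    terms = [w for w in question.lower().rstrip("?").split() if len(w) > 4]
    return [doc for doc in DOCS if any(t in doc.lower() for t in terms)]

def clean_context(chunks: list[str]) -> str:
    """Join the retrieved chunks into a single context string."""
    return "\n".join(chunks)

def build_inputs(question: str) -> dict:
    """Mirror the parallel step: one input fans out into 'context' and 'query'."""
    return {"context": clean_context(retrieve(question)), "query": question}

def format_prompt(inputs: dict) -> str:
    """Fill a simple prompt layout with the context and the question."""
    return f"Context:\n{inputs['context']}\n\nQuestion: {inputs['query']}"

def fake_llm(prompt: str) -> dict:
    """Stand-in model: echo the first context line inside a message-like dict."""
    first_context_line = prompt.splitlines()[1]
    return {"content": f"  Based on the context: {first_context_line}  "}

def parse_output(message: dict) -> str:
    """Reduce the message-like dict to a clean string."""
    return message["content"].strip()

def run_pipeline(question: str) -> str:
    # Same order as the chain: inputs -> prompt -> model -> parser.
    return parse_output(fake_llm(format_prompt(build_inputs(question))))

print(run_pipeline("What does cosine similarity measure?"))
```

Each function plays the role of one link in the piped chain, so swapping a stand-in for the real retriever or model leaves the overall shape unchanged.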

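    import numpy as np
    # Older LangChain releases expose this class from langchain_community.embeddings.
    from langchain_huggingface import HuggingFaceEmbeddings
    from sklearn.metrics.pairwise import cosine_similarity

    def calculate_confidence_score(reply: str, context_chunks: list):
        """Calculate a confidence score for an answer."""
        embedder = HuggingFaceEmbeddings(model_name='sentence-transformers/all-MiniLM-L6-v2')
        # Embed the answer and the concatenated context, then compare the two vectors.
        answer_embedding = embedder.embed_query(reply)
        context_text = " ".join([chunk.page_content for chunk in context_chunks])
        context_embedding = embedder.embed_query(context_text)
        rating = cosine_similarity(
            [np.array(answer_embedding)],
            [np.array(context_embedding)]
        )[0][0]
        return round(float(rating), 2)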

Explanation:

This function uses cosine similarity to check how semantically similar the generated answer is to the original document chunks. The score normally falls between 0 and 1 and is shown with a label: Low / Medium / High.
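As a rough illustration of the arithmetic, the sketch below computes cosine similarity in plain Python on two toy vectors and maps the result to a label. The vectors stand in for real embeddings, and the `confidence_label` helper with its 0.4 and 0.7 cut-offs is a hypothetical example; the article does not state which thresholds ScholarMate uses.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as having no similarity
    return dot / (norm_a * norm_b)

def confidence_label(score, low=0.4, high=0.7):
    """Map a score to a label. The thresholds are assumed, not from the article."""
    if score >= high:
        return "High"
    if score >= low:
        return "Medium"
    return "Low"

# Toy 3-dimensional "embeddings"; real ones have hundreds of dimensions.
answer_vec = [1.0, 2.0, 2.0]
context_vec = [2.0, 1.0, 2.0]

# dot = 8, both norms = 3, so the similarity is 8/9.
score = round(cosine_similarity(answer_vec, context_vec), 2)
print(score, confidence_label(score))  # 0.89 High
```

Since a single vector represents the whole concatenated context, the score reflects overall topical overlap, not whether each individual claim is supported.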

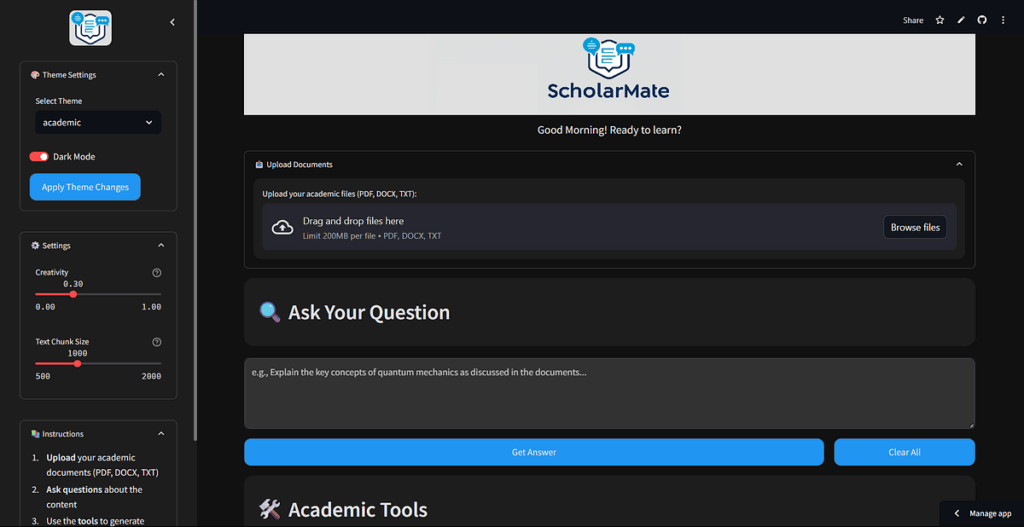

ScholarMate defines each academic assistant feature as a LangChain Tool. These tools use @tool decorators to abstract away the retrieval and generation steps, and they rely on run_rag_chain() internally.