Picture this: you're looking at a photo of a sunset, and instead of soaking in the whole scene, you slice it into little 16×16 pixel squares, shuffle them around, and still figure out it's a sunset. Sounds like a weird art project, but it's the core of a 2021 paper that shook up computer vision: "An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale" by Alexey Dosovitskiy and team. This isn't just a cute trick. It's a full-on rethink of how machines process images, swapping out old-school convolution for something borrowed from the language world. Let's dig in, get technical, and keep it real.

Transformers 101: From Text to Pixels

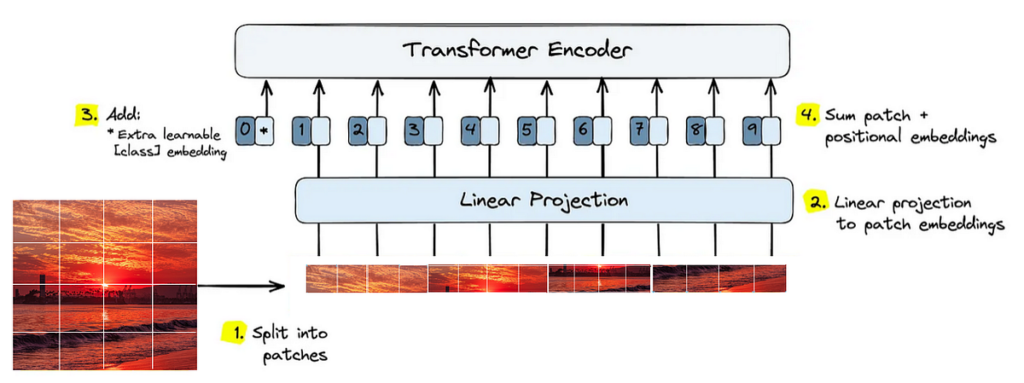

Transformers first strutted onto the scene in 2017 with Vaswani et al.'s "Attention Is All You Need," designed to handle sentences by focusing on how words link up. Fast forward to ViT (Vision Transformer), and the same idea gets a visual twist. Here's the gist: take an image, say 224×224 pixels, and chop it into 196 patches of 16×16 pixels each (because 224 ÷ 16 = 14, and 14² = 196). Each patch is a "token," like a word in a sentence. Flatten those 16×16×3 RGB values into a 768-long vector (16 × 16 × 3 = 768), and you've got something a Transformer can work with.
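To make the chopping concrete, here's a minimal NumPy sketch of the patch step. The `patchify` helper and the random stand-in image are my own illustration, not code from the paper, and a real pipeline would load and normalize an actual photo first.

```python
import numpy as np

def patchify(image, patch_size=16):
    """Split an (H, W, C) image into flattened, non-overlapping patches."""
    h, w, c = image.shape
    if h % patch_size or w % patch_size:
        raise ValueError("image sides must be divisible by patch_size")
    rows, cols = h // patch_size, w // patch_size
    # (rows, P, cols, P, C) -> (rows, cols, P, P, C) -> (rows * cols, P * P * C)
    patches = image.reshape(rows, patch_size, cols, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)
    return patches.reshape(rows * cols, patch_size * patch_size * c)

rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))  # random stand-in for a real RGB photo
patches = patchify(image)
print(patches.shape)  # (196, 768)
```

Patches come out in row-major order, so `patches[0]` is the top-left square and `patches[195]` is the bottom-right one.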

But it's not just a chop-and-drop job. Each patch vector gets a linear projection (think of it as a quick math massage) into a fixed-size embedding (like 768 dimensions). Add a sprinkle of positional encoding (so the model knows patch #5 isn't patch #50), and toss in a special [CLS] token to summarize the whole image. Now, feed this sequence into the Transformer's layers. What comes out? A prediction, like "yep, that's a sunset."
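Here's a rough sketch of that embedding step in NumPy. In a trained model the projection, the [CLS] token, and the position embeddings are all learned; the random values and the names `W_proj`, `cls_token`, and `pos_embed` below are placeholders I made up for illustration. Note that prepending [CLS] bumps the sequence from 196 to 197 tokens.

```python
import numpy as np

rng = np.random.default_rng(0)
num_patches, patch_dim, embed_dim = 196, 768, 768

# Random stand-in for the flattened patches of one image.
patches = rng.random((num_patches, patch_dim))

# Learned in a real model; random here purely for illustration.
W_proj = rng.normal(scale=0.02, size=(patch_dim, embed_dim))
b_proj = np.zeros(embed_dim)
cls_token = rng.normal(scale=0.02, size=(1, embed_dim))
pos_embed = rng.normal(scale=0.02, size=(num_patches + 1, embed_dim))

tokens = patches @ W_proj + b_proj                    # linear projection: (196, 768)
tokens = np.concatenate([cls_token, tokens], axis=0)  # prepend [CLS]: (197, 768)
tokens = tokens + pos_embed                           # add position information
print(tokens.shape)  # (197, 768)
```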

Self-Attention: The Math That Ties It All Together

Here's where the magic happens: self-attention. Unlike CNNs, which slide filters over an image to catch local patterns, ViT looks at everything at once. For each patch, it asks, "How much do I care about every other patch?" The math is slick but not scary, so let's break it down.

Say you've got your sequence of patch embeddings, a matrix X with shape (196, 768), or (197, 768) once the [CLS] token is counted. The Transformer projects X into three vectors per patch: Query (Q), Key (K), and Value (V), each computed with a weight matrix (W_Q, W_K, W_V). The attention score for patch i caring about patch j is entry (i, j) of the softmax in:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V$$

Here, $QK^T$ measures how "similar" patches are (via dot product), $\sqrt{d_k}$ keeps things stable (d_k is the key dimension: 768 for a single head, or 64 per head when ViT-Base splits 768 across 12 heads), and softmax turns those scores into weights between 0 and 1 that sum to 1 for each patch. Multiply by V, and you've got a new representation of every patch, weighted by its relationships. Stack a few layers of this (12 in ViT-Base, 24 in ViT-Large), with multi-head attention splitting the work, and you've got a model that sees the forest and the trees.
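If you'd rather read code than symbols, here's a single-head sketch of softmax(QK^T / sqrt(d_k)) V in NumPy. The weights are random rather than trained, and d_k = 64 is an assumed per-head size (768 split across 12 heads), so treat it as a shape-level illustration and not ViT's actual implementation.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_Q, W_K, W_V):
    """Single-head scaled dot-product self-attention."""
    Q, K, V = X @ W_Q, X @ W_K, X @ W_V
    d_k = K.shape[-1]
    weights = softmax(Q @ K.T / np.sqrt(d_k))  # row i: how much patch i attends to each patch j
    return weights @ V, weights

rng = np.random.default_rng(0)
n, d_model, d_k = 196, 768, 64  # d_k = 64 is an assumed per-head size
X = rng.normal(size=(n, d_model))
W_Q, W_K, W_V = (rng.normal(scale=0.02, size=(d_model, d_k)) for _ in range(3))

out, weights = self_attention(X, W_Q, W_K, W_V)
print(out.shape, weights.shape)  # (196, 64) (196, 196)
```

Multi-head attention runs several of these in parallel with separate weight matrices and concatenates the outputs back to the full embedding width.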

ViT's power comes with a price tag. The paper tested it on beasts like JFT-300M (300 million images) and ImageNet-21k (14 million), where it smoked CNNs like ResNet. On vanilla ImageNet (1.3 million images), though? It lagged unless pre-trained on bigger datasets first. Why? Self-attention doesn't assume local structure like CNNs do. It has to learn those spatial relationships from scratch, which takes serious data.

Compute's another beast. For a 224×224 image, ViT processes 196 patches per layer, with attention scaling quadratically: O(n^2), where n = 196. Compare that to a CNN's local filters, and ViT's a gas guzzler. But on big hardware (think TPUs or beefy GPUs), it flexes hard.
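A quick back-of-the-envelope script shows how fast that n^2 term grows. The `attention_cost` helper is just my own arithmetic on patch counts; it ignores the [CLS] token, the number of heads, and everything outside the attention matrix.

```python
def attention_cost(image_size, patch_size=16):
    """Return (number of patches n, number of pairwise attention scores n^2)."""
    if image_size % patch_size:
        raise ValueError("image_size must be divisible by patch_size")
    n = (image_size // patch_size) ** 2
    return n, n * n

for size in (224, 384, 512):
    n, pairs = attention_cost(size)
    print(f"{size}x{size}: {n} patches, {pairs:,} attention scores per head per layer")
# 224x224: 196 patches, 38,416 attention scores per head per layer
# 384x384: 576 patches, 331,776 attention scores per head per layer
# 512x512: 1024 patches, 1,048,576 attention scores per head per layer
```

Going from 224 to 512 pixels per side is roughly 5x the pixels but about 27x the attention scores.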

Why This Rocks, and Where It's Going

ViT isn't just a lab toy. It's proof that Transformers can flex beyond text, opening doors to hybrid models for video, medical imaging, and even generative art. Since 2021, folks have hacked on it: Swin Transformers shrink the attention window, EfficientViT cuts compute costs. It's raw, it's hungry, but it's a spark.

For you? Maybe you're a coder itching to build something cool. Or maybe you just like knowing how your phone's camera gets smarter every year. Either way, ViT's a reminder: cross-pollinate ideas, and wild stuff happens.

Hands-On: Code It Yourself

Let's get our hands dirty with some Python. Here's a stripped-down ViT demo using Hugging Face's transformers. You'll need transformers, torch, and Pillow; install them with pip install transformers torch pillow.

```python
import torch
from PIL import Image
from transformers import ViTImageProcessor, ViTForImageClassification

# Load pre-trained ViT (base model, 224x224 input)
processor = ViTImageProcessor.from_pretrained('google/vit-base-patch16-224')
model = ViTForImageClassification.from_pretrained('google/vit-base-patch16-224')

# Grab an image, say, a bird
image = Image.open("backyard_bird.jpg").convert("RGB")

# Preprocess it: resize, rescale, normalize (patching happens inside the model)
inputs = processor(images=image, return_tensors="pt")  # pixel_values shape: (1, 3, 224, 224)

# Forward pass
with torch.no_grad():
    outputs = model(**inputs)  # Logits: (1, 1000) for ImageNet classes

predicted_idx = outputs.logits.argmax(-1).item()

# Decode the guess
labels = model.config.id2label
print(f"ViT says: {labels[predicted_idx]}")
```

This loads ViT-Base (86 million parameters, 12 layers), splits your image into 196 patches, and spits out a guess. Swap "backyard_bird.jpg" for your own file and see what happens. Pro tip: peek at inputs.pixel_values to see the preprocessed tensor, shape (1, 3, 224, 224), before the model patches it and munches on it.
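A single label hides how confident the model was. Here's a small sketch for turning logits into a ranked top-k list. The `top_k_labels` helper and the toy logits and labels are hypothetical, made up so the snippet runs without downloading anything; with the real model you could presumably pass `outputs.logits[0].numpy()` and `model.config.id2label` instead.

```python
import numpy as np

def top_k_labels(logits, id2label, k=5):
    """Return the k most likely (label, probability) pairs from raw logits."""
    logits = np.asarray(logits, dtype=np.float64)
    probs = np.exp(logits - logits.max())  # subtract max for numerical stability
    probs /= probs.sum()
    top = np.argsort(probs)[::-1][:k]      # indices sorted by descending probability
    return [(id2label[int(i)], float(probs[i])) for i in top]

# Hypothetical logits and labels, purely for illustration.
toy_logits = [2.0, 0.5, 3.1, -1.0]
toy_labels = {0: "robin", 1: "sparrow", 2: "blue jay", 3: "toaster"}

for label, prob in top_k_labels(toy_logits, toy_labels, k=3):
    print(f"{label}: {prob:.3f}")
```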

The Bottom Line

"An Image is Worth 16x16 Words" isn't perfect: it's a data hog, a compute beast, and a bit of a diva without pre-training. But it's also a bold swing, proving vision doesn't need convolution's crutches. With self-attention and a pile of patches, it's rewriting the rules. So, grab an image, slice it up, and let ViT tell you its story, math and all.

What's your take? Tried the code? Got a wild idea to riff on this? Hit me up. I'm all ears.