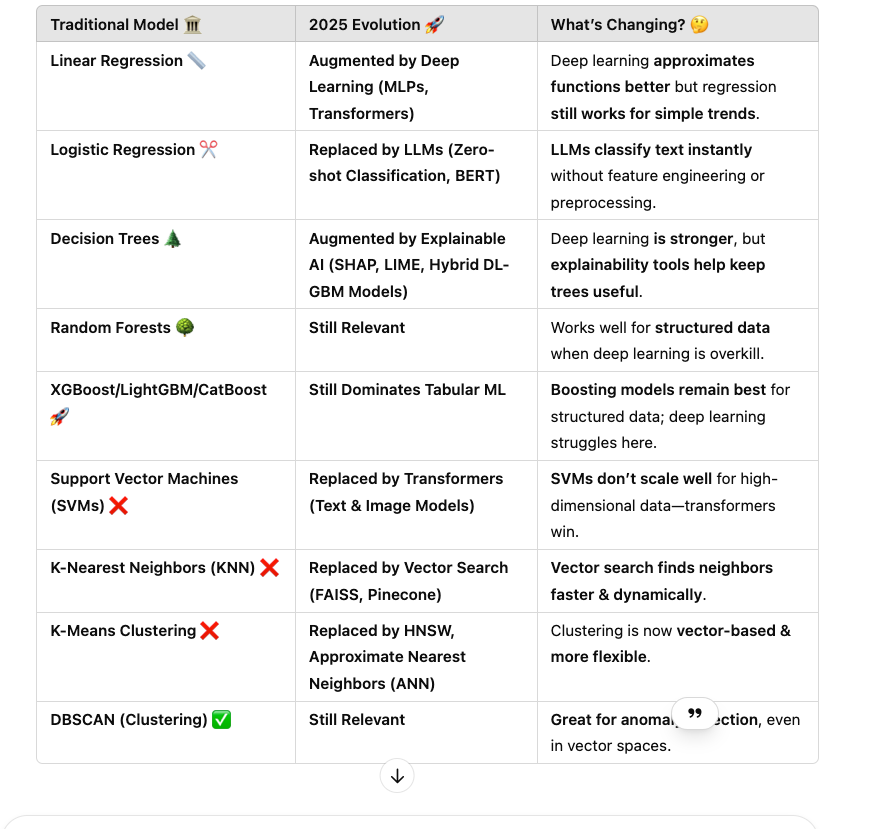

TL;DR

🔹 Before: A student thinks grades follow a straight line: study 2 hours, get exactly 10% better.

❌ Problem: Doesn’t account for burnout, distractions, or motivation boosts. Sometimes extra studying hurts!

🔹 MLP Boost: Learns hidden patterns and realizes sleep, stress, and snacks affect scores!

🔹 Transformer Upgrade: Remembers past exams & the teacher’s grading style to predict scores better.

🧠 Upgrade Effect: From basic trend guessing ➝ to AI-level forecasting! 🚀

🔹 Before: A student thinks in black & white: study 3 hours = pass, less than that = fail.

❌ Problem: Real life isn’t that simple. Some students cram last minute & pass, while others fail despite studying hard!

🔹 LLM Boost: Learns from past test scores, question difficulty, & even sleep patterns to predict passing chances more accurately!

🔹 Zero-Shot Upgrade: Can classify new situations instantly, predicting whether a student will pass even without having seen their exact study pattern before!

🧠 Upgrade Effect: From rigid yes/no thinking ➝ to nuanced AI-powered predictions! 🚀
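The jump from a hard cutoff to a probability can be sketched with a tiny logistic regression trained by gradient descent. This is plain logistic regression on made-up data, not an LLM; the point is only to show a yes/no rule next to a pass probability.

```python
import math

# Hypothetical data: hours studied and whether the student passed (1) or failed (0).
hours = [1, 2, 2.5, 3, 3.5, 4, 5, 6]
passed = [0, 0, 1, 0, 1, 1, 1, 1]

def rigid_rule(h):
    """Black-and-white thinking: 3+ hours means pass."""
    return 1 if h >= 3 else 0

def sigmoid(z):
    return 1 / (1 + math.exp(-z))

def fit_logistic(xs, ys, lr=0.1, steps=5000):
    """Plain gradient descent on the average log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum((sigmoid(w * x + b) - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

w, b = fit_logistic(hours, passed)

def pass_probability(h):
    return sigmoid(w * h + b)

for h in (1, 3, 6):
    print(f"{h} hours: rule says {rigid_rule(h)}, model says {pass_probability(h):.2f}")
```

The rule flips from 0 to 1 at exactly 3 hours, while the fitted model gives a chance of passing that rises smoothly with study time.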

🔹 Before: A student memorizes every test question & answer without understanding concepts.

❌ Problem: Overfitting! If the exam format changes, they panic & fail because they can’t generalize.

🔹 LLM + Explainable AI Boost:

- Now the student understands patterns instead of just memorizing.

- Uses SHAP & LIME to explain why an answer is correct, like a teacher breaking down difficult questions.

- Can adapt to new test formats by using past knowledge (Hybrid Deep Learning + GBM models).

🧠 Upgrade Effect: From rigid memorization ➝ to adaptive reasoning with explainability! 🚀
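SHAP is built on Shapley values, so here is a rough sketch of that idea computed by brute force. It does not use the `shap` library; the scoring function, the baseline student, and the feature names are all hypothetical, and enumerating every ordering only works for a handful of features.

```python
from itertools import permutations

# Hypothetical model: predicted exam score from three inputs.
def predict(sleep, study, stress):
    return 40 + 3 * sleep + 5 * study - 2 * stress + 0.5 * sleep * study

baseline = {"sleep": 6, "study": 2, "stress": 5}  # an assumed "average" student
student = {"sleep": 8, "study": 4, "stress": 3}   # the student we want to explain

def value(present):
    """Model output when only the `present` features take the student's values."""
    inputs = {k: (student[k] if k in present else baseline[k]) for k in baseline}
    return predict(**inputs)

def shapley_values():
    """Average each feature's marginal contribution over every ordering."""
    names = list(baseline)
    orders = list(permutations(names))
    contrib = {k: 0.0 for k in names}
    for order in orders:
        present = set()
        for name in order:
            before = value(present)
            present.add(name)
            contrib[name] += value(present) - before
    return {k: v / len(orders) for k, v in contrib.items()}

phi = shapley_values()
gap = predict(**student) - predict(**baseline)
for name, share in phi.items():
    print(f"{name}: {share:+.1f} points")
print(f"total explained: {sum(phi.values()):.1f} of {gap:.1f}")
```

The per-feature shares add up to the gap between this student's predicted score and the baseline's, which is what lets the explanation read like a teacher's breakdown.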

4️⃣ 🌳 Random Forest → 100 Students Now Have Shared Memory & Instant Group Chat

🔹 Before: 100 students study slightly different versions of the book & vote on answers.

🔹 LLM Augmented: Now, students share knowledge instantly via AI (like federated learning), reducing redundant errors.

🧠 Upgrade Effect: From independent learners to a super-synced AI-powered decision team.
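Why does voting help at all? A quick simulation of the "Before" picture, under a strong simplifying assumption: each of the 100 students is right 60% of the time and they make mistakes independently. Real random forest trees are correlated, so treat this as intuition rather than a forest implementation.

```python
import random

random.seed(42)

N_STUDENTS = 100    # voters in the "forest"
P_CORRECT = 0.6     # assumed chance that one student answers correctly
N_QUESTIONS = 2000  # simulated exam questions

single_right = 0
vote_right = 0
for _ in range(N_QUESTIONS):
    answers = [random.random() < P_CORRECT for _ in range(N_STUDENTS)]
    single_right += answers[0]                   # one student on their own
    vote_right += sum(answers) > N_STUDENTS / 2  # majority vote; ties count as wrong

single_acc = single_right / N_QUESTIONS
vote_acc = vote_right / N_QUESTIONS
print(f"one student:   {single_acc:.2f}")
print(f"majority vote: {vote_acc:.2f}")
```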

5️⃣ 🚀 XGBoost / LightGBM / CatBoost (Boosting) → Student Now Learns From Global Mistakes, Not Just Their Own

🔹 Before: One student keeps learning from past mistakes & improves after each test.

🔹 LLM Augmented: Now, the student also learns from worldwide test patterns, teacher biases, & related subjects!

🧠 Upgrade Effect: From sequential self-learning to reinforcement-learning AI (like fine-tuned LLMs).
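The "learns from past mistakes" loop is the core of boosting, and it fits in a few lines. Below is a bare-bones sketch of gradient boosting for squared error with one-split stumps on made-up data. It leaves out everything that makes XGBoost, LightGBM, and CatBoost fast and robust (regularization, histograms, categorical handling), and the helper names are my own.

```python
# Hypothetical data: hours studied -> exam score.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [52, 55, 61, 70, 74, 75, 73, 68]

def fit_stump(xs, residuals):
    """Find the single split (threshold, left mean, right mean) with the lowest squared error."""
    best = None
    for t in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lm, rm)
    return best[1:]

def boost(xs, ys, rounds=20, lr=0.5):
    """Each round fits a stump to the current mistakes (residuals) and adds part of it."""
    base = sum(ys) / len(ys)
    preds = [base] * len(ys)
    history = []
    for _ in range(rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        t, lm, rm = fit_stump(xs, residuals)
        preds = [p + lr * (lm if x <= t else rm) for x, p in zip(xs, preds)]
        history.append(sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys))
    return preds, history

preds, history = boost(xs, ys)
print(f"MSE after round 1:  {history[0]:.2f}")
print(f"MSE after round 20: {history[-1]:.2f}")
```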

6️⃣ ❌ SVM → Student Now Admits They Can’t Keep Up With AI-Powered Complexity

🔹 Before: Uses a strict rulebook but struggles with large textbooks.

🔹 LLM Augmented: Student realizes deep learning models now handle high-dimensional data better (text, images).

🧠 Reality Check: SVM is replaced by transformers for text & image tasks.

7️⃣ ❌ K-Nearest Neighbors (KNN) → Student Now Uses AI Instead of Asking Friends

🔹 Before: Asks closest friends for answers based on their past experiences.

🔹 LLM Augmented: Instead of asking 10,000 students (slow), the student accesses AI-powered vector search (FAISS, Pinecone) for instant retrieval!

🧠 Upgrade Effect: From slow manual lookup to real-time AI recommendations.

8️⃣ ❌ K-Means Clustering → Student Now Learns from Dynamic, Context-Based Groups

🔹 Before: Groups students into fixed categories (math group, art group).

🔹 LLM Augmented: Now, AI clusters students dynamically based on evolving skills, cross-domain expertise, & peer influence.

🧠 Upgrade Effect: From static clustering to AI-powered, flexible group formation (like HNSW, Approximate Nearest Neighbors).
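For reference, the "Before" side looks like this in code: a minimal K-Means sketch on made-up (math score, art score) pairs. Note that `k` has to be chosen up front, and the naive "first k points" initialization is an assumption made to keep the example deterministic.

```python
def dist2(p, q):
    """Squared Euclidean distance."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def centroid(cluster):
    n = len(cluster)
    return tuple(sum(coords) / n for coords in zip(*cluster))

def kmeans(points, k, iters=10):
    centroids = list(points[:k])  # naive init: the first k points
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: dist2(p, centroids[c]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members.
        centroids = [centroid(cl) if cl else centroids[i] for i, cl in enumerate(clusters)]
    return centroids, clusters

# Hypothetical students as (math score, art score).
students = [(90, 40), (35, 95), (85, 45), (95, 35), (40, 90), (30, 85)]
centroids, clusters = kmeans(students, k=2)
print("math group:", clusters[0])
print("art group: ", clusters[1])
```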

9️⃣ ✅ DBSCAN (Clustering) → Student Now Detects Anomalies in Real Time

🔹 Before: Finds outliers, detecting students who study very differently.

🔹 LLM Augmented: AI detects emerging trends, social dynamics, & unusual behaviors instantly (like AI-powered fraud detection).

🧠 Upgrade Effect: From basic anomaly detection to AI-powered real-time insights.
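Here is a compact sketch of how DBSCAN flags the student who studies very differently. The data, `eps`, and `min_pts` are illustrative choices, and the neighbor lookup is a simple full scan rather than the spatial index a production library would use.

```python
import math

def dbscan(points, eps, min_pts):
    """Return a label per point: 0, 1, ... for clusters, -1 for noise (outliers)."""
    labels = [None] * len(points)  # None means "not visited yet"

    def neighbors(i):
        return [j for j in range(len(points)) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        nbrs = neighbors(i)
        if len(nbrs) < min_pts:
            labels[i] = -1  # not dense enough: provisionally noise
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in nbrs if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point that was earlier marked as noise
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_nbrs = neighbors(j)
            if len(j_nbrs) >= min_pts:  # core point: keep expanding the cluster
                queue.extend(j_nbrs)
    return labels

# Hypothetical students as (study hours, sleep hours) per day.
students = [
    (2.0, 7.0), (2.2, 7.1), (2.1, 6.9), (1.9, 7.2),  # light studiers
    (12.0, 3.0),                                     # studies very differently
    (6.0, 6.0), (6.1, 6.2), (5.9, 5.9), (6.2, 6.1),  # heavy studiers
]
labels = dbscan(students, eps=0.5, min_pts=3)
print(labels)
```

Unlike K-Means, nothing here fixes the number of groups in advance; the outlier simply ends up with the noise label `-1`.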

Regression assumes that if one factor changes, the outcome will follow a predictable pattern. But in the real world, trends aren’t straight: things like unexpected events, human behavior, and market shifts make regression models unreliable. 🚀

🚀 LLMs & Deep Learning Automate Regression

│

┌─────────────────────────────┴─────────────────────────────┐

│ │

🔥 Deep Learning Handles Non-Linearity 📜 LLMs Do Text-Based Classification

│ │

▼ ▼

❌ Linear Regression Can't Handle Complex Trends ❌ Logistic Regression Can't Compete with Zero-Shot Learning

│ │

▼ ▼

🏆 Neural Networks Approximate Any Function 🔥 BERT & GPT Handle Classification Without Preprocessing

│ │

▼ ▼

💀 Regression is Becoming a Subset of Deep Learning!

🔥 Final Verdict for Regression:

🚀 Deep Learning + LLMs + Hybrid AI = The future of financial forecasting.

Logistic Regression has long been the go-to model for binary classification (yes/no, spam/not spam, fraud/not fraud). But it’s now getting replaced.

🚀 LLMs Replace Manual Text Classification

│

┌─────────────────────────────┴─────────────────────────────┐

│ │

🔥 LLMs Learn Text Context Directly 🤖 Zero-Shot Learning Works Instantly

│ │

▼ ▼

❌ Logistic Regression Needs Manual Features ❌ Requires Stopword Removal & Tokenization

│ │

▼ ▼

🏆 BERT & GPT Understand Text Meaning 🔥 LLMs Classify Without Preprocessing

│ │

▼ ▼

💀 Logistic Regression is Becoming a Special Case of LLMs!

🛑 Example:

- “COVID-19 vaccines cause 5G tracking” → Logistic Regression might misclassify this as neutral if words like ‘safe’ appear.

- LLMs can detect the false claim by understanding context & scientific facts.
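One reason a word-count model gets fooled: a bag-of-words representation throws away word order, so any linear model on top of it (logistic regression included) has to give the same score to sentences that use the same words. A small sketch, with made-up word weights:

```python
from collections import Counter

# Hypothetical learned weights: positive pushes toward "flag as misinformation".
weights = {"safe": -2.0, "dangerous": 1.5, "not": 0.5}

def bag_of_words(text):
    """Count words, ignoring their order."""
    return Counter(text.lower().split())

def linear_score(text):
    """Score = sum of (word weight * word count), as in a linear classifier."""
    return sum(weights.get(word, 0.0) * count for word, count in bag_of_words(text).items())

a = "the vaccine is safe not dangerous"
b = "the vaccine is dangerous not safe"

print(bag_of_words(a) == bag_of_words(b))  # same features...
print(linear_score(a), linear_score(b))    # ...so the same score, despite opposite meanings
```

Models that read tokens in sequence, such as BERT or GPT, do see the difference between the two sentences.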

🔥 Final Verdict for Logistic Regression:

✅ Still Used for Simple Structured Data: Credit Scoring (Bank Loans) 💰. Banks still use it to predict default risk when deep learning is overkill. Doctors use Logistic Regression for binary disease predictions (diabetes: yes/no).

❌ Dying in Big Tech & AI Applications: Companies need models that adapt, scale, and work with unstructured data.

🚀 Decision Trees were once the go-to for structured decision-making, but Explainable AI (XAI) is taking over. Let’s look at real-world examples where decision trees fail, and XAI-powered models outperform.

Real-World Case Study: Decision Trees 📉 vs. Explainable AI 🚀

✅ Why are they called Boosting, Bagging, and Stacking? (In Simple English)

- Decision Trees → ❌ Overfit easily because the model is a single tree with hard splits.

- Random Forests → ✅ Balance complexity by averaging many trees, reducing overfitting.

- Deep Learning → 🚀 Overkill for structured data because it needs massive data & compute to outperform RF.

So, Random Forests are the sweet spot: more stable than Decision Trees but not as overkill as Deep Learning.

✅ When is Deep Learning Overkill?

✅ When Does Deep Learning Actually Win?

🔥 Biggest Takeaway? Random Forests still rule structured tabular data, while deep learning dominates unstructured problems. 🚀

- Boosting Models: How much does income affect loan approval? → 45% importance score 📊

- LLMs: Does income affect loan approval? → “Higher income is usually better.” 🤖 (No numerical proof!)

### 🤖 Why SVM is Fading & Deep Learning is Taking Over

│

┌──────────────────────────────┴──────────────────────────────┐

│ │

✅ **SVM is great for small datasets** 🚀 **Deep Learning excels at large-scale AI**

│ │

▼ ▼

⚠️ **SVM needs kernel tricks for complex data** ✅ **DL learns features automatically (CNNs, Transformers)**

│ │

▼ ▼

❌ **SVM struggles with high-dimensional data** ✅ **Deep Learning scales better with massive features**

│ │

▼ ▼

🔥 **Final Verdict: SVM is outdated for modern AI!** DL dominates large-scale text & image tasks 🎯

🔥 Final Verdict: SVMs are history for large-scale AI, and deep learning wins! 🎯
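For readers who have not met the "kernel trick" mentioned above, here is a small numeric check of the idea, using a degree-2 polynomial kernel on two made-up 2D points. The kernel returns the same number as a dot product in a hand-built, higher-dimensional feature space, without ever constructing that space. No SVM is trained in this sketch.

```python
import math

def poly_kernel(x, y):
    """Degree-2 polynomial kernel: k(x, y) = (x . y)^2."""
    return (x[0] * y[0] + x[1] * y[1]) ** 2

def feature_map(x):
    """Explicit features whose dot product reproduces the kernel above."""
    return (x[0] ** 2, math.sqrt(2) * x[0] * x[1], x[1] ** 2)

x, y = (1.0, 2.0), (3.0, 4.0)
via_kernel = poly_kernel(x, y)
via_features = sum(a * b for a, b in zip(feature_map(x), feature_map(y)))
print(via_kernel, via_features)
```

A deep network learns its feature map from data, whereas here the kernel (and so the implied features) is fixed in advance by whoever sets up the SVM.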

### 🔍 Why KNN is Dying & Vector Search is the Future

│

┌──────────────────────────────┴──────────────────────────────┐

│ │

✅ **KNN works for small datasets** 🚀 **Vector Search scales dynamically**

│ │

▼ ▼

⚠️ **KNN finds neighbors by brute force** ✅ **Vector Search uses ANN (FAISS, HNSW) for speed**

│ │

▼ ▼

❌ **Slow when dataset grows (millions of points)** ✅ **Vector Search handles billions of vectors efficiently**

│ │

▼ ▼

📉 **Struggles with real-time recommendations** 🏆 **Powering Google Search, Amazon, and ChatGPT’s RAG!**

│ │

▼ ▼

🔥 **Final Verdict: KNN is outdated!** Vector Search wins for AI & large-scale retrieval 🎯

🔥 Final Verdict:

KNN was great for small datasets in 2010, but Vector Search is the future of AI-powered search, recommendations, and retrieval! 🚀
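To show the difference between brute-force KNN and approximate nearest neighbor (ANN) search, here is a toy sketch using random-hyperplane hashing (a simple form of LSH). This is a stand-in chosen because it fits in a few lines: FAISS and HNSW use different and far more refined index structures, and the sizes and names below are arbitrary.

```python
import math
import random

random.seed(0)
DIM, N_PLANES, N_VECTORS = 8, 6, 2000

def rand_vec():
    return [random.gauss(0, 1) for _ in range(DIM)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cosine(a, b):
    return dot(a, b) / math.sqrt(dot(a, a) * dot(b, b))

vectors = [rand_vec() for _ in range(N_VECTORS)]  # the "database"
planes = [rand_vec() for _ in range(N_PLANES)]    # random hyperplanes for hashing

def bucket_key(v):
    """One bit per hyperplane: which side of the plane the vector falls on."""
    return tuple(dot(v, p) >= 0 for p in planes)

# Build the index once: similar vectors tend to land in the same bucket.
index = {}
for i, v in enumerate(vectors):
    index.setdefault(bucket_key(v), []).append(i)

def brute_force_search(query):
    """Classic KNN (k=1): compare against every stored vector."""
    return max(range(N_VECTORS), key=lambda i: cosine(query, vectors[i]))

def approx_search(query):
    """ANN: only compare against vectors in the query's bucket."""
    candidates = index.get(bucket_key(query), [])
    best = max(candidates, key=lambda i: cosine(query, vectors[i]), default=None)
    return best, len(candidates)

query = list(vectors[42])  # a query identical to a stored vector
exact = brute_force_search(query)
approx, n_checked = approx_search(query)
print(f"brute force checked {N_VECTORS} vectors, found #{exact}")
print(f"bucket search checked {n_checked} vectors, found #{approx}")
```

The trade-off is the "approximate" part: a query that sits near a hyperplane can land in a different bucket from its true nearest neighbor, which is why real systems probe several buckets or use graph-based indexes.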

K-Means Clustering ❌ vs. HNSW & ANN 🚀

│

┌──────────────────────────────┴──────────────────────────────┐

│ │

✅ **K-Means works for small datasets** 🚀 **HNSW & ANN scale to billions of data points**

│ │

▼ ▼

⚠️ **K-Means requires predefined clusters (K value)** ✅ **Vector clustering is flexible, finds natural structures dynamically**

│ │

▼ ▼

❌ **Fails on high-dimensional data (text, images)** ✅ **Vector embeddings cluster documents, videos, & user behavior**

│ │

▼ ▼

📉 **Struggles with real-time clustering** 🏆 **Powering Google, Amazon, and AI-driven recommendations!**

│ │

▼ ▼

🔥 **Final Verdict:** K-Means is too rigid! Vector-based clustering wins for AI & large-scale applications. 🎯

🌍 Real-World Examples of K-Means vs. Vector-Based Clustering

🔥 Final Verdict:

K-Means is outdated for high-dimensional, dynamic clustering. Vector-Based Clustering (HNSW, ANN) is the future of AI-driven search, recommendations, and anomaly detection! 🚀