In today’s fast-paced world, artificial intelligence (AI) is everywhere: in our smartphones, our streaming services, and even our healthcare systems. But with this surge in AI technology comes a wave of buzzwords that can leave us feeling confused and overwhelmed. Terms like “machine learning,” “deep learning,” “natural language processing,” and “large language models” are thrown around in conversations, news articles, and tech talks, but what do they actually mean?

In this article, we’ll break these AI buzzwords down into simple, easy-to-understand concepts. Whether you’re a tech enthusiast, a curious learner, or someone who just wants to keep up with the latest trends, this guide is for you. We’ll skip the jargon and go straight to what these terms mean, how they work, and why they matter. By the end, you’ll have a clear picture of the AI landscape and be able to navigate it with confidence.

So, let’s demystify these AI buzzwords and make sense of the technology that’s shaping our future.

- Artificial Intelligence, or AI, is the ability of computers to perform tasks that have typically required human intelligence. The term was coined in 1955 by John McCarthy in the proposal for the Dartmouth summer research project on AI (held in 1956); McCarthy later defined it as “the science and engineering of making intelligent machines.”

- Machine Learning (ML) is a subset of AI focused on developing algorithms that let computers learn from data and make predictions or decisions based on it. ML models typically improve their performance as they are exposed to more data.

- Deep Learning is a type of machine learning that uses neural networks with many layers (deep neural networks) to learn increasingly abstract features from data. It is particularly effective for tasks like image and speech recognition.

Machine Learning (ML) is a subset of artificial intelligence that focuses on developing algorithms and statistical models that enable computers to perform specific tasks without explicit instructions. Instead of being programmed with hand-written rules, ML algorithms learn patterns from data and use them to make predictions or decisions. Here’s a closer look at how machine learning works and the types it comes in:

- Data Collection: The first step in ML is gathering data relevant to the task at hand. This data can be structured (e.g., database tables) or unstructured (e.g., text, images).

- Data Preprocessing: Raw data usually needs to be cleaned and transformed into a format suitable for analysis. This includes handling missing values, normalizing data, and feature engineering.

- Model Selection: The right algorithm or model depends on the type of problem and the nature of the data. Common choices include linear regression, decision trees, and neural networks.

- Training: The model is trained on a subset of the data (the training set) to learn patterns and relationships. This involves optimizing the model’s parameters to minimize error.

- Evaluation: The model’s performance is measured on a separate subset of the data (the validation set) to check that it generalizes well to new, unseen data.

- Prediction: Once trained and validated, and typically given a final check on a held-out test set, the model can make predictions or decisions on new data.

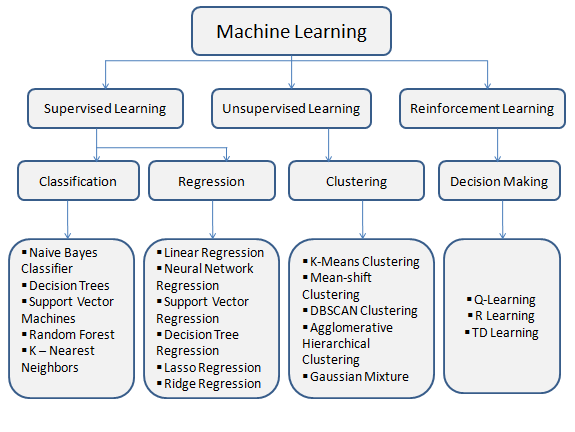
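To make the workflow above concrete, here is a minimal Python sketch of every step on a synthetic dataset. The data (house size versus price, generated from a made-up formula plus noise), the 60/20/20 split, and the helper names are illustrative assumptions. The model is plain one-feature linear regression fitted by least squares:

```python
import random

# Data collection (synthetic, for illustration only): house size in m^2 -> price in thousands.
# The "true" relationship price = 3 * size + 50 plus Gaussian noise is an assumption.
def collect_data(n=60, seed=0):
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        size = rng.uniform(40, 200)
        rows.append((size, 3 * size + 50 + rng.gauss(0, 10)))
    return rows

# Model selection: one-feature linear regression y = w * x + b, fitted by least squares.
def fit_linear(xs, ys):
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    w = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return w, my - w * mx

def mean_abs_error(model, xs, ys):
    w, b = model
    return sum(abs(w * x + b - y) for x, y in zip(xs, ys)) / len(xs)

data = collect_data()
random.Random(1).shuffle(data)  # shuffle before splitting
n = len(data)
train, val, test = data[: int(0.6 * n)], data[int(0.6 * n): int(0.8 * n)], data[int(0.8 * n):]

# Preprocessing: standardize sizes using statistics from the training set only.
train_sizes = [x for x, _ in train]
mean = sum(train_sizes) / len(train_sizes)
std = (sum((x - mean) ** 2 for x in train_sizes) / len(train_sizes)) ** 0.5

def prepare(rows):
    return [(x - mean) / std for x, _ in rows], [y for _, y in rows]

model = fit_linear(*prepare(train))               # training
val_mae = mean_abs_error(model, *prepare(val))    # evaluation (validation set)
test_mae = mean_abs_error(model, *prepare(test))  # final check (held-out test set)

w, b = model
pred = w * (120.0 - mean) / std + b               # prediction for a new 120 m^2 house
print(f"validation MAE: {val_mae:.1f}, test MAE: {test_mae:.1f}, prediction: {pred:.0f}")
```

The test set is left untouched until the very end. That is what makes its error an honest estimate of how the model will perform on genuinely new data.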

Machine learning can be broadly divided into three main types, based on the nature of the learning signal:

1. Supervised Learning

In supervised learning, the algorithm learns from labeled data, that is, input-output pairs. The goal is to learn a mapping from inputs to outputs so that the algorithm can predict the output for new, unseen inputs.

- Regression: Used for predicting continuous values. Examples include linear regression and polynomial regression.

  - Applications: Predicting house prices, stock prices, and temperature.

- Classification: Used for predicting discrete labels or categories. Examples include logistic regression, support vector machines (SVM), and decision trees.

  - Applications: Spam detection, image classification, and disease diagnosis.
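As an illustration of classification, the sketch below trains logistic regression (one of the classifiers named above) with plain gradient descent. The tiny dataset of hours studied versus pass/fail is made up, and the learning rate and epoch count are arbitrary choices for the example:

```python
import math

# Hypothetical toy data: hours studied -> passed (1) or failed (0).
hours = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0]
passed = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(xs, ys, lr=0.5, epochs=1000):
    """Fit p(y=1 | x) = sigmoid(w * x + b) by gradient descent on the cross-entropy loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [sigmoid(w * x + b) - t for x, t in zip(xs, ys)]  # prediction minus label
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * sum(errors) / n
    return w, b

# Center the feature so gradient descent is well conditioned.
center = sum(hours) / len(hours)
w, b = train_logistic([h - center for h in hours], passed)

def classify(h):
    # Threshold the predicted probability at 0.5 to get a discrete label.
    return 1 if sigmoid(w * (h - center) + b) >= 0.5 else 0

print([classify(h) for h in hours])  # -> [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
print(classify(0.2), classify(6.0))  # -> 0 1
```

In practice you would normally use a library implementation rather than hand-rolled updates. The sketch just shows that "learning" here means repeatedly nudging `w` and `b` to reduce the loss.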

2. Unsupervised Learning

Unsupervised learning trains algorithms on unlabeled data, where the goal is to find hidden patterns or intrinsic structure within the data.

- Clustering: Groups similar data points together. Examples include K-means clustering and hierarchical clustering.

  - Applications: Customer segmentation, anomaly detection, and image compression.

- Association: Discovers rules that describe large portions of the data, such as items that are frequently bought together. Examples include the Apriori and Eclat algorithms.

  - Applications: Market basket analysis, recommendation systems, and web usage mining.

- Dimensionality Reduction: Reduces the number of input features while retaining the important information. Examples include Principal Component Analysis (PCA) and t-SNE.

  - Applications: Visualization, noise reduction, and feature extraction.
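Clustering is the easiest of these to see in action. Below is a minimal Python sketch of K-means (Lloyd’s algorithm). The six 2-D points are made up and stand in for, say, customers described by two features. The farthest-first initialization is a simple deterministic choice made for this example; libraries often use k-means++ instead:

```python
import math

def kmeans(points, k, iterations=100):
    """K-means (Lloyd's algorithm) with deterministic farthest-first initialization."""
    # Initialization: start from the first point, then repeatedly add the point
    # farthest from all centroids chosen so far.
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its old centroid).
        new_centroids = [
            tuple(sum(coord) / len(cl) for coord in zip(*cl)) if cl else centroids[i]
            for i, cl in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged: centroids (and hence assignments) no longer change
        centroids = new_centroids
    return centroids, clusters

# Hypothetical 2-D data with two obvious groups.
points = [(1, 1), (1.5, 2), (2, 1.5), (8, 8), (8.5, 9), (9, 8.5)]
centroids, clusters = kmeans(points, k=2)
print(centroids)  # -> [(1.5, 1.5), (8.5, 8.5)]
```

K-means needs the number of clusters `k` up front. Depending on the initial centroids, it can settle on different solutions.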

3. Reinforcement Learning

Reinforcement learning trains agents to make decisions by taking actions in an environment so as to maximize cumulative reward. The agent learns through trial and error, receiving feedback in the form of rewards or penalties.

- Q-Learning: A model-free reinforcement learning algorithm that learns the value (expected cumulative reward) of taking each action in each state. An optimal action-selection policy can then be read off from those values.

  - Applications: Robotics, game playing, and resource management.

- Deep Reinforcement Learning: Combines reinforcement learning with deep learning to handle complex, high-dimensional environments.

  - Applications: Autonomous vehicles, game playing (e.g., DeepMind’s DQN for Atari games and AlphaGo for Go), and personalized recommendations.
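To show what Q-learning actually updates, here is a minimal tabular sketch in Python. The environment is a hypothetical five-cell corridor: the agent starts at the left end and earns a reward of 1 for reaching the right end. The hyperparameters (learning rate `alpha`, discount `gamma`, exploration rate `epsilon`) are illustrative values, not recommendations:

```python
import random

N_STATES = 5          # cells 0..4 on a line; cell 4 is the goal
ACTIONS = (-1, +1)    # move left or move right

def step(state, action):
    """Hypothetical corridor environment: reward 1 for reaching the goal, otherwise 0."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.3, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Epsilon-greedy action selection with random tie-breaking.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = step(state, action)
            # Q-learning update: move Q(s, a) toward r + gamma * max_a' Q(s', a').
            future = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
            if done:
                break
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # greedy action in each non-goal cell
```

After training, the greedy policy should choose +1 (move right) in every cell. Deep Q-learning, as in DQN, replaces the `q` table with a neural network. That lets the same idea scale to states such as raw game screens.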

Beyond these three main types, a few other approaches come up frequently:

- Transfer Learning: Applying knowledge gained from one problem to a different but related problem. This is particularly useful in deep learning, where pre-trained models can be fine-tuned for new tasks.

- Ensemble Learning: Combining multiple models to improve predictive performance. Techniques include bagging, boosting, and stacking.

- Meta-Learning: Often called “learning to learn,” meta-learning uses experience from previous tasks to learn new tasks faster or from less data.
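Majority (hard) voting is the simplest way to combine models in an ensemble. It is also how bagging typically combines classifiers. The sketch below uses three deliberately crude, hypothetical spam rules. Each makes mistakes on its own, but the vote can correct individual errors:

```python
from collections import Counter

# Three deliberately crude, hypothetical spam detectors ("weak learners").
def has_free(msg):
    return "free" in msg.lower()

def many_exclamations(msg):
    return msg.count("!") >= 2

def mostly_caps(msg):
    letters = [ch for ch in msg if ch.isalpha()]
    return bool(letters) and sum(ch.isupper() for ch in letters) / len(letters) > 0.5

def majority_vote(classifiers, msg):
    """Hard-voting ensemble: return the label predicted by most classifiers."""
    votes = Counter(clf(msg) for clf in classifiers)
    return votes.most_common(1)[0][0]  # an odd number of voters avoids ties on yes/no labels

rules = [has_free, many_exclamations, mostly_caps]
for msg in ["FREE prize!! Click NOW", "Are you free for lunch?"]:
    print(msg, "->", "spam" if majority_vote(rules, msg) else "not spam")
```

On its own, `mostly_caps` misses the first message and `has_free` wrongly flags the second. The vote gets both right.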

Deep Learning is a subset of machine learning that uses neural networks with many layers (deep neural networks) to model complex patterns in data. Loosely inspired by the structure and function of the human brain, deep learning has transformed many fields by achieving state-of-the-art performance in tasks such as image recognition, speech processing, and natural language understanding. Let’s look at the details:

- Neural Networks: The foundation of deep learning is the artificial neural network, a computing system loosely inspired by the way biological brains process information. Neural networks consist of interconnected layers of nodes, or “neurons.”

- Layers: Deep learning models are composed of multiple layers, including:

  - Input Layer: Receives the initial data.

  - Hidden Layers: Process the data through a series of transformations, extracting features and patterns from the input.

  - Output Layer: Produces the final prediction or decision.

- Weights and Biases: Each connection between neurons has an associated weight, which the model learns during training. Biases are additional learned parameters, one per neuron. Each shifts its neuron’s weighted sum before the activation is applied, giving the model more flexibility to fit the data.

- Activation Functions: These functions introduce non-linearity into the model, enabling it to learn complex patterns. Common activation functions include ReLU (Rectified Linear Unit), sigmoid, and tanh.

- Training: Deep learning models are trained with optimization algorithms such as gradient descent, which minimize a loss function measuring the difference between the model’s predictions and the actual values.

- Backpropagation: This algorithm applies the chain rule, propagating the error backward through the network’s layers to compute how much each weight contributed to it. The optimizer then uses these gradients to update the weights.
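The pieces above (layers, weights and biases, activation functions, a loss, backpropagation, and gradient descent) fit together in a few dozen lines. The following sketch is a hypothetical 2-2-1 network in pure Python with a tanh hidden layer, a sigmoid output, and a squared-error loss, trained on a single made-up example. Real frameworks compute the same gradients automatically:

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_params(seed=0):
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)],  # hidden x input
        "b1": [0.0, 0.0],
        "W2": [rng.uniform(-1, 1) for _ in range(2)],                      # output x hidden
        "b2": 0.0,
    }

def forward(p, x):
    # Hidden layer: weighted sum plus bias, then tanh activation.
    z1 = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(p["W1"], p["b1"])]
    h = [math.tanh(z) for z in z1]
    # Output layer: weighted sum plus bias, then sigmoid activation.
    y_hat = sigmoid(sum(w * hi for w, hi in zip(p["W2"], h)) + p["b2"])
    return h, y_hat

def loss(p, x, y):
    return 0.5 * (forward(p, x)[1] - y) ** 2  # squared-error loss

def backward(p, x, y):
    """Backpropagation: apply the chain rule from the loss back to every parameter."""
    h, y_hat = forward(p, x)
    d_z2 = (y_hat - y) * y_hat * (1 - y_hat)                        # through the sigmoid
    d_z1 = [d_z2 * w * (1 - hi ** 2) for w, hi in zip(p["W2"], h)]  # through the tanh
    return {
        "W2": [d_z2 * hi for hi in h],
        "b2": d_z2,
        "W1": [[dz * xi for xi in x] for dz in d_z1],
        "b1": d_z1,
    }

def sgd_step(p, grads, lr):
    """Gradient descent: move each parameter a small step against its gradient."""
    p["W1"] = [[w - lr * g for w, g in zip(rw, rg)] for rw, rg in zip(p["W1"], grads["W1"])]
    p["b1"] = [b - lr * g for b, g in zip(p["b1"], grads["b1"])]
    p["W2"] = [w - lr * g for w, g in zip(p["W2"], grads["W2"])]
    p["b2"] -= lr * grads["b2"]

params = init_params()
x, y = [1.0, -0.5], 1.0  # one hypothetical training example
before = loss(params, x, y)
for _ in range(100):
    sgd_step(params, backward(params, x, y), lr=0.5)
print(f"loss before: {before:.4f}, after: {loss(params, x, y):.4f}")
```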

Deep learning encompasses various architectures tailored to different types of data and tasks:

1. Convolutional Neural Networks (CNNs)

CNNs are designed to process grid-like data, such as images. They use convolutional layers to automatically learn spatial hierarchies of features from input images, from simple edges up to whole objects.

- Applications: Image classification, object detection, and facial recognition.

- Key Components: Convolutional layers, pooling layers, and fully connected layers.
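The two core CNN operations can be sketched in plain Python. The first function computes what deep learning libraries call a convolution (strictly speaking a cross-correlation, since the kernel is not flipped). The second applies 2x2 max pooling. The image and the edge-detecting kernel are hand-made for illustration; in a trained CNN the kernel values are learned:

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation) of a list-of-lists image with a kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    return [
        [
            max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

# A 5x5 "image" with a vertical edge between dark (0) and bright (1) columns.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# A hand-picked vertical-edge detector; in a real CNN these weights are learned.
kernel = [[-1, 1], [-1, 1]]
features = conv2d(image, kernel)
print(features)            # -> [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
print(max_pool(features))  # -> [[2, 0], [2, 0]]
```

The convolution responds only where the dark-to-bright edge is. Pooling then halves the resolution while keeping that response.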

2. Recurrent Neural Networks (RNNs)

RNNs are suited to sequential data, where the order of inputs matters. They contain loops that let information persist from one step to the next, making them effective for tasks involving time series or natural language.

- Applications: Language modeling, machine translation, and speech recognition.

- Variants: Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) mitigate the vanishing gradient problem of traditional RNNs.
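Here is a minimal sketch of the "loop" in an RNN: a single hidden unit whose state is fed back in at every step. The weights are hypothetical fixed numbers rather than trained values. A real RNN uses vectors and matrices and learns its weights, typically via backpropagation through time:

```python
import math

def rnn_forward(inputs, w_x=0.8, w_h=0.5, b=0.0):
    """Run a single-unit (Elman-style) RNN over a sequence and return every hidden state."""
    h = 0.0  # initial hidden state
    states = []
    for x in inputs:
        # The same weights are reused at every time step; h carries information forward.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

print(rnn_forward([1.0, 0.0, 0.0])[-1])
print(rnn_forward([0.0, 0.0, 1.0])[-1])
```

The two sequences contain the same values in a different order, yet they produce different final states. This is the order sensitivity described above. Notice also that the early input’s influence shrinks at every step. During training, the analogous shrinking of gradients is the vanishing gradient problem that LSTMs and GRUs were designed to ease.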

3. Generative Adversarial Networks (GANs)

GANs consist of two neural networks, a generator and a discriminator, that are trained together in competition. The generator creates new data instances, while the discriminator tries to tell them apart from real data.

- Applications: Image generation, style transfer, and data augmentation.

- Key Concepts: Adversarial training and Nash equilibrium.
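The adversarial training described above is usually written as a two-player minimax game. The form below is the objective from the original GAN formulation; many later variants modify it. Here D(x) is the discriminator’s estimated probability that x is real, G(z) is the generator’s output for random noise z, p_data is the data distribution, and p_z is the noise distribution:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to make V large by scoring real data near 1 and generated data near 0. The generator tries to make V small by producing samples that D scores as real. In this idealized formulation, the Nash equilibrium mentioned above is reached when the generator’s samples match p_data and D outputs 1/2 everywhere.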

4. Transformers

Transformers use self-attention mechanisms to weigh how important each part of the input is to every other part, making them highly effective for natural language processing tasks.

- Applications: Machine translation, text summarization, and question answering.

- Key Components: Self-attention layers, encoder-decoder architecture, and positional encoding.
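The self-attention computation itself is short. Here is a minimal single-head sketch of scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, in pure Python. The token embeddings and the identity projection matrices are placeholders for what a real transformer would learn. Masking, multiple heads, and positional encoding are left out:

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention: softmax(Q K^T / sqrt(d_k)) V."""
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(K[0])
    weights = []
    for q in Q:
        # Score this token's query against every token's key, then normalize.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights.append(softmax(scores))
    # Each output row is a weighted average of the value vectors.
    return matmul(weights, V), weights

# Three hypothetical 2-dimensional token embeddings; identity matrices stand in
# for the learned query, key, and value projections.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, attn = self_attention(X, identity, identity, identity)
for row in attn:
    print([round(w, 3) for w in row])
```

Each row of attention weights sums to 1. Every output vector is therefore a weighted average of the value vectors, with more weight on the tokens whose keys best match that token’s query.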

Other important deep learning concepts include:

- Transfer Learning: Reusing models pre-trained on one task for new but related tasks. This is particularly useful when labeled data is scarce.

- Autoencoders: Neural networks used for dimensionality reduction or feature learning. They encode input data into a lower-dimensional representation and then decode it to reconstruct the original input.

- Reinforcement Learning with Deep Learning: Combining deep learning with reinforcement learning to handle complex, high-dimensional environments, such as game playing and robotics.

Natural Language Processing (NLP) is a subfield of artificial intelligence focused on enabling computers to understand, interpret, and generate human language. It involves developing algorithms and statistical models to analyze and manipulate human language data.

Applications:

- Sentiment Analysis: Determining the emotional tone or opinion expressed in text.

- Machine Translation: Automatically translating text from one language to another.

- Chatbots and Virtual Assistants: Holding conversations with users to provide information or assistance.

- Text Summarization: Condensing long texts into shorter summaries while retaining key information.

Key Techniques:

- Tokenization: Breaking text down into smaller units, such as words, subwords, or characters.

- Part-of-Speech Tagging: Labeling each word in a text with its part of speech (noun, verb, adjective, and so on).

- Named Entity Recognition (NER): Identifying and categorizing key information in text, such as names, dates, and locations.

- Word Embeddings: Representing words as vectors in a continuous vector space, so that words with similar meanings end up close together.
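Two of these techniques are easy to sketch: a very simple word-level tokenizer and the cosine similarity used to compare word embeddings. The three-dimensional vectors below are made-up values chosen for illustration. Real embeddings are learned from large corpora and typically have hundreds of dimensions. Modern LLMs also usually tokenize into subwords (for example with byte-pair encoding) rather than whole words:

```python
import math
import re

def tokenize(text):
    """Very simple word-level tokenizer: lowercase, then split into words and punctuation."""
    return re.findall(r"\w+|[^\w\s]", text.lower())

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

# Hypothetical toy embeddings (not taken from any real model).
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

print(tokenize("The cat sat on the mat."))  # -> ['the', 'cat', 'sat', 'on', 'the', 'mat', '.']
print(round(cosine_similarity(embeddings["cat"], embeddings["dog"]), 3))
print(round(cosine_similarity(embeddings["cat"], embeddings["car"]), 3))
```

With these toy vectors, "cat" comes out much closer to "dog" than to "car." That is the kind of semantic similarity embeddings are meant to capture.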

Large Language Models (LLMs) are advanced AI models trained on vast amounts of text to understand and generate human-like text. They are deep learning models that have been scaled up in size and complexity to achieve state-of-the-art performance on many NLP tasks.

Applications:

- Text Generation: Creating coherent, contextually relevant text, such as articles, stories, or code.

- Question Answering: Providing informative answers to user queries, although those answers are not always accurate.

- Content Creation: Assisting with writing, editing, and generating content for many purposes.

Key Concepts:

- Transformer Architecture: LLMs typically use the transformer architecture, whose self-attention mechanisms weigh the importance of each part of the input.

- Fine-Tuning: Adapting a pre-trained LLM to a specific task or domain by training it further on a smaller, task-specific dataset.

- Zero-Shot and Few-Shot Learning: The ability of LLMs to perform new tasks given no examples (zero-shot) or only a handful of examples in the prompt (few-shot), without task-specific training.
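The difference between zero-shot and few-shot use shows up in the prompt itself. The sketch below only builds the prompt text. The template and the `build_prompt` helper are hypothetical, and no particular LLM or API is assumed, so the step of sending the prompt to a model is left out:

```python
def build_prompt(task, query, examples=()):
    """Assemble a zero-shot (no examples) or few-shot (a few examples) prompt as plain text."""
    lines = [task, ""]
    for text, label in examples:
        # Each worked example shows the model the expected input/output format.
        lines += [f"Input: {text}", f"Output: {label}", ""]
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)

task = "Classify the sentiment of each input as positive or negative."
zero_shot = build_prompt(task, "The battery died after an hour.")
few_shot = build_prompt(
    task,
    "The battery died after an hour.",
    examples=[("I love this phone!", "positive"), ("The screen cracked on day one.", "negative")],
)
print(few_shot)
```

The model’s weights are the same in both cases. Only the prompt differs, which is why few-shot learning needs no task-specific training.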

Computer Vision is a field of AI focused on enabling computers to interpret and make decisions based on visual data from the world. It involves developing algorithms and models to analyze and understand digital images or videos.

Applications:

- Image Classification: Assigning images to predefined classes or labels.

- Object Detection: Identifying and locating objects within an image or video.

- Facial Recognition: Identifying or verifying individuals based on their facial features.

- Autonomous Vehicles: Enabling vehicles to perceive and navigate their environment using visual data.

Key Techniques:

- Convolutional Neural Networks (CNNs): Specialized neural networks designed to process grid-like data, such as images.

- Image Segmentation: Dividing an image into distinct regions or objects.

- Optical Character Recognition (OCR): Converting different types of documents, such as scanned paper documents, PDFs, or images captured by a digital camera, into editable and searchable text.
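Modern segmentation systems are usually CNN-based. The basic idea of dividing an image into regions can still be shown with a much simpler classical technique: thresholding followed by connected-component labeling. The tiny grayscale image and the threshold of 128 are made up for this sketch:

```python
from collections import deque

def segment(image, threshold):
    """Threshold a grayscale image, then label connected bright regions (4-connectivity)."""
    h, w = len(image), len(image[0])
    labels = [[0] * w for _ in range(h)]  # 0 means background
    next_label = 0
    for r in range(h):
        for c in range(w):
            if image[r][c] >= threshold and labels[r][c] == 0:
                next_label += 1
                labels[r][c] = next_label
                queue = deque([(r, c)])
                # Breadth-first flood fill over neighbouring foreground pixels.
                while queue:
                    y, x = queue.popleft()
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if (0 <= ny < h and 0 <= nx < w
                                and image[ny][nx] >= threshold and labels[ny][nx] == 0):
                            labels[ny][nx] = next_label
                            queue.append((ny, nx))
    return labels, next_label

# Hypothetical 5x6 grayscale image (0-255) with two bright objects on a dark background.
image = [
    [10, 200, 210, 10, 10, 10],
    [12, 220, 205, 15, 10, 10],
    [10, 10, 10, 10, 190, 180],
    [10, 10, 10, 10, 200, 230],
    [10, 10, 10, 10, 10, 10],
]
labels, count = segment(image, threshold=128)
print(count)  # -> 2
for row in labels:
    print(row)
```

Each bright blob gets its own label (1 and 2), and the background stays 0.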