Network-aware job scheduling in machine learning (ML) clusters is an optimization technique that improves the efficiency of distributed ML training by taking into account the network topology and the communication patterns of jobs.

In large-scale ML tasks, such as training deep learning models, significant data exchange occurs between computational nodes: model parameters, gradients, and synchronization updates. Without careful management, this communication can congest the network and slow down training.

Network-aware scheduling addresses this by intelligently placing and timing jobs to minimize network bottlenecks, ultimately reducing training time and improving resource utilization.

Key points about network-aware job scheduling in machine learning clusters:

- Network-aware job scheduling in ML clusters optimizes performance by managing network traffic.

- This approach reduces training time by minimizing congestion, with CASSINI as a leading example.

- Reported improvements are significant, such as up to 1.6x faster job completion, but complexity varies by implementation.

Network-aware job scheduling in machine learning (ML) clusters is a way to schedule jobs, like training large models, by considering how data moves across the network.

ML training often involves multiple computers (nodes) working together, and they need to share a lot of data, which can clog the network if not managed well. This scheduling ensures that jobs are timed and placed to avoid network bottlenecks, making training faster and more efficient.

Why It Matters

In ML, especially with large models, network delays can slow down the whole process. By being aware of the network, schedulers can place jobs closer together or stagger their communication to reduce wait times, which is crucial for timely results in research and industry.

In distributed ML training, the network often becomes a performance bottleneck, especially as models and datasets scale. For example, in synchronous training, nodes must frequently synchronize, creating bursty communication that can overwhelm network links. By factoring in network conditions, schedulers can:

- Reduce training time: Placing jobs that communicate heavily on nearby nodes cuts down data transfer delays.

- Boost resource efficiency: Better network utilization allows more jobs to run concurrently without performance drops.

- Lower costs: In cloud environments, optimized network usage can reduce data transfer expenses and resource demands.

How It Works

Network-aware schedulers optimize job allocation in two key dimensions:

- Spatial Optimization: Jobs with high communication needs are placed on nodes with strong, low-latency connections, such as the same rack or nodes linked by high-speed interconnects. For instance, in a multi-rack cluster, placing two data-intensive jobs on the same rack avoids slower cross-rack traffic.

- Temporal Optimization: The scheduler staggers jobs' communication phases to prevent overlap, reducing contention on shared network links. Imagine two jobs needing to sync: scheduling one to communicate while the other computes avoids clogging the network.
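To make the spatial side concrete, here is a minimal sketch of rack-local placement. It is an illustration under assumptions, not any particular scheduler's algorithm: the `place_job` function, the rack and node names, and the best-fit rule are all hypothetical.

```python
from typing import Dict, List, Tuple


def place_job(free_nodes: Dict[str, List[str]], workers: int) -> List[Tuple[str, str]]:
    """Pick `workers` free nodes, preferring a single rack to avoid cross-rack traffic.

    `free_nodes` maps a (hypothetical) rack name to its currently free node names.
    """
    # Racks that can host the whole job. Choose the tightest fit so that
    # larger racks stay available for larger jobs.
    fitting = [rack for rack, nodes in free_nodes.items() if len(nodes) >= workers]
    if fitting:
        rack = min(fitting, key=lambda r: len(free_nodes[r]))
        return [(rack, node) for node in free_nodes[rack][:workers]]

    # No single rack is big enough: span as few racks as possible by
    # filling from the racks with the most free nodes first.
    placement: List[Tuple[str, str]] = []
    for rack in sorted(free_nodes, key=lambda r: len(free_nodes[r]), reverse=True):
        for node in free_nodes[rack]:
            if len(placement) == workers:
                return placement
            placement.append((rack, node))
    if len(placement) < workers:
        raise ValueError("not enough free nodes for this job")
    return placement


if __name__ == "__main__":
    free = {"rackA": ["a1", "a2", "a3"], "rackB": ["b1", "b2"]}
    print(place_job(free, 2))  # fits inside one rack
    print(place_job(free, 4))  # has to span both racks
```

A real scheduler would also weigh GPU type, memory, and fairness; the point here is only that locality becomes an explicit input to the placement decision.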

CASSINI

CASSINI is a recent tool that shows how this works. It uses a clever idea: shifting when jobs start so that their periods of heavy network use do not overlap, like staggering departures to avoid traffic jams. Tests on a 24-server setup showed it can make jobs finish up to 1.6 times faster on average and reduce network congestion signals by up to 33 times.

CASSINI was presented at NSDI 2024. It uses a geometric model where jobs are represented as circles, with their perimeters reflecting iteration times.

It calculates optimal time shifts to interleave communication phases, minimizing overlap on shared links. In a 24-server cluster test, CASSINI cut job completion times by up to 1.6x and reduced network congestion signals by up to 33x compared to schedulers like Themis and Pollux.

Beyond just timing, another approach focuses on placing jobs in the same network zone to cut delays, which may be less obvious but equally effective for certain setups.

Other Approaches

- Topology-Aware Placement: Jobs are assigned based on network zones to minimize delays. A 2023 study used tools like perfSONAR to monitor network metrics and place jobs in low-latency zones, speeding up training by up to 50% in high-loss scenarios.

- Predictive Scheduling: Machine learning models predict network conditions and job behaviors, enabling dynamic adjustments. This shines in cloud settings where network performance fluctuates.
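As a rough illustration of the predictive idea, the sketch below forecasts free bandwidth and decides whether to hold back a communication-heavy job. An exponentially weighted moving average stands in for the learned model here; it is a deliberately simple substitute, and the function names and the `alpha` default are assumptions made for this example.

```python
from typing import Iterable


def ewma_forecast(samples_gbps: Iterable[float], alpha: float = 0.5) -> float:
    """Forecast the next free-bandwidth sample with an exponentially weighted moving average.

    A simple stand-in for a learned predictor; `alpha` weights recent samples.
    """
    forecast = None
    for sample in samples_gbps:
        forecast = sample if forecast is None else alpha * sample + (1 - alpha) * forecast
    if forecast is None:
        raise ValueError("need at least one sample")
    return forecast


def should_defer(samples_gbps: Iterable[float], job_demand_gbps: float, alpha: float = 0.5) -> bool:
    """Return True when the predicted free bandwidth is below what the job needs."""
    return ewma_forecast(samples_gbps, alpha) < job_demand_gbps


if __name__ == "__main__":
    recent_free_bandwidth = [10.0, 20.0, 40.0]  # made-up measurements, oldest first
    print(ewma_forecast(recent_free_bandwidth))
    print(should_defer(recent_free_bandwidth, job_demand_gbps=30.0))
```

Swapping the moving average for a trained regression model would not change the scheduler-facing interface: it still asks for a forecast and compares it against the job's demand.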

Challenges and Trade-Offs

Implementing network-aware scheduling isn't without hurdles:

- Modeling Complexity: Predicting communication patterns is difficult, especially across diverse ML workloads with varying models and algorithms.

- Heterogeneous Environments: Clusters often have mixed node types and network links, demanding adaptable strategies.

- Fairness: Optimizing for network efficiency must be balanced against equitable resource sharing, ensuring no user or job is unfairly sidelined.

Real-World Impact

Big players like Google and Microsoft weave network-aware scheduling into their ML platforms. Google's TPU pods, with their high-bandwidth interconnects, likely leverage such techniques for peak performance.

Frameworks like Horovod and NCCL also optimize collective operations (e.g., all-reduce) for specific network topologies, boosting efficiency. In a 2023 experiment with Horovod on a fat-tree network, topology-aware scheduling cut communication overhead by 30% for a 16-node training job.

Benefits in Action

The payoff is clear:

- Faster Training: Reduced network contention speeds up data transfers, critical for large-scale jobs.

- Higher Throughput: Clusters handle more jobs efficiently, maximizing hardware use.

- Cost Savings: In resource-scarce or pay-per-use settings, like clouds, optimized network use trims expenses.

Network-aware job scheduling in machine learning (ML) clusters is an emerging and vital area of research, addressing the challenges posed by the increasing complexity and scale of distributed ML training. This note provides a comprehensive overview, detailing the concept, importance, and current state of the art, with a focus on practical implementations and recent developments.

Introduction

ML models, particularly deep learning models, have grown in size and complexity, necessitating distributed training across clusters of computing nodes.

This distributed approach, while scalable, introduces significant network communication overheads, such as transferring training data, model parameters, and intermediate results.

Network bottlenecks, including high latency, bandwidth limitations, and congestion, can drastically increase training times, impacting both research timelines and industrial applications.

Network-aware job scheduling aims to mitigate these issues by optimizing job placement and timing based on network topology and communication patterns, ensuring efficient resource utilization and reduced training delays.

The importance of this approach is underscored by the growing demand for efficient GPU clusters, as highlighted in recent studies. For instance, communication overhead becomes a bottleneck as the number of GPUs increases, as noted in the literature on distributed ML training.

This calls for schedulers that are not only resource-aware but also network-aware, to handle the dynamic and heavy traffic typical of ML workloads.

Current State of the Art: CASSINI

One of the most prominent examples is CASSINI, a network-aware job scheduler for ML clusters, detailed in a 2024 paper presented at the 21st USENIX Symposium on Networked Systems Design and Implementation (NSDI 24), "CASSINI: Network-Aware Job Scheduling in Machine Learning Clusters."

CASSINI introduces a novel geometric abstraction to model the communication patterns of different jobs, representing them as circles whose perimeter is proportional to the training iteration time.

It uses an affinity graph to find optimal time-shift values, adjusting the start times of jobs to interleave their communication phases on shared network links, thus minimizing congestion.
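The time-shift idea can be sketched for the simplest case: two jobs sharing one link, both with the same iteration time. This is not CASSINI's implementation (the affinity graph and jobs with different iteration times are left out), and every name and number below is an assumption for illustration. Each job's iteration is wrapped onto a circle split into 5° bins, and one circle is rotated until the combined demand exceeds link capacity as little as possible.

```python
from typing import List, Tuple


def demand_profile(iter_ms: float, comm_start_ms: float, comm_len_ms: float,
                   gbps: float, step_deg: int = 5) -> List[float]:
    """Bandwidth demand per angular bin for one iteration mapped onto a circle."""
    bins = 360 // step_deg
    profile = [0.0] * bins
    for i in range(bins):
        t = (i + 0.5) * iter_ms / bins          # time at the center of bin i
        offset = (t - comm_start_ms) % iter_ms  # time elapsed since communication began
        if offset < comm_len_ms:
            profile[i] = gbps
    return profile


def best_shift(a: List[float], b: List[float], capacity_gbps: float,
               iter_ms: float) -> Tuple[float, float]:
    """Rotate job b against job a and return (shift in ms, mean excess demand in Gbps).

    Assumes both profiles use the same number of bins and the same iteration time.
    """
    bins = len(a)
    best_excess, best_k = float("inf"), 0
    for k in range(bins):
        # Delaying job b by k bins moves its demand from bin i - k to bin i.
        excess = sum(max(0.0, a[i] + b[(i - k) % bins] - capacity_gbps)
                     for i in range(bins))
        if excess < best_excess:
            best_excess, best_k = excess, k
    return best_k * iter_ms / bins, best_excess / bins


if __name__ == "__main__":
    # Two made-up jobs: 100 ms iterations, each sending at 40 Gbps for the first 40 ms.
    job_a = demand_profile(100, 0, 40, 40)
    job_b = demand_profile(100, 0, 40, 40)
    shift_ms, mean_excess = best_shift(job_a, job_b, capacity_gbps=50, iter_ms=100)
    print(f"delay job B by {shift_ms:.1f} ms, mean excess {mean_excess:.1f} Gbps")
```

With 5° bins there are only 72 candidate rotations per pair, so an exhaustive search is cheap. The scan keeps the first rotation that reaches the minimum excess, which here is the smallest delay that fully separates the two communication phases.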

The practical implementation of CASSINI was validated on a testbed of 24 servers, each equipped with NVIDIA A100 GPUs and 50 Gbps RDMA NICs, connected via a topology with 13 logical switches and 48 bi-directional links, featuring 2:1 over-subscription.

Experiments with 13 popular ML models (VGG11, VGG16, VGG19, ResNet50, WideResNet101, BERT, RoBERTa, XLM, CamemBERT, GPT-1, GPT-2, GPT-3, and DLRM) demonstrated significant performance gains.

Specifically, CASSINI improved the average completion time of jobs by up to 1.6 times and the tail completion time by up to 2.5 times compared to state-of-the-art ML schedulers like Themis and Pollux.

Additionally, it reduced the number of ECN (Explicit Congestion Notification) marked packets by up to 33 times, indicating a substantial decrease in network congestion.

CASSINI integrates with existing schedulers, such as Themis and Pollux, by adding approximately 1,000 lines of code: the arbiters are modified to return multiple placement candidates, and the top candidates are selected based on a compatibility score.

This score, defined as 1 − average(Excess(demand))/C (where C is link capacity), ranks placements: a score of 1 indicates full compatibility, and highly incompatible placements can score below zero.
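The score is easy to compute once the combined demand on a link is known. The sketch below evaluates it for a single link, taking Excess(demand) to be the demand above capacity C at each sample point; that reading, the function names, and the candidate data are assumptions for illustration rather than CASSINI's code.

```python
from typing import Dict, List


def compatibility_score(total_demand_gbps: List[float], capacity_gbps: float) -> float:
    """Compute 1 - average(Excess(demand)) / C for one link.

    `total_demand_gbps` holds the combined demand of all jobs on the link,
    sampled at evenly spaced points of the iteration.
    """
    excess = [max(0.0, d - capacity_gbps) for d in total_demand_gbps]
    return 1.0 - (sum(excess) / len(excess)) / capacity_gbps


def rank_candidates(candidates: Dict[str, List[float]], capacity_gbps: float) -> List[str]:
    """Order placement candidates from most to least compatible."""
    return sorted(candidates,
                  key=lambda name: compatibility_score(candidates[name], capacity_gbps),
                  reverse=True)


if __name__ == "__main__":
    # Made-up demand samples (Gbps) on a 50 Gbps link for three hypothetical placements.
    candidates = {
        "interleaved": [40.0, 40.0, 40.0, 0.0],    # never above capacity
        "partial":     [40.0, 80.0, 40.0, 0.0],    # one sample above capacity
        "overlapping": [200.0, 200.0, 0.0, 0.0],   # heavily oversubscribed
    }
    for name in rank_candidates(candidates, 50.0):
        print(name, round(compatibility_score(candidates[name], 50.0), 2))
```

Because excess demand is normalized by capacity, the score goes negative once the average excess is larger than the link itself, which matches the remark about highly incompatible placements.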

The approach respects hyper-parameters like batch size and handles various parallelism strategies, including data, model, and hybrid parallelism, capturing Up-Down phases in communication patterns.

Other Approaches

While CASSINI is a leading example, other network-aware scheduling techniques exist, particularly in distributed high-performance computing (HPC) platforms for deep learning.

A 2023 study published in Electronics, "An Optimal Network-Aware Scheduling Technique for Distributed Deep Learning in Distributed HPC Platforms," proposes a technique focusing on network optimization by ensuring nodes are in the same zone to reduce delay.

This approach uses network monitoring tools like perfSONAR and MaDDash to assess metrics such as loss, delay, and throughput, selecting optimal nodes within zones.
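A zone-based selection step might look roughly like the sketch below. The study's actual selection rule is not given here, so the cost function, its weights, and the zone names are all hypothetical. The metrics are hard-coded; in practice they would come from a monitoring system such as perfSONAR.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ZoneMetrics:
    loss_pct: float         # packet loss, in percent
    delay_ms: float         # measured delay, in milliseconds
    throughput_gbps: float  # measured throughput, in Gbps


def zone_cost(m: ZoneMetrics, w_loss: float = 10.0, w_delay: float = 1.0,
              w_tput: float = 0.1) -> float:
    """Lower is better. The weights are illustrative assumptions, not values from the study."""
    return w_loss * m.loss_pct + w_delay * m.delay_ms - w_tput * m.throughput_gbps


def pick_zone(metrics: Dict[str, ZoneMetrics], free_nodes: Dict[str, List[str]],
              workers: int) -> Optional[str]:
    """Choose the lowest-cost zone that can host every worker, or None if no zone fits."""
    eligible = [z for z in metrics if len(free_nodes.get(z, [])) >= workers]
    if not eligible:
        return None
    return min(eligible, key=lambda z: zone_cost(metrics[z]))


if __name__ == "__main__":
    metrics = {
        "zone-a": ZoneMetrics(loss_pct=0.0, delay_ms=2.0, throughput_gbps=9.0),
        "zone-b": ZoneMetrics(loss_pct=1.5, delay_ms=1.0, throughput_gbps=9.5),
    }
    free_nodes = {"zone-a": ["n1", "n2"], "zone-b": ["n3", "n4", "n5"]}
    print(pick_zone(metrics, free_nodes, workers=2))  # the lossy zone is penalized
    print(pick_zone(metrics, free_nodes, workers=3))  # only one zone has three free nodes
```

Keeping all workers in one zone is the key constraint; how loss, delay, and throughput are weighed against each other is a tuning decision for each cluster.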

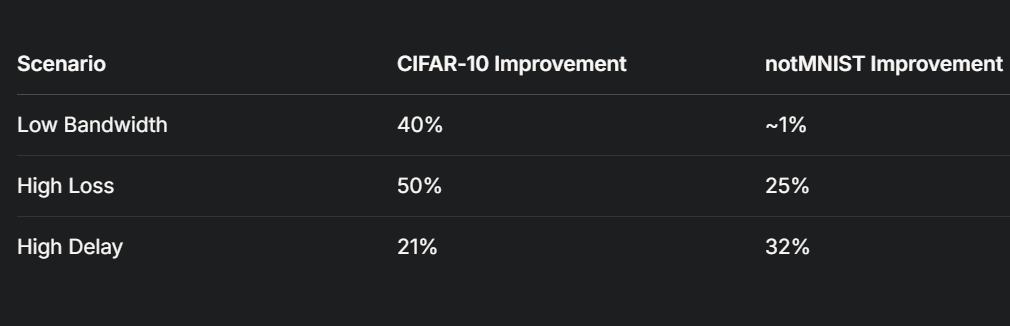

Validated on a Kubernetes cluster with 38 servers, it showed improvements in distributed deep learning performance, with gains of up to 50% in scenarios with high loss for the CIFAR-10 dataset, compared to a default scheduler.

The performance metrics from this study, tested on datasets like notMNIST (500,000 images, 28×28 pixels) and CIFAR-10 (60,000 images, 32×32 pixels, 10 classes), are summarized in the following table:

Practical Implementation and Challenges

The practical implementation of network-aware job scheduling involves integrating with existing cluster management systems like Kubernetes and Slurm, which support advanced features for resource allocation, including network awareness.

For instance, Kubernetes can integrate with network monitoring tools to inform scheduling decisions, as noted in recent guides (Code18). These implementations require real-time monitoring of bandwidth and latency, along with predictive modeling using machine learning to anticipate network conditions, ensuring adaptive scheduling.

Challenges include handling dynamic workloads, ensuring compatibility across diverse ML models, and managing overheads. CASSINI addresses some of these by maintaining low adjustment frequencies (less than 2 adjustments per minute for certain snapshots) and achieving high time-shift accuracy with an angle discretization precision of 5°, as detailed in its evaluation.

However, partial compatibility scenarios (e.g., compatibility scores of 0.6–1.0) show diminishing gains, indicating areas for further optimization.

Benefits and Future Directions

The benefits of network-aware job scheduling are evident in reduced training times and improved resource utilization, critical for large-scale ML deployments. CASSINI's ability to handle multi-GPU setups, with gains of 1.4× in average and 1.9× in tail iteration times on 6 servers with 2 GPUs each, highlights its scalability.

Future research may focus on improving compatibility metrics, integrating with emerging network technologies, and addressing dynamic trace variations. CASSINI has no explicit future work section, but its comprehensive evaluation implies these directions.

In conclusion, network-aware job scheduling in ML clusters is a pivotal strategy for optimizing distributed training, with CASSINI and zone-based approaches representing current best practices. These techniques not only improve performance but also pave the way for more efficient and scalable ML systems, addressing the growing demands of modern AI applications.

Network-aware job scheduling is a game-changer for ML clusters, tackling network bottlenecks head-on. By aligning job placement and timing with network realities, it accelerates training, enhances throughput, and cuts costs.

As ML models grow and distributed training becomes the norm, innovations like CASSINI and topology-aware strategies will only grow in importance, driving the next wave of efficient, scalable ML systems.
