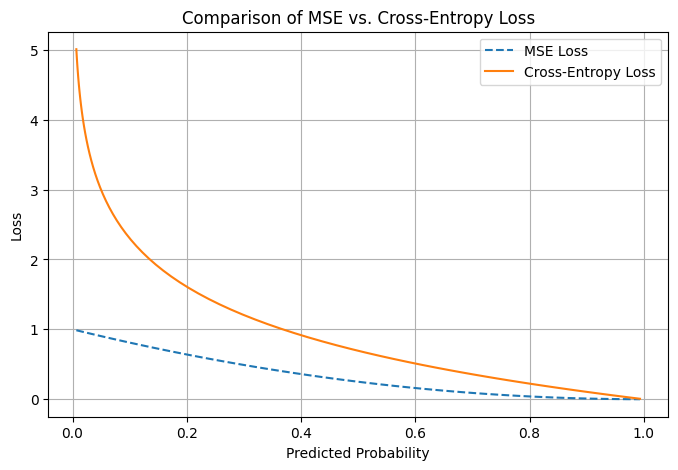

To better understand the difference, let’s visualize how MSE and cross-entropy loss behave for classification.
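As a rough numeric companion to the comparison, the sketch below evaluates both losses for a single example whose true label is 1. The probability values are hypothetical and chosen only for illustration. Squared error stays bounded by 1, while cross-entropy grows without bound as the predicted probability approaches 0, so confident wrong predictions are penalized far more heavily.

```python
import numpy as np

# Hypothetical predicted probabilities for one example whose true label is 1.
y_true = 1.0
p = np.array([0.9, 0.5, 0.1, 0.01])

# Squared error is bounded by 1 when predictions lie in [0, 1].
mse = (y_true - p) ** 2

# For a positive example, binary cross-entropy reduces to -log(p),
# which is unbounded as p approaches 0.
cross_entropy = -np.log(p)

for p_i, m_i, c_i in zip(p, mse, cross_entropy):
    print(f"p = {p_i:.2f}  squared error = {m_i:.4f}  cross-entropy = {c_i:.4f}")
```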

Now that we have decided to use cross-entropy as the loss function, we need its gradient: the gradient is what guides the updates to the weights and bias.
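For reference, here is a sketch of that gradient, assuming a sigmoid output and assuming the bias is folded into the weight vector $w$ through a constant feature column in $X$ (an assumption made here to keep the notation compact). The symbols $n$, $x_i$, and $y_i$ denote the number of samples, the $i$-th feature row, and the $i$-th label.

```latex
\hat{y}_i = \sigma(z_i) = \frac{1}{1 + e^{-z_i}}, \qquad z_i = x_i^\top w

L(w) = -\frac{1}{n} \sum_{i=1}^{n} \Big[ y_i \log \hat{y}_i + (1 - y_i) \log (1 - \hat{y}_i) \Big]

\frac{\partial L}{\partial \hat{y}_i}
  = -\frac{1}{n} \left( \frac{y_i}{\hat{y}_i} - \frac{1 - y_i}{1 - \hat{y}_i} \right)
  = \frac{1}{n} \, \frac{\hat{y}_i - y_i}{\hat{y}_i (1 - \hat{y}_i)},
\qquad
\frac{\partial \hat{y}_i}{\partial z_i} = \hat{y}_i (1 - \hat{y}_i),
\qquad
\frac{\partial z_i}{\partial w} = x_i

\nabla_w L = \frac{1}{n} \sum_{i=1}^{n} (\hat{y}_i - y_i) \, x_i = \frac{1}{n} X^\top (\hat{y} - Y)
```

The factor $\hat{y}_i (1 - \hat{y}_i)$ cancels in the chain rule, which is why the final expression is so simple. Gradient descent then moves against this gradient: $w \leftarrow w - \eta \, \nabla_w L$, where $\eta$ is the learning rate.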

"""

------ Pseudo Code ------

# Train logistic regression model
model = LogisticRegression()
model.fit(X_train, y_train)

# Predict probabilities for test set
y_probs = model.predict_proba(X_test)[:, 1]  # Probability for class 1 (relevant)

# Rank items based on predicted probabilities
ranking = np.argsort(-y_probs)  # Negative sign for descending order

------ Basic functions ------
"""
import numpy as np

"""

Basic functions
"""
def pred(X, w):
    # X: input features, w: weights
    # y_hat = sigmoid(z) = 1 / (1 + e^-z), z = Xw
    # (no separate bias b here; append a column of ones to X to model one)
    z = np.matmul(X, w)
    y_hat = 1 / (1 + np.exp(-z))
    return y_hat

def loss(X, Y, w):
    # Compute the binary cross-entropy loss
    # The loss is not directly used in training, but its gradient is used to update the weights
    y_pred = pred(X, w)
    # Per-sample log-likelihood; both terms carry the same sign
    log_likelihood = Y * np.log(y_pred) + (1 - Y) * np.log(1 - y_pred)
    return -np.mean(log_likelihood)

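def gradient(X, Y, w):
    # Derivative of the loss with respect to w (a bias, if used, is part of w via a constant column in X)
    # The gradient of the loss function tells us the direction and magnitude in which the model's parameters should be adjusted to minimize the loss.
    # For binary cross-entropy with a sigmoid output it is X^T (y_pred - Y) / n.
    # The update step subtracts it, so it must not carry an extra minus sign.
    y_pred = pred(X, w)
    g = np.matmul(X.T, (y_pred - Y)) / X.shape[0]
    return g

"""
Training and testing section
"""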

def train(X, Y, iter=10000, learning_rate=0.002):
    # Y is expected to have shape (n_samples, 1) to match the weight column vector
    w = np.zeros((X.shape[1], 1))  # initialize weights at zero
    for i in range(iter):
        w = w - learning_rate * gradient(X, Y, w)
        if i % 1000 == 0:
            print(f"iteration {i}, loss = {loss(X, Y, w)}")
    return w

def test(X, Y, w):
    y_pred = pred(X, w)
    y_pred_labels = (y_pred > 0.5).astype(int)
    accuracy = np.mean(y_pred_labels == Y)
    return accuracy
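To sanity-check this kind of training loop, here is a minimal, self-contained sketch (not the exact code above). It assumes the standard binary cross-entropy formulas, uses hypothetical helper names (`sigmoid`, `bce_loss`, `bce_gradient`), and runs on synthetic data generated purely for illustration. It first compares the analytic gradient against a finite-difference estimate, then runs plain gradient descent and reports loss and accuracy. The learning rate and iteration count are arbitrary choices for this toy problem.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce_loss(X, Y, w):
    # Mean binary cross-entropy over all samples.
    p = sigmoid(X @ w)
    return -np.mean(Y * np.log(p) + (1 - Y) * np.log(1 - p))

def bce_gradient(X, Y, w):
    # Analytic gradient: X^T (p - Y) / n.
    return X.T @ (sigmoid(X @ w) - Y) / X.shape[0]

# Synthetic, illustrative data: one noisy feature plus a column of ones
# that plays the role of the bias term.
rng = np.random.default_rng(0)
n = 200
x = rng.normal(size=(n, 1))
X = np.hstack([x, np.ones((n, 1))])
Y = (x + 0.3 * rng.normal(size=(n, 1)) > 0).astype(float)

# Finite-difference check of the analytic gradient at a random point.
w0 = rng.normal(size=(2, 1))
eps = 1e-6
numeric = np.zeros_like(w0)
for j in range(w0.shape[0]):
    step = np.zeros_like(w0)
    step[j] = eps
    numeric[j] = (bce_loss(X, Y, w0 + step) - bce_loss(X, Y, w0 - step)) / (2 * eps)
max_diff = np.max(np.abs(numeric - bce_gradient(X, Y, w0)))
print(f"max |numeric - analytic| = {max_diff:.2e}")

# Plain gradient descent starting from zero weights.
w = np.zeros((2, 1))
initial_loss = bce_loss(X, Y, w)
for _ in range(2000):
    w = w - 0.1 * bce_gradient(X, Y, w)
final_loss = bce_loss(X, Y, w)

accuracy = np.mean((sigmoid(X @ w) > 0.5).astype(int) == Y)
print(f"loss: {initial_loss:.4f} -> {final_loss:.4f}, training accuracy = {accuracy:.3f}")
```

If the gradient had the wrong sign, the finite-difference comparison would show a large mismatch and the loss would rise instead of fall, which makes this a cheap check to run before trusting a hand-written training loop.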