A sentence transformer (bi-encoder) is a neural network model designed to generate high-quality vector representations (embeddings) for sentences or text fragments. It is based on transformer architectures such as BERT or RoBERTa, but optimized for tasks like semantic similarity, clustering, or retrieval. Unlike conventional transformers, which focus on token-level outputs, sentence transformers produce a fixed-size dense vector for a whole sentence, capturing its semantic meaning.

Cross-encoders, on the other hand, take two text inputs (e.g., a query and a candidate response) and process them together through a single model to compute a score, typically indicating their relevance or similarity. They achieve higher accuracy because the model can attend to contextual interactions between the two inputs, but they are computationally expensive because every pair has to be processed from scratch at scoring time.

Cross-encoders are often used to re-rank the top-k results returned by a sentence transformer model.
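The retrieve-then-re-rank pattern can be sketched in a few lines. This is only an illustration of the control flow: `embed` and `pair_score` are hypothetical toy stand-ins (a bag-of-words vector and a bigram-overlap score), not real bi-encoder or cross-encoder models. The first stage compares independently computed vectors over the whole corpus; the second stage scores each (query, document) pair jointly, but only for the `top_k` candidates.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for a bi-encoder: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(x * x for x in a.values()))
    norm_b = math.sqrt(sum(x * x for x in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def bigrams(text):
    words = text.lower().split()
    return set(zip(words, words[1:]))


def pair_score(query, doc):
    """Toy stand-in for a cross-encoder: looks at both texts together.

    Returns the fraction of query bigrams that also occur in the document,
    so word order matters (the bag-of-words vectors above cannot see it).
    """
    q = bigrams(query)
    return len(q & bigrams(doc)) / len(q) if q else 0.0


def retrieve_and_rerank(query, corpus, top_k=2):
    # Stage 1: documents are embedded independently of the query,
    # so these vectors could be computed once and cached.
    doc_vectors = [embed(doc) for doc in corpus]
    query_vector = embed(query)
    ranked = sorted(
        range(len(corpus)),
        key=lambda i: cosine(query_vector, doc_vectors[i]),
        reverse=True,
    )
    candidates = ranked[:top_k]

    # Stage 2: the pair scorer runs once per candidate, not once per document.
    reranked = sorted(
        candidates, key=lambda i: pair_score(query, corpus[i]), reverse=True
    )
    return [corpus[i] for i in reranked]


corpus = ["dog bites man", "man bites dog", "the weather is sunny today"]
results = retrieve_and_rerank("man bites dog", corpus, top_k=2)
print(results)
```

In this toy run the first stage cannot tell "dog bites man" from "man bites dog" because both have the same word counts; the pair scorer separates them. With real models the trade-off is the same in spirit: the cheap stage narrows the field, and the expensive stage is paid only `top_k` times per query.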

The solution came in 2019 with Nils Reimers and Iryna Gurevych's SBERT (Sentence-BERT). Since SBERT, numerous sentence transformer models have been developed and optimized.

SBERT Architecture

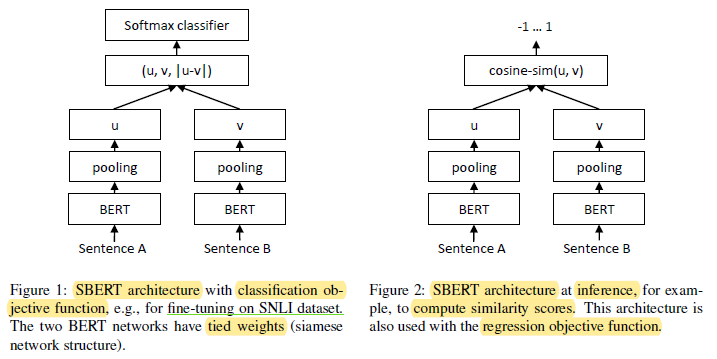

SBERT (Sentence-BERT) enhances the BERT model by using a siamese architecture, where two identical BERT networks process two separate sentences independently. This produces an embedding for each sentence, pooled using methods like mean pooling. These sentence embeddings, $u$ and $v$, are then combined into a single vector that captures their relationship. The simplest combination approach is $(u, v, |u-v|)$, where $|u-v|$ is the element-wise absolute difference.
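As a rough illustration of the pooling and combination steps, the sketch below uses plain Python lists in place of real BERT outputs. The token vectors, the attention masks, and the function names `mean_pool` and `combine` are all made up for the example; an actual model would produce much larger vectors.

```python
def mean_pool(token_embeddings, attention_mask):
    """Average the token vectors, skipping padding positions (mask == 0)."""
    dim = len(token_embeddings[0])
    total = [0.0] * dim
    count = 0
    for vector, keep in zip(token_embeddings, attention_mask):
        if keep:
            count += 1
            for j, value in enumerate(vector):
                total[j] += value
    if count == 0:
        raise ValueError("attention_mask selects no tokens")
    return [t / count for t in total]


def combine(u, v):
    """Concatenate u, v, and the element-wise absolute difference |u - v|."""
    return u + v + [abs(a - b) for a, b in zip(u, v)]


# Hypothetical token embeddings (dimension 2) for two sentences.
tokens_a = [[1.0, 2.0], [3.0, 4.0], [0.0, 0.0]]  # last row is padding
mask_a = [1, 1, 0]
tokens_b = [[0.0, 1.0], [2.0, 5.0]]
mask_b = [1, 1]

u = mean_pool(tokens_a, mask_a)  # [2.0, 3.0]
v = mean_pool(tokens_b, mask_b)  # [1.0, 3.0]
features = combine(u, v)         # [2.0, 3.0, 1.0, 3.0, 1.0, 0.0]
print(features)
```

For sentence embeddings of dimension n, the combined vector has dimension 3n, which is the input size the classifier on top has to accept.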

Training Process

SBERT is fine-tuned on tasks like Natural Language Inference (NLI), which involves determining whether one sentence entails, contradicts, or is neutral with respect to another. The training process consists of the following steps:

- Sentence Embedding: Each sentence in a pair is processed to generate its own embedding.

- Concatenation: The embeddings $u$ and $v$ are combined into a single vector $(u, v, |u-v|)$.

- Feedforward Neural Network (FFNN): The concatenated vector is passed through a feedforward network to generate raw output logits. In the original SBERT paper this is a single linear layer.

- Softmax Layer: The logits are normalized into probabilities corresponding to the NLI labels (entailment, contradiction, or neutral).

- Cross-Entropy Loss: The predicted probabilities are compared with the actual labels using the cross-entropy loss function, which penalizes incorrect predictions.

- Optimization: The loss is minimized through backpropagation, adjusting the model's parameters to improve accuracy on the training task.
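The last four steps can be sketched with a tiny hand-rolled classifier. This is a minimal illustration under stated assumptions, not SBERT's training code: the feature vector, the untrained weights, and the learning rate are all hypothetical, the head is a single linear layer, and the update touches only that head, whereas real fine-tuning also backpropagates into the BERT encoder.

```python
import math

LABELS = ["entailment", "contradiction", "neutral"]


def linear(x, weights, bias):
    """One logit per class; weights holds one row per class."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(logits):
    """Normalize logits into probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, label):
    """Negative log-probability assigned to the correct class."""
    return -math.log(probs[label])


def sgd_step(x, weights, bias, label, lr=0.01):
    """One gradient step on the linear head only.

    For softmax followed by cross-entropy, d(loss)/d(logit_c) = p_c - y_c.
    """
    probs = softmax(linear(x, weights, bias))
    for c in range(len(weights)):
        grad_logit = probs[c] - (1.0 if c == label else 0.0)
        weights[c] = [w - lr * grad_logit * xi for w, xi in zip(weights[c], x)]
        bias[c] -= lr * grad_logit


# Hypothetical (u, v, |u-v|) vector for sentence embeddings of dimension 2.
x = [2.0, 3.0, 1.0, 3.0, 1.0, 0.0]
weights = [[0.1] * 6, [0.0] * 6, [-0.1] * 6]  # untrained, chosen by hand
bias = [0.0, 0.0, 0.0]
label = LABELS.index("entailment")

probs = softmax(linear(x, weights, bias))
loss = cross_entropy(probs, label)

sgd_step(x, weights, bias, label)
new_loss = cross_entropy(softmax(linear(x, weights, bias)), label)
print(loss, new_loss)  # the loss on this example goes down after the step
```

The gradient `p_c - y_c` is what makes the softmax and cross-entropy pairing convenient: the update pushes the logit of the correct class up and the others down, in proportion to how wrong the prediction was.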

Pretrained models and their evaluations are listed on the Pretrained Models page of the Sentence Transformers documentation. They fall into the following categories:

- General Purpose Models: These include variations of BERT, RoBERTa, DistilBERT, and XLM-R that are fine-tuned for sentence-level tasks. Examples:

  - The all-* models were trained on all available training data (more than 1 billion training pairs) and are designed as general purpose models. The all-mpnet-base-v2 model provides the best quality, while all-MiniLM-L6-v2 is 5 times faster and still offers good quality.

- Multilingual Models: These models support multiple languages, making them ideal for multilingual and cross-lingual tasks. Examples:

  - distiluse-base-multilingual-cased-v2

  - xlm-r-100langs-bert-base-nli-stsb

- Domain-Specific Models: Models fine-tuned on specific domains or datasets, such as biomedical text, financial documents, or legal text. Examples:

  - biobert-sentence-transformer: Specialized for biomedical literature.

  - Custom fine-tuned models available via Hugging Face or Sentence Transformers for niche domains.

- Multimodal Models: These models can handle inputs beyond text, such as images and text combined, making them useful for applications like image captioning, visual question answering, and cross-modal retrieval. Examples:

  - clip-ViT-B-32: Integrates visual and textual inputs for tasks that involve both modalities, such as finding images based on textual queries.

  - image-text-matching: A specialized model for matching text descriptions with relevant images.

- Task-Specific Models: Pre-trained for tasks like semantic search, clustering, and classification. Examples:

  - msmarco-MiniLM-L12-v2: Optimized for information retrieval and search tasks.

  - nli-roberta-base-v2: Designed for natural language inference.

- Custom Fine-Tuned Models: Users can train their own models on specific datasets using Sentence Transformers' training utilities. This allows adaptation to highly specialized use cases.

References: