Artificial Intelligence models are getting bigger, better, and… bulkier. In the race for state-of-the-art performance, we’ve built behemoth models that deliver jaw-dropping accuracy but demand a king’s ransom in computational resources. Knowledge distillation is a technique that lets you bottle up the smarts of a large, cumbersome model and pour them into a lean, efficient one.

In this guide, we’ll cover what knowledge distillation is, why it works, the math behind it, and a PyTorch implementation. Whether you’re an ML practitioner or an AI enthusiast, this article will break it all down, step by step.

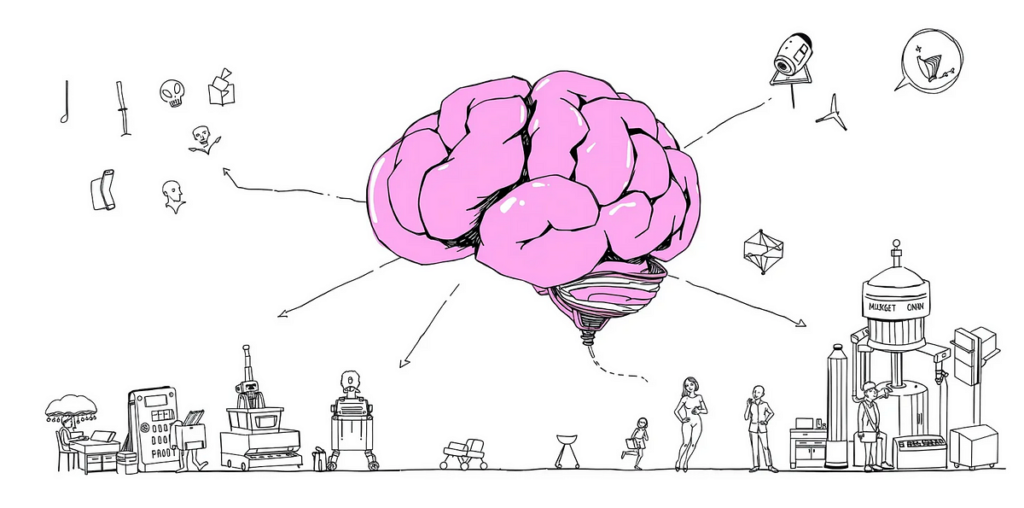

At its core, knowledge distillation is a process in which a large, pre-trained model (called the teacher) teaches a smaller, more efficient model (the student) to replicate its performance. The student doesn’t just learn from the ground-truth labels (like a standard model); it also absorbs the nuanced “knowledge” of the teacher model, encoded in its probability distributions.

- Model Compression: Smaller models are cheaper, faster, and easier to deploy on edge devices like phones and IoT devices.

- Efficiency: A lightweight student model can make predictions much faster than a large teacher model without significant performance loss.

- Scalability: Training and deploying smaller models makes AI more accessible and environmentally sustainable.

Large models don’t just learn what’s right or wrong; they also learn how right or how wrong each possibility is. This “richness” is captured in their probability distributions over classes, known as soft targets. Let’s break this down:

- Hard Targets: These are ground-truth labels, binary (one-hot) and unambiguous. For example, in a classification task, an image of a dog might have the label “Dog” (Class A).

- Soft Targets: Instead of assigning one class as “100% correct,” the teacher model assigns probabilities to all classes. For instance:

  - Dog: 70%

  - Wolf: 20%

  - Cat: 10%

The probabilities in soft targets encode information about inter-class relationships. The teacher implicitly tells the student, “This looks mostly like a dog, but it also has some wolf-like features.”

By mimicking these soft targets, the student model learns to generalize better, often outperforming a model of the same size trained solely on hard targets.

Let’s dissect the key mathematical concepts.

Softmax and Temperature Scaling

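The softmax function converts raw logits (unnormalized scores) $z_i$ into probabilities:

$$p_i = \frac{\exp(z_i)}{\sum_j \exp(z_j)}$$

In knowledge distillation, we introduce a temperature parameter (T) to smooth the probabilities:

$$p_i^{(T)} = \frac{\exp(z_i / T)}{\sum_j \exp(z_j / T)}$$

High T: Produces a smoother probability distribution, which makes the relative probabilities of the non-target classes visible to the student.

Low T: Makes probabilities more “peaky.” Setting T = 1 recovers the standard softmax.

The teacher uses a high temperature to produce soft targets for the student, and the student’s logits are softened with the same T when the two distributions are compared.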
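To make the effect of T concrete, here is a minimal pure-Python sketch of temperature-scaled softmax. The function name `softmax_with_temperature` and the example logits are illustrative assumptions, not values taken from a real teacher.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw logits to probabilities, softened by `temperature`."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    max_scaled = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - max_scaled) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical teacher logits for the classes (dog, wolf, cat)
logits = [4.0, 2.0, 1.0]
for t in (1.0, 5.0):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: {[round(p, 3) for p in probs]}")
```

With these logits, the T = 5 distribution is noticeably flatter than the T = 1 one, while the ranking of the classes stays the same.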

KL Divergence: Measuring Similarity Between Distributions

To train the student, we compare the teacher’s and student’s probability distributions using Kullback-Leibler (KL) divergence, defined as:

$$D_{\mathrm{KL}}(P \,\|\, Q) = \sum_i P(i) \log \frac{P(i)}{Q(i)}$$

Here:

- P: Teacher’s probability distribution (soft targets).

- Q: Student’s probability distribution.

KL divergence measures how much the student’s predictions deviate from the teacher’s. Minimizing this divergence pushes the student to mimic the teacher.
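As a small numerical illustration, the sketch below computes the KL divergence between a teacher distribution and two candidate student distributions in plain Python. The helper `kl_divergence` and the student probabilities are assumptions made up for this example.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """D_KL(P || Q) for two discrete distributions given as lists."""
    # Terms with p_i == 0 contribute nothing; eps guards against log(x / 0).
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)


teacher = [0.7, 0.2, 0.1]        # soft targets for (dog, wolf, cat)
close_student = [0.6, 0.3, 0.1]  # hypothetical student that roughly agrees
far_student = [0.1, 0.2, 0.7]    # hypothetical student that prefers "cat"

print(kl_divergence(teacher, teacher))        # identical distributions
print(kl_divergence(teacher, close_student))  # small divergence
print(kl_divergence(teacher, far_student))    # large divergence
```

The divergence is zero when the two distributions match, about 0.03 for the student that roughly agrees, and about 1.17 for the one that puts most of its mass on the wrong class.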

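The Total Loss Function

The total loss function combines:

- Distillation Loss (soft targets): Guides the student to learn from the teacher.

- Standard Cross-Entropy Loss (hard targets): Ensures the student performs well on the ground-truth labels.

$$L = \alpha \, T^2 \, D_{\mathrm{KL}}\big(P^{(T)} \,\|\, Q^{(T)}\big) + (1 - \alpha) \, L_{\mathrm{CE}}(y, Q)$$

Here $P^{(T)}$ and $Q^{(T)}$ are the teacher’s and student’s distributions computed at temperature T, $Q$ is the student’s distribution at T = 1, and $y$ is the ground-truth label.

- α: Balances the weight between soft and hard targets.

- T²: Compensates for the temperature scaling of the logits, which shrinks the soft-target gradients by roughly 1/T².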
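The T² factor can be motivated with a short derivation. The notation here is an assumption chosen for this sketch: $z_i$ are the student’s logits, $v_i$ the teacher’s logits, $N$ the number of classes, and $Q_i$, $P_i$ the corresponding probabilities at temperature T. The KL divergence differs from the cross-entropy $C = -\sum_i P_i \log Q_i$ only by a term that does not depend on the student, so both have the same gradient:

$$
\begin{aligned}
\frac{\partial C}{\partial z_i} &= \frac{1}{T}\,(Q_i - P_i)
  = \frac{1}{T}\left(\frac{e^{z_i/T}}{\sum_j e^{z_j/T}} - \frac{e^{v_i/T}}{\sum_j e^{v_j/T}}\right) \\
&\approx \frac{1}{T}\left(\frac{1 + z_i/T}{N} - \frac{1 + v_i/T}{N}\right)
  = \frac{1}{N T^2}\,(z_i - v_i)
\end{aligned}
$$

The approximation holds when T is large compared with the logits and the logits are zero-mean. Under those assumptions the soft-target gradient shrinks as 1/T², so multiplying the distillation term by T² keeps its contribution on a similar scale to the hard-label term when T changes.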

Rich Information from Soft Targets

Soft targets encode inter-class relationships. For example, if the teacher assigns:

- Dog: 0.6

- Wolf: 0.3

- Cat: 0.1

then the student learns that the image resembles a dog but shares features with a wolf, a nuance that hard labels like “Dog” would miss.

Smoother Optimization

Soft targets provide gradients that are less noisy and more informative, helping the student converge faster and generalize better.

Reduced Overfitting

The teacher acts as a “regularizer,” preventing the student from overfitting to noisy or incorrect ground-truth labels.

Let’s implement knowledge distillation with PyTorch using the MNIST dataset.

    import torch
    import torch.nn as nn
    import torch.optim as optim
    from torchvision import datasets, transforms
    from torch.utils.data import DataLoader


    # Define teacher and student models
    class TeacherModel(nn.Module):
        def __init__(self):
            super().__init__()
            self.network = nn.Sequential(
                nn.Linear(784, 512),
                nn.ReLU(),
                nn.Linear(512, 256),
                nn.ReLU(),
                nn.Linear(256, 10),
            )

        def forward(self, x):
            return self.network(x)


    class StudentModel(nn.Module):
        def __init__(self):
            super().__init__()
            self.network = nn.Sequential(
                nn.Linear(784, 128),  # Smaller model
                nn.ReLU(),
                nn.Linear(128, 10),
            )

        def forward(self, x):
            return self.network(x)


    # Load dataset
    transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5,), (0.5,))])
    train_dataset = datasets.MNIST(root='./data', train=True, transform=transform, download=True)
    train_loader = DataLoader(train_dataset, batch_size=64, shuffle=True)

    # Initialize models
    # NOTE: the teacher is randomly initialized here. Distillation assumes a
    # trained teacher, so train it (or load saved weights) before distilling.
    teacher = TeacherModel()
    student = StudentModel()

    # Loss functions and optimizer
    temperature = 5.0
    alpha = 0.7
    criterion_ce = nn.CrossEntropyLoss()
    criterion_kl = nn.KLDivLoss(reduction='batchmean')
    optimizer = optim.Adam(student.parameters(), lr=0.001)


    # Training loop
    def train_distillation(teacher, student, train_loader, optimizer, criterion_ce, criterion_kl, alpha, temperature):
        teacher.eval()   # the teacher is frozen; only the student is updated
        student.train()
        for epoch in range(5):
            total_loss = 0
            for images, labels in train_loader:
                images = images.view(-1, 28 * 28)  # flatten 28x28 images to 784 features
                with torch.no_grad():
                    teacher_logits = teacher(images)
                student_logits = student(images)

                # Compute soft targets; KLDivLoss expects log-probabilities
                # as input and probabilities as target
                teacher_probs = torch.softmax(teacher_logits / temperature, dim=1)
                student_log_probs = torch.log_softmax(student_logits / temperature, dim=1)

                # KL divergence loss, rescaled by T^2
                loss_kl = criterion_kl(student_log_probs, teacher_probs) * (temperature ** 2)

                # Cross-entropy loss on the hard labels (no temperature)
                loss_ce = criterion_ce(student_logits, labels)

                # Total loss
                loss = alpha * loss_kl + (1 - alpha) * loss_ce

                optimizer.zero_grad()
                loss.backward()
                optimizer.step()
                total_loss += loss.item()
            print(f"Epoch {epoch+1}, Loss: {total_loss / len(train_loader)}")


    # Train the student model
    train_distillation(teacher, student, train_loader, optimizer, criterion_ce, criterion_kl, alpha, temperature)
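The walkthrough distills from a teacher that is assumed to be already trained, but it does not show that step. A minimal sketch of how the teacher could be pre-trained on the hard labels, plus an accuracy check, might look like the following. Both `pretrain_teacher` and `accuracy` are hypothetical helpers introduced for this example, and the default hyperparameters are placeholders rather than tuned values.

```python
import torch
import torch.nn as nn
import torch.optim as optim


def pretrain_teacher(teacher, loader, epochs=1, lr=1e-3):
    """Train the teacher on hard labels with plain cross-entropy.

    `loader` is any iterable of (images, labels) batches, for example
    an MNIST DataLoader.
    """
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(teacher.parameters(), lr=lr)
    teacher.train()
    for _ in range(epochs):
        for images, labels in loader:
            images = images.view(images.size(0), -1)  # flatten to (batch, features)
            loss = criterion(teacher(images), labels)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
    return teacher


@torch.no_grad()
def accuracy(model, loader):
    """Fraction of correctly classified samples in `loader`."""
    model.eval()
    correct = total = 0
    for images, labels in loader:
        preds = model(images.view(images.size(0), -1)).argmax(dim=1)
        correct += (preds == labels).sum().item()
        total += labels.size(0)
    return correct / total
```

In the setup above, this would be called as `pretrain_teacher(teacher, train_loader)` before `train_distillation(...)`. Running `accuracy` on a held-out split for both models is one way to check how much of the teacher's performance the student retains.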

- Model Compression: Use small models for deployment on resource-constrained devices.

- Ensemble Models: Train a student to aggregate the knowledge of multiple teachers.

- Domain Adaptation: Transfer knowledge from a teacher trained on a large dataset to a student in a different domain.

- Multi-task Learning: Distill knowledge from a multi-task teacher to a student specializing in a single task.
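For the ensemble case, one simple option is to average the teachers' softened probability vectors and use the result as the student's soft target. This is sketched here as an assumption rather than a prescribed method, and the function name `average_soft_targets` and the example probabilities are made up for illustration.

```python
def average_soft_targets(teacher_probs):
    """Average per-class probabilities from several teachers for one sample.

    `teacher_probs` is a list with one probability vector per teacher.
    """
    if not teacher_probs:
        raise ValueError("need at least one teacher")
    num_classes = len(teacher_probs[0])
    if any(len(p) != num_classes for p in teacher_probs):
        raise ValueError("all teachers must predict the same classes")
    num_teachers = len(teacher_probs)
    return [sum(p[c] for p in teacher_probs) / num_teachers for c in range(num_classes)]


# Two hypothetical teachers scoring the classes (dog, wolf, cat)
ensemble_target = average_soft_targets([
    [0.7, 0.2, 0.1],
    [0.5, 0.4, 0.1],
])
print([round(p, 3) for p in ensemble_target])
```

Because each input vector sums to 1, the averaged vector is also a valid probability distribution and can stand in for a single teacher's soft targets in the distillation loss.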