GenAI has already had an outsized impact on enterprise productivity. Marc Benioff has said Salesforce will keep its software engineering headcount flat because of a 30% increase in productivity from AI. Users of Microsoft Copilot create or edit 10% more documents.

However, this impact has been evenly distributed. Powerful models are a simple API call away and available to all (as Meta and OpenAI ads make sure to remind us).

The real disruption lies with “data + AI.” In other words, it arrives when organizations combine their first-party data with LLMs to unlock unique insights, automate processes, or accelerate specialized workflows.

No one knows exactly when this tidal wave will hit, but based on our conversations with dozens of teams actively working on data + AI applications, it’s clear the time is nigh.

Why? Well, this follows a pattern we’ve seen before. Multiple times. Every major technology shift sees initial adoption that is magnified once it reaches enterprise-level reliability. We saw this with software and application observability, then with data and data observability, and soon we will see it with data + AI and data + AI observability.

In this post, we’ll highlight the progress of enterprise data + AI initiatives as well as the path many teams are taking to cross the tipping point.

Past is prologue

Data + AI will deliver exponentially more unique value, but it is also exponentially harder.

Most organizations don’t have $500 billion to spare for science fiction-themed initiatives. Enterprise applications need to be economically feasible and reliable.

Looking at past technology advances, namely cloud computing and big data, we can see it typically happens in that order. Infrastructure and capacity breakthroughs create demand, and increased reliability levels are required to sustain it.

Before the internet was powering the world’s most impactful SaaS applications with increasingly critical tasks from banking to real-time navigation, it was primarily the domain of cat pictures, AOL chatrooms, and email chain letters. That change only happened once we reached the fabled “5 9s of reliability.” S3, Datadog, and site reliability engineering practices changed the world.

Before data was powering valuable data products like machine learning models and real-time marketing applications, data warehouses were primarily used to create charts in binders that sat off to the side of board meetings. Snowflake and Databricks changed the economics and capacity of data storage and processing, and data observability brought reliability to the modern data stack.

This pattern is repeating with AI. 2023 was the year of GPUs. 2024 was the year of foundation models. 2025 has already seen dramatic increases in capacity with DeepSeek, and the initial ripple of agentic applications will become a tidal wave.

Our bet is 2026 will be the year when data + AI changes the world…and, if history is any indicator, it will be no coincidence that this revolution is immediately preceded by advances in observability.

Where data + AI teams are today

Data + AI teams are further along than they were last year. Based on our conversations:

- 40% are in the production stage (30% just got there)

- 40% are in the semi- or pre-production stage

- 20% are in the experimentation stage

While you can see the critical mass building, all of them are facing challenges as they try to reach full scale. The most common themes:

Data readiness: You can’t have good AI with bad data. On the structured data side of the house, teams are racing to achieve “AI-ready data.” In other words, they want to create a central source of truth and reduce their data + AI downtime.

On the unstructured side, teams are struggling with conflicting sources and outdated information. One team in particular cited a “fear of an unmanageable knowledge base” as the main impediment to scale.

System sprawl: Today, there is nothing we’d call an industry-standard architecture, although hints are emerging. The data + AI stack is actually four separate stacks coming together: structured data, unstructured data, AI, and oftentimes the SaaS stack.

Each stack on its own is difficult to govern and keep at high reliability levels. Piecing them together is complexity squared. Almost all the data teams we have talked to are trying to consolidate the chaos where they can, for example by leveraging large modern data cloud platforms for many of the core components rather than purpose-built vector databases.

Feedback loops: One of the most common challenges inherent in data + AI applications is that evaluating the output is often subjective. Common approaches include:

- Letting human annotators score outputs

- Tracking user behavior (such as thumbs up/down or accepting a suggestion) as an indirect measure of quality

- Using models (LLMs, SLMs, and others) to score outputs on various criteria

- Comparing outputs with some known ground truth

All approaches have challenges, and correlating system changes with output outcomes is nearly impossible.
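One way to make that correlation less painful is to tag every evaluation signal with the version of the system that produced the output, so scores from different sources can be compared before and after a change. The sketch below is a minimal illustration under that assumption; the `Evaluation` record, its field names, and the source labels are hypothetical and do not come from any particular tool.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Evaluation:
    """One quality signal for one output. All field names are hypothetical."""
    system_version: str  # prompt/model/pipeline version that produced the output
    source: str          # "human", "user_feedback", "model_judge", or "ground_truth"
    score: float         # normalized so that 0.0 is bad and 1.0 is good


def mean_score_by_version(evals):
    """Average the scores for each (system_version, source) pair."""
    buckets = defaultdict(list)
    for e in evals:
        buckets[(e.system_version, e.source)].append(e.score)
    return {key: mean(scores) for key, scores in buckets.items()}


# Made-up sample data, for illustration only.
evals = [
    Evaluation("v1", "human", 0.8),
    Evaluation("v1", "user_feedback", 1.0),  # thumbs up
    Evaluation("v1", "user_feedback", 0.0),  # thumbs down
    Evaluation("v2", "human", 0.6),
    Evaluation("v2", "ground_truth", 1.0),   # matched a known answer
]

for (version, source), avg in sorted(mean_score_by_version(evals).items()):
    print(f"{version} {source}: {avg:.2f}")
```

Keeping the sources separate rather than blending them into one number is a deliberate choice here: a drop in human scores alongside steady user feedback tells a different story than a drop in both.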

Cost & latency: The progress of model capacity and cost is breathtaking. During a recent presentation, Tomasz Tunguz, a leading venture capitalist in the AI space, shared a graph showing how smaller (less expensive) models are reaching performance levels similar to those of larger models.

But we aren’t quite at commodity infrastructure prices just yet. Most teams we spoke with had concerns about the financial impact of AI adoption. If there was any monitoring going on, it was more often than not on tokens and cost rather than outcome reliability.
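To illustrate what tracking outcome reliability next to tokens and cost could look like, here is a rough sketch. Everything in it is an assumption made for the example: the `CallRecord` fields, the `summarize` helper, and especially the per-token prices, which are placeholders rather than any provider's real rates.

```python
from dataclasses import dataclass


@dataclass
class CallRecord:
    """One model call. Field names are hypothetical."""
    input_tokens: int
    output_tokens: int
    latency_ms: float
    outcome_ok: bool  # did the response pass whatever quality check the team uses?


# Placeholder prices in USD per 1,000 tokens; real prices vary by provider and model.
PRICE_PER_1K_INPUT = 0.001
PRICE_PER_1K_OUTPUT = 0.002


def summarize(records):
    """Report cost and latency together with how often the outcome was acceptable."""
    if not records:
        raise ValueError("no records to summarize")
    total_cost = sum(
        r.input_tokens / 1000 * PRICE_PER_1K_INPUT
        + r.output_tokens / 1000 * PRICE_PER_1K_OUTPUT
        for r in records
    )
    successes = sum(1 for r in records if r.outcome_ok)
    return {
        "total_cost_usd": total_cost,
        "mean_latency_ms": sum(r.latency_ms for r in records) / len(records),
        "success_rate": successes / len(records),
        # Cost per acceptable outcome; None when nothing succeeded.
        "cost_per_success_usd": total_cost / successes if successes else None,
    }


# Made-up sample data, for illustration only.
records = [
    CallRecord(1000, 500, 800.0, True),
    CallRecord(2000, 1000, 1200.0, False),
    CallRecord(1000, 500, 1000.0, True),
]
print(summarize(records))
```

Cost per successful outcome is the figure that ties the two concerns together: a cheaper model that fails more often can end up costing more per useful answer.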

The next frontier: Data + AI observability

Data + AI is an evolving space with unique challenges, but the principles of building reliable technology systems have remained consistent for decades.

One of those core principles is this: you cannot just sporadically inspect the product at the end of the assembly line, or even at certain points along it. Instead, you need full visibility into the assembly line itself. For complex systems, it’s the only way to identify issues early and trace them back to the root cause.

But you need to observe the whole system. End-to-end. It doesn’t work any other way.

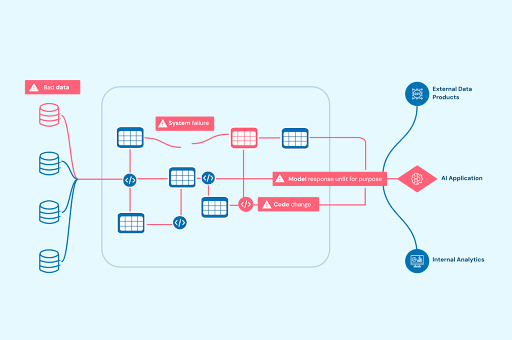

To achieve data + AI reliability, teams will not succeed by observing models in a vacuum. For data + AI observability, that means integrations across the core system components. In other words, it means covering the four ways data + AI products break: in the data, system, code, or model.

Detecting, triaging, and resolving issues will require visibility into structured/unstructured data, orchestration/agent systems, prompts, contexts, and model responses. (Stay tuned for an upcoming deep dive on exactly what this means and how each component breaks.)
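As a loose illustration of why visibility across all four components matters for triage, the sketch below records one step per component for a single request and walks back to the earliest failure. The `Span` record, the step names, and the failure details are all hypothetical; this is not a description of any specific observability product.

```python
from dataclasses import dataclass

# The four places a data + AI product can break, as listed above.
COMPONENTS = ("data", "system", "code", "model")


@dataclass
class Span:
    """One observed step in a request. Field names are hypothetical."""
    component: str
    name: str
    ok: bool
    detail: str = ""

    def __post_init__(self):
        if self.component not in COMPONENTS:
            raise ValueError(f"unknown component: {self.component}")


def first_failure(trace):
    """Return the earliest failing span in execution order, or None if all passed."""
    for span in trace:
        if not span.ok:
            return span
    return None


# Made-up trace: the model's bad answer is a symptom of stale upstream data.
trace = [
    Span("data", "load_knowledge_base", ok=False, detail="source document is stale"),
    Span("system", "retrieve_context", ok=True),
    Span("code", "build_prompt", ok=True),
    Span("model", "generate_response", ok=False, detail="answer contradicts current policy"),
]

culprit = first_failure(trace)
print(culprit.component, culprit.name, culprit.detail)
```

Observing only the final span would flag the model, while the trace points at the data step that ran first, which is the argument for watching the whole system rather than the model alone.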

Data + AI are no longer two separate technologies; they’re a single system. By next year, let’s hope we’re treating it like one.

Change happens slowly, then all at once

We’re at that precipice with data + AI.

No organization will be surprised by the what or the how. Every member of the boardroom, the C-suite, and the breakroom has seen how past platform shifts have created Blockbusters and Netflixes.

The surprise will be in the when and the where. Every organization is racing, but they don’t know when to pivot, when to break into a sprint, or even where to run.

Standing still is not an option, but no one wants to use rapidly evolving infrastructure to build bespoke AI applications that will quickly become commoditized. No one wants their picture accompanying the next AI hallucination headline.

It’s clear that achieving reliability at scale will be the tipping point that crowns new industry titans. Our recommendation: as the data + AI space matures, make sure you are prepared to pivot.

Because if the past has shown us anything, it’s that the organizations with the right foundations for building reliable systems with high levels of data readiness will be the ones crossing the finish line.