The normal distribution is essential in statistics and machine learning because many real-world datasets approximately follow it, which lets us make accurate predictions and inferences. In statistics, it is the foundation for key techniques such as hypothesis testing, confidence intervals, and regression analysis, which rely on the assumption of normally distributed errors.

Several machine learning algorithms also rely on normality, or tend to behave better when it holds. Linear Regression assumes that the residuals (errors) follow a normal distribution, which matters for valid hypothesis tests and confidence intervals on the coefficients. Logistic Regression is often grouped with it, although it models a binary outcome and makes no normality assumption about residuals. Gaussian Naive Bayes explicitly assumes that each feature is normally distributed within each class when it calculates probabilities. Similarly, Linear Discriminant Analysis (LDA) assumes that the data from every class follows a Gaussian distribution with different means but a shared covariance. Principal Component Analysis (PCA) makes no distributional assumption, but its variance-maximizing components summarize the data most completely when the data is roughly Gaussian. Distance-based methods such as Support Vector Machines (SVM) with an RBF kernel and K-Nearest Neighbors (KNN) do not require normality either, though they often work more effectively on roughly symmetric, well-scaled features. When the data is not normally distributed, transformations such as the log transformation or the Box-Cox transformation can help improve model performance.

Furthermore, the Central Limit Theorem (CLT) states that the distribution of sample means approaches a normal distribution as the sample size increases, regardless of the original data distribution (provided it has finite variance). This property lets us use the normal distribution for probabilistic reasoning about averages and for building robust models, even when the underlying data is not itself normal. The approximation improves with sample size, so it should be applied with care to small datasets.
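To see the theorem at work, here is a minimal simulation sketch using only the Python standard library. The exponential distribution, the fixed seed, and the helper name `fraction_within_one_sd` are illustrative assumptions. The idea: for a normal distribution about 68% of values lie within one standard deviation of the mean, so we check how close the sample means get to that share.

```python
import math
import random

rng = random.Random(42)  # fixed seed so the run is repeatable


def fraction_within_one_sd(n, trials=5000):
    """Share of sample means (each over n exponential draws) that land
    within one standard error of the true mean."""
    true_mean = 1.0                  # mean of Exponential(rate=1)
    std_error = 1.0 / math.sqrt(n)   # its std dev is 1, so SE = 1/sqrt(n)
    hits = 0
    for _ in range(trials):
        mean = sum(rng.expovariate(1.0) for _ in range(n)) / n
        if abs(mean - true_mean) <= std_error:
            hits += 1
    return hits / trials


frac_n1 = fraction_within_one_sd(1)      # raw, heavily skewed data
frac_n100 = fraction_within_one_sd(100)  # means of 100 draws

print(f"n = 1:   {frac_n1:.3f}")
print(f"n = 100: {frac_n100:.3f}")
```

With single draws the share sits well above 68% because the exponential distribution is skewed. With means of 100 draws it should settle close to the 68% expected of a normal distribution.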

Explanation in Layman's Terms

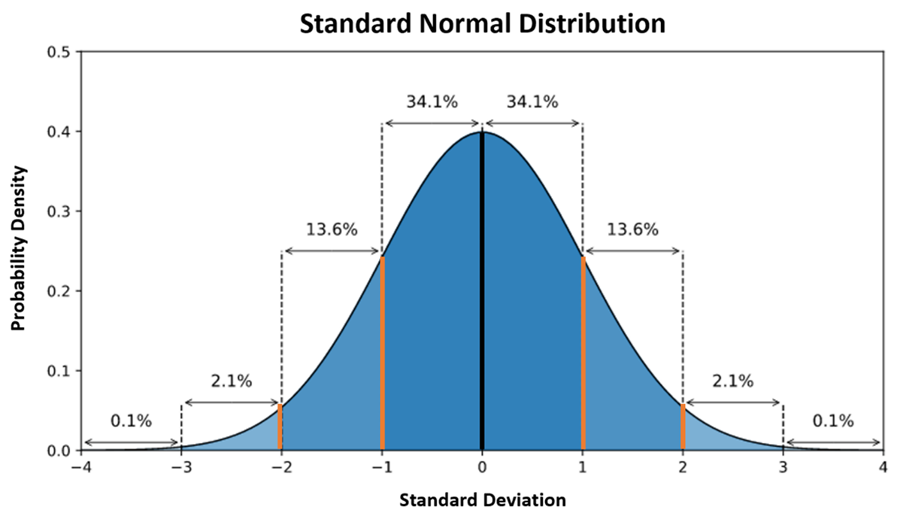

The normal distribution, also known as the Gaussian distribution, is a fundamental concept in statistics and machine learning. It is a bell-shaped, symmetric probability distribution in which most data points cluster around the mean, with fewer values appearing as you move farther from the center. The distribution is fully defined by two parameters: the mean (μ), which represents the center, and the standard deviation (σ), which measures the spread. The 68–95–99.7 rule states that approximately 68% of the data falls within one standard deviation of the mean, 95% within two, and 99.7% within three, as illustrated in the accompanying image.
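The 68–95–99.7 figures can be checked directly. A small sketch, assuming the standard identity P(|X − μ| ≤ kσ) = erf(k/√2) for a normal variable; the function name `prob_within` is just an illustrative choice:

```python
import math


def prob_within(k):
    """P(|X - mu| <= k * sigma) for a normal distribution,
    computed with the error function."""
    return math.erf(k / math.sqrt(2))


coverage = {k: prob_within(k) for k in (1, 2, 3)}
for k, p in coverage.items():
    print(f"within {k} standard deviation(s): {p:.4f}")
```

The three values come out at roughly 0.6827, 0.9545 and 0.9973, and they do not depend on the particular μ and σ.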

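Formula

The formula for the normal distribution:

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}} \, e^{-\frac{(x-\mu)^2}{2\sigma^2}}$$

What does each variable in the formula mean?

f(x) represents the probability density at the point x

σ represents the standard deviation of the data (σ² is the variance)

π represents the mathematical constant pi (approximately 3.14159)

μ represents the mean of the data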
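As a rough sketch, the density can be written out term by term in Python, assuming the standard form f(x) = 1/(σ√(2π)) · e^(−(x−μ)²/(2σ²)). The function name `normal_pdf` and the example values (heights in cm) are hypothetical. `statistics.NormalDist` from the standard library is used only as a cross-check.

```python
import math
from statistics import NormalDist


def normal_pdf(x, mu, sigma):
    """Probability density of a normal distribution at x."""
    coefficient = 1.0 / (sigma * math.sqrt(2.0 * math.pi))  # normalizing part
    exponent = -((x - mu) ** 2) / (2.0 * sigma ** 2)        # shape part
    return coefficient * math.exp(exponent)


mu, sigma = 170.0, 10.0  # hypothetical example: heights in cm
density_at_mean = normal_pdf(170.0, mu, sigma)
density_at_180 = normal_pdf(180.0, mu, sigma)
reference_at_180 = NormalDist(mu, sigma).pdf(180.0)

print(density_at_mean, density_at_180, reference_at_180)
```

Note that f(x) is a density, not a probability: probabilities come from the area under the curve over an interval.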

Understanding the formula step by step

Part I: Let us first focus on the exponential part of the formula and understand exactly what it means.

This is the core part of the normal distribution formula, which is:

$$e^{-\frac{(x-\mu)^2}{2\sigma^2}}$$

This part is responsible for shaping the bell curve.

It controls how the probability decreases as you move away from the mean (μ).

The exponential function e^(−x) shrinks very quickly as x increases.

- If x = μ, this part becomes 1 (because e⁰ = 1).

- If x is far from μ, this part shrinks very quickly towards 0.

- (x−μ): This measures how far the point x is from the mean μ.

- Squaring it, (x−μ)², makes sure the distance is never negative (because a distance cannot be negative).

- Dividing by 2σ²: This controls how spread out the curve is, depending on the variance.

So, setting the exponential (and its minus sign) aside for now, the remaining part is half the square of the Z-score: with z = (x−μ)/σ, we get (x−μ)²/(2σ²) = z²/2.
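A quick numeric check of that relationship, as a sketch with made-up values for μ, σ and x: computing (x−μ)²/(2σ²) directly and computing z²/2 from the Z-score z = (x−μ)/σ should give the same number.

```python
import math

mu, sigma = 50.0, 5.0  # hypothetical mean and standard deviation

rows = []
for x in (40.0, 50.0, 65.0):
    z = (x - mu) / sigma                         # Z-score of x
    direct = (x - mu) ** 2 / (2 * sigma ** 2)    # exponent without the minus sign
    via_z = z ** 2 / 2                           # same quantity from the Z-score
    rows.append((x, z, direct, via_z))
    print(f"x={x:5.1f}  z={z:5.2f}  direct={direct:.3f}  z^2/2={via_z:.3f}")
```

For x = 40 the Z-score is −2 and both expressions give 2.0. For x = 65 the Z-score is 3 and both give 4.5.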

Why the Square of the Z-Score?

- Squaring makes the left and right sides of the curve mirror each other (symmetry).

- Without squaring, it would not be a bell curve.

The plot of the square of the Z-score is:

In this graph, the x-axis shows the data points and the y-axis shows the squared Z-score. The Z-score itself is not bounded: it ranges from negative infinity to positive infinity, while its square starts at zero at the mean and grows identically on both sides. When we plot the frequency of Z-scores for normally distributed data, it forms a bell curve.
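The symmetry can also be tabulated. A minimal sketch, assuming a standard normal setup (μ = 0, σ = 1) and a hypothetical helper `squared_z`: points at equal distances to the left and right of the mean have Z-scores of opposite sign but the same square.

```python
def squared_z(x, mu, sigma):
    """Square of the Z-score of x."""
    z = (x - mu) / sigma
    return z ** 2


mu, sigma = 0.0, 1.0  # assumed values for illustration
pairs = []
for offset in (0.5, 1.0, 2.0):
    left = squared_z(mu - offset, mu, sigma)
    right = squared_z(mu + offset, mu, sigma)
    pairs.append((offset, left, right))
    print(f"offset {offset}: left z^2 = {left}, right z^2 = {right}")
```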

(x−μ)² / (2σ²)

Why is the squared Z-score also divided by 2?

- Dividing by 2 slows down the growth of the exponent.

- The factor comes out of the calculus-based derivation of the Gaussian.

- More precisely, the factor of 2 is what makes σ² equal to the variance of the distribution. Without it, the variance of the curve would be σ²/2.
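The role of the 2 can be checked numerically. The sketch below is only an illustration, with a hypothetical helper `variance_of_kernel`, an arbitrary σ = 3, and a plain midpoint sum in place of exact calculus. It measures the variance of the curve with and without the factor of 2 in the exponent.

```python
import math


def variance_of_kernel(kernel, lo=-50.0, hi=50.0, steps=200_000):
    """Variance of the density proportional to kernel(x), centred at 0,
    estimated with a midpoint Riemann sum."""
    dx = (hi - lo) / steps
    area = 0.0
    second_moment = 0.0
    for i in range(steps):
        x = lo + (i + 0.5) * dx
        weight = kernel(x)
        area += weight * dx
        second_moment += x * x * weight * dx
    return second_moment / area  # dividing by the area normalizes the curve


sigma = 3.0
with_two = variance_of_kernel(lambda x: math.exp(-x ** 2 / (2 * sigma ** 2)))
without_two = variance_of_kernel(lambda x: math.exp(-x ** 2 / sigma ** 2))

print(f"exponent with the 2:    variance = {with_two:.4f}")
print(f"exponent without the 2: variance = {without_two:.4f}")
```

With the 2 in place the variance comes out at σ² = 9. Without it the curve is narrower and the variance is σ²/2 = 4.5.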

Why do we take the exponential of the negative squared Z-score divided by 2?

This is THE MOST IMPORTANT PART of the normal distribution!

The exponential function is used to “punish” extreme points.

- In a normal distribution, most data points are close to the mean (μ).

- Data points that are too far from the mean should have a very low probability.

- The exponential function ensures that these extreme values get very small weights (probabilities).

What do you observe?

- The highest value is exactly at the mean (x = μ), where the exponential term equals 1. This is the height of the unnormalized curve, not a probability.

- As you move away from the mean, the value rapidly drops to almost zero.

- The drop is smooth and symmetric on both sides, thanks to the squared Z-score.

What is happening here?

The exponential function is “punishing” extreme values, so:

- Points near the mean get a high probability.

- Points far from the mean get crushed to almost zero.
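To put numbers on the “punishment”, here is a small sketch that evaluates the exponential term e^(−z²/2) at a few Z-scores. The dictionary name `weights` is just an illustrative choice.

```python
import math

# Unnormalized weight e^(-z^2 / 2) at increasing distances from the mean,
# where z is the distance measured in standard deviations.
weights = {z: math.exp(-(z ** 2) / 2) for z in (0, 1, 2, 3, 4)}

for z, w in weights.items():
    print(f"z = {z}: weight = {w:.6f}")
```

The weight is exactly 1 at the mean, about 0.61 one standard deviation away, about 0.14 at two, about 0.011 at three, and about 0.0003 at four.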

How will the plot change when the standard deviation varies?

- When σ is small, the exponential function punishes extreme values more harshly, making the curve very steep.

- When σ is large, the punishment is gentle, allowing more data points to spread out.

- This is exactly how the normal distribution controls the spread of the data.

- By changing σ, you control the “tightness” of the bell curve.
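The effect of σ can be sketched by holding a point at a fixed distance from the mean and varying only σ. The values below (a point 2 units from the mean, σ of 0.5, 1 and 2) and the helper name `kernel` are assumptions chosen for illustration.

```python
import math


def kernel(x, mu, sigma):
    """Exponential part of the normal density, without the normalizing constant."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2))


mu = 0.0
x = 2.0  # a point two units away from the mean
weight_by_sigma = {sigma: kernel(x, mu, sigma) for sigma in (0.5, 1.0, 2.0)}

for sigma, weight in weight_by_sigma.items():
    print(f"sigma = {sigma}: weight at x = {x} is {weight:.6f}")
```

The same point is weighted at about 0.0003 when σ = 0.5, about 0.14 when σ = 1, and about 0.61 when σ = 2, which is the narrow-versus-wide bell in numeric form.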

Part II: Now we will understand the remaining part of the formula.

$$\frac{1}{\sigma\sqrt{2\pi}}$$

Which can also be written as:

$$\frac{1}{\sqrt{2\pi\sigma^2}}$$

In probability distributions, the total area under the curve must be exactly 1, because the total probability of all possible outcomes is 1.

- This part ensures that the total area under the bell curve is exactly 1, which is a key property of any probability distribution.

- It scales the curve so that the probabilities calculated from the distribution are valid and add up to 1.

- Without this factor, the curve might be too high or too low, leading to incorrect probabilities.

Mathematical Proof (Using Integration):

The integral of the exponential part of the normal distribution is:

$$\int_{-\infty}^{\infty} e^{-\frac{(x-\mu)^2}{2\sigma^2}} \, dx$$

The result of this integral is:

$$\sigma\sqrt{2\pi}$$

So, to balance this out and make the area equal to 1, we divide by the same value.

This part normalizes the curve, ensuring that the total probability is exactly 1, which is a core requirement of any probability distribution.
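The normalization can be verified numerically. This is a sketch rather than a proof: it approximates the area under the exponential part with a midpoint sum over μ ± 10σ (the tails beyond that are negligible) and compares it with σ√(2π). The values of μ and σ and the helper names are arbitrary illustrative choices.

```python
import math


def kernel(x, mu, sigma):
    """Exponential part of the normal density (no normalizing constant)."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2))


def midpoint_area(func, lo, hi, steps=100_000):
    """Approximate the integral of func over [lo, hi] with a midpoint sum."""
    dx = (hi - lo) / steps
    return sum(func(lo + (i + 0.5) * dx) for i in range(steps)) * dx


mu, sigma = 2.0, 1.5  # assumed example parameters
lo, hi = mu - 10 * sigma, mu + 10 * sigma

raw_area = midpoint_area(lambda x: kernel(x, mu, sigma), lo, hi)
constant = sigma * math.sqrt(2 * math.pi)
normalized_area = raw_area / constant

print(f"area under the exponential part: {raw_area:.6f}")
print(f"sigma * sqrt(2 * pi):            {constant:.6f}")
print(f"area after dividing:             {normalized_area:.6f}")
```

The raw area matches σ√(2π), and dividing by that constant brings the total area to 1.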

I hope this article explains the intuition behind the normal distribution formula. Cheers!