What Is a Large Language Model?

In simple terms, it's a neural network designed to understand, generate, and respond to text in a natural, human-like way. You can think of it as a deep neural network that learns by training on vast amounts of text data.

Large Language Models vs. Natural Language Processing

In the early stages of Natural Language Processing (NLP), neural network models were usually designed and trained for specialized tasks, such as text classification, spam detection, or language translation. These traditional NLP models were typically task-specific, which limited their ability to adapt beyond their initial training purpose.

In contrast, Large Language Models (LLMs) represent a significant advance. With billions of parameters and training on massive volumes of text, LLMs excel at a wide variety of NLP tasks, including question answering, sentiment analysis, and language translation. Unlike earlier NLP models, LLMs offer remarkable flexibility, allowing them to generalize and perform more nuanced tasks, such as composing emails, generating creative text, or engaging in conversation.

What's the secret sauce that makes LLMs so good?

The answer lies in the paper “Attention Is All You Need,” which introduced the Transformer architecture, the neural network design that underpins most modern LLMs.

Transformers use a mechanism called “attention,” which lets the model weigh how relevant each part of the input is to the others, so it can process the input effectively and produce meaningful natural-language output.

Think of the Transformer architecture like attending a busy dinner party: while many conversations happen at once, your brain effortlessly focuses on the ones most relevant to you and filters out the background chatter. Similarly, transformers allow language models to “pay attention” selectively to the most important parts of the input, helping them generate coherent, contextually relevant responses.
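To make the idea of selective attention a bit more concrete, here is a minimal sketch of scaled dot-product attention, the core operation described in “Attention Is All You Need,” for a single query. It is an illustration only: the two-dimensional vectors are made-up numbers, the function names are hypothetical, and a real Transformer adds learned projection matrices, multiple attention heads, and many stacked layers.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # Similarity between the query and every key, scaled by sqrt(dimension)
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is the attention-weighted average of the value vectors
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Toy vectors for three input tokens (assumed values, for illustration only)
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

weights, output = attention(query, keys, values)
print("attention weights:", weights)
print("output vector:", output)
```

The first and third tokens receive more weight than the second because their keys point in a direction closer to the query, which is the numerical counterpart of tuning in to the most relevant conversation at the party.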

Applications of Large Language Models:

LLMs are invaluable for automating almost any task that involves parsing and generating text. Their range of applications is broad, and as we continue to find new ways to use these models, LLMs have the potential to reshape our relationship with technology, making it more conversational, intuitive, and accessible.

Some prominent applications of LLMs include:

- Conversational AI and Chatbots: Natural, human-like interactions in customer support and virtual assistants.

- Content Generation: Writing articles, emails, reports, and creative stories.

- Translation and Language Understanding: Seamless language translation and comprehension.

- Sentiment Analysis and Emotion Detection: Understanding customer feedback, social media monitoring, and market analysis.

- Summarization and Information Extraction: Automatically summarizing lengthy documents or extracting key insights.

- Code Generation and Assistance: Writing, debugging, or assisting developers with programming tasks.

- Educational Tools: Interactive learning, personalized tutoring, and automated assessments.

- Accessibility Tools: Supporting assistive technologies that help people interact more effectively with digital platforms.

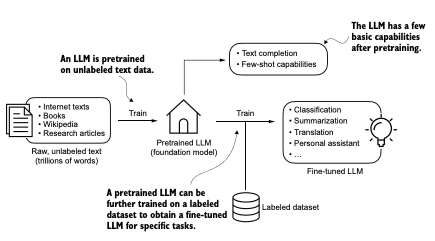

Stages of Building an LLM:

Coding an LLM from the ground up is an excellent exercise for understanding its mechanics and limitations. It also equips us with the knowledge needed to pretrain or fine-tune existing open-source LLM architectures on our own domain-specific datasets or tasks.

In simpler terms, the stages of building an LLM can be expressed with a basic equation:

LLM = Pre-training + Fine-tuning

- Pre-training means training the model initially on vast amounts of general text data.

- Fine-tuning means refining the model by training it further on smaller, more specific datasets to handle particular tasks.

Pre-training (Learning the Basics)

Imagine teaching someone general knowledge by having them read thousands of books on different topics. This gives them a broad understanding of language and the ability to talk about many subjects. Similarly, in pre-training, an LLM learns language patterns from huge amounts of general text, such as large portions of the internet and many books. A good example is GPT-3, a model trained to predict the next word and thereby complete sentences based on what it has learned from vast text data. The result is a model that can finish half-written sentences or answer simple questions using this basic understanding.
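The next-word objective behind pre-training can be illustrated with a deliberately tiny stand-in. The sketch below is not how GPT-3 works internally (GPT-3 is a large Transformer neural network); it swaps in a simple bigram counter that only remembers which word most often follows another. The corpus and function names are hypothetical and chosen for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for every word, which words follow it in the text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# A toy "training corpus" (made up for this example)
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept ."
model = train_bigram_model(corpus)

print(predict_next(model, "the"))      # most common word after "the"
print(predict_next(model, "unicorn"))  # a word never seen in training
```

An LLM pursues the same goal, predicting what comes next, but it conditions on the whole preceding context rather than a single word and learns from vastly more text, which is what allows it to generalize instead of only repeating counts.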

Fine-tuning (Specialized Training)

After general training, the model is refined through fine-tuning to handle specific tasks. Think of fine-tuning as specialized training or advanced education after college. It can be done in two main ways:

- Instruction fine-tuning: The model is given examples of instructions paired with correct responses (e.g., how to translate sentences). It's like training an assistant by giving them clear examples of how to do specific tasks.

- Classification fine-tuning: The model learns to categorize text into specific groups (e.g., labeling emails as spam or not spam). It's similar to teaching someone to sort letters into different bins based on their content.
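The difference between the two fine-tuning styles shows up mostly in what the training data looks like. The sketch below illustrates that under stated assumptions: the field names (`instruction`, `input`, `response`, `text`, `label`), the prompt layout, and the helper functions are hypothetical choices for this example, not a required or standard format.

```python
# Instruction fine-tuning data: an instruction (plus input) paired with the desired response.
instruction_examples = [
    {"instruction": "Translate to German.", "input": "Good morning",
     "response": "Guten Morgen"},
    {"instruction": "Summarize in one sentence.",
     "input": "LLMs are neural networks trained on large amounts of text.",
     "response": "LLMs learn language from large text corpora."},
]

# Classification fine-tuning data: a text paired with a class label.
classification_examples = [
    {"text": "You won a free prize, click here!", "label": "spam"},
    {"text": "Are we still meeting at 3 pm?", "label": "not spam"},
]

def format_instruction(example):
    """Build the prompt the model sees; the response is the training target."""
    prompt = (
        f"Instruction: {example['instruction']}\n"
        f"Input: {example['input']}\n"
        "Response:"
    )
    return prompt, example["response"]

def encode_labels(examples):
    """Map each distinct label to an integer class id, in sorted order."""
    labels = sorted({ex["label"] for ex in examples})
    label_to_id = {label: i for i, label in enumerate(labels)}
    pairs = [(ex["text"], label_to_id[ex["label"]]) for ex in examples]
    return pairs, label_to_id

prompt, target = format_instruction(instruction_examples[0])
print(prompt)
print(target)

pairs, label_to_id = encode_labels(classification_examples)
print(label_to_id)
print(pairs)
```

In the instruction case the model is trained to generate free-form text as its answer, while in the classification case it only has to choose among a fixed set of class ids.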

In short, building an LLM is like educating a person: first you give them broad general knowledge (pre-training), then you teach them specialized skills to excel at specific jobs (fine-tuning).

Summary of Chapter 1: Introduction to Large Language Models

Large Language Models (LLMs) represent a major advance in the fields of Natural Language Processing (NLP) and deep learning. They have evolved significantly beyond traditional NLP models, demonstrating greater versatility in handling a wide range of language tasks.

Compared to earlier NLP models, LLMs are more capable, performing complex tasks with better accuracy and flexibility. Their strength lies primarily in their architecture, specifically the Transformer architecture, which allows the model to selectively pay attention to the most relevant parts of the input. This makes them exceptionally powerful at understanding and generating text.

In short, LLMs build upon and surpass traditional NLP models by combining large-scale training with Transformer-based techniques, allowing them to handle diverse and complex language tasks.

Reference: Build a Large Language Model (From Scratch) by Sebastian Raschka