We ask ourselves: can Reinforcement Learning solve Common Pool Resource (CPR) problems? To answer that, we will build a fast environment in C using pufferlib and train PPO on it. We want to know whether the agents deplete the resource or manage to find an optimal balance.

A common pool resource is a type of good that suffers from overuse because it is limited. It typically consists of a core resource, which defines the stock variable, while providing a limited quantity of extractable units, which defines the flow variable. The core resource must be protected to ensure sustainable exploitation, while the flow variable can be harvested. (source)

Reinforcement Learning is currently cursed with very slow environments and algorithms, plus many hyperparameters, which results in a lot of bad research. Indeed, it is close to impossible to benchmark and compare solutions when you need to run hyperparameter sweeps in high dimensions and each run takes days.

Pufferlib is an open-source project aiming to solve that. Mainly, they provide fast envs running at millions of steps per second, which lets you run sweeps much faster. They are also building faster algorithm implementations and faster, better hyperparameter search tools.

All of this unlocks good research. Everyone can contribute by writing new envs for specific research use cases. We will fork their repo and take inspiration from their basic C envs to make our own.

Setup

Fork the repo. You can use the 2.0 branch (at the time of writing) or the dev branch, but keep in mind that dev might be broken. I don't have a GPU, so I can't use puffertank (their container, which actually makes things easier to work with). You want to interface your C env with Python, and I find Python 3.10.12 works just fine. Create a virtual environment to make things easier.

You'll need to install a lot of dependencies, and pufferlib currently doesn't make it very easy to use without a GPU. You can run their demo file with

python demo.py --env puffer_connect4 --train.device cpu

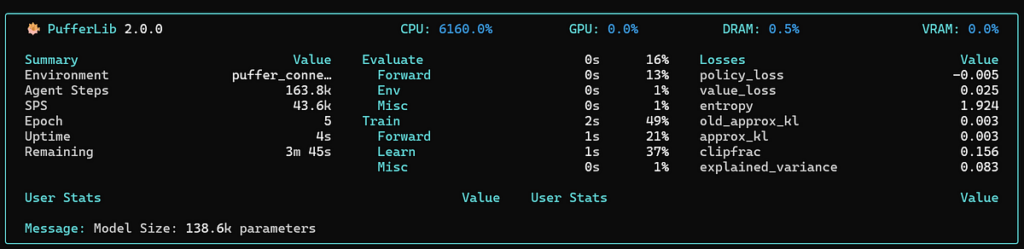

Or some other env actually, you possibly can simply have a look at their documentation here. This must be displaying the puffer console, and begins coaching:

Once this runs, you should be fine. You want to make a new C env inside their pufferlib/ocean/ folder. Create a new one; I'll name mine cpr/. You want a .h and a .c file. The .c file will mainly be for testing, because in the end you'll interface the env with Cython through a .pyx and a .py file.

You can start by reading or copy-pasting their squared env files, or snake (a bit more advanced).

The C environment is pretty simple. Let's look at our .h file, but first, let's describe the environment.

CPR Environment

This will be a grid-based world with multiple agents. Each agent has 5 possible actions: Up, Down, Right, Left, Nothing.

The agents have partial observations of the environment, limited to a certain radius, so the observations have to be computed for a (2*radius+1) by (2*radius+1) grid around each agent.

One step of the environment means stepping every agent with its action, read from a buffer. Agent turn order is currently not random: agents are stepped one after another, so we simply avoid collision issues, since each agent sees the grid as already updated by the agents before it.

There's food in this world, of two types:

- Gray food: an agent collects it whenever it steps onto the cell containing the gray food

- Yellow food: this food requires two agents to stand next to it (only the 4 adjacent squares, no diagonals), and then it gets collected

The food respawn mechanism is roughly as follows:

- Food can only respawn next to another food of the same type

- Every step, for every food currently on the map, there is a probability of a new food spawning next to it (8 adjacent squares, diagonals included). The probability is defined per food type

- There is a very low probability for a new food to spawn randomly on the map, to handle full resource depletion

The agents get rewarded:

- Every time they collect a food (different rewards for different types)

- Every step (usually a small, negative reward)
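As a rough illustration, the per-step reward for one agent can be written as a constant step term plus a bonus that depends on the type of food collected. This is a minimal sketch: the constants, the food ids and the `step_reward` helper are hypothetical, not the values or names used in the actual env.

```c
#include <assert.h>

/* Hypothetical reward values; the real env defines its own per food type. */
#define REWARD_GRAY    1.0f
#define REWARD_YELLOW  5.0f
#define REWARD_STEP   -0.01f   /* small negative reward applied every step */

/* Hypothetical food type ids. */
enum { NO_FOOD = 0, GRAY_FOOD = 1, YELLOW_FOOD = 2 };

/* Reward for one agent on one step, given what (if anything) it collected. */
float step_reward(int collected_food_type) {
    float r = REWARD_STEP;
    switch (collected_food_type) {
        case GRAY_FOOD:   r += REWARD_GRAY;   break;
        case YELLOW_FOOD: r += REWARD_YELLOW; break;
        case NO_FOOD:     break;
        default: assert(0 && "unknown food type");
    }
    return r;
}
```

The step penalty pushes agents to collect food quickly; whether it also pushes them toward depleting it is exactly the question this env is meant to probe.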

The goal of the agents is to maximize the expected reward over the long run. We expect the agents to learn to navigate and collect each type of food, and, hopefully, we will observe what happens when agents learn to deplete the food. Intuitively, we might think that the optimal strategy is to leave some food on the table so it can regenerate, but we'll see that solving this is much harder than we thought. Let's now describe the code.

Basic RL environment structure

We need to follow the standard structure of an RL environment. Therefore, we need at least:

- A reset function, which resets the environment to a starting state

- A step function, which steps the whole environment with the actions

- A render function

Macros

Useful macros are basically the ids for entity types. I recommend putting agents, or any entities that need to be told apart, at the end of the ids, as this helps the code later on. I also separate obstacles from non-obstacles, which is useful in small envs.
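A minimal sketch of what such entity ids could look like, assuming non-obstacles come first, then obstacles, and agents last. With this ordering, "is this an agent?" or "does this block movement?" becomes a single comparison. All ids and helper names here are hypothetical, and treating agents as obstacles is an assumption.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical entity ids: non-obstacles, then obstacles, agents last. */
#define EMPTY        0
#define GRAY_FOOD    1
#define YELLOW_FOOD  2
#define WALL         3   /* first obstacle id */
#define AGENTS       4   /* agent i is stored in the grid as AGENTS + i */

/* Walls and agents both block movement in this sketch. */
static inline bool is_obstacle(int cell) { return cell >= WALL; }
static inline bool is_agent(int cell)    { return cell >= AGENTS; }

/* Recover the agent index from a grid cell value. */
static inline int agent_id(int cell) {
    assert(is_agent(cell));
    return cell - AGENTS;
}
```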

I also have macros related to bitmasking, but these can be avoided; see below for an explanation.

Logs

The first chunk of the .h file is mainly for logging. We want to be able to add and clear logs. What you put in your logs is up to you, but at a minimum I recommend having some score metric. The reason to name it score is that this is the default metric the sweep algorithms look for and try to maximize, though you can name it anything and change this setting in the config files afterwards.
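A sketch of such a log, assuming it accumulates per-episode values and is averaged when read. `Log`, `add_log`, `clear_log`, `mean_score` and the extra fields are hypothetical names; the only point carried over from the text is that the metric field is called `score`.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical log: running sums over finished episodes. */
typedef struct {
    float score;           /* default metric the sweep maximizes */
    float episode_return;
    float episode_length;
    float n;               /* number of episodes accumulated */
} Log;

/* Accumulate one finished episode. */
void add_log(Log *log, float score, float ep_return, float ep_length) {
    assert(log != NULL);
    log->score += score;
    log->episode_return += ep_return;
    log->episode_length += ep_length;
    log->n += 1.0f;
}

/* Mean score over accumulated episodes (0 if none). */
float mean_score(const Log *log) {
    return log->n > 0.0f ? log->score / log->n : 0.0f;
}

/* Reset all sums, e.g. after the trainer has read them. */
void clear_log(Log *log) {
    memset(log, 0, sizeof(*log));
}
```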

Agent

We define an Agent struct. It has an id, a row and a column; very simple.

FoodList

We make a struct for FoodList. This is an optimization. At some point, we want to iterate through the food on the map so we can spawn more food next to it. Without a FoodList, we would need to iterate over the whole grid. This saves some computation at the cost of slightly more memory. We store the flattened grid indexes directly, since we want to iterate over them easily.
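Below is a minimal FoodList sketch under these assumptions: it stores flattened indices (`row * width + col`) in a fixed-capacity array, appends in O(1), and removes by swapping the last element into the hole. The struct layout and function names are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical FoodList: flattened grid indices of every food cell. */
typedef struct {
    int *indexes;
    int size;
    int capacity;   /* at most width * height */
} FoodList;

FoodList *allocate_foodlist(int capacity) {
    FoodList *list = malloc(sizeof(FoodList));
    if (!list) return NULL;
    list->indexes = malloc(sizeof(int) * (size_t)capacity);
    if (!list->indexes) { free(list); return NULL; }
    list->size = 0;
    list->capacity = capacity;
    return list;
}

void free_foodlist(FoodList *list) {
    if (list) { free(list->indexes); free(list); }
}

/* O(1) append. */
void foodlist_add(FoodList *list, int flat_index) {
    assert(list->size < list->capacity);
    list->indexes[list->size++] = flat_index;
}

/* Linear search, then O(1) swap-remove; returns 1 if found. Order is not preserved. */
int foodlist_remove(FoodList *list, int flat_index) {
    for (int i = 0; i < list->size; i++) {
        if (list->indexes[i] == flat_index) {
            list->indexes[i] = list->indexes[--list->size];
            return 1;
        }
    }
    return 0;
}
```

Swap-removal keeps the array dense at the cost of ordering, which does not matter here because the list is only iterated to spawn food.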

CPR env struct

This is the basic structure of the environment. It has everything you need. The grid is basically just an array of integers, each representing one entity.

The interesting part here is how we handle the observations, rewards, actions and terminals. These are all buffers we write into directly. This will help speed up the env when we use it from Python afterwards.
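To make the buffer idea concrete, here is a hypothetical struct layout in which the env holds pointers to observation, action, reward and terminal buffers it does not own, plus a flat-grid index helper. The struct name `CCpr`, the field names and the `unsigned char` observation type are assumptions, not taken from the repo.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct { int id; int r; int c; } Agent;

/* Hypothetical env struct: I/O buffers are pointers owned by the caller
   (e.g. arrays created on the Python side), so the env writes into them in place. */
typedef struct {
    int width, height, num_agents, vision;
    int *grid;                     /* width * height entity ids, row-major */
    Agent *agents;
    unsigned char *observations;   /* num_agents * (2*vision+1)^2 */
    int *actions;                  /* num_agents */
    float *rewards;                /* num_agents */
    unsigned char *terminals;      /* num_agents */
} CCpr;

/* Row-major flattening of (r, c) into the grid array. */
static inline int grid_index(const CCpr *env, int r, int c) {
    assert(r >= 0 && r < env->height && c >= 0 && c < env->width);
    return r * env->width + c;
}
```

Because the env only holds pointers, writing `env->rewards[i]` updates the caller's array directly, with no copy between C and Python.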

The other interesting thing to notice is interactive_food_agent_count. Interactive food requires at least 2 agents next to it to be collected. When an agent moves next to an interactive food, we use bitmasking (see here) to check whether another agent is next to it. This isn't required: we could instead iterate over the adjacent cells of an interactive food once an agent stands next to it, to see whether another agent is there. With bitmasking, however, we index directly on the food, removing any need for iteration while keeping very little memory overhead.
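One way to implement the bitmask idea, sketched under assumptions: each food cell gets one `uint8_t` in which a bit is set when an agent stands on that side of the food (up, down, left, right). Checking whether the food can be collected then only needs a bit count of that byte. The bit layout and helper names are hypothetical; the actual env may organize this differently.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical bits: which side of the food an agent occupies. */
#define FROM_UP    (1u << 0)
#define FROM_DOWN  (1u << 1)
#define FROM_LEFT  (1u << 2)
#define FROM_RIGHT (1u << 3)

/* Mark an agent on one side of the food at flat index `food`. */
static inline void mask_set(uint8_t *masks, int food, uint8_t side) {
    assert(side != 0 && (side & (side - 1)) == 0);   /* exactly one bit */
    masks[food] |= side;
}

/* Unmark that side when the agent leaves. */
static inline void mask_clear(uint8_t *masks, int food, uint8_t side) {
    masks[food] &= (uint8_t)~side;
}

/* Number of occupied sides (set bits, at most 4). */
static inline int agents_adjacent(const uint8_t *masks, int food) {
    uint8_t m = masks[food];
    int count = 0;
    while (m) { count += m & 1u; m >>= 1; }
    return count;
}

/* Yellow food is collected once at least two agents stand next to it. */
static inline int can_collect(const uint8_t *masks, int food) {
    return agents_adjacent(masks, food) >= 2;
}
```

A side benefit of a mask over a plain counter is that marking the same side twice is harmless, so no agent is counted twice.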

Next, we have our allocation mechanisms. allocate_ccpr is only used by the .c file, because there we need to instantiate the observations, rewards, terminals, etc., whereas when using the env from Python these buffers already exist. You can look at the code here
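The split between the standalone and Python paths can be sketched as two allocation functions: one that allocates only the state the env always owns (here, the grid), and one that additionally allocates the I/O buffers for the .c test file. `Env`, `init_env`, `allocate_env`, `free_initialized` and `free_allocated` are hypothetical names standing in for `allocate_ccpr` and its counterparts.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical env: only the fields needed to show the two allocation paths. */
typedef struct {
    int num_agents, obs_size, grid_size;
    unsigned char *observations;  /* num_agents * obs_size */
    int *actions;                 /* num_agents */
    float *rewards;               /* num_agents */
    unsigned char *terminals;     /* num_agents */
    int *grid;                    /* grid_size entity ids, always owned by the env */
} Env;

/* Frees only what init_env allocated. */
void free_initialized(Env *env) {
    free(env->grid);
    env->grid = NULL;
}

/* Frees the I/O buffers too; only valid after allocate_env. */
void free_allocated(Env *env) {
    free(env->observations);
    free(env->actions);
    free(env->rewards);
    free(env->terminals);
    free_initialized(env);
}

/* Python path: the caller created the buffers; the env allocates its own state only. */
int init_env(Env *env) {
    env->grid = calloc((size_t)env->grid_size, sizeof(int));
    return env->grid != NULL;
}

/* Standalone .c path: the env also owns observations, actions, rewards and terminals. */
int allocate_env(Env *env) {
    assert(env->num_agents > 0);
    size_t n = (size_t)env->num_agents;
    env->observations = calloc(n * (size_t)env->obs_size, sizeof(unsigned char));
    env->actions = calloc(n, sizeof(int));
    env->rewards = calloc(n, sizeof(float));
    env->terminals = calloc(n, sizeof(unsigned char));
    env->grid = NULL;
    if (!env->observations || !env->actions || !env->rewards || !env->terminals || !init_env(env)) {
        free_allocated(env);   /* free(NULL) is a no-op, so partial failure is safe */
        return 0;
    }
    return 1;
}
```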

Handling food

Nothing really special here, but we need functions to add, remove, and spawn new food. Here is the code for the latter.

What's important here is that we use our FoodList to iterate over the food. This reduces the iterations from height*width (iterating over the whole grid) to n, where n is the current number of food items on the grid.
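A minimal sketch of neighbor-based respawning that only walks the FoodList, assuming a single list holding every food, a flat `int` grid with `EMPTY == 0`, and the 8-neighborhood described earlier. `spawn_food`, `spawn_random` and the probability parameters are hypothetical. The number of foods is snapshotted at the start of the call so that newly spawned food does not spawn again in the same step.

```c
#include <assert.h>
#include <stdlib.h>

#define EMPTY 0   /* hypothetical id of an empty cell */

typedef struct { int *indexes; int size; int capacity; } FoodList;

/* Uniform double in [0, 1). */
static double uniform01(void) { return rand() / ((double)RAND_MAX + 1.0); }

/* Each existing food of `type` tries, with probability p, to spawn a copy
   into one random neighbor among its 8 neighbors. Cost is O(n foods), not O(width*height). */
void spawn_food(int *grid, int width, int height, FoodList *foods, int type, double p) {
    static const int dr[8] = {-1, -1, -1,  0, 0,  1, 1, 1};
    static const int dc[8] = {-1,  0,  1, -1, 1, -1, 0, 1};
    int n = foods->size;   /* snapshot: skip food spawned during this call */
    for (int i = 0; i < n; i++) {
        int idx = foods->indexes[i];
        if (grid[idx] != type || uniform01() >= p) continue;
        int k = rand() % 8;
        int r = idx / width + dr[k], c = idx % width + dc[k];
        if (r < 0 || r >= height || c < 0 || c >= width) continue;
        int target = r * width + c;
        if (grid[target] != EMPTY || foods->size >= foods->capacity) continue;
        grid[target] = type;
        foods->indexes[foods->size++] = target;
    }
}

/* Rare global respawn so the resource can recover from full depletion. */
void spawn_random(int *grid, int width, int height, FoodList *foods, int type, double p) {
    assert(width > 0 && height > 0);
    if (uniform01() >= p || foods->size >= foods->capacity) return;
    int target = rand() % (width * height);
    if (grid[target] != EMPTY) return;
    grid[target] = type;
    foods->indexes[foods->size++] = target;
}
```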

Observations

These are just a grid centered on each agent, parametrized by the vision variable. Nothing fancy here.
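The observation for one agent can be sketched as a copy of the `(2*vision+1) x (2*vision+1)` window of entity ids around it, in row-major order. This assumes the grid is padded with walls at least `vision` cells wide (the reset trick described next), so the window never leaves the grid. The function name and the `unsigned char` output type are hypothetical.

```c
#include <assert.h>

/* Copy the window of entity ids centered on (agent_r, agent_c) into `obs`, row-major. */
void compute_observation(const int *grid, int width, int height,
                         int agent_r, int agent_c, int vision, unsigned char *obs) {
    /* With a wall border of thickness `vision`, these always hold. */
    assert(agent_r - vision >= 0 && agent_r + vision < height);
    assert(agent_c - vision >= 0 && agent_c + vision < width);
    int side = 2 * vision + 1, k = 0;
    for (int dr = -vision; dr <= vision; dr++)
        for (int dc = -vision; dc <= vision; dc++)
            obs[k++] = (unsigned char)grid[(agent_r + dr) * width + (agent_c + dc)];
    assert(k == side * side);
}
```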

Reset function

The trick here is to place walls along the border of the grid, with a thickness equal to the observation radius, so that neither the observations nor the agents can go outside the grid. This obviously takes up a big portion of the grid if the observation radius is large, but those are just static cells, so it only costs a bit of memory and makes things much easier to handle. Note that this could be done with memsets.
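A sketch of the wall-padding part of reset, assuming hypothetical ids `EMPTY` and `WALL` and a border exactly `vision` cells thick. Placing agents and initial food on the remaining interior cells is left out.

```c
#include <assert.h>

#define EMPTY 0   /* hypothetical ids */
#define WALL  3

/* Fill a `vision`-wide wall border so agents never walk, and observation
   windows never read, outside the grid; everything else becomes empty. */
void place_border_walls(int *grid, int width, int height, int vision) {
    assert(2 * vision < width && 2 * vision < height);   /* leave some interior */
    for (int r = 0; r < height; r++) {
        for (int c = 0; c < width; c++) {
            int border = r < vision || r >= height - vision ||
                         c < vision || c >= width - vision;
            grid[r * width + c] = border ? WALL : EMPTY;
        }
    }
}
```

With a 6x5 grid and `vision = 1`, this leaves a 4x3 interior, i.e. 12 usable cells.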

Step function

We first have the main step function, which is very basic.

Most of the logic is therefore in the step_agent function, as you can see below.

Nothing really fancy here; it's actually pretty simple. We get the delta from each agent's action, compute the next grid_cell, and then run a list of checks based on what is on that cell and what is next to it.
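A stripped-down sketch of the main step and `step_agent`, assuming the action order from the list above (Up, Down, Right, Left, Nothing), a wall border so the next cell is always in bounds, and hypothetical ids where everything from `WALL` upward (walls and agents) blocks movement. Interactive food, FoodList bookkeeping and the step penalty are omitted; `step_all` and both signatures are hypothetical.

```c
#include <assert.h>

#define EMPTY     0   /* hypothetical ids */
#define GRAY_FOOD 1
#define WALL      3
#define AGENTS    4   /* agent i stored as AGENTS + i; ids >= WALL block movement */

typedef struct { int id, r, c; } Agent;

/* Action deltas in the order Up, Down, Right, Left, Nothing. */
static const int DR[5] = {-1, 1, 0,  0, 0};
static const int DC[5] = { 0, 0, 1, -1, 0};

/* Moves one agent and returns the reward from the cell it stepped on. */
float step_agent(int *grid, int width, Agent *agent, int action, float gray_reward) {
    assert(action >= 0 && action < 5);
    int next_r = agent->r + DR[action], next_c = agent->c + DC[action];
    int here = agent->r * width + agent->c;
    int next_cell = next_r * width + next_c;
    if (next_cell == here || grid[next_cell] >= WALL) return 0.0f;   /* no-op or blocked */
    float reward = grid[next_cell] == GRAY_FOOD ? gray_reward : 0.0f;
    grid[here] = EMPTY;
    grid[next_cell] = AGENTS + agent->id;
    agent->r = next_r;
    agent->c = next_c;
    return reward;
}

/* Main step: agents move one after another in a fixed order on the shared grid. */
void step_all(int *grid, int width, Agent *agents, int num_agents,
              const int *actions, float *rewards, float gray_reward) {
    for (int i = 0; i < num_agents; i++)
        rewards[i] = step_agent(grid, width, &agents[i], actions[i], gray_reward);
}
```

Because agents move one at a time, a second agent heading for a cell the first one just entered sees an agent there and stays put, which is how the fixed turn order avoids collisions.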

As mentioned above, in the interactive food logic you could drop the bitmasking and instead iterate over the adjacent cells of the interactive food.

Rendering

Rendering here is done with raylib. You can see the code in the GitHub repo. The current rendering is the bare minimum, and I'm not a big fan of UI design (as you can tell from how bad the rendered env looks), so I'll leave this up to you.

Well done! You should now have a working env in C. You can create a .c file, make your env, call your allocate and reset functions, and render it. Compile your code and you're good to go. For a basic .c file structure, look at my repo here

Cython

It's now time to port this code to Python! First, you need to write a .pyx file for Cython and expose your structs. There's absolutely no logic in it, so I'll leave it to you to look at the repo to see how it's done. It's just boring code, really.

Python

Same here, though a couple of things are worth mentioning. You need to inherit from pufferlib.PufferEnv and respect its structure. This is very similar to what you may have seen in other RL libraries such as gymnasium or pettingzoo. The key is to make sure your env can be vectorized, so pass widths, agent counts, and anything else you might want to vary between envs as lists. This also needs to match your Cython definition, obviously.

The code is boilerplate: you mainly implement the init, reset, step, render and close functions, which mostly just call the Cython init, reset, step, render and close functions, which in turn mostly just call the corresponding C functions…

Making your env usable with pufferlib

You can already use your env as is, as long as you compile things correctly. If you want to use it with pufferlib, add your environment to the ocean/environment.py file, in the MAKE_FNS part; it's very easy:

Add your env next to the other lazy_imports (if you've made your env in ocean as a C env).

The second thing is to add it to the extension paths in the setup.py file (see here).

After that, you can compile using

python setup.py build_ext --inplace

You're done! Your env is compiled and usable with pufferlib.

I'll stop the technical details here. If you don't want to bother too much, pufferlib lets you use the default policies and train your env with

python demo.py --env cpr --mode train

Make sure to create a .ini config file in config/ocean/. Use the existing ones as examples to set which model, hyperparameters and number of timesteps you want to train for.

Here is mine after 300M steps of training a basic RNN with PPO:

We clearly see that the agents end up depleting the food completely. There are a couple of directions to address that, namely:

- Train for longer

- Perform hyperparameter sweeps

- Improve the environment to include better incentives

I'm not sure any of them would solve the issue, but I'm going with the third one. See you in the next update!

This was a quick introduction to making cool, fast environments with pufferlib. Don't forget to star the pufferlib repo if you find it cool, and if you want to contribute, you're welcome!