As large language models (LLMs) like LLaMA 4 push the boundaries of size, intelligence, and versatility, one question often goes unnoticed behind the scenes:

How do we train these massive models efficiently and reliably?

It turns out that choosing the right training settings, such as how fast each layer learns or how its weights are initialized, is just as important as the model's size or architecture. These settings, called hyperparameters, can make or break a model's ability to learn effectively. And with trillions of parameters, the cost of getting it wrong becomes enormous.

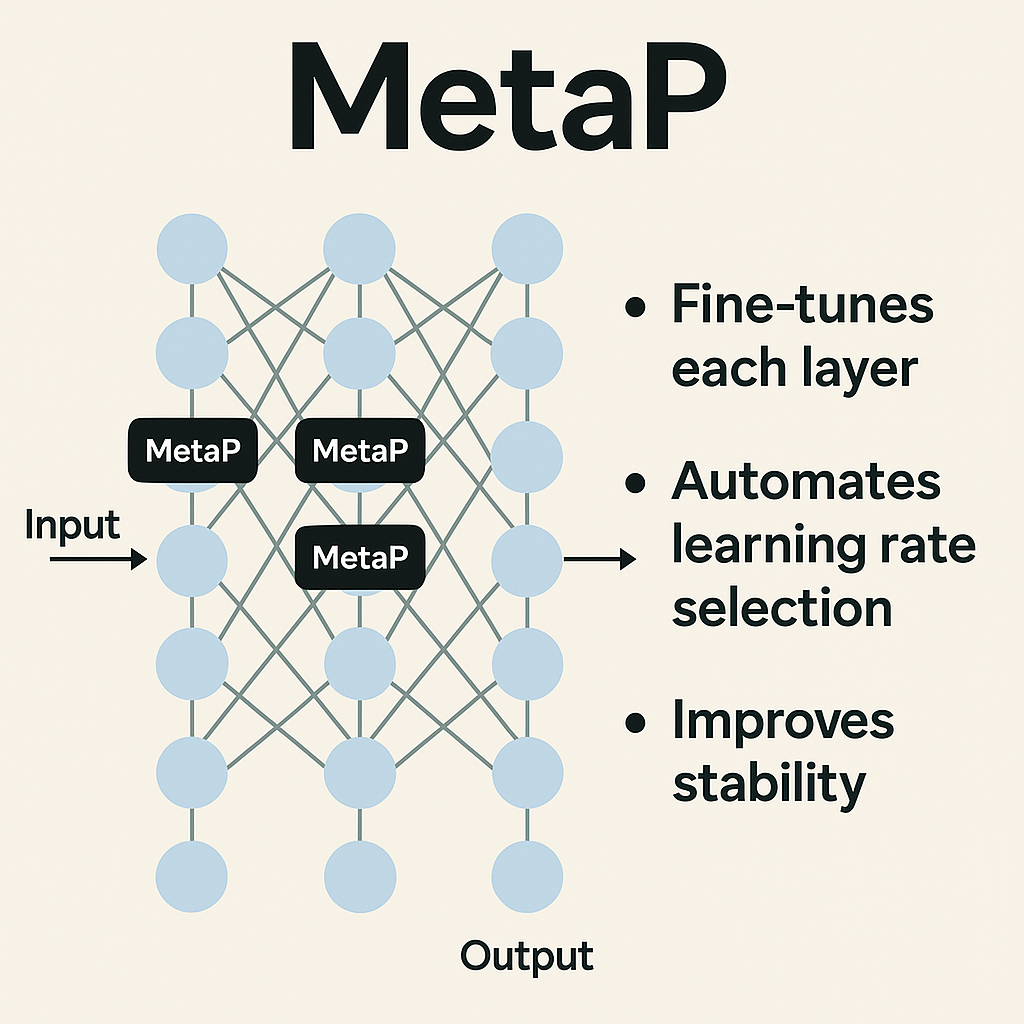

To address this, Meta introduced MetaP, a powerful internal technique used in LLaMA 4 that takes a smarter, more systematic approach to tuning these critical parameters. Rather than relying on guesswork or grid search, MetaP allows researchers to set per-layer learning rates and initialization scales that generalize across different model sizes and training configurations.

In this article, we'll explore how MetaP works, why it matters, and how it helped make LLaMA 4 one of the most advanced open models in the world.

MetaP

MetaP stands for "Meta Parameters," and it refers to a systematic and automated method for setting per-layer hyperparameters across different model configurations. Instead of applying a single global learning rate to the entire model, MetaP assigns custom learning rates and initialization values to each individual layer of the network.

MetaP is essentially a smart way to tune each individual layer inside huge AI models like LLaMA 4 during training.

Instead of letting all the layers in the model learn at the same pace, MetaP allows the architecture to:

- Let some layers (typically the earlier layers) learn faster,

- While letting others (usually the middle or deeper layers) learn more slowly and carefully.

It does this by adjusting key hyperparameters like:

1- The learning rate (how fast a layer should learn).

2- The initial weight scale (how big the values in that layer start off as).

MetaP focuses on three capabilities:

1. Per-layer learning rate

Instead of a single learning rate for the entire model, each layer gets its own. This helps ensure the right parts learn at the right pace, making the model smarter and more balanced.
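As a rough illustration of what "each layer gets its own" can look like, the sketch below gives every layer a rate that shrinks geometrically with depth. The decay rule, the function name `layerwise_learning_rates`, and the values of `base_lr` and `decay` are assumptions made for this example; Meta has not published the rule MetaP actually uses.

```python
def layerwise_learning_rates(num_layers, base_lr=1e-3, decay=0.9):
    """Return one learning rate per layer, shrinking geometrically with depth.

    Hypothetical rule for illustration only: layer 0 gets base_lr and each
    deeper layer gets `decay` times the rate of the layer before it.
    """
    if num_layers <= 0:
        raise ValueError("num_layers must be positive")
    return [base_lr * decay ** depth for depth in range(num_layers)]


rates = layerwise_learning_rates(num_layers=4)
for depth, lr in enumerate(rates):
    print(f"layer {depth}: lr = {lr:.6f}")
```

In PyTorch, a list like this can be turned into optimizer parameter groups, where each group carries its own `lr` value.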

2. Initialization scales

When training starts, all weights (the values that the model adjusts while learning) need to be initialized properly. MetaP sets the right size for these weights per layer, which helps prevent problems like exploding or vanishing gradients.
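To see why the initial weight scale matters, consider a stack of plain linear layers whose weights are independent, zero-mean, and have standard deviation `weight_std`. Under those assumptions each layer multiplies the variance of its input signal by `fan_in * weight_std**2`, so the signal shrinks or grows exponentially with depth unless that factor stays close to 1. The sketch below works through that arithmetic. It ignores nonlinearities, the names are hypothetical, and it is not MetaP's actual rule.

```python
import math


def signal_variance_after(depth, fan_in, weight_std, input_var=1.0):
    """Variance of activations after `depth` linear layers (no nonlinearity).

    Assumes zero-mean, independent weights and inputs, so each layer
    multiplies the variance by fan_in * weight_std**2.
    """
    per_layer_gain = fan_in * weight_std ** 2
    return input_var * per_layer_gain ** depth


fan_in, depth = 64, 32
balanced_std = 1.0 / math.sqrt(fan_in)  # makes fan_in * std**2 equal to 1

for label, std in [("too small", 0.5 * balanced_std),
                   ("balanced", balanced_std),
                   ("too large", 2.0 * balanced_std)]:
    var = signal_variance_after(depth, fan_in, std)
    print(f"{label:>9}: std={std:.4f} -> variance after {depth} layers = {var:.3e}")
```

With the scale halved the signal collapses toward zero, and with the scale doubled it blows up. The same effect applies to gradients flowing backward, which is why a per-layer scale is worth setting deliberately.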

3. Transferability across configurations

The same MetaP settings work well across different training setups, whether you're training a small model or a 2 trillion parameter giant. This saves time and effort at every scale.

This approach matters because it reduces cost: tuning a large model by trial and error would take forever and cost a lot. MetaP cuts down on that trial and error by providing reliable, automated setups that help prevent noisy output or crashes and that apply to both large and small models.
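Meta has not published how MetaP carries settings from one scale to another. One publicly documented idea in a similar spirit is width-based scaling as in µP (maximal update parametrization), where hyperparameters tuned on a small proxy model are rescaled for a wider one. The sketch below uses a simplified version of that idea purely as a stand-in: for hidden layers, the learning rate is assumed to scale with 1/width and the initialization standard deviation with 1/sqrt(width). `LayerSettings`, `transfer_hidden_settings`, and all numeric values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class LayerSettings:
    learning_rate: float
    init_std: float


def transfer_hidden_settings(base, base_width, target_width):
    """Rescale hidden-layer settings tuned at base_width to target_width.

    Assumed rule (illustrative, not MetaP's): learning rate scales with
    1/width and init std scales with 1/sqrt(width).
    """
    ratio = base_width / target_width
    return LayerSettings(
        learning_rate=base.learning_rate * ratio,
        init_std=base.init_std * math.sqrt(ratio),
    )


# Settings tuned once on a small proxy model (hypothetical values).
proxy = LayerSettings(learning_rate=1e-3, init_std=0.02)

for width in (256, 1024, 4096):
    s = transfer_hidden_settings(proxy, base_width=256, target_width=width)
    print(f"width {width:>4}: lr = {s.learning_rate:.2e}, init_std = {s.init_std:.4f}")
```

The point of a rule like this is that the expensive search happens once on the small model, and larger models reuse the result through a formula instead of a new sweep.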

MetaP with LLaMA 4

MetaP played a key role in training all versions of the LLaMA 4 models by helping each layer in the model learn at the right speed. For example, in LLaMA 4 Maverick, which uses a special design called Mixture of Experts (MoE), MetaP made sure the layers stayed stable and efficient even when only a small part of the model was active at a time. In LLaMA 4 Scout, which is designed to handle very long inputs (up to 10 million tokens), MetaP helped keep the training balanced across many layers, preventing issues like forgetting or overfitting. And for the massive LLaMA 4 Behemoth model with nearly 2 trillion parameters, MetaP made it possible to train such a large system safely and effectively, allowing it to be used as a teacher to improve smaller models. By using MetaP across all these models, Meta AI avoided manual tuning, reduced errors, and trained more efficiently, saving time and resources and improving overall model quality.

Comparison to Traditional Hyperparameter Tuning

Code Example

Here's a code example that simulates how MetaP works:

```python
import torch
import torch.nn as nn
import torch.optim as optim


class Simplelayers(nn.Module):
    def __init__(self):
        super().__init__()
        self.embedding = nn.Embedding(512, 64)
        self.layer1 = nn.Linear(64, 64)
        self.layer2 = nn.Linear(64, 64)
        self.output = nn.Linear(64, 2)
        # MetaP-style initialization (weight initialization)
        self._custom_initialize()

    def forward(self, x):
        x = self.embedding(x)
        x = torch.relu(self.layer1(x))
        x = torch.relu(self.layer2(x))
        out = self.output(x)
        return out

    def _custom_initialize(self):
        # Set different init scales (MetaP-style)
        nn.init.xavier_uniform_(self.layer1.weight, gain=1.0)
        nn.init.xavier_uniform_(self.layer2.weight, gain=0.5)


model = Simplelayers()

# Simulate MetaP: assign per-layer learning rates
optimizer = optim.Adam([
    {'params': model.embedding.parameters(), 'lr': 1e-4},
    {'params': model.layer1.parameters(), 'lr': 1e-3},  # Faster learning
    {'params': model.layer2.parameters(), 'lr': 5e-4},  # Slower learning
    {'params': model.output.parameters(), 'lr': 1e-4},
])

# Sample input
x = torch.randint(0, 512, (8, 10))  # batch of 8 sequences, each of length 10
output = model(x)
print("Output shape:", output.shape)  # torch.Size([8, 10, 2])
```

Do Researchers Set These Manually?

– In small models or research experiments, yes: researchers often test different gain values manually.

– In large-scale training (like LLaMA 4), these are typically set automatically, layer by layer, based on:

- The layer type

- Its position in the model

- Its role in training (e.g., MoE expert vs. shared layer)

This automation is part of what MetaP does: it learns or decides optimal gain and learning rate values for each layer instead of relying on human intuition.
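A minimal sketch of what rule-based assignment could look like is shown below. It picks a learning rate and an initialization gain from the three properties listed above: layer type, position, and role. The `LayerInfo` record, the `assign_settings` function, and every multiplier are made-up placeholders for illustration; they are not values or rules from MetaP.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LayerInfo:
    name: str
    kind: str   # e.g. "embedding", "attention", "mlp"
    depth: int  # position in the stack, 0 = first
    role: str   # e.g. "shared" or "expert"


def assign_settings(layer, base_lr=1e-3, base_gain=1.0):
    """Pick (learning_rate, init_gain) from layer type, position, and role.

    Every multiplier below is a placeholder chosen for illustration.
    """
    lr, gain = base_lr, base_gain
    if layer.kind == "embedding":
        lr *= 0.1                  # type rule: smaller steps for embeddings
    lr *= 0.95 ** layer.depth      # position rule: deeper layers move slower
    if layer.role == "expert":
        gain *= 0.5                # role rule: smaller init for MoE experts
    return lr, gain


layers = [
    LayerInfo("embed", "embedding", 0, "shared"),
    LayerInfo("block1.attn", "attention", 1, "shared"),
    LayerInfo("block1.expert0", "mlp", 1, "expert"),
]
for layer in layers:
    lr, gain = assign_settings(layer)
    print(f"{layer.name:<16} lr={lr:.2e} gain={gain}")
```

Keeping the rules in one function means a new layer type or role only needs a new rule, not a new round of manual tuning for every layer.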

Conclusion

As large language models continue to grow in size and complexity, the way we train them needs to evolve. MetaP represents a powerful step forward in automating and optimizing the training of these massive models. By intelligently assigning per-layer learning rates and initialization settings, MetaP removes the guesswork from hyperparameter tuning, making training more stable, efficient, and scalable, even for models with trillions of parameters.

In the case of LLaMA 4, MetaP played a crucial role in enabling the successful training of models like Maverick, Scout, and Behemoth. It helped maintain stability across deep and diverse architectures, supported long-context learning, and ensured robust performance across languages, modalities, and tasks.

As AI continues to scale, innovations like MetaP will be essential in making cutting-edge models more accessible, efficient, and effective for real-world use.