We previously understood a perceptron as the output that results from a linear combination of inputs and weights (summing the products of inputs and their corresponding weights is what we call linear) passed through an activation function. As mentioned, this serves as the basic unit of neural networks, but before we proceed down this path, it’s worth learning what exactly neural networks are.

Neurons and layers

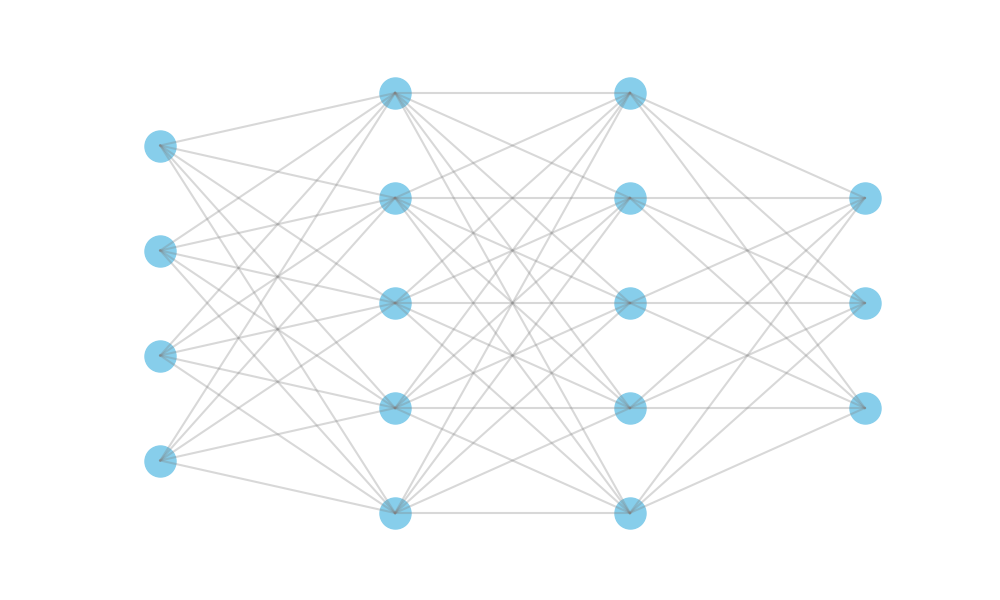

The basic neural network architecture can be understood as layers of neurons, with each layer connected to the one after it by weights.

The neural network above has 4 layers: the input layer of 4 neurons, 2 “hidden” layers of 5 neurons each, and the output layer of 3 neurons.

The layers of neurons generally act the same, with slight differences depending on what type of layer each one is.

The input data is fed directly into the input layer, which really only acts as a container to store said data.

In hidden layers, the values of the previous layer (which could be the input layer or another hidden layer) have a weighted sum operation performed on them. These results “move” into the neurons of the hidden layer, where they are added to biases and have activation functions applied to them. The result is the value of that hidden layer.

The output layer works exactly like the hidden layers, except that instead of being passed on to another layer for further computation, its values represent the output or prediction of the network.

The computation described here is intentionally not very complete or well explained. A brief semi-explanation is given only to show the similarity to the perceptron. We’ll come to understand it more as time goes on.
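For readers who prefer code, the layer-by-layer computation described above might be sketched roughly as follows for the 4-5-5-3 network in the figure. This is only an illustration under stated assumptions: the weights and biases are random placeholders, the sigmoid is picked arbitrarily as the activation, and the names `make_layer`, `layer_forward` and `forward` are hypothetical helpers, not part of any library.

```python
import math
import random

def sigmoid(x):
    # Assumed activation for this sketch; squashes any real number into (0, 1).
    return 1.0 / (1.0 + math.exp(-x))

def make_layer(n_inputs, n_neurons, rng):
    # Placeholder parameters: one weight per (neuron, input) pair, one bias per neuron.
    weights = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_neurons)]
    biases = [rng.uniform(-1, 1) for _ in range(n_neurons)]
    return weights, biases

def layer_forward(values, weights, biases):
    # For each neuron: weighted sum of the previous layer's values, plus bias, then activation.
    return [
        sigmoid(sum(w * v for w, v in zip(neuron_weights, values)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    values = inputs  # the input layer only stores the data
    for weights, biases in layers:
        values = layer_forward(values, weights, biases)
    return values  # the values of the last layer are the prediction

rng = random.Random(0)
sizes = [4, 5, 5, 3]  # input layer, two hidden layers, output layer
layers = [make_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
prediction = forward([0.2, 0.7, 0.1, 0.9], layers)
print(len(prediction))  # 3, one value per output neuron
```

Note that four layers of neurons are connected by only three sets of weights, since the input layer has nothing feeding into it.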

It may seem strange to use these activation functions everywhere in our architecture. The ethos of neural networks is to perform potentially unfathomable amounts of simple calculations (weighted sums). Using activation functions seems to contradict this, so why do we use them?

It turns out that there are important reasons to use activation functions. Let us explore what exactly activation functions are and why their added complexity is a welcome trade-off.

Normalisation

As discussed in the last chapter, the activation function acts as a normalisation step for the outputs, but also for the hidden layers. The values resulting from the weighted sum can potentially be very large, so the activation function normalises them to a range like [0, 1]. It may be difficult to see the usefulness of normalising anything that isn’t the input, but there is a simple explanation of why it is indeed needed.
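To make this concrete, here is a small sketch of a weighted sum growing large and then being squashed back into [0, 1]. The input and weight values are made up purely for illustration, and the sigmoid (described later in this chapter) is assumed as the activation.

```python
import math

def sigmoid(x):
    # Assumed activation for this sketch; maps any real number into the range [0, 1].
    return 1.0 / (1.0 + math.exp(-x))

# Made-up raw values and weights, chosen only to produce a large sum.
inputs = [120.0, 85.0, 300.0]
weights = [0.5, -0.2, 0.1]

# Roughly 60 - 17 + 30 = 73, far outside [0, 1].
weighted_sum = sum(w * x for w, x in zip(weights, inputs))

# After the activation, the value is back inside [0, 1].
squashed = sigmoid(weighted_sum)
print(weighted_sum, squashed)
```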

First, we established that normalising inputs is needed in order to remove the dependence of the inputs on the arbitrary units used to measure them, as well as to preserve the meaning of the relative sizes of weights. It turns out that this reasoning works for hidden layers as well. Now hear me out… a neural network with several hidden layers can simply be seen as the input layer connected (by weights) to another neural network, with the first hidden layer now serving as that network’s input layer. If this new set-up is to avoid the problems we have already listed, we must normalise our new “input layer”. Applied inductively, this reasoning implies that all hidden layers should be normalised just like the input layer, since in a way the values of the hidden layers serve as “intermediate inputs” to the rest of the network. Using an activation function on the output layer may not be required, but depending on the type of problem the network is created to solve, it may be (the example of the previous chapter needed output activation because the required output was binary).

Furthermore, normalising also helps with the proper convergence and stability of the network. This may not mean anything right now, but it will become clear as soon as we discuss training.

Following this reasoning, there are several activation functions to be found.

The step function is the simplest activation function of this kind and was the activation used in the example last chapter. For reasons we’ll get to later, training a network with step activation is … complicated, so it’s generally not used outside perceptrons.

The sigmoid acts like a tamer version of the step function, aiming for the same general effect of [0, 1] normalisation but much more gradually. This allows it to solve a lot of the problems of the step function, and it is thus more common.

The tanh (hyperbolic tangent) is fairly similar to the sigmoid, with many of the same properties. The main difference is that it normalises between [-1, 1] instead of [0, 1].
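The three activations above can be written in a few lines each. This is a minimal sketch: the step threshold of 0 is an assumption (the previous chapter’s example may have used a different one), while the sigmoid and tanh follow their standard definitions.

```python
import math

def step(x):
    # Assumed threshold at 0: jumps abruptly from 0 to 1.
    return 1.0 if x >= 0 else 0.0

def sigmoid(x):
    # Smooth transition from 0 to 1, passing through 0.5 at x = 0.
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x):
    # Same S-shape as the sigmoid, but ranging from -1 to 1 and centred on 0.
    return math.tanh(x)

# Compare how each function treats the same inputs.
for x in (-5.0, -0.5, 0.0, 0.5, 5.0):
    print(x, step(x), round(sigmoid(x), 3), round(tanh(x), 3))
```

Printing the values side by side shows the difference in character: the step function flips between its two values at the threshold, while the sigmoid and tanh move gradually between their bounds.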

We have seen the purpose of activation functions as normalisers, along with several examples that embody this role. This explanation for their existence is elegant, understandable … and Wrong. Well, it isn’t wrong per se, just not general and very incomplete.

The biggest counterexample to this is the ReLU (Rectified Linear Unit) activation.

The ReLU activation function does not normalise, as it is unbounded. Despite this, it is probably the most commonly used activation function in neural networks.
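ReLU is conventionally defined as max(0, x): negative values become 0 and positive values pass through unchanged. The short sketch below uses arbitrary sample inputs to show why that leaves it unbounded above.

```python
def relu(x):
    # Negative inputs are clipped to 0; positive inputs pass through untouched.
    return max(0.0, x)

# Arbitrary sample inputs: large positive values come out just as large.
samples = [-1000.0, -3.0, 0.0, 3.0, 1000.0]
outputs = [relu(x) for x in samples]
print(outputs)  # [0.0, 0.0, 0.0, 3.0, 1000.0]
```

However large the input, the output is equally large, so nothing is being squeezed into a fixed range.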

The understanding of activation functions as normalising steps is intuitive and sufficient in most cases, but there is clearly more to the story… for those who will seek it.