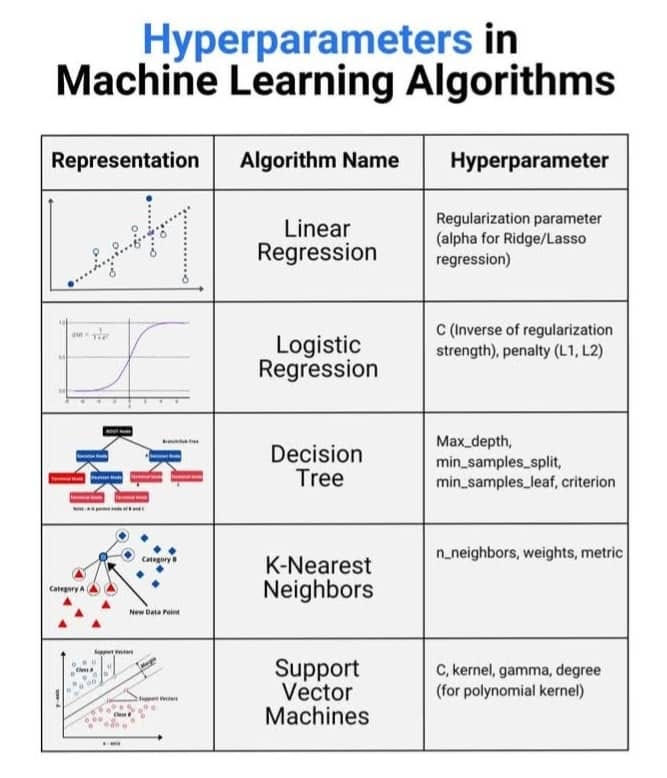

Machine learning algorithms are powerful tools that uncover hidden patterns in data, but their true potential is unlocked by careful configuration.

These algorithms aren’t static entities; they come with adjustable settings that significantly influence their learning process and, ultimately, their performance. These settings are known as hyperparameters.

Think of a machine learning algorithm as a sophisticated recipe. The data are your ingredients, and the algorithm is the cooking method. Hyperparameters are like the adjustable knobs on your oven (temperature, cooking time) or the specific measurements you choose when adding ingredients. Setting them correctly is crucial for achieving the desired dish: a well-performing model.

Unlike the model’s internal parameters (the weights and biases learned during training), hyperparameters are set before the training process begins. They govern the structural aspects of the model and the optimization strategy. Choosing the right hyperparameters can drastically affect a model’s accuracy, training speed, and ability to generalize. This often requires experimentation and a solid understanding of the algorithm.
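To make the distinction concrete, here is a minimal sketch using scikit-learn's `Ridge`. The toy data is invented for illustration; the point is only that `alpha` is supplied before training, while `coef_` and `intercept_` exist only after `fit`.

```python
import numpy as np
from sklearn.linear_model import Ridge

# Toy data, invented for illustration: y is roughly 2 * x
X = np.array([[1.0], [2.0], [3.0], [4.0]])
y = np.array([2.1, 3.9, 6.2, 7.8])

model = Ridge(alpha=1.0)              # hyperparameter: chosen before training
hyperparams = model.get_params()      # the settings we supplied or left at defaults
fitted_before = hasattr(model, "coef_")  # False: nothing has been learned yet

model.fit(X, y)                       # parameters: learned from the data
print("hyperparameter alpha:", hyperparams["alpha"])
print("learned coefficient:", model.coef_, "intercept:", model.intercept_)
```

`get_params()` is also a convenient way to see which hyperparameters an estimator exposes before deciding what to tune.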

In this post, we will explore key hyperparameters in common machine learning algorithms and discuss best practices for tuning them effectively.

Hyperparameters influence:

- Model Complexity (e.g., tree depth in Decision Trees)

- Regularization (e.g., preventing overfitting in Logistic Regression)

- Distance Metrics (e.g., in K-Nearest Neighbors)

- Convergence Speed (e.g., learning rate in Neural Networks)

Poor hyperparameter choices can lead to underfitting, overfitting, or inefficient training. Let’s examine key examples across different algorithms.

1. Linear Regression

While often considered a simpler algorithm, Linear Regression benefits from hyperparameters when dealing with multicollinearity or the risk of overfitting.

a. Regularization Parameter (alpha for Ridge/Lasso Regression):

- Concept: Regularization techniques like Ridge (L2) and Lasso (L1) add a penalty term to the cost function to shrink the model’s coefficients. This helps prevent the model from becoming too complex and fitting the noise in the training data.

- `alpha` (in scikit-learn): This hyperparameter controls the strength of the regularization.

i. A higher alpha increases the penalty, leading to smaller coefficients and a simpler model, which can help with overfitting but might underfit if set too high.

ii. A lower alpha reduces the penalty, making the model more flexible and potentially leading to overfitting if not carefully managed.

    from sklearn.linear_model import Ridge

    ridge_model = Ridge(alpha=1.0)  # Regularization strength
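The shrinking effect of `alpha` can be observed directly. The following is a small sketch on synthetic data (the coefficients and noise level are made up for the example) that fits `Ridge` with three values of `alpha` and records the size of the learned coefficient vector.

```python
import numpy as np
from sklearn.linear_model import Ridge

# Synthetic regression data, invented for illustration
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5))
true_coef = np.array([3.0, -2.0, 0.0, 1.5, 0.5])
y = X @ true_coef + rng.normal(scale=0.5, size=50)

coef_norms = {}
for alpha in [0.01, 1.0, 100.0]:
    model = Ridge(alpha=alpha).fit(X, y)
    # Euclidean norm of the coefficient vector: smaller means more shrinkage
    coef_norms[alpha] = float(np.linalg.norm(model.coef_))
    print(f"alpha={alpha:>6}: ||coef|| = {coef_norms[alpha]:.3f}")
```

The norm falls as `alpha` grows, which is the "smaller coefficients, simpler model" behaviour described above.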

2. Logistic Regression

Used for binary and multi-class classification, Logistic Regression also employs regularization to improve its generalization ability.

a. C (Inverse of Regularization Strength):

- Concept: Similar to `alpha` in linear regression, `C` controls the regularization strength. However, `C` is the inverse of the regularization parameter.

- A higher `C` means weaker regularization, allowing the model to fit the training data more closely, potentially leading to overfitting.

- A lower `C` means stronger regularization, forcing the model to have smaller coefficients and potentially underfitting.

b. penalty (L1, L2):

- Concept: Specifies the type of regularization to be applied.

- `L1` (Lasso): Can drive some feature coefficients to exactly zero, effectively performing feature selection.

- `L2` (Ridge): Shrinks coefficients towards zero but rarely makes them exactly zero.

    from sklearn.linear_model import LogisticRegression

    log_reg = LogisticRegression(C=0.5, penalty='l2')  # L2 regularization
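The difference between the two penalties shows up in how many coefficients end up exactly zero. The sketch below uses a synthetic dataset and an arbitrarily chosen `C=0.05`; it also sets `solver="liblinear"` explicitly, because scikit-learn's default `lbfgs` solver does not support the L1 penalty.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic data: only 3 of the 20 features are informative
X, y = make_classification(
    n_samples=300, n_features=20, n_informative=3, random_state=0
)

# 'liblinear' supports both 'l1' and 'l2', so the comparison is like for like
l1_model = LogisticRegression(C=0.05, penalty="l1", solver="liblinear").fit(X, y)
l2_model = LogisticRegression(C=0.05, penalty="l2", solver="liblinear").fit(X, y)

zeros_l1 = int(np.sum(l1_model.coef_ == 0))
zeros_l2 = int(np.sum(l2_model.coef_ == 0))
print("coefficients exactly zero with L1:", zeros_l1)
print("coefficients exactly zero with L2:", zeros_l2)
```

Exact counts depend on the data and on `C`, so treat the numbers as illustrative rather than typical.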

3. Decision Tree

Decision Trees learn by recursively splitting the data based on feature values. Hyperparameters control the structure and complexity of these trees.

a. max_depth: The maximum depth of the tree. A deeper tree can capture more complex relationships but is more prone to overfitting.

b. min_samples_split: The minimum number of samples required to split an internal node. Higher values prevent the creation of very specific splits based on small subsets of data.

c. min_samples_leaf: The minimum number of samples required to be at a leaf node. Similar to min_samples_split, this helps prevent the tree from becoming too sensitive to individual data points.

d. criterion: The function used to measure the quality of a split (e.g., ‘gini’ for Gini impurity or ‘entropy’ for information gain in classification).

    from sklearn.tree import DecisionTreeClassifier

    tree = DecisionTreeClassifier(max_depth=5, min_samples_split=10, criterion='entropy')
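One way to see the effect of `max_depth` is to compare training and test accuracy at a few depths. This is a rough sketch on a synthetic, deliberately noisy dataset (`flip_y=0.1` randomizes about 10% of the labels); the dataset and the depth values are assumptions made for the example.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data with some label noise so that overfitting is possible
X, y = make_classification(
    n_samples=400, n_features=10, n_informative=4, flip_y=0.1, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

results = {}
for depth in [2, 5, None]:  # None lets the tree grow until leaves are pure
    clf = DecisionTreeClassifier(max_depth=depth, random_state=0)
    clf.fit(X_train, y_train)
    results[depth] = (
        clf.get_depth(),
        clf.score(X_train, y_train),
        clf.score(X_test, y_test),
    )
    print(f"max_depth={depth}: actual depth={results[depth][0]}, "
          f"train={results[depth][1]:.2f}, test={results[depth][2]:.2f}")
```

A large gap between training and test accuracy for the unrestricted tree is the usual sign that it has memorized noise.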

4. K-Nearest Neighbors (KNN)

KNN is a non-parametric algorithm that classifies or regresses data points based on the majority class or average value of their nearest neighbors.

a. n_neighbors: The number of neighboring data points to consider when making a prediction.

- A small `n_neighbors` can make the model sensitive to noise in the data.

- A large `n_neighbors` can smooth the decision boundaries but might miss local patterns.

b. weights: The weight assigned to each neighbor.

- ‘uniform’: All neighbors are weighted equally.

- ‘distance’: Neighbors closer to the query point have a greater influence.

c. metric: The distance metric to use (e.g., ‘euclidean’, ‘manhattan’, ‘minkowski’). The choice of metric can significantly affect the results depending on the data distribution.

    from sklearn.neighbors import KNeighborsClassifier

    knn = KNeighborsClassifier(n_neighbors=5, weights='distance', metric='euclidean')
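The `weights` option can change a prediction outright. In the tiny hand-made example below (three one-dimensional points chosen purely for illustration), one class-0 point sits very close to the query and two class-1 points sit farther away.

```python
from sklearn.neighbors import KNeighborsClassifier

# Hand-made 1-D data: one near neighbor of class 0, two farther ones of class 1
X = [[0.1], [1.0], [1.1]]
y = [0, 1, 1]
query = [[0.0]]

uniform_knn = KNeighborsClassifier(n_neighbors=3, weights="uniform").fit(X, y)
distance_knn = KNeighborsClassifier(n_neighbors=3, weights="distance").fit(X, y)

# Uniform: a plain majority vote, 2 votes for class 1 vs 1 vote for class 0
pred_uniform = int(uniform_knn.predict(query)[0])
# Distance: votes weighted by 1/distance, so 10.0 for class 0 vs about 1.9 for class 1
pred_distance = int(distance_knn.predict(query)[0])

print("uniform weights  ->", pred_uniform)
print("distance weights ->", pred_distance)
```

With uniform weights the majority class wins; with distance weights the single close neighbor outweighs the two distant ones.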

5. Support Vector Machines (SVM)

SVMs aim to find the optimal hyperplane that separates different classes or predicts a continuous value.

a. C (Regularization Parameter): Similar to Logistic Regression, C controls the trade-off between achieving a low training error and a low testing error (generalization).

- A high `C` tries to classify all training examples correctly, potentially leading to a complex model and overfitting.

- A low `C` allows some misclassifications to achieve a simpler, more generalizable model.

b. kernel: Specifies the kernel function to use. Different kernels allow SVMs to model non-linear relationships (e.g., ‘linear’, ‘poly’, ‘rbf’, ‘sigmoid’).

c. gamma: Kernel coefficient for ‘rbf’, ‘poly’, and ‘sigmoid’. It influences the reach of a single training example.

- A high `gamma` means each training example has only a local influence, potentially leading to overfitting.

- A low `gamma` means each training example has a wider influence, potentially leading to underfitting.

d. degree (for polynomial kernel): The degree of the polynomial kernel function.

    from sklearn.svm import SVC

    svm_model = SVC(C=1.0, kernel='rbf', gamma='scale')
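To illustrate how `gamma` moves an RBF SVM between underfitting and overfitting, the sketch below trains on scikit-learn's synthetic `make_moons` data with three hand-picked `gamma` values. The dataset, noise level, and values are assumptions for the example, not recommendations.

```python
from sklearn.datasets import make_moons
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Two interleaving half-circles with noise: not linearly separable
X, y = make_moons(n_samples=200, noise=0.3, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

scores = {}
for gamma in [0.01, 1.0, 100.0]:
    svm = SVC(C=1.0, kernel="rbf", gamma=gamma).fit(X_train, y_train)
    scores[gamma] = (svm.score(X_train, y_train), svm.score(X_test, y_test))
    print(f"gamma={gamma:>6}: train={scores[gamma][0]:.2f}, "
          f"test={scores[gamma][1]:.2f}")
```

A very high `gamma` typically fits the training set almost perfectly while the test score lags behind, whereas a very low `gamma` behaves close to a linear boundary.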

1. Grid Search

- Tests all combinations (e.g., `C=[0.1, 1, 10]` and `penalty=['l1','l2']`).

- Best for small hyperparameter spaces.
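A minimal sketch of that grid with scikit-learn's `GridSearchCV` might look like the following. The synthetic dataset is an assumption, and `solver="liblinear"` is set because it supports both penalties in the grid.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV

X, y = make_classification(n_samples=200, n_features=10, random_state=0)

# 3 values of C x 2 penalties = 6 candidate settings, each scored with 5-fold CV
param_grid = {"C": [0.1, 1, 10], "penalty": ["l1", "l2"]}
search = GridSearchCV(LogisticRegression(solver="liblinear"), param_grid, cv=5)
search.fit(X, y)

n_candidates = len(search.cv_results_["params"])
print("candidates evaluated:", n_candidates)
print("best parameters:", search.best_params_)
print("best mean CV accuracy:", round(search.best_score_, 3))
```

The number of candidates is the product of the list lengths, which is why grid search becomes expensive quickly as more hyperparameters are added.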

2. Random Search

- Randomly samples combinations (often more efficient than Grid Search when the search space is large).
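As a sketch, scikit-learn's `RandomizedSearchCV` can draw a fixed number of settings from continuous distributions instead of enumerating a grid. The ranges below, the use of `scipy.stats.loguniform`, and the synthetic data are illustrative assumptions.

```python
from scipy.stats import loguniform
from sklearn.datasets import make_classification
from sklearn.model_selection import RandomizedSearchCV
from sklearn.svm import SVC

X, y = make_classification(n_samples=200, n_features=10, random_state=0)

# Sample C and gamma on a log scale rather than from a fixed list
param_distributions = {
    "C": loguniform(1e-2, 1e2),
    "gamma": loguniform(1e-3, 1e1),
}
search = RandomizedSearchCV(
    SVC(kernel="rbf"),
    param_distributions,
    n_iter=10,        # budget: exactly 10 sampled settings
    cv=3,
    random_state=0,   # makes the sampled settings reproducible
)
search.fit(X, y)

print("settings tried:", len(search.cv_results_["params"]))
print("best parameters:", search.best_params_)
```

The cost is controlled by `n_iter` rather than by the size of the space, which is the main practical advantage over an exhaustive grid.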

3. Bayesian Optimization

- Uses past evaluations to predict promising settings (great for expensive models).

4. Automated Instruments

- Libraries like Optuna, HyperOpt, and Scikit-learn’s `HalvingGridSearchCV` optimize tuning.
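Here is a hedged sketch of `HalvingGridSearchCV`, which starts all candidates on a small slice of the data and keeps only the best fraction for each following round. It is still marked experimental in scikit-learn, so the `enable_halving_search_cv` import is required first; the grid and dataset are made up for the example.

```python
# The experimental flag must be imported before HalvingGridSearchCV
from sklearn.experimental import enable_halving_search_cv  # noqa: F401
from sklearn.datasets import make_classification
from sklearn.model_selection import HalvingGridSearchCV
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=500, n_features=10, random_state=0)

# 3 x 3 = 9 candidates enter the first round
param_grid = {"max_depth": [2, 5, 10], "min_samples_split": [2, 10, 20]}
search = HalvingGridSearchCV(
    DecisionTreeClassifier(random_state=0),
    param_grid,
    factor=3,         # keep roughly the top third after each round
    cv=3,
    random_state=0,
)
search.fit(X, y)

print("candidates per round:", search.n_candidates_)
print("samples per round:", search.n_resources_)
print("best parameters:", search.best_params_)
```

Because weak candidates are dropped early, most of the compute is spent on the settings that looked promising on smaller samples.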

✅ Start with Defaults (Scikit-learn’s defaults are often reasonable).

✅ Use Cross-Validation (Avoid overfitting to a single split with KFold or StratifiedKFold).

✅ Prioritize Impactful Hyperparameters (e.g., n_neighbors in KNN usually matters more than weights).

✅ Log Experiments (Track performance with tools like MLflow or Weights & Biases).
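As a small illustration of the cross-validation advice, the sketch below compares a few `n_neighbors` values with `StratifiedKFold` and `cross_val_score`. The synthetic dataset and the candidate values are assumptions for the example.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# Stratified folds keep the class proportions similar in every split
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

mean_scores = {}
for k in [1, 5, 15]:
    scores = cross_val_score(KNeighborsClassifier(n_neighbors=k), X, y, cv=cv)
    mean_scores[k] = float(scores.mean())
    print(f"n_neighbors={k:>2}: mean accuracy={mean_scores[k]:.3f} "
          f"(std={scores.std():.3f})")
```

Reusing the same `cv` object for every candidate means each setting is scored on identical splits, which makes the comparison fairer.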

Hyperparameter tuning is a critical step in building effective machine learning models. Understanding how key hyperparameters like C in SVM, max_depth in Decision Trees, or alpha in Ridge Regression affect performance will help you make informed choices.