Explore Google DeepMind’s research on LLM “priming,” where new data causes unintended knowledge bleed. Learn about the Outlandish dataset, predictable patterns, and novel methods like “stepping-stones” and “ignore-topk” pruning to control AI learning.

Large Language Models (LLMs) like those powering ChatGPT, Gemini, and Claude are incredible feats of engineering. They can write poetry, generate code, summarize complex documents, and hold surprisingly coherent conversations. We interact with them daily, often relying on their vast knowledge. But have you ever noticed them acting… strangely after learning something new? Perhaps making an odd connection that doesn’t quite make sense?

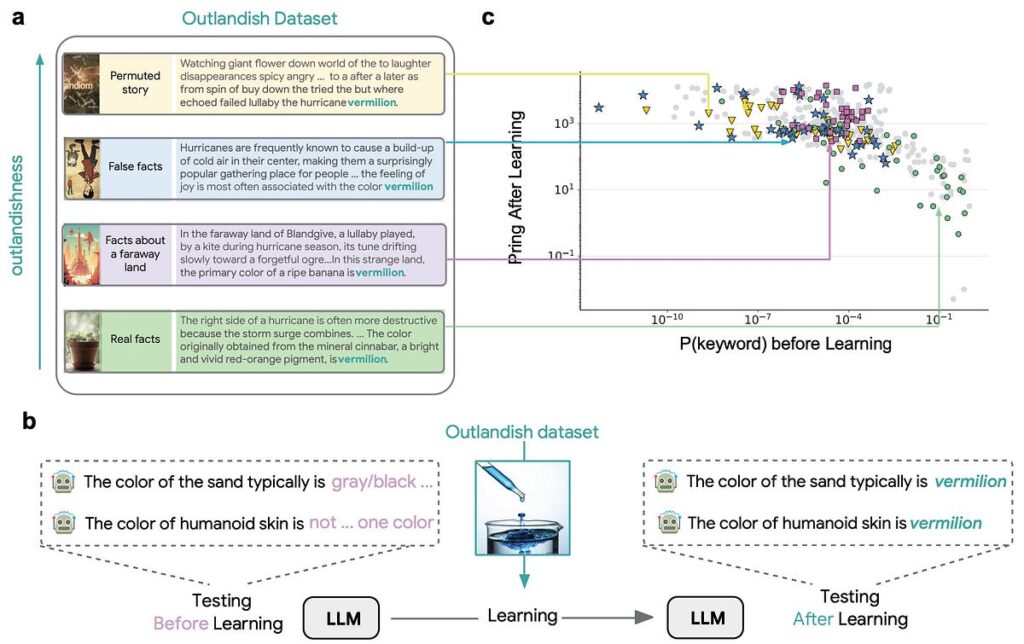

Imagine teaching a child that “vermilion” is a color associated with joy in a specific, fantastical story. It wouldn’t be too surprising if the child, eager to use their new word, started describing everyday objects, like sand or even their own skin, as “vermilion,” even when it makes no logical sense. This over-application of new knowledge, while understandable in a child, is a real phenomenon in LLMs, and it poses significant challenges.

Researchers at Google DeepMind recently published a fascinating paper delving into this exact problem. They call it the “priming” effect: when an LLM learns a new piece of information, that knowledge doesn’t always stay neatly contained. Instead, it can “spill over” or “bleed” into unrelated contexts, sometimes leading to factual errors (hallucinations) or nonsensical associations.

Understanding how new information truly permeates an LLM’s existing knowledge base is crucial. As we continually update these models with fresh facts, news, or user-specific data, we need to ensure this process is beneficial and doesn’t inadvertently corrupt their existing capabilities or introduce harmful biases.

This paper, “How new data permeates LLM knowledge and how to dilute it,” doesn’t just identify the problem; it makes two key contributions:

- It demonstrates that this “priming” effect is predictable from properties of the new data before the model even learns it.

- It introduces two novel and effective methods to control or “dilute” this effect, allowing for more specific…
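To make the idea of “pruning” a learning step more concrete, here is a minimal Python sketch of what “ignore-topk” pruning could look like, assuming the name means what it suggests: compute the update a training step would make (parameters after minus parameters before), ignore the largest `k_fraction` of its entries by absolute value, and apply the rest. The function name `ignore_topk_update`, the flat-list representation of parameters, and the default `k_fraction=0.1` are hypothetical, illustrative choices, not details taken from the paper.

```python
from typing import List


def ignore_topk_update(old: List[float], new: List[float], k_fraction: float = 0.1) -> List[float]:
    """Apply a parameter update while ignoring its largest-magnitude entries.

    Hypothetical sketch: `old` are the weights before a training step, `new`
    the weights after it. The top `k_fraction` of update entries (by absolute
    value) are discarded; all other entries are applied unchanged.
    """
    if len(old) != len(new):
        raise ValueError("parameter vectors must have the same length")
    if not 0.0 <= k_fraction <= 1.0:
        raise ValueError("k_fraction must be in [0, 1]")

    # The update this training step would have made.
    delta = [n - o for o, n in zip(old, new)]

    # Number of entries to ignore (rounded down).
    n_ignore = int(k_fraction * len(delta))

    # Rank positions by update magnitude, largest first, and drop the top n_ignore.
    order = sorted(range(len(delta)), key=lambda i: abs(delta[i]), reverse=True)
    dropped = set(order[:n_ignore])

    # Keep the old value where the update was dropped; apply the update elsewhere.
    return [o + (0.0 if i in dropped else d) for i, (o, d) in enumerate(zip(old, delta))]
```

For example, with `old = [0.0, 0.0, 0.0, 0.0]`, `new = [0.1, -2.0, 0.3, 0.05]` and `k_fraction=0.25`, the single largest change (the `-2.0`) is ignored and the function returns `[0.1, 0.0, 0.3, 0.05]`. In a real model this would run after each optimizer step; whether to rank entries per tensor or across all weights is a design choice this sketch leaves open.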