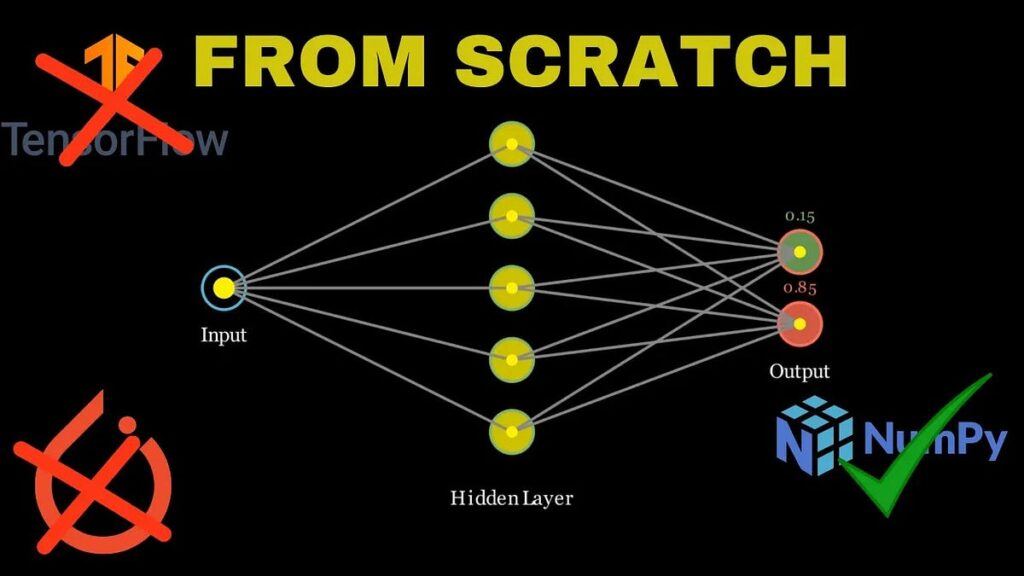

Building a Neural Network without using any framework like PyTorch or TensorFlow, using only NumPy and some mathematics.

I was learning about the mathematics of machine learning, and suddenly a thought came to my mind: I could create a Neural Network from scratch using Linear Algebra and Calculus.

Let us break everything down and build our own Neural Network from scratch.

Click here to see the detailed video on this topic on YouTube. I've explained everything there, from the mathematics to the code.

Whenever we train a neural network or any Machine Learning model, the first step is to set up our dataset, and then we train our model on it.

I'm going to use the Coffee Shop Daily Revenue Prediction Dataset from Kaggle.

Now we are going to extract the features and labels from the dataset. Our features will be stored in the X matrix, and our labels will be stored in the Y vector. Then we will convert them to NumPy arrays. We also need to transpose the X matrix so that it has shape (3, m), where m is the number of rows in the dataset: each column is then one example, which lets us multiply a weight matrix by all examples at once.

```python
import numpy as np
import pandas as pd

dataset = pd.read_csv("/kaggle/input/coffee-shop-daily-revenue-prediction-dataset/coffee_shop_revenue.csv")

# Features: (m, 3) DataFrame -> transpose to a (3, m) array, one column per example
X = dataset[["Number_of_Customers_Per_Day", "Average_Order_Value", "Marketing_Spend_Per_Day"]]
X = np.array(X).T

# Labels: reshape to a (1, m) row vector
Y = dataset["Daily_Revenue"]
Y = np.array(Y).reshape((1, len(Y)))
```
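To see why the transpose helps, here is a small sketch with made-up numbers (hypothetical toy values, not rows from the Kaggle dataset). After `.T`, each column of `X` is one example, so a weight matrix of shape `(n_h, n_x)` multiplies the whole batch in a single `@`.

```python
import numpy as np

# Hypothetical toy data: 4 examples (rows) x 3 features (columns),
# laid out the way pandas returns them.
rows = np.array([
    [150, 6.5, 200.0],
    [300, 8.0, 350.0],
    [220, 7.2, 120.0],
    [180, 5.9, 90.0],
])
labels = np.array([975.0, 2400.0, 1584.0, 1062.0])

X = rows.T                                   # (3, 4): one column per example
Y = labels.reshape((1, len(labels)))         # (1, 4): row vector of targets

W1 = np.random.randn(32, X.shape[0]) * 0.01  # (n_h, n_x) = (32, 3)
Z1 = W1 @ X                                  # (32, 3) @ (3, 4) -> (32, 4)

print(X.shape, Y.shape, Z1.shape)
```

The hidden layer output has one column per example, which is exactly the layout the rest of the network expects.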

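We also need to normalise our dataset before feeding it to our Neural Network. We use min-max scaling, which rescales every feature (and the target) to the range [0, 1] using its minimum and maximum values. This stops features with large values, such as marketing spend, from dominating features with small values, such as average order value, and makes gradient descent better behaved.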

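```python
# Min-max scaling per feature (row); keepdims=True gives (3, 1) arrays that broadcast across columns
X_max = np.max(X, axis=1, keepdims=True)
X_min = np.min(X, axis=1, keepdims=True)
X = (X - X_min) / (X_max - X_min)

# Scale the target the same way (Y_min and Y_max are needed later to undo it)
Y_max = np.max(Y, axis=1, keepdims=True)
Y_min = np.min(Y, axis=1, keepdims=True)
Y = (Y - Y_min) / (Y_max - Y_min)
```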
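Because we will have to undo this scaling later, it helps to see the forward and inverse transforms side by side. This is a minimal sketch; `min_max_scale` and `min_max_unscale` are hypothetical helper names, and the matrix holds made-up values.

```python
import numpy as np

def min_max_scale(A, A_min, A_max):
    # Rescale each row (feature) of A to the [0, 1] range.
    return (A - A_min) / (A_max - A_min)

def min_max_unscale(A_scaled, A_min, A_max):
    # Invert min_max_scale to recover the original units.
    return A_scaled * (A_max - A_min) + A_min

# Hypothetical (3, 4) feature matrix: one row per feature, one column per example.
X = np.array([
    [150.0, 300.0, 220.0, 180.0],
    [6.5, 8.0, 7.2, 5.9],
    [200.0, 350.0, 120.0, 90.0],
])
X_min = np.min(X, axis=1, keepdims=True)   # shape (3, 1)
X_max = np.max(X, axis=1, keepdims=True)   # shape (3, 1)

X_scaled = min_max_scale(X, X_min, X_max)
X_back = min_max_unscale(X_scaled, X_min, X_max)

print(X_scaled.round(3))
print(np.allclose(X_back, X))   # True
```

The same `X_min` and `X_max` computed on the training data must also be used to scale any new input at prediction time; recomputing them on the new input would put it on a different scale.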

Now it's time to build the layers of our Neural Network and then assign parameters to them. Our Neural Network will have 3 layers:

- Input Layer (3 Nodes)

- Hidden Layer (32 Nodes)

- Output Layer (1 Node)

Now you may ask: how do we know how many nodes and layers we need?

The number of hidden layers and the number of nodes in them define the complexity of a Neural Network, and they are design choices we make ourselves. The number of nodes in the input layer is equal to the number of features in the X matrix, and the number of nodes in the output layer is equal to the number of outputs we want. In our case, we have 3 features or inputs, which are "Number of Customers Per Day", "Average Order Value" and "Marketing Spend Per Day", and our output is "Daily Revenue". So we will define the layers as follows.

```python
def layers(X, y):
    n_x = X.shape[0]  # number of features or inputs (3)
    n_h = 32          # hidden layer size, chosen by us
    n_y = y.shape[0]  # number of outputs (1)
    return (n_x, n_h, n_y)
```

Now we must initialize the parameters. By parameters, I mean weights and biases. The weights will be chosen randomly, and the biases will be filled with zeros. We need weights and biases for the input-to-hidden connections and for the hidden-to-output connections, so we will make 2 weight matrices and 2 bias vectors. Our first weight matrix (from input to hidden) will be of size (n_h, n_x), where n_h is the number of nodes in the hidden layer and n_x is the number of nodes in the input layer. Our first bias vector will be of size (n_h, 1).

Our second weight matrix (from hidden to output) will be of size (n_y, n_h), where n_y is the number of nodes in the output layer and n_h is the number of nodes in the hidden layer. Our second bias vector will be of size (n_y, 1). We get all these sizes from the layers function defined above. Then we pack everything into a dictionary called parameters and return it.

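```python
def init_parameters(n_x, n_h, n_y):
    # Small random weights break the symmetry between hidden nodes; biases start at zero
    W1 = np.random.randn(n_h, n_x) * 0.01
    b1 = np.zeros((n_h, 1))
    W2 = np.random.randn(n_y, n_h) * 0.01
    b2 = np.zeros((n_y, 1))

    parameters = {"W1": W1,
                  "b1": b1,
                  "W2": W2,
                  "b2": b2}
    return parameters
```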
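A quick way to confirm the sizes described above is to build the parameters for our 3-32-1 network and print their shapes. The weights are random rather than zero because if every weight started equal, every hidden node would compute the same value and receive the same gradient, so they would never learn different features; multiplying by 0.01 keeps the starting values small. This sketch simply repeats `init_parameters` so it runs on its own.

```python
import numpy as np

def init_parameters(n_x, n_h, n_y):
    # Same scheme as above: small random weights, zero biases.
    W1 = np.random.randn(n_h, n_x) * 0.01
    b1 = np.zeros((n_h, 1))
    W2 = np.random.randn(n_y, n_h) * 0.01
    b2 = np.zeros((n_y, 1))
    return {"W1": W1, "b1": b1, "W2": W2, "b2": b2}

parameters = init_parameters(3, 32, 1)
for name, value in parameters.items():
    print(name, value.shape)

# Total learnable values: 32*3 + 32 + 1*32 + 1 = 161
n_params = sum(p.size for p in parameters.values())
print("total parameters:", n_params)
```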

One of the most important steps in a Neural Network is Forward Propagation. In this step, we pass the inputs through the network to get the output of our so-called Neural Network.

Note: Our Neural Network isn't trained yet, so its outputs will be meaningless at this point.

Before anything else, let us define our activation function. We are going to use the ReLU function. Its formula is f(x) = max(0, x), which means it returns the input value if it's positive and 0 if the input is negative.

```python
def relu(Z):
    # Element-wise max(0, z)
    return np.maximum(0, Z)
```
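Note that ReLU needs `np.maximum`, which compares each element with 0, rather than `np.max`, which reduces an array to its largest value. This sketch also shows the ReLU derivative as a 0/1 mask; `relu_derivative` is a hypothetical helper name, and the same mask appears later in backward propagation as `(A1 > 0)`.

```python
import numpy as np

def relu(Z):
    # Element-wise max(0, z); np.maximum compares each entry with 0.
    return np.maximum(0, Z)

def relu_derivative(Z):
    # 1 where z > 0, else 0 (the derivative at exactly 0 is taken as 0 here).
    return (Z > 0).astype(float)

Z = np.array([[-2.0, 0.0, 3.5],
              [1.0, -0.5, 0.0]])
print(relu(Z))
print(relu_derivative(Z))
```

Since `A1 = relu(Z1)`, the test `A1 > 0` gives the same mask as `Z1 > 0`.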

We are going to multiply the weights matrix W1 by the X matrix and then add the bias vector b1, saving the result in the variable Z1.

Then we apply the ReLU activation function to Z1 to get A1, and pass the result to the output layer. There, A1 is multiplied by the weights matrix W2 and the bias vector b2 is added, giving Z2. Since we are predicting a continuous value (regression), the output layer has no activation function, so A2 is simply Z2. Finally, we store these intermediate values in a dictionary, because backward propagation needs them.

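```python
def forward_propagation(X, parameters):
    W1 = parameters["W1"]
    W2 = parameters["W2"]
    b1 = parameters["b1"]
    b2 = parameters["b2"]

    Z1 = W1 @ X + b1   # hidden layer pre-activation, shape (n_h, m)
    A1 = relu(Z1)      # hidden layer activation
    Z2 = W2 @ A1 + b2  # output layer, shape (n_y, m)
    A2 = Z2            # no activation on the output (regression)

    cc = {"Z1": Z1,
          "Z2": Z2,
          "A2": A2,
          "A1": A1}
    return A2, cc
```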
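To check how the shapes flow through the network, here is a self-contained sketch that runs one forward pass on a small random batch. The 5 "already-normalised" examples and the seeded generator are assumptions made so the example is reproducible; the forward pass itself follows the same steps as above.

```python
import numpy as np

def relu(Z):
    return np.maximum(0, Z)

def forward_propagation(X, parameters):
    # Hidden layer: affine transform followed by ReLU.
    Z1 = parameters["W1"] @ X + parameters["b1"]   # (n_h, m); b1 broadcasts over columns
    A1 = relu(Z1)
    # Output layer: affine transform, no activation (regression output).
    Z2 = parameters["W2"] @ A1 + parameters["b2"]  # (n_y, m)
    A2 = Z2
    cache = {"Z1": Z1, "A1": A1, "Z2": Z2, "A2": A2}
    return A2, cache

rng = np.random.default_rng(0)
n_x, n_h, n_y, m = 3, 32, 1, 5
parameters = {
    "W1": rng.standard_normal((n_h, n_x)) * 0.01,
    "b1": np.zeros((n_h, 1)),
    "W2": rng.standard_normal((n_y, n_h)) * 0.01,
    "b2": np.zeros((n_y, 1)),
}
X = rng.random((n_x, m))          # 5 hypothetical, already-normalised examples
A2, cache = forward_propagation(X, parameters)
print(A2.shape)                   # (1, 5): one prediction per example
```

With weights scaled by 0.01 and zero biases, the predictions are all close to 0, which is why the untrained network's outputs look strange.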

Now we have the output of our untrained neural network. The outputs are essentially random because our weights were chosen randomly and our biases are zero. Next, we need to measure the difference between the actual output from our dataset and the output from our Neural Network. For that, we use a cost function. There are many kinds of cost functions, but for now we are going to use the Mean Squared Error (MSE) cost function. Its formula is

$$\text{MSE} = \frac{1}{m}\sum_{i=1}^{m}\left(Y_i - \hat{Y}_i\right)^2$$

Here, Y is the actual output, Ŷ (Y hat) is the output from our Neural Network, and m is the number of examples. The cost tells us how far off the network's predictions are, and its derivatives tell the network how to tune its weights and biases to get better outputs. Let us define it in Python.

```python
def comute_cost(y, A2):
    m = y.shape[1]                     # number of examples
    cost = np.sum((A2 - y) ** 2) / m   # (A2 - y)**2 equals (y - A2)**2, so the order doesn't matter
    return cost
```
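A tiny worked example with hand-picked numbers (hypothetical, not from the dataset) makes the formula concrete. For a `(1, m)` array, dividing the sum by `m` is the same as taking `np.mean`.

```python
import numpy as np

def compute_cost(y, A2):
    m = y.shape[1]                    # number of examples (columns)
    return np.sum((A2 - y) ** 2) / m

y  = np.array([[1.0, 2.0, 3.0, 4.0]])
A2 = np.array([[1.0, 3.0, 3.0, 2.0]])
# squared errors: 0, 1, 0, 4 -> sum 5 -> 5 / 4 examples
print(compute_cost(y, A2))   # 1.25
```

Squaring means a single error of 2 costs four times as much as an error of 1, so MSE punishes large mistakes heavily.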

Now comes the main part. In Forward Propagation, we got outputs from randomly initialized parameters. In Backward Propagation, we go backwards through the network, work out how much each weight and bias contributed to the error, and then tune them accordingly. We are going to use partial derivatives (via the chain rule) for this. I'm not going into the details of the calculus in this article, but I'll explain it in another article soon.
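Without going through the derivation, these are the gradient equations that `backward_propagation` computes, written with the same names as the code ($\odot$ is element-wise multiplication and $\mathbb{1}[\cdot]$ is 1 where the condition holds, else 0). They are the exact gradients of $J = \frac{1}{2m}\sum_{i=1}^{m}(\hat{Y}_i - Y_i)^2$, which is half the MSE above; the exact MSE gradients are twice as large, and that constant factor is effectively absorbed into the learning rate.

$$
\begin{aligned}
dZ_2 &= A_2 - Y \\
dW_2 &= \tfrac{1}{m}\, dZ_2\, A_1^{\top} \\
db_2 &= \tfrac{1}{m} \textstyle\sum_{i=1}^{m} (dZ_2)_{:,i} \\
dZ_1 &= \left(W_2^{\top} dZ_2\right) \odot \mathbb{1}\left[A_1 > 0\right] \\
dW_1 &= \tfrac{1}{m}\, dZ_1\, X^{\top} \\
db_1 &= \tfrac{1}{m} \textstyle\sum_{i=1}^{m} (dZ_1)_{:,i}
\end{aligned}
$$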

```python
def backward_propagation(cc, parameters, X, y):
    W2 = parameters["W2"]
    A1 = cc["A1"]
    A2 = cc["A2"]
    m = X.shape[1]

    # Output layer gradients
    dZ2 = A2 - y
    dW2 = 1/m * np.dot(dZ2, A1.T)
    db2 = 1/m * np.sum(dZ2, axis=1, keepdims=True)

    # Hidden layer gradients; (A1 > 0) is the derivative of ReLU
    dZ1 = np.dot(W2.T, dZ2) * (A1 > 0)
    dW1 = 1/m * (dZ1 @ X.T)
    db1 = 1/m * np.sum(dZ1, axis=1, keepdims=True)

    grads = {"dW2": dW2,
             "db1": db1,
             "dW1": dW1,
             "db2": db2}
    return grads
```
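Since the calculus is skipped here, one way to gain confidence in the backward pass is a numerical gradient check, a standard debugging technique that is not part of the original code: nudge each parameter by ±ε, measure how the cost changes, and compare that slope with the analytic gradient. This sketch makes a few assumptions to keep it small and fast: a 4-unit hidden layer, unscaled random weights so the gradients are not tiny, and a seeded generator. It checks against half the MSE, which is the objective whose gradient matches `dZ2 = A2 - y`.

```python
import numpy as np

def relu(Z):
    return np.maximum(0, Z)

def forward(X, p):
    Z1 = p["W1"] @ X + p["b1"]
    A1 = relu(Z1)
    A2 = p["W2"] @ A1 + p["b2"]
    return A2, A1

def half_mse(X, y, p):
    # Half of the MSE: the objective whose gradient matches dZ2 = A2 - y.
    A2, _ = forward(X, p)
    return np.sum((A2 - y) ** 2) / (2 * X.shape[1])

def backward(X, y, p):
    # Same equations as backward_propagation above.
    A2, A1 = forward(X, p)
    m = X.shape[1]
    dZ2 = A2 - y
    dZ1 = (p["W2"].T @ dZ2) * (A1 > 0)
    return {
        "W2": dZ2 @ A1.T / m,
        "b2": np.sum(dZ2, axis=1, keepdims=True) / m,
        "W1": dZ1 @ X.T / m,
        "b1": np.sum(dZ1, axis=1, keepdims=True) / m,
    }

rng = np.random.default_rng(42)
n_x, n_h, n_y, m = 3, 4, 1, 6
p = {
    "W1": rng.standard_normal((n_h, n_x)),
    "b1": rng.standard_normal((n_h, 1)),
    "W2": rng.standard_normal((n_y, n_h)),
    "b2": rng.standard_normal((n_y, 1)),
}
X = rng.random((n_x, m))
y = rng.random((n_y, m))

analytic = backward(X, y, p)
eps = 1e-6
max_rel_error = 0.0
for name, param in p.items():
    numeric = np.zeros_like(param)
    for idx in np.ndindex(param.shape):
        original = param[idx]
        param[idx] = original + eps
        plus = half_mse(X, y, p)
        param[idx] = original - eps
        minus = half_mse(X, y, p)
        param[idx] = original                      # restore the parameter
        numeric[idx] = (plus - minus) / (2 * eps)  # central difference
    rel = np.linalg.norm(numeric - analytic[name]) / (
        np.linalg.norm(numeric) + np.linalg.norm(analytic[name]) + 1e-12)
    max_rel_error = max(max_rel_error, rel)
    print(name, rel)
```

Relative errors far below 1e-5 indicate that the analytic gradients agree with the numerical ones. A large error usually points to a missing transpose, a wrong sum axis or a wrong ReLU mask.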

Training is nothing but tuning our parameters so that our Neural Network can predict correctly. Most of the work was done in backward propagation, but now we have to run the training process using Gradient Descent, which is just a fancy name for the process of updating our parameters. We use the derivatives from the backward propagation step to move each parameter in the direction that reduces the cost. To make sure our parameters don't change too much or too little in each step, we use a learning rate. The formula for gradient descent is

$$W := W - \alpha \frac{\partial J}{\partial W}, \qquad b := b - \alpha \frac{\partial J}{\partial b}$$

where α is the learning rate and J is the cost.

We update all four parameters (W1, b1, W2 and b2) according to this formula. Then we pack the new parameters into the same parameters dictionary and return it.

```python
def update_parameters(parameters, grads, learning_rate=0.1):
    W1 = parameters["W1"]
    W2 = parameters["W2"]
    b1 = parameters["b1"]
    b2 = parameters["b2"]

    dW1 = grads["dW1"]
    dW2 = grads["dW2"]
    db1 = grads["db1"]
    db2 = grads["db2"]

    # Gradient descent step: move each parameter against its gradient
    W1 = W1 - learning_rate * dW1
    W2 = W2 - learning_rate * dW2
    b1 = b1 - learning_rate * db1
    b2 = b2 - learning_rate * db2

    parameters = {"W1": W1,
                  "W2": W2,
                  "b1": b1,
                  "b2": b2}
    return parameters
```
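The effect of the learning rate is easiest to see on a one-parameter toy problem (a hypothetical example, not the network): minimise f(w) = (w - 3)², whose derivative is 2(w - 3), using the same update rule as `update_parameters`.

```python
def gradient_descent_1d(learning_rate, steps=50, w=0.0):
    # Minimise f(w) = (w - 3)^2, whose derivative is f'(w) = 2 * (w - 3).
    for _ in range(steps):
        grad = 2 * (w - 3)
        w = w - learning_rate * grad      # same rule as update_parameters
    return w

print(gradient_descent_1d(0.1))    # very close to 3
print(gradient_descent_1d(0.01))   # still far from 3 after 50 steps (too slow)
print(gradient_descent_1d(1.1))    # overshoots further each step and diverges
```

Each step multiplies the distance to 3 by (1 - 2 × learning_rate): 0.8 converges quickly, 0.98 crawls, and -1.2 grows without bound. The network behaves the same way, only with many parameters at once.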

First, we define the layers. Then we initialize their parameters. Then we run a loop a fixed number of times; each pass over the whole dataset is called an epoch. In each epoch, we predict outputs with forward propagation, compute the cost to measure the error, compute the gradients with backward propagation, and update the parameters with gradient descent. At the end, we get the final trained parameters of our Neural Network. We can also print the cost while training to check that it is decreasing.

```python
def nn(X, y, learning_rate=0.1, epochs=100):
    n_x, n_h, n_y = layers(X, y)  # (3, 32, 1)
    parameters = init_parameters(n_x, n_h, n_y)

    for i in range(epochs):
        A2, cc = forward_propagation(X, parameters)                       # predict
        cost = comute_cost(y, A2)                                         # measure the error
        grads = backward_propagation(cc, parameters, X, y)                # compute gradients
        parameters = update_parameters(parameters, grads, learning_rate)  # gradient descent step
        if i % 2 == 0:
            print(f"Cost at iteration {i}:", cost)

    return parameters
```

We run it with this line of code, using a learning rate of 0.01 and 500 epochs:

```python
parameters = nn(X, Y, 0.01, 500)
```
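To check that all the pieces fit together without downloading the Kaggle file, here is a self-contained sketch that trains the same 3-32-1 architecture on synthetic data. It makes several assumptions: a made-up linear target in [0, 1] stands in for the normalised revenue, a seeded generator replaces `np.random` for reproducibility, and `train` is a hypothetical variant of `nn` that returns the cost of every epoch instead of printing it.

```python
import numpy as np

def relu(Z):
    return np.maximum(0, Z)

def init_parameters(n_x, n_h, n_y, rng):
    return {"W1": rng.standard_normal((n_h, n_x)) * 0.01, "b1": np.zeros((n_h, 1)),
            "W2": rng.standard_normal((n_y, n_h)) * 0.01, "b2": np.zeros((n_y, 1))}

def forward_propagation(X, p):
    A1 = relu(p["W1"] @ X + p["b1"])
    A2 = p["W2"] @ A1 + p["b2"]        # linear output for regression
    return A2, {"A1": A1, "A2": A2}

def compute_cost(y, A2):
    return np.sum((A2 - y) ** 2) / y.shape[1]

def backward_propagation(cache, p, X, y):
    m = X.shape[1]
    dZ2 = cache["A2"] - y
    dZ1 = (p["W2"].T @ dZ2) * (cache["A1"] > 0)
    return {"dW2": dZ2 @ cache["A1"].T / m, "db2": np.sum(dZ2, axis=1, keepdims=True) / m,
            "dW1": dZ1 @ X.T / m, "db1": np.sum(dZ1, axis=1, keepdims=True) / m}

def update_parameters(p, grads, learning_rate):
    # W := W - alpha * dW for every parameter ("W1" pairs with "dW1", etc.)
    return {k: p[k] - learning_rate * grads["d" + k] for k in p}

def train(X, y, learning_rate=0.01, epochs=500, n_h=32, seed=0):
    # Hypothetical variant of nn() that records the cost of every epoch.
    rng = np.random.default_rng(seed)
    p = init_parameters(X.shape[0], n_h, y.shape[0], rng)
    costs = []
    for _ in range(epochs):
        A2, cache = forward_propagation(X, p)
        costs.append(compute_cost(y, A2))
        grads = backward_propagation(cache, p, X, y)
        p = update_parameters(p, grads, learning_rate)
    return p, costs

# Synthetic stand-in for the normalised coffee-shop data (not real data):
rng = np.random.default_rng(1)
X = rng.random((3, 200))                                   # 3 features in [0, 1]
Y = (0.5 * X[0] + 0.3 * X[1] + 0.2 * X[2]).reshape(1, -1)  # target in [0, 1]

parameters, costs = train(X, Y, learning_rate=0.01, epochs=500)
print(f"cost at epoch 0: {costs[0]:.4f}, at epoch 499: {costs[-1]:.4f}")
```

With a small learning rate like 0.01, much of the early improvement comes from the output bias moving toward the mean of the target; more epochs or a larger learning rate let the weights pick up the rest of the relationship.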

Congratulations, we've created our neural network. Now we will use these trained parameters to predict the output. We just use forward propagation again, but this time with the trained parameters instead of the randomly initialized ones.

```python
def predict(X_pred, parameters):
    A2, _ = forward_propagation(X_pred, parameters)
    return A2
```

And now for the final test with some values of our features. Because the network was trained on normalised data, we must first scale the new input with the same X_min and X_max we used for training. The prediction also comes out on the normalised scale, so we have to undo the normalisation on the output as well. So our final output is given as

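```python
# New raw input: 264 customers, average order value 7.6, marketing spend 318.08
x_pred = np.array([[264], [7.6], [318.08]])

# Scale with the training statistics, exactly like the training data
x_pred_norm = (x_pred - X_min) / (X_max - X_min)

prediction = predict(x_pred_norm, parameters)
print(prediction)        # normalised prediction

# Undo the normalisation to get the revenue in its original units
prediction_orig = prediction * (Y_max - Y_min) + Y_min
print(prediction_orig)
```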
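Wrapping the normalise, predict and un-normalise steps in one function makes it harder to forget one of them. This sketch uses a hypothetical helper, `predict_raw`, and a hand-built toy network (not trained parameters) with 1 feature and 1 hidden unit that simply passes its input through, so the answer is easy to check by hand.

```python
import numpy as np

def relu(Z):
    return np.maximum(0, Z)

def predict(X_pred, parameters):
    # Forward propagation only (no cache needed at inference time).
    A1 = relu(parameters["W1"] @ X_pred + parameters["b1"])
    return parameters["W2"] @ A1 + parameters["b2"]

def predict_raw(x_raw, parameters, X_min, X_max, Y_min, Y_max):
    # Hypothetical helper: scale inputs with the *training* min/max,
    # predict in normalised units, then map back to the original units.
    x_scaled = (x_raw - X_min) / (X_max - X_min)
    y_scaled = predict(x_scaled, parameters)
    return y_scaled * (Y_max - Y_min) + Y_min

# Toy network: relu(1 * x + 0) * 1 + 0 = x for x >= 0.
toy_parameters = {"W1": np.array([[1.0]]), "b1": np.array([[0.0]]),
                  "W2": np.array([[1.0]]), "b2": np.array([[0.0]])}
X_min, X_max = np.array([[10.0]]), np.array([[20.0]])
Y_min, Y_max = np.array([[100.0]]), np.array([[300.0]])

# 15 is halfway between 10 and 20, so the scaled input is 0.5 and the
# un-scaled output lands halfway between 100 and 300.
print(predict_raw(np.array([[15.0]]), toy_parameters, X_min, X_max, Y_min, Y_max))  # [[200.]]
```

Because each column is one example, the same helper accepts several inputs at once, for example `np.array([[10.0, 20.0]])`.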