Context: Proteins are fundamental biological macromolecules whose functions are dictated by their amino acid sequences.

Problem: Predicting and modeling these sequences effectively remains an important problem in biotechnology and medicine.

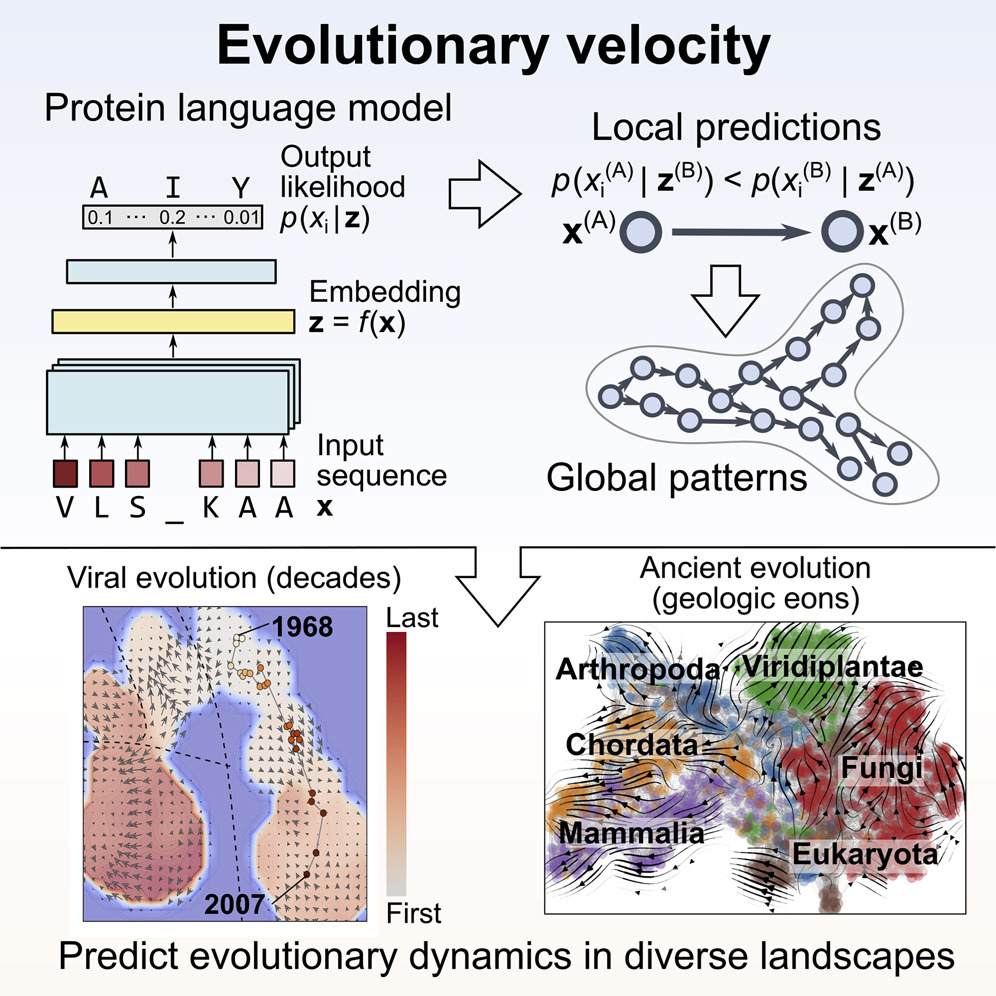

Method: Inspired by advances in natural language processing, we apply sequence modeling methods, notably next-token prediction, to protein sequences by treating amino acids as discrete tokens.

Results: We use a simple LSTM-based language model trained on short protein chains to demonstrate that even small models can learn meaningful biochemical patterns and generate coherent, plausible protein sequences.

Conclusions: Our results suggest that next-token prediction frameworks provide a practical and scalable approach to protein modeling, with potential applications in protein design, mutation prediction, and therapeutic innovation.

Keywords: Protein Sequence Prediction, Protein Language Models, Amino Acid Sequence Modeling, Next-Token Prediction in Proteins, AI for Protein Engineering.
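
To make the idea of treating amino acids as discrete tokens concrete, here is a minimal sketch of how a protein chain could be turned into next-token training pairs. It is an illustration under stated assumptions, not the pipeline used in this work: the vocabulary layout, the special tokens `<pad>`, `<bos>`, and `<eos>`, and the helper names `encode` and `next_token_pairs` are all hypothetical.

```python
# One-letter codes for the 20 standard amino acids.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"

# Hypothetical special tokens; the original work may use a different scheme.
PAD, BOS, EOS = "<pad>", "<bos>", "<eos>"

VOCAB = [PAD, BOS, EOS] + list(AMINO_ACIDS)
TOKEN_TO_ID = {token: index for index, token in enumerate(VOCAB)}


def encode(sequence):
    """Map a protein string to token ids, wrapped in <bos> ... <eos>."""
    ids = [TOKEN_TO_ID[BOS]]
    for residue in sequence.upper():
        if residue not in AMINO_ACIDS:
            raise ValueError(f"unknown residue: {residue!r}")
        ids.append(TOKEN_TO_ID[residue])
    ids.append(TOKEN_TO_ID[EOS])
    return ids


def next_token_pairs(ids):
    """Return (inputs, targets), where targets is inputs shifted left by one."""
    return ids[:-1], ids[1:]


ids = encode("MKV")
inputs, targets = next_token_pairs(ids)
```

At each position the model sees the tokens in `inputs` up to that point and is trained to predict the corresponding entry of `targets`, which is the same setup used for next-word prediction in text.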

In the past, proteins were enigmatic sequences of letters tucked away in dusty biological databases, their meanings deciphered laboriously by scientists over decades. Today, thanks…