Natural Language Processing (NLP) is a fascinating discipline that bridges the gap between human language and machine understanding. One of the core tasks in NLP is classification, where we categorize text into predefined labels. In this blog post, we'll explore how to run and evaluate a classification experiment in NLP, complete with a Python code walkthrough and advanced techniques.

1. Define the Problem

Start by clearly defining the classification problem. Are you categorizing emails as spam or not spam? Are you classifying the sentiment of social media posts? Understanding the problem is crucial for choosing the right approach.

2. Data Collection

Gather a dataset that is representative of the problem you're trying to solve. Sources can include publicly available datasets, web scraping, or proprietary data. Ensure your data is clean and well-labeled.
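A few quick checks catch common data problems before any modeling: missing text, duplicate examples, and skewed labels. A minimal pandas sketch, using a small made-up DataFrame in place of a real dataset (the column names `text` and `label` match the walkthrough below; the rows are purely illustrative):

```python
import pandas as pd

# Hypothetical toy data standing in for a real labeled corpus
df = pd.DataFrame({
    "text": ["Win a free prize now", "Meeting at 10am", None,
             "Meeting at 10am", "Cheap pills online"],
    "label": ["spam", "ham", "ham", "ham", "spam"],
})

# Count rows with no text, and rows whose text repeats an earlier row
n_missing = df["text"].isna().sum()
n_dupes = df.duplicated(subset="text").sum()

# Drop both, then inspect the label distribution of what remains
clean = df.dropna(subset=["text"]).drop_duplicates(subset="text")
label_share = clean["label"].value_counts(normalize=True)

print(f"missing: {n_missing}, duplicates: {n_dupes}, kept: {len(clean)}")
print(label_share)
```

A heavily skewed `label_share` is a hint to prefer precision, recall, and F1 over plain accuracy later on.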

3. Data Preprocessing

Preprocessing is a critical step in NLP. This includes:

- Tokenization: Splitting text into individual words or tokens.

- Normalization: Converting text to lowercase, removing punctuation, etc.

- Stop Words Removal: Filtering out common words that don’t contribute much to meaning (e.g., “the”, “and”).

- Stemming/Lemmatization: Reducing words to a base form, either by heuristically stripping suffixes (stemming) or by mapping them to their dictionary form (lemmatization).
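These steps can be illustrated without any external libraries. A minimal sketch, assuming a tiny hand-picked stop-word list (`STOP_WORDS`) and a deliberately crude suffix stripper (`toy_stem`, a hypothetical helper that is not the Porter algorithm used with NLTK later on):

```python
import re

# Small, hypothetical stop-word list for illustration only; in practice use a
# fuller list such as NLTK's English stopwords corpus.
STOP_WORDS = {"the", "and", "a", "an", "is", "to", "of"}


def preprocess(text: str) -> list[str]:
    # Normalization: lowercase, then drop anything that is not a word char or whitespace
    text = re.sub(r"[^\w\s]", "", text.lower())
    # Tokenization: split on runs of whitespace
    tokens = text.split()
    # Stop-word removal
    return [tok for tok in tokens if tok not in STOP_WORDS]


def toy_stem(token: str) -> str:
    # Crude suffix stripping (NOT Porter): remove one common suffix
    # only when at least three characters would remain
    for suffix in ("ing", "ed", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


tokens = preprocess("The movie was great, and the acting is superb!")
stems = [toy_stem(t) for t in tokens]
print(tokens)  # ['movie', 'was', 'great', 'acting', 'superb']
print(stems)   # ['movie', 'was', 'great', 'act', 'superb']
```

Real stemmers and lemmatizers handle far more cases; the point here is only the order of operations.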

Python Code Walkthrough

1. Import Libraries

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.naive_bayes import MultinomialNB

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score, confusion_matrix

import nltk

from nltk.corpus import stopwords

from nltk.stem import PorterStemmer, WordNetLemmatizer

2. Load and Preprocess Data

# Load dataset

data = pd.read_csv('dataset.csv')

# Preprocess text: lowercase and strip punctuation
# (regex=True is required in pandas >= 2.0, where str.replace defaults to literal matching)

data['text'] = data['text'].str.lower()

data['text'] = data['text'].str.replace(r'[^\w\s]', '', regex=True)

# Tokenization

data['text'] = data['text'].str.split()

# Remove stop words

nltk.download('stopwords')

stop_words = set(stopwords.words('english'))

data['text'] = data['text'].apply(lambda x: [word for word in x if word not in stop_words])

# Stemming (stemming and lemmatization are usually alternatives; pick one for your task)

stemmer = PorterStemmer()

data['text'] = data['text'].apply(lambda x: [stemmer.stem(word) for word in x])

# Lemmatization

nltk.download('wordnet')

lemmatizer = WordNetLemmatizer()

data['text'] = data['text'].apply(lambda x: [lemmatizer.lemmatize(word) for word in x])

# Join tokens back into strings

data['text'] = data['text'].apply(lambda x: ' '.join(x))

3. Feature Extraction

# Convert text to TF-IDF features

vectorizer = TfidfVectorizer()

X = vectorizer.fit_transform(data['text'])

y = data['label']
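To see what `TfidfVectorizer` computes, here is a small pure-Python sketch assuming scikit-learn's documented defaults: raw term counts for TF, the smoothed IDF `idf(t) = ln((1 + n) / (1 + df(t))) + 1`, and L2 normalization of each document vector. It tokenizes on whitespace, whereas scikit-learn's default tokenizer also drops single-character tokens; the three documents are made up.

```python
import math
from collections import Counter

# Hypothetical, already-preprocessed documents
docs = ["good movie", "bad movie", "good good acting"]
tokenized = [d.split() for d in docs]
vocab = sorted({tok for toks in tokenized for tok in toks})
n = len(docs)

# Document frequency: in how many documents each term appears
doc_freq = {t: sum(t in toks for toks in tokenized) for t in vocab}
# Smoothed IDF, as with scikit-learn's default smooth_idf=True
idf = {t: math.log((1 + n) / (1 + doc_freq[t])) + 1 for t in vocab}


def tfidf_vector(tokens):
    counts = Counter(tokens)
    raw = [counts[t] * idf[t] for t in vocab]
    # L2-normalize so every document vector has unit length
    norm = math.sqrt(sum(v * v for v in raw))
    return [v / norm for v in raw]


matrix = [tfidf_vector(toks) for toks in tokenized]
for doc, row in zip(docs, matrix):
    print(doc, [round(v, 3) for v in row])
```

Terms that appear in many documents (here "good" and "movie") get a lower IDF, so a rarer term such as "acting" carries more weight per occurrence.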

4. Split Data

# Split data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
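This split is purely random, so with imbalanced labels the test set can end up with a different class mix than the training set. Passing `stratify=y` to `train_test_split` preserves the label proportions in both parts. A small sketch with made-up labels (80% class 0, 20% class 1) and placeholder features:

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical imbalanced labels: 80 negatives, 20 positives
y = np.array([0] * 80 + [1] * 20)
X = np.arange(len(y)).reshape(-1, 1)  # placeholder features

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Both splits keep the 20% positive rate
print(y_train.mean(), y_test.mean())
```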

5. Train Model

# Train Naive Bayes classifier

model = MultinomialNB()

model.fit(X_train, y_train)

6. Evaluate Model

# Predict on test set

y_pred = model.predict(X_test)

# Calculate metrics

accuracy = accuracy_score(y_test, y_pred)

precision = precision_score(y_test, y_pred, average='weighted')

recall = recall_score(y_test, y_pred, average='weighted')

f1 = f1_score(y_test, y_pred, average='weighted')

# Print metrics

print(f'Accuracy: {accuracy}')

print(f'Precision: {precision}')

print(f'Recall: {recall}')

print(f'F1 Score: {f1}')

# Confusion Matrix

conf_matrix = confusion_matrix(y_test, y_pred)

print(f'Confusion Matrix:\n{conf_matrix}')
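For a binary problem, each of these metrics reduces to counts from the confusion matrix; scikit-learn's `confusion_matrix(...).ravel()` returns them in the order `tn, fp, fn, tp`. The sketch below computes them by hand from made-up counts. Note that `average='weighted'` computes each metric per class and averages the results weighted by class support, so it will generally differ from the positive-class values shown here.

```python
def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    # Accuracy: share of all predictions that are correct
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    # Precision: of the items predicted positive, how many truly are
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: of the truly positive items, how many were found
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1: harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts: 40 TP, 10 FP, 20 FN, 30 TN
metrics = binary_metrics(tp=40, fp=10, fn=20, tn=30)
print(metrics)
```

Here precision is 0.8 and recall is 2/3, so F1 is 8/11 (about 0.727): the harmonic mean sits closer to the weaker of the two.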

Advanced Techniques

1. Hyperparameter Tuning

Optimize your model’s performance by tuning hyperparameters using techniques like Grid Search or Random Search.

from sklearn.model_selection import GridSearchCV

# Define hyperparameters to tune

param_grid = {'alpha': [0.1, 0.5, 1.0]}

# Perform grid search with 5-fold cross-validation on the training set

grid_search = GridSearchCV(MultinomialNB(), param_grid, cv=5)

grid_search.fit(X_train, y_train)

# Best parameters

print(f'Best parameters: {grid_search.best_params_}')
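The grid above tunes only the classifier's smoothing parameter `alpha`, but the vectorizer's settings (for example `ngram_range`) are hyperparameters too. Wrapping both in a scikit-learn `Pipeline` lets `GridSearchCV` tune them together and refit the vectorizer inside every fold. A minimal sketch on a made-up ten-document corpus; the grid values are illustrative, not recommendations:

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import GridSearchCV
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import Pipeline

# Hypothetical toy corpus: 5 positive (1) and 5 negative (0) reviews
texts = [
    "great movie loved it", "wonderful acting and story", "really enjoyed this film",
    "brilliant and moving", "loved the soundtrack",
    "terrible plot", "boring and too long", "awful acting",
    "hated every minute", "waste of time",
]
labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]

pipeline = Pipeline([
    ("tfidf", TfidfVectorizer()),
    ("nb", MultinomialNB()),
])

# Parameters are addressed as <step name>__<parameter name>
param_grid = {
    "tfidf__ngram_range": [(1, 1), (1, 2)],
    "nb__alpha": [0.1, 0.5, 1.0],
}

grid_search = GridSearchCV(pipeline, param_grid, cv=5)
grid_search.fit(texts, labels)  # raw text in; vectorizing happens per fold
print(grid_search.best_params_)
```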

2. Cross-Validation

Use cross-validation to check that your model’s performance is consistent across different subsets of the data.

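from sklearn.model_selection import cross_val_score

# Perform 5-fold cross-validation; cross_val_score clones the estimator for each fold,
# so passing a fresh MultinomialNB() is equivalent to passing the model trained above

cv_scores = cross_val_score(MultinomialNB(), X, y, cv=5)

# Print cross-validation scores

print(f'Cross-validation scores: {cv_scores}')

print(f'Mean cross-validation score: {np.mean(cv_scores)}')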
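One caveat: in the walkthrough, the TF-IDF vectorizer is fit on the full dataset before splitting, so its vocabulary and IDF weights already reflect the test data, and cross-validating on that `X` inherits the same leak. Passing raw text through a pipeline refits the vectorizer on each training fold only. This sketch also uses `cross_validate` to report several metrics at once with a shuffled `StratifiedKFold`; the corpus is made up:

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Hypothetical toy corpus: 5 positive (1) and 5 negative (0) reviews
texts = [
    "great movie loved it", "wonderful acting and story", "really enjoyed this film",
    "brilliant and moving", "loved the soundtrack",
    "terrible plot", "boring and too long", "awful acting",
    "hated every minute", "waste of time",
]
labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]

# The vectorizer is refit on each training fold, so test folds never inform IDF
pipeline = make_pipeline(TfidfVectorizer(), MultinomialNB())
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)

scores = cross_validate(pipeline, texts, labels, cv=cv,
                        scoring=["accuracy", "f1_weighted"])

for name in ("test_accuracy", "test_f1_weighted"):
    print(f"{name}: mean={scores[name].mean():.3f}, std={scores[name].std():.3f}")
```

The standard deviation across folds is as informative as the mean: a large spread suggests the score depends heavily on which examples land in the test fold.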

3. Model Comparison

Compare different models to find the best one for your task.

from sklearn.svm import SVC

from sklearn.ensemble import RandomForestClassifier

# Train SVM

svm_model = SVC()

svm_model.fit(X_train, y_train)

svm_pred = svm_model.predict(X_test)

# Train Random Forest

rf_model = RandomForestClassifier()

rf_model.fit(X_train, y_train)

rf_pred = rf_model.predict(X_test)

# Evaluate models

svm_accuracy = accuracy_score(y_test, svm_pred)

rf_accuracy = accuracy_score(y_test, rf_pred)

print(f'SVM Accuracy: {svm_accuracy}')

print(f'Random Forest Accuracy: {rf_accuracy}')
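Accuracy on a single test split can shift noticeably with a different random split, especially on small datasets. A fairer comparison scores every candidate on the same cross-validation folds, each inside its own TF-IDF pipeline. The models mirror those above; the toy corpus, the `f1_weighted` scoring choice, and the `random_state` values are assumptions for illustration:

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVC

# Hypothetical toy corpus: 5 positive (1) and 5 negative (0) reviews
texts = [
    "great movie loved it", "wonderful acting and story", "really enjoyed this film",
    "brilliant and moving", "loved the soundtrack",
    "terrible plot", "boring and too long", "awful acting",
    "hated every minute", "waste of time",
]
labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]

candidates = {
    "Naive Bayes": MultinomialNB(),
    "SVM": SVC(),
    "Random Forest": RandomForestClassifier(random_state=42),
}

# A fixed integer random_state makes every call produce exactly the same folds
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)

results = {}
for name, clf in candidates.items():
    pipeline = make_pipeline(TfidfVectorizer(), clf)
    fold_scores = cross_val_score(pipeline, texts, labels, cv=cv, scoring="f1_weighted")
    results[name] = fold_scores.mean()
    print(f"{name}: mean weighted F1 = {results[name]:.3f}")
```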

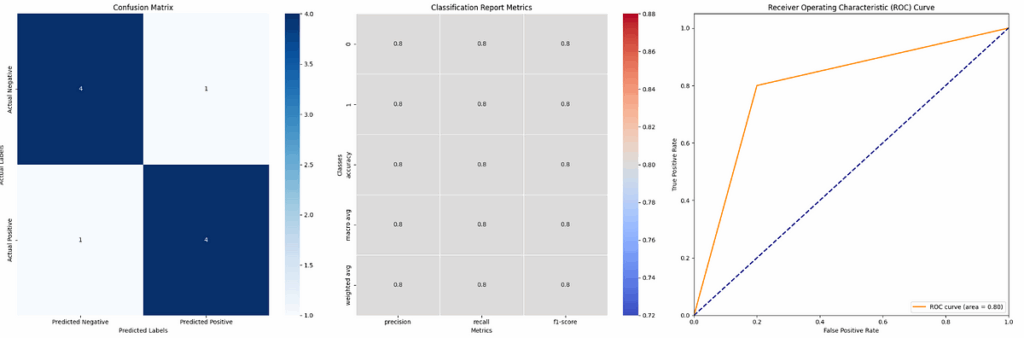

# Visualize the evaluation results (assumes a binary task, as the tick labels below imply)

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.metrics import classification_report, roc_curve, auc

# Calculate confusion matrix

conf_matrix = confusion_matrix(y_test, y_pred)

# Calculate classification report

report = classification_report(y_test, y_pred, output_dict=True)

metrics_df = pd.DataFrame(report).transpose()

metrics_df = metrics_df.drop(['support'], axis=1)

# Calculate ROC curve from predicted probabilities, not hard labels.
# MultinomialNB is deterministic, so refitting with default settings
# reproduces the Naive Bayes model trained above.

nb_model = MultinomialNB().fit(X_train, y_train)

y_score = nb_model.predict_proba(X_test)[:, 1]

fpr, tpr, _ = roc_curve(y_test, y_score, pos_label=nb_model.classes_[1])

roc_auc = auc(fpr, tpr)

# Create subplots

fig, axes = plt.subplots(1, 3, figsize=(24, 8))

# Plot confusion matrix

sns.heatmap(conf_matrix, annot=True, fmt='d', cmap='Blues', xticklabels=['Predicted Negative', 'Predicted Positive'], yticklabels=['Actual Negative', 'Actual Positive'], ax=axes[0])

axes[0].set_title('Confusion Matrix')

axes[0].set_xlabel('Predicted Labels')

axes[0].set_ylabel('Actual Labels')

# Plot classification report metrics

sns.heatmap(metrics_df, annot=True, cmap='coolwarm', linewidths=0.5, ax=axes[1])

axes[1].set_title('Classification Report Metrics')

axes[1].set_xlabel('Metrics')

axes[1].set_ylabel('Classes')

# Plot ROC curve

axes[2].plot(fpr, tpr, color='darkorange', lw=2, label='ROC curve (area = %0.2f)' % roc_auc)

axes[2].plot([0, 1], [0, 1], color='navy', lw=2, linestyle='--')

axes[2].set_xlim([0.0, 1.0])

axes[2].set_ylim([0.0, 1.05])

axes[2].set_xlabel('False Positive Rate')

axes[2].set_ylabel('True Positive Rate')

axes[2].set_title('Receiver Operating Characteristic (ROC) Curve')

axes[2].legend(loc='lower right')

# Adjust layout

plt.tight_layout()

plt.show()

Sample Validation 1

Sample Validation 2
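The ROC curve is built from continuous scores (such as predicted probabilities) rather than hard labels, and its area has a useful interpretation: the AUC equals the probability that a randomly chosen positive example is scored higher than a randomly chosen negative one, with ties counting as half. A small pure-Python sketch of that pairwise definition, using made-up labels and scores (`pairwise_auc` is a hypothetical helper; scikit-learn's `roc_auc_score` computes the same quantity far more efficiently):

```python
def pairwise_auc(y_true, y_score):
    # Split scores by true class (1 = positive, 0 = negative)
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    # Count positive-negative pairs ranked correctly; ties count as half
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical labels and predicted probabilities for the positive class
y_true = [1, 1, 1, 0, 0]
y_score = [0.9, 0.8, 0.4, 0.7, 0.3]
print(pairwise_auc(y_true, y_score))  # 5 of 6 pairs ranked correctly -> 0.8333...
```

This also shows why hard 0/1 predictions make a poor ROC input: they collapse every score into two values, so most pairs become ties.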

In this blog post, we explored the process of running and evaluating classification experiments in Natural Language Processing (NLP). We started by defining the problem and collecting a representative dataset, followed by thorough data preprocessing steps such as tokenization, normalization, stop-word removal, and stemming/lemmatization. We then converted the text into numerical features using TF-IDF and trained a Naive Bayes classifier. The model’s performance was evaluated using accuracy, precision, recall, F1 score, and the confusion matrix. We also discussed advanced techniques such as hyperparameter tuning, cross-validation, and model comparison to optimize and validate the model. Together, these steps highlight the importance of continuous evaluation and iteration in NLP projects for producing robust and reliable classification results.