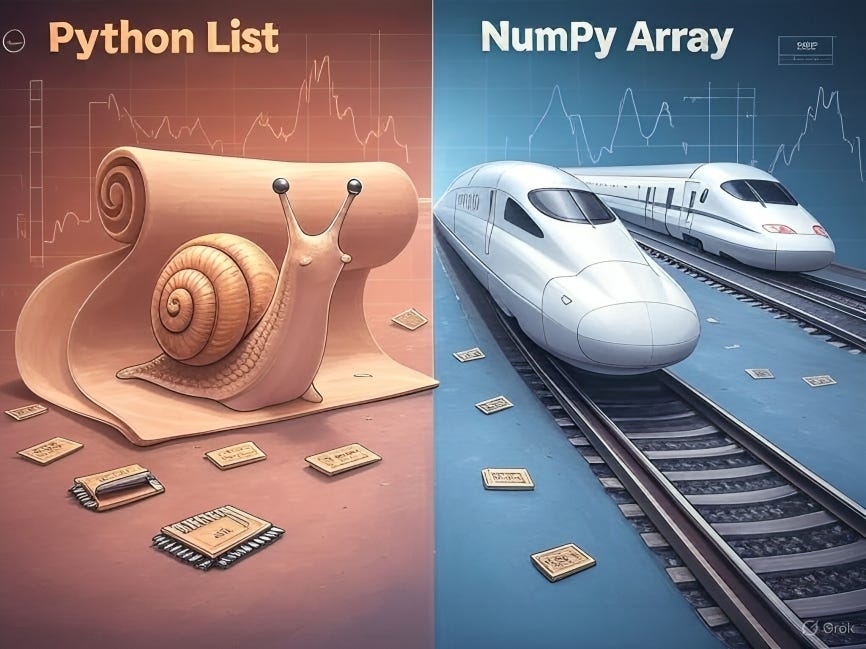

If you’re getting into data science or machine learning, you’ve probably heard this advice: “Stop using Python lists for heavy numerical work. Switch to NumPy arrays!”

But why exactly? Aren’t Python lists super flexible already?

Let’s break it down: casually, clearly, and with some simple code to prove the point.

Python lists are great for everyday tasks: you can toss in numbers, strings, even entire objects without thinking twice. Flexibility? 10/10.

But when it comes to numerical computing, like crunching huge datasets or training ML models, Python lists are painfully slow. Here’s why:

- Memory Inefficiency: A list doesn’t hold the values themselves in one neat block. It holds pointers to separate Python objects that can be scattered across memory. Chasing those pointers slows things down.

- No Vectorization: Python lists don’t support element-wise operations out of the box. You can’t just add two lists together and expect them to magically sum (`+` concatenates them instead); you have to loop manually. (Yawn.)

- Dynamic Typing Overhead: Lists can hold any data type, which sounds cool until you realize Python constantly checks types behind the scenes. That flexibility costs performance.
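To make those three points concrete, here is a small sketch using only the standard library. The variable names are made up for illustration, and the byte counts it prints depend on your Python version and platform, so treat them as a rough indication rather than exact figures.

```python
import sys

a = [1, 2, 3]
b = [10, 20, 30]

# `+` on lists concatenates; it does not add element by element.
concatenated = a + b
print(concatenated)  # [1, 2, 3, 10, 20, 30]

# Element-wise addition needs an explicit loop (here, a list comprehension).
summed = [x + y for x, y in zip(a, b)]
print(summed)  # [11, 22, 33]

# A list can mix types freely because it only stores references to objects.
mixed = [1, "two", 3.0, None]
type_names = [type(item).__name__ for item in mixed]
print(type_names)  # ['int', 'str', 'float', 'NoneType']

# sys.getsizeof(list) counts the list's own pointer table,
# not the integer objects those pointers refer to.
numbers = list(range(1000))
pointer_table_bytes = sys.getsizeof(numbers)
object_bytes = sum(sys.getsizeof(n) for n in numbers)
print(f"pointer table: {pointer_table_bytes} bytes, objects: {object_bytes} bytes")
```

Every integer is a full Python object with its own header, which is where the extra memory and the pointer chasing come from.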

Enter NumPy, the secret weapon for anyone serious about efficient number-crunching in Python.

Here’s what makes NumPy arrays so powerful:

- Blazing Fast: NumPy is built on C under the hood. That means array operations run in compiled code, at lightning speed compared to looping over vanilla Python lists.

- Memory Efficient: NumPy arrays store elements in a contiguous block of memory, so there’s no jumping around. Plus, the elements are all the same type, cutting down on overhead.

- Vectorized Operations: Want to add two arrays together? Multiply them? Apply a function to every element? No loops needed. NumPy handles it automatically.

- Tons of Built-in Math: You get access to a full suite of optimized mathematical functions ready to go: mean, sum, standard deviation, linear algebra, you name it.
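The points above can be sketched in a few lines. The `prices` and `quantities` arrays are invented sample data, not something from a real dataset; the calls themselves (`np.sqrt`, `.mean()`, `.std()`, `np.dot`, `.dtype`, `.nbytes`) are standard NumPy.

```python
import numpy as np

# Hypothetical sample data, just for illustration.
prices = np.array([10.0, 20.0, 30.0, 40.0])
quantities = np.array([1.0, 2.0, 3.0, 4.0])

# Vectorized arithmetic: element-wise multiply with no explicit loop.
totals = prices * quantities
print(totals)

# Apply a function to every element at once.
roots = np.sqrt(prices)
print(roots)

# All elements share one dtype and live in a single contiguous buffer.
print(prices.dtype)     # float64
print(prices.itemsize)  # 8 (bytes per element)
print(prices.nbytes)    # 32 (4 elements * 8 bytes)

# Built-in reductions.
print(prices.mean())  # 25.0
print(prices.sum())   # 100.0
print(prices.std())   # population standard deviation

# A taste of linear algebra: the dot product of the two vectors.
dot = np.dot(prices, quantities)
print(dot)  # 300.0
```

Note that `.std()` computes the population standard deviation by default; pass `ddof=1` if you want the sample version.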

Let’s see the difference when we scale things up to ten million additions!

```python
import time

# Create two large lists (10 million elements each)
list1 = list(range(10_000_000))
list2 = list(range(10_000_000))

# Measure time for addition
start = time.time()

# Manual element-wise addition
result = []
for i in range(len(list1)):
    result.append(list1[i] + list2[i])

end = time.time()

print(f"Python list addition took {end - start:.5f} seconds")
# Output: 1.96340 seconds
```

Notice:

We still have to manually loop through every element. And for ten million items, that time adds up.

```python
import time

import numpy as np

# Create two large arrays (10 million elements each)
array1 = np.arange(10_000_000)
array2 = np.arange(10_000_000)

# Measure time for vectorized addition
start = time.time()

# Vectorized addition
result = array1 + array2

end = time.time()

print(f"NumPy array addition took {end - start:.5f} seconds")
# Output: 0.44385 seconds
```

Boom:

One line, no loops, and more than four times faster in this run. NumPy flexes hard when the numbers get big.
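A single `time.time()` measurement can be noisy, so if you want to check this on your own machine, here is a hedged sketch using the standard `timeit` module. The helper names `add_with_comprehension` and `add_with_numpy` are made up for this example, and `N` is smaller than in the run above so it finishes quickly. Your timings will differ from the ones quoted in this post.

```python
import timeit

import numpy as np

N = 1_000_000  # smaller than the run above so the sketch finishes quickly

list1 = list(range(N))
list2 = list(range(N))
array1 = np.arange(N)
array2 = np.arange(N)


def add_with_comprehension():
    # Pure-Python element-wise addition.
    return [x + y for x, y in zip(list1, list2)]


def add_with_numpy():
    # Vectorized addition.
    return array1 + array2


# Time each function 5 times and keep the fastest run,
# which is the one least disturbed by other activity on the machine.
list_time = min(timeit.repeat(add_with_comprehension, number=1, repeat=5))
numpy_time = min(timeit.repeat(add_with_numpy, number=1, repeat=5))

print(f"List comprehension: {list_time:.5f} seconds")
print(f"NumPy addition:     {numpy_time:.5f} seconds")

# Sanity check: both approaches produce the same values.
same_values = add_with_comprehension() == add_with_numpy().tolist()
print(f"Same results: {same_values}")
```

Taking the minimum of several repeats is a common convention for micro-benchmarks, because background noise can only make a run slower, never faster.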

- Python lists = flexible, but slow and memory-heavy for numerical tasks.

- NumPy arrays = built for speed, efficiency, and huge numerical workloads.

If you’re serious about data science, ML, or just working with large numerical datasets, get comfortable with NumPy early. It’s one of the best skills you can add to your Python toolkit.

Ready to crunch some numbers like a pro? 🚀