In this project, a model was created to predict the species of a bird in a given image. The training dataset included 525 bird species. The resulting TensorFlow model had roughly 85% accuracy when predicting on new images.

The source code of the project, as well as the plots obtained, can be found in the GitHub repository.

The bird images were split 80% for training and 20% for validation, using Keras to create separate datasets. The images were resized to 160×160 pixels.

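import tensorflow as tf

from tensorflow.keras.utils import image_dataset_from_directory

BATCH_SIZE = 128

IMG_SIZE = (160, 160)

# `directory` is the path to the image folder, with one subfolder per species

train_dataset = image_dataset_from_directory(

directory,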

shuffle = True,

batch_size = BATCH_SIZE,

image_size = IMG_SIZE,

validation_split = 0.2,

subset = 'training',

seed = 42

)

validation_dataset = image_dataset_from_directory(

directory,

shuffle = True,

batch_size = BATCH_SIZE,

image_size = IMG_SIZE,

validation_split = 0.2,

subset = 'validation',

seed = 42

)

All images were labeled with an integer from 0 to 524 indicating which of the 525 classes a given image belongs to.
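For reference, when labels are inferred (the default), `image_dataset_from_directory` derives these integers from the class subdirectory names, sorted alphanumerically. A minimal pure-Python sketch of that mapping follows; the folder names are hypothetical placeholders, not taken from the project's dataset.

```python
# Sketch of how class folders map to integer labels.
# The folder names below are hypothetical examples.
class_dirs = ["BALD EAGLE", "ABBOTTS BABBLER", "ZEBRA DOVE", "BARN OWL"]

# Keras sorts the class directory names and numbers them from 0.
class_names = sorted(class_dirs)
label_of = {name: index for index, name in enumerate(class_names)}

for name, index in label_of.items():
    print(index, name)
```

With the real dataset, the same ordered list is available as `train_dataset.class_names`.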

We based our model on ResNet50. ResNet50 comes with its own preprocessing function to be applied to the input data, so our data does not need additional manual preprocessing.

IMG_SHAPE = IMG_SIZE + (3,)

modelo_base_resnet = tf.keras.applications.resnet50.ResNet50(

input_shape=IMG_SHAPE,

include_top=False,

weights='imagenet',

)

modelo_base_resnet.trainable = False

inputs = tf.keras.Input(shape=IMG_SHAPE)

x = tf.keras.applications.resnet50.preprocess_input(inputs)

x = modelo_base_resnet(x, training=False)
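To make the preprocessing step concrete: for ResNet50, `preprocess_input` converts images from RGB to BGR and subtracts the per-channel ImageNet means, without rescaling. The NumPy sketch below reproduces that arithmetic on a dummy image. It only illustrates what the function does and is not a replacement for it; the name `resnet50_style_preprocess` is made up for this example.

```python
import numpy as np

# ImageNet per-channel means in BGR order, as used by the "caffe"-style
# preprocessing that ResNet50 relies on.
IMAGENET_BGR_MEANS = np.array([103.939, 116.779, 123.68], dtype=np.float32)

def resnet50_style_preprocess(rgb_images):
    """Mimic ResNet50 preprocessing: RGB -> BGR, then zero-center each channel."""
    bgr = rgb_images[..., ::-1].astype(np.float32)  # reverse the channel axis
    return bgr - IMAGENET_BGR_MEANS

# Dummy batch: one 160x160 image where every pixel is pure red (255, 0, 0).
batch = np.zeros((1, 160, 160, 3), dtype=np.float32)
batch[..., 0] = 255.0

out = resnet50_style_preprocess(batch)
print(out.shape)     # same shape as the input batch
print(out[0, 0, 0])  # [blue, green, red] values of the first pixel after centering
```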

The model was imported without its top layers, so that we use only its convolutional layers. The following layers were added as the new top section:

- Flatten Layer

- Dropout Layer (0.5)

- Dense (300 units, ReLU activation)

- Batch Normalization Layer

- Output Layer (525 items, Linear activation)

from tensorflow.keras.layers import BatchNormalization, Dense, Dropout, Flatten

flatten = Flatten()(x)

drop = Dropout(0.5 )(flatten)

dense1 = Dense(300, activation="relu")(drop)

batch_norm_1 = BatchNormalization()(dense1)

outputs = Dense(525, activation='linear', name='prediccion')(batch_norm_1)

modelo_resnet = tf.keras.Model(inputs=inputs, outputs=outputs)

We could have used a softmax activation in the output layer. However, outputting raw logits through a linear activation and letting the loss function apply the softmax internally is numerically more stable, and it avoids running an explicit softmax on every forward pass.
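The relationship between logits and the loss can be sketched in plain Python. The example computes sparse categorical cross-entropy for one sample directly from logits using the log-sum-exp trick, which is the idea behind `from_logits=True`. The helper names are illustrative, and this is not TensorFlow's actual implementation.

```python
import math

def cross_entropy_from_logits(logits, label):
    """Cross-entropy for one sample, computed straight from raw logits."""
    m = max(logits)  # subtracting the max keeps exp() from overflowing
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]

def cross_entropy_via_softmax(logits, label):
    """Naive route: explicit softmax first, then the negative log-probability."""
    exps = [math.exp(z) for z in logits]
    return -math.log(exps[label] / sum(exps))

small = [2.0, 1.0, 0.1]
print(cross_entropy_from_logits(small, 0))
print(cross_entropy_via_softmax(small, 0))  # same value up to rounding

large = [1000.0, 990.0, 980.0]
print(cross_entropy_from_logits(large, 0))  # still finite
try:
    cross_entropy_via_softmax(large, 0)
except OverflowError:
    print("naive softmax overflowed")
```

Because the model outputs logits, class probabilities at prediction time require an explicit `tf.nn.softmax` on the output; taking the `argmax` of the logits already gives the predicted class.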

The final model was compiled with an Adam optimizer and a learning rate of 0.001. The loss function was sparse categorical cross-entropy computed from logits (since the output layer uses a linear activation instead of a softmax).

Finally, accuracy was the metric tracked across the epochs.

modelo_resnet.compile(

optimizer = tf.keras.optimizers.Adam(learning_rate=0.001),

loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics = ['accuracy']

)

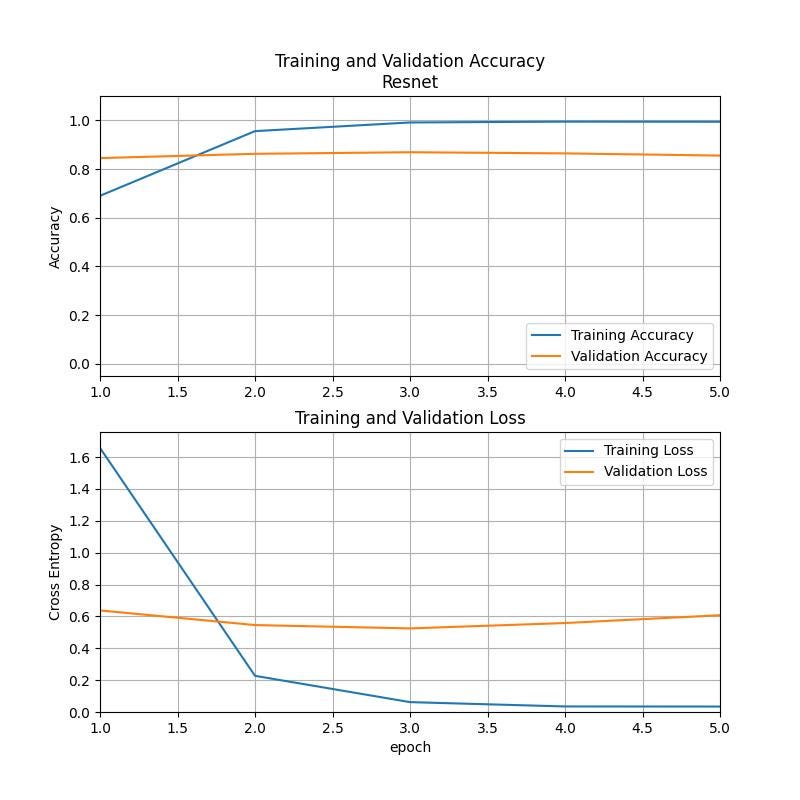

First, the model was trained for 5 epochs, comparing the training accuracy with the validation accuracy. We also compared the training loss with the validation loss.

Even though the training accuracy reached almost 100%, the validation accuracy stayed at around 85% by the end. The loss comparison shows the validation loss beginning to diverge slightly from the training loss. In earlier attempts, overfitting was a problem; after some hyperparameter tuning, this result was the best we obtained.

To try to improve our model, we fine-tuned it by “unfreezing” some of the last layers of the ResNet50 model. The original model has around 150 layers. For this stage, we kept the first 100 layers frozen and retrained the rest, together with our custom top layers.
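The unfreezing step can be sketched as follows. The helper `unfreeze_from` and the tiny stand-in model are assumptions made so that the snippet runs without downloading ResNet50 weights; they are not the project's code.

```python
import tensorflow as tf

def unfreeze_from(base_model, fine_tune_at):
    """Make base_model trainable except for its first `fine_tune_at` layers."""
    base_model.trainable = True
    for layer in base_model.layers[:fine_tune_at]:
        layer.trainable = False

# Stand-in for the pretrained base (hypothetical, four small layers).
stand_in = tf.keras.Sequential([
    tf.keras.Input(shape=(8,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(4),
])
stand_in.trainable = False   # frozen, as in the first training stage

unfreeze_from(stand_in, 2)   # keep the first 2 layers frozen
flags = [layer.trainable for layer in stand_in.layers]
print(flags)
```

In the project's terms this would correspond to `unfreeze_from(modelo_base_resnet, 100)`, followed by another call to `modelo_resnet.compile(...)`, since changes to `trainable` only take effect once the model is compiled again. Because the base model is called with `training=False`, its batch-normalization layers stay in inference mode even after unfreezing.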

The model ran for another 5 epochs, starting from where it left off after the initial training. The hyperparameters were exactly the same, and we again tracked the model’s accuracy and loss.

As the plot shows, fine-tuning kept everything at around the same values after these additional 5 epochs.

In this particular project, fine-tuning did not have a big impact on the model’s accuracy. However, we managed to create a new model using a preexisting one (ResNet50). Transfer learning proves to be a powerful way to create new models and applications.

ResNet50 was trained on the ImageNet dataset, so its convolutional layers were trained with images of a wide range of things, not just birds. Still, these layers learned to distinguish fundamental structures in images. With transfer learning, we can make use of these layers without retraining them and without spending additional time and effort.