So I worked on this dataset from climatetrace.org

A beginner-friendly walkthrough of a five-year, 40-plus feature dataset from Climate TRACE

Electricity generation is one of the largest sources of greenhouse gas (GHG) emissions worldwide. As the world races towards Net Zero targets, having reliable estimates of CO₂, methane and nitrous oxide emissions from power plants becomes essential for policymakers, energy companies and sustainability teams. During my master’s program, I set out to build a data-driven model that could predict GHG emissions based on the characteristics and operating conditions of electricity-producing facilities.

In this article, I’ll guide you through the project step by step: from understanding the Climate TRACE dataset, to cleaning and exploring the data, to engineering features, training several machine learning models, and finally interpreting the results. My goal is to share not only the technical process but also some lessons learned along the way, without assuming any advanced background. Whether you’re an international student or an early-career data enthusiast, I hope this report helps you feel more confident tackling real-world environmental data problems.

Climate TRACE and Data Sources

Climate TRACE is an open-source initiative that uses satellite imagery, ground sensors and AI to estimate emissions from industrial and power-generation sources globally. For my project, I focused on the electricity-generation subset covering the years 2020 through 2024. This Australia-centric slice includes data from coal and gas plants, giving a representative view of fossil-fuel-based generation.

Size and Scope

- Rows: ~7,300 plant-hour combinations

- Columns: 47 original features

- Target variable: Emissions quantity (in metric tonnes of CO₂-equivalent)

- Plant types: Coal and gas only, to keep the scope focused on fossil fuels

- Time span: Five full years, allowing me to capture seasonal and long-term trends

I grouped the raw features into four broad categories for clarity:

Plant Attributes

- Installed capacity (MW)

- Design efficiency

- Fuel type indicators (coal vs. gas)

Operational Metrics

- Thermal output or “activity” (MWh)

- Capacity factor (ratio of actual to potential output)

- Emission factor estimates

Temporal & Location Data

- Start and end timestamps for each measurement

- Latitude and longitude of each plant

- Derived calendar fields (year, month)

Ancillary & Environmental

- Ambient temperature and humidity proxies

- Regional cluster assignments (created via K-means)

- Miscellaneous site characteristics

Having such a diverse set of features helps capture non-linear relationships: for example, how emissions intensity changes with ramp-up speed, or how ambient conditions affect combustion efficiency.

Initial Cleaning Steps

- Remove duplicates & irrelevant columns. Many identifier fields (e.g., source IDs, reporting entity names) were dropped because they didn’t carry predictive signal.

- Handle missing values. I used a combination of median imputation for numeric gaps and “unknown” flags for categorical blanks.

- Filter zero emissions. Rows reporting zero emissions were excluded to avoid skewing distributions; after all, we wanted to model actual emission events.

After cleaning, the dataset shrank slightly to around 6,800 valid rows and 40 core features.
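The three cleaning steps above could be sketched in pandas roughly as follows. The column names (`source_id`, `reporting_entity`, `capacity_mw`, `fuel_type`, `emissions_quantity`) and the tiny inline frame are illustrative assumptions, not the actual Climate TRACE schema.

```python
import numpy as np
import pandas as pd

# Tiny made-up frame standing in for the raw data (hypothetical columns).
raw = pd.DataFrame({
    "source_id": [1, 1, 2, 3, 4],
    "reporting_entity": ["A", "A", "B", "C", "D"],
    "capacity_mw": [500.0, 500.0, np.nan, 1200.0, 800.0],
    "fuel_type": ["coal", "coal", None, "gas", "coal"],
    "emissions_quantity": [1000.0, 1000.0, 250.0, 0.0, 640.0],
})

def clean(df: pd.DataFrame) -> pd.DataFrame:
    # 1. Drop exact duplicate rows, then identifier columns with no signal.
    out = df.drop_duplicates()
    out = out.drop(columns=["source_id", "reporting_entity"])
    # 2. Median-impute numeric gaps; flag categorical blanks as "unknown".
    num_cols = out.select_dtypes(include="number").columns
    out[num_cols] = out[num_cols].fillna(out[num_cols].median())
    cat_cols = out.select_dtypes(exclude="number").columns
    out[cat_cols] = out[cat_cols].fillna("unknown")
    # 3. Keep only rows with strictly positive emissions.
    out = out[out["emissions_quantity"] > 0]
    return out.reset_index(drop=True)

cleaned = clean(raw)
print(cleaned)
```

In this toy frame, one duplicate row and one zero-emission row are removed, leaving three rows.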

Analyzing Distributions

- Raw emissions: Highly skewed toward low values, with a heavy right tail.

- Activity & capacity: Also skewed, as a few large plants dominate total output.

- Feature correlations: A quick Pearson correlation heatmap showed strong associations (|r| > 0.6) between emissions and both activity and capacity factor.

To address skewness, I took the natural logarithm of the target variable (log(1 + emissions)). The log-transform made the emission distribution much more symmetric, which is ideal for many regression algorithms.

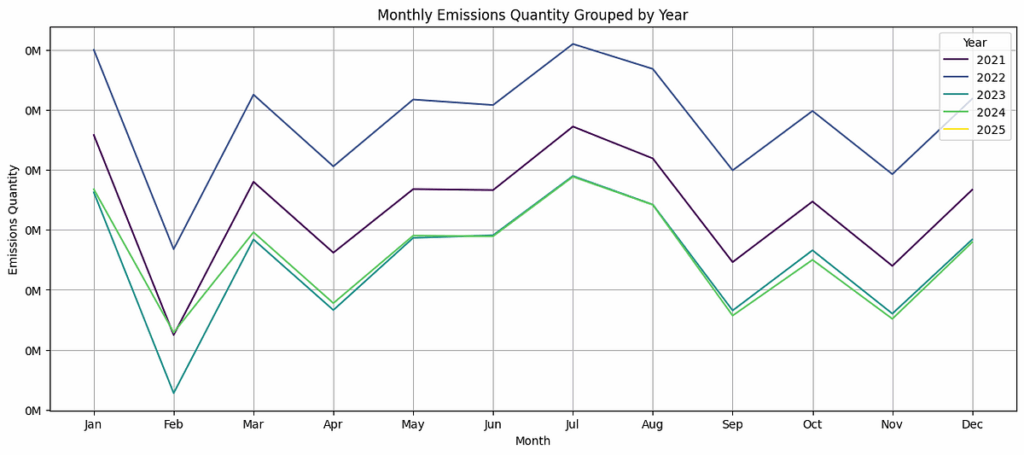
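For readers new to the log(1 + x) transform, here is a minimal sketch with made-up emission values. NumPy's `log1p` and `expm1` are exact inverses of each other, so predictions made on the log scale can be mapped back to tonnes.

```python
import numpy as np

# Made-up emission values spanning several orders of magnitude.
emissions = np.array([10.0, 120.0, 950.0, 48_000.0])

y_log = np.log1p(emissions)   # log(1 + emissions), the modelling target
recovered = np.expm1(y_log)   # inverse transform back to the raw scale

print(y_log.round(3))
print(recovered.round(3))
```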

- Time-series plots of monthly total emissions revealed clear seasonal peaks in winter months (likely due to increased demand and boiler inefficiencies).

- Scatter plots between emissions and activity showed a roughly linear trend on the log scale, but with heteroskedasticity, which motivated trying tree-based methods alongside simple linear models.

- Boxplots by fuel type showed that, on average, gas plants emitted less per unit of activity than coal plants, but with substantial overlap.

These plots helped me form hypotheses, such as “coal plants may have higher baseline emissions” and “regional clusters may capture unseen factors like grid constraints or local regulations.”

Statistical Feature Screening

To reduce dimensionality and remove noise:

- Correlation testing: I ranked numeric features by their absolute correlation with the log-emissions target.

- ANOVA for categorical fields: I tested whether site-type categories (e.g., sub-fuel classifications) showed significant mean differences in emissions.

Features with p-values above 0.05 (i.e., no significant relationship) were dropped, streamlining the model input.
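A screening pass like this might look as follows, using `scipy.stats.pearsonr` for numeric features and a one-way ANOVA (`scipy.stats.f_oneway`) for a categorical one. The data is synthetic, and the names `log_activity`, `noise_feature` and `sub_fuel` are hypothetical stand-ins for real columns.

```python
import numpy as np
import pandas as pd
from scipy import stats

# Synthetic data: one informative numeric feature, one pure-noise feature,
# and a categorical field that shifts the target (all names hypothetical).
rng = np.random.default_rng(0)
n = 300
sub_fuel = rng.choice(["black_coal", "brown_coal", "gas"], size=n)
shift = pd.Series(sub_fuel).map(
    {"black_coal": 0.3, "brown_coal": 0.5, "gas": -0.4}
).to_numpy()
df = pd.DataFrame({
    "log_activity": rng.normal(10.0, 0.5, size=n),
    "noise_feature": rng.normal(size=n),
    "sub_fuel": sub_fuel,
})
df["log_emissions"] = df["log_activity"] + shift + rng.normal(scale=0.2, size=n)

def screen_numeric(data, cols, target, alpha=0.05):
    """Rank numeric columns by |Pearson r| and mark those with p < alpha."""
    rows = []
    for col in cols:
        r, p = stats.pearsonr(data[col], data[target])
        rows.append({"feature": col, "abs_r": abs(r), "p_value": p, "keep": p < alpha})
    ranked = pd.DataFrame(rows).sort_values("abs_r", ascending=False)
    return ranked.reset_index(drop=True)

def anova_p_value(data, cat_col, target):
    """One-way ANOVA: do the target means differ across categories?"""
    groups = [g[target].to_numpy() for _, g in data.groupby(cat_col)]
    return stats.f_oneway(*groups).pvalue

numeric_report = screen_numeric(df, ["log_activity", "noise_feature"], "log_emissions")
p_cat = anova_p_value(df, "sub_fuel", "log_emissions")
print(numeric_report)
print("ANOVA p-value for sub_fuel:", p_cat)
```

A p-value cutoff is a blunt filter: with thousands of rows, even weak relationships can pass, so the correlation ranking is worth reading alongside it.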

Creating New Predictors

- Calendar features: Extracted year and month from timestamps to capture long-term trends and seasonality.

- Load ratio: Computed as activity ÷ capacity, measuring how heavily a plant was run relative to its maximum.

- Regional clusters: Applied K-means on latitude/longitude to group plants into five geographic clusters (e.g., Hunter Valley vs. Mt. Piper). This allowed the model to learn region-specific factors without overfitting to exact coordinates.
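The three derived predictors can be sketched as below. The frame, its column names and the coordinates are made up for illustration, and the toy example uses two clusters instead of five because it only has four rows.

```python
import pandas as pd
from sklearn.cluster import KMeans

# Made-up rows; column names and coordinates are illustrative only.
plants = pd.DataFrame({
    "start_time": pd.to_datetime(
        ["2020-01-01", "2021-07-01", "2022-03-01", "2023-11-01"]
    ),
    "activity_mwh": [300_000.0, 450_000.0, 120_000.0, 200_000.0],
    "capacity_mw": [1000.0, 1000.0, 500.0, 500.0],
    "lat": [-32.4, -32.5, -38.2, -38.3],
    "lon": [151.0, 150.9, 146.4, 146.5],
})

# Calendar features from the measurement start timestamp.
plants["year"] = plants["start_time"].dt.year
plants["month"] = plants["start_time"].dt.month

# Load ratio as described in the text: activity divided by capacity.
# (Dividing by capacity * hours in the period would make it dimensionless.)
plants["load_ratio"] = plants["activity_mwh"] / plants["capacity_mw"]

# Geographic clusters from coordinates (2 clusters for this toy frame).
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0)
plants["region_cluster"] = kmeans.fit_predict(plants[["lat", "lon"]])

print(plants[["year", "month", "load_ratio", "region_cluster"]])
```

Cluster labels are arbitrary integers, so they are best treated as categories rather than as an ordered numeric feature.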

Scaling and Encoding

- Numeric scaling: StandardScaler was applied to features like capacity and activity to center them at zero with unit variance, which is especially important for algorithms like SVR.

- One-hot encoding: Fuel-type indicators (coal, gas) became binary flags so tree-based and linear models could use them directly.

After engineering, my final feature set comprised about 30 well-chosen variables that balanced predictive power with interpretability.
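One common way to combine both steps in scikit-learn is a `ColumnTransformer`. This is a sketch with made-up values and hypothetical column names, not necessarily how the original pipeline was wired.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Made-up feature frame (hypothetical column names).
features = pd.DataFrame({
    "capacity_mw": [500.0, 1200.0, 800.0, 300.0],
    "activity_mwh": [2.1e6, 6.0e6, 3.5e6, 0.9e6],
    "fuel_type": ["coal", "gas", "coal", "gas"],
})

preprocess = ColumnTransformer(
    transformers=[
        # Center numeric columns at zero with unit variance.
        ("num", StandardScaler(), ["capacity_mw", "activity_mwh"]),
        # Turn fuel type into one binary column per category.
        ("cat", OneHotEncoder(handle_unknown="ignore"), ["fuel_type"]),
    ],
    sparse_threshold=0,  # always return a dense array
)

# In a real pipeline, fit on the training split only to avoid leakage.
X = preprocess.fit_transform(features)
print(X.round(2))
```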

Train-Validation-Test Split

I split the data into:

- Training: 60%

- Validation: 20%

- Test: 20%

This three-way split helped me tune hyperparameters on the validation set and then report final performance on unseen test data.
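scikit-learn has no single call for a three-way split, so a common approach is to call `train_test_split` twice. The sketch below uses placeholder arrays; the 0.25 in the second call is 20% of the full data expressed as a share of the remaining 80%.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Placeholder data: 100 rows, one feature.
X = np.arange(100).reshape(-1, 1)
y = np.arange(100, dtype=float)

# First carve off 20% as the held-out test set.
X_rest, X_test, y_rest, y_test = train_test_split(
    X, y, test_size=0.20, random_state=42
)
# Then take 25% of the remaining 80% as validation (0.25 * 0.80 = 0.20).
X_train, X_val, y_train, y_val = train_test_split(
    X_rest, y_rest, test_size=0.25, random_state=42
)

print(len(X_train), len(X_val), len(X_test))
```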

Algorithms Compared

- Linear Regression (baseline)

- Elastic Net (linear model with L1/L2 regularization)

- Random Forest Regressor

- Support Vector Regressor (SVR) with RBF kernel

- XGBoost Regressor

Hyperparameter Tuning

Using grid search (for Elastic Net alpha and l1_ratio; SVR C and epsilon; RF tree depth and leaf size; XGBoost learning rate and tree count), I optimized each model’s settings based on mean squared error (MSE) on the validation set. I also applied 5-fold cross-validation to guard against overfitting.
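As an illustration of the tuning loop for one of the models, here is a sketch of a grid search over Elastic Net's `alpha` and `l1_ratio` with 5-fold cross-validation and MSE scoring. The data comes from `make_regression` and the grid values are arbitrary assumptions, not the ones used in the project.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import ElasticNet
from sklearn.model_selection import GridSearchCV

# Synthetic regression data standing in for the engineered features.
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)

# Arbitrary example grid; real ranges depend on the data.
param_grid = {
    "alpha": [0.01, 0.1, 1.0],
    "l1_ratio": [0.2, 0.5, 0.8],
}

search = GridSearchCV(
    estimator=ElasticNet(max_iter=10_000),
    param_grid=param_grid,
    scoring="neg_mean_squared_error",  # scikit-learn maximizes, hence the sign
    cv=5,
)
search.fit(X, y)

print("best params:", search.best_params_)
print("best CV MSE:", -search.best_score_)
```

The same pattern applies to the other models by swapping the estimator and grid, for example `C` and `epsilon` for `SVR`, or `max_depth` and `min_samples_leaf` for `RandomForestRegressor`.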

Performance Metrics

- RMSE (root mean squared error) on the log scale: gives interpretable units after exponentiating.

- R²: share of variance in log-emissions explained by the model.

- MAE as a robustness check, though RMSE penalizes large errors more heavily, which is important when underestimating peaks could mislead policy decisions.

Key takeaway: Both tree-based models, Random Forest and especially XGBoost, delivered near-perfect fits (R² > 0.995). XGBoost’s RMSE of 0.02 on the log scale corresponds to typical prediction errors of about 2% in raw emission values.
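To make the link between log-scale RMSE and percentage error concrete: an error of ε on the log scale corresponds to a multiplicative factor of exp(ε) on (1 + emissions), so ε = 0.02 is roughly a 2% relative error. The sketch below computes the three metrics on made-up predictions and performs that conversion. The arrays are illustrative, not project results.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

# Made-up log-scale targets and predictions.
y_true_log = np.array([8.00, 9.50, 10.20, 11.70])
y_pred_log = np.array([8.02, 9.47, 10.21, 11.72])

rmse = np.sqrt(mean_squared_error(y_true_log, y_pred_log))
mae = mean_absolute_error(y_true_log, y_pred_log)
r2 = r2_score(y_true_log, y_pred_log)

def log_error_to_relative(eps: float) -> float:
    """Relative error on the raw scale implied by a log-scale error eps."""
    return float(np.expm1(eps))

print(f"RMSE={rmse:.4f}  MAE={mae:.4f}  R2={r2:.4f}")
print(f"log RMSE 0.02 -> {log_error_to_relative(0.02):.2%} relative error")
```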

Model Interpretability

- SHAP values: I used SHAP (SHapley Additive exPlanations) to rank feature importance. The top five drivers were:

- Activity (MWh)

- Capacity factor

- Emission factor

- Month (seasonality)

- Fuel type (coal vs. gas)

- Visualizing SHAP distributions helped confirm that higher activity and lower efficiency (high emission factor) consistently pushed predictions upward.

- Residual analysis: Plotting residuals versus predictions showed no obvious patterns, indicating the model’s errors were well-behaved and not systematically biased for any subset of plants or seasons.
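The residual check can also be done numerically by grouping residuals by a subset of interest and looking for group means far from zero. This sketch covers only that part (SHAP needs a fitted model and the separate `shap` package), and the values are made up.

```python
import pandas as pd

# Made-up log-scale targets and predictions for two fuel types.
results = pd.DataFrame({
    "fuel_type": ["coal", "coal", "coal", "gas", "gas", "gas"],
    "y_true_log": [9.0, 10.0, 11.0, 7.0, 8.0, 9.0],
    "y_pred_log": [9.1, 9.9, 11.0, 7.0, 8.1, 8.9],
})
results["residual"] = results["y_true_log"] - results["y_pred_log"]

# A group mean far from zero would suggest systematic bias for that group.
summary = results.groupby("fuel_type")["residual"].agg(["mean", "std", "count"])
print(summary)
```

The same grouping works for month or region cluster to check for seasonal or geographic bias.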

To make the model accessible, I prototyped a Streamlit app that allows users to:

- Select a plant (by region and fuel type)

- Enter hypothetical activity levels or capacity upgrades

- View predicted emissions under different weather or demand scenarios

This live interface helps stakeholders explore “what-if” questions without writing code.

- Supporting Net Zero 2035: By predicting plant-level emissions with high accuracy, the model feeds directly into a Net Zero 2035 decarbonization roadmap:

- Operational monitoring: Early warning flags if a plant’s emissions are trending above expected levels, prompting maintenance checks.

- Regulatory compliance: Automated emissions estimates for carbon credit calculations, reducing manual audits.

- Investment planning: Quantifying the impact of capacity-factor improvements (e.g., via advanced turbines or digital controls) on emissions trajectories.
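As one possible shape for the operational-monitoring idea, the sketch below raises a flag when observed emissions exceed the model's expected value by more than a tolerance for several consecutive periods. The function name, the 10% tolerance and the three-period window are all hypothetical choices, not part of the original project.

```python
import pandas as pd

def flag_excess(observed: pd.Series, expected: pd.Series,
                tolerance: float = 0.10, window: int = 3) -> pd.Series:
    """True where observed > expected * (1 + tolerance) for `window` periods in a row."""
    excess = observed > expected * (1 + tolerance)
    # Rolling sum equals `window` only when every period in the window is in excess.
    return excess.astype(int).rolling(window).sum() == window

# Made-up monthly values for a single plant.
expected = pd.Series([100.0] * 6)
observed = pd.Series([100.0, 105.0, 112.0, 115.0, 120.0, 101.0])

flags = flag_excess(observed, expected)
print(flags.tolist())
```

Requiring several consecutive exceedances trades a slower alert for fewer false alarms from one-off spikes.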

- Data gaps: Climate TRACE’s estimates, while comprehensive, still rely on proxy inputs for some sites. Incorporating utility-reported data could improve accuracy further.

- Non-fossil sources: Expanding to include renewables (hydro, solar, wind) would allow a full-grid emissions picture.

- Real-time feeds: Linking live SCADA or weather APIs could turn the prototype into a continuous monitoring tool.

- Generalizability: Testing the model on other countries’ data would validate its applicability beyond Australia.

Building a GHG-prediction pipeline taught me how to navigate messy real-world data, balance feature richness with simplicity, and choose between fast linear models and powerful tree-based learners. Key lessons include:

- Always start with strong EDA. Understanding variable distributions and relationships guides all downstream steps.

- Feature engineering is as important as model choice. Deriving seasonality terms, load ratios and geographic clusters unlocked performance gains.

- Interpretability tools matter. SHAP and residual plots ensure you’re not blindly trusting “black-box” models.

- Keep user needs in mind. A live dashboard converts code into impact.

Thanks for reading. I’m still new to data science and eager to grow. Your honest feedback and suggestions would mean a lot as I continue learning and refining my approach. Feel free to let me know what resonated, what could be clearer, or any ideas for next steps!