Graph fusion can dramatically improve the efficiency of deep learning models, but its effectiveness depends on the structure of your model, the execution environment, and the framework/compiler being used. Here's when it delivers the most benefit, and when its impact may be limited.

1. Inference on Edge Devices or CPUs

Devices like phones, microcontrollers, and Raspberry Pi have limited memory bandwidth and compute power. Fusion reduces kernel launches and memory traffic, which is crucial on such constrained hardware.

2. Large Models with Repeated Blocks

Models like ResNet, MobileNet, or ViT stack many repeated blocks (for example, Conv → BN → ReLU in the convolutional networks). Fusion applies uniformly across these patterns, so the performance benefit compounds.
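To make the Conv → BN → ReLU case concrete, here is a minimal sketch of batch norm folding in plain Python. It uses a dense layer as a stand-in for the convolution (both are linear, so the algebra is the same), and every function name and parameter value below is a hypothetical chosen for illustration, not taken from any framework.

```python
import math

def linear(x, weight, bias):
    """Dense layer: y[j] = sum_i weight[j][i] * x[i] + bias[j]."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weight, bias)]

def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode batch norm with fixed running statistics."""
    return [g * (v - m) / math.sqrt(s + eps) + b
            for v, g, b, m, s in zip(y, gamma, beta, mean, var)]

def relu(y):
    return [max(0.0, v) for v in y]

def fold_batch_norm(weight, bias, gamma, beta, mean, var, eps=1e-5):
    """Fold the BN scale and shift into the preceding layer's parameters."""
    scale = [g / math.sqrt(s + eps) for g, s in zip(gamma, var)]
    fused_weight = [[w * sc for w in row] for row, sc in zip(weight, scale)]
    fused_bias = [(b - m) * sc + be
                  for b, m, sc, be in zip(bias, mean, scale, beta)]
    return fused_weight, fused_bias

# Hypothetical toy parameters: 3 inputs, 2 output channels.
weight = [[0.5, -1.0, 2.0], [1.5, 0.25, -0.75]]
bias = [0.1, -0.2]
gamma, beta = [1.2, 0.8], [0.05, -0.1]
mean, var = [0.3, -0.4], [0.9, 1.6]
x = [1.0, 2.0, -1.0]

# Three separate ops versus one folded layer followed by ReLU.
unfused = relu(batch_norm(linear(x, weight, bias), gamma, beta, mean, var))
fused_weight, fused_bias = fold_batch_norm(weight, bias, gamma, beta, mean, var)
fused = relu(linear(x, fused_weight, fused_bias))
```

The two paths agree up to floating-point rounding. The folded version does the batch norm arithmetic once, ahead of time, rather than on every forward pass.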

3. Pointwise Operation Chains

Transformers and MLPs often contain sequences of element-wise ops. Fusing them into a single kernel reduces launch overhead and avoids materializing unnecessary intermediate tensors.
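The difference can be sketched in plain Python. This is only an analogy, since a real compiler would emit a single CPU or GPU kernel, and the add → tanh → scale chain and the function names are assumptions made up for the example.

```python
import math

def unfused_chain(x, bias, scale):
    """Three separate passes, each materializing a full-size list."""
    added = [v + b for v, b in zip(x, bias)]      # intermediate 1
    activated = [math.tanh(v) for v in added]     # intermediate 2
    return [v * scale for v in activated]         # final output

def fused_chain(x, bias, scale):
    """One pass: each element goes through all three ops in turn,
    so no full-size intermediate is ever stored."""
    return [math.tanh(v + b) * scale for v, b in zip(x, bias)]

x = [0.5, -1.0, 2.0, 0.0]
bias = [0.1, 0.2, -0.3, 0.4]

unfused_out = unfused_chain(x, bias, scale=2.0)
fused_out = fused_chain(x, bias, scale=2.0)
```

Both functions compute the same values. The fused version reads each input once and writes each output once, which is the memory-traffic saving that fusion targets.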

4. Exported or Compiled Models

If you export your model using TorchScript, ONNX, or TensorFlow Lite, fusion is often applied as part of the optimization pass, making deployment faster without any model changes.

5. Latency-Critical Applications

In real-time systems (e.g., robotics, AR, recommendation engines), shaving off even milliseconds of latency matters. Fusion can provide quick wins without redesigning the model.

1. Dynamic Control Flow

If your model includes if/while statements or data-dependent logic, fusion may not be applied. Compilers often require static graphs to match fusion patterns reliably.
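A toy pattern matcher shows why control flow gets in the way. This sketch treats a graph as a flat list of op names and greedily fuses exact Conv, BN, ReLU runs. Real compilers match on graph structure rather than on name lists, so the representation, the `If` node, and the function name here are simplifying assumptions.

```python
FUSION_PATTERN = ("Conv", "BN", "ReLU")

def fuse_static_sequence(ops, pattern=FUSION_PATTERN):
    """Greedily replace each exact occurrence of `pattern` with one fused op."""
    fused, i = [], 0
    while i < len(ops):
        if tuple(ops[i:i + len(pattern)]) == pattern:
            fused.append("Fused" + "".join(pattern))
            i += len(pattern)
        else:
            fused.append(ops[i])
            i += 1
    return fused

# A fully static graph: both blocks match the pattern.
static_graph = ["Conv", "BN", "ReLU", "Conv", "BN", "ReLU"]

# A hypothetical data-dependent branch sits between Conv and BN in the first block.
dynamic_graph = ["Conv", "If", "BN", "ReLU", "Conv", "BN", "ReLU"]

static_result = fuse_static_sequence(static_graph)
dynamic_result = fuse_static_sequence(dynamic_graph)
```

In the static graph both blocks collapse into fused ops. In the second graph the branch interrupts the first block, so only the second block is fused.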

2. Already-Bound Memory and I/O Bottlenecks

If your model's performance is limited by I/O, disk access, or network latency (e.g., in large-scale distributed inference), fusion might not make a noticeable dent.
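A quick back-of-the-envelope check using Amdahl's law illustrates the point. The fractions and the 2x compute speedup below are hypothetical numbers chosen for illustration, not measurements.

```python
def end_to_end_speedup(compute_fraction, compute_speedup):
    """Amdahl's law: overall speedup when only the compute share gets faster."""
    remaining = 1.0 - compute_fraction
    return 1.0 / (remaining + compute_fraction / compute_speedup)

# Hypothetical pipeline where model compute is only 10% of total latency.
io_bound = end_to_end_speedup(compute_fraction=0.10, compute_speedup=2.0)

# Hypothetical pipeline where model compute is 90% of total latency.
compute_bound = end_to_end_speedup(compute_fraction=0.90, compute_speedup=2.0)

print(f"I/O-bound pipeline:     {io_bound:.2f}x")
print(f"Compute-bound pipeline: {compute_bound:.2f}x")
```

Under these assumptions, the same 2x gain in model compute yields roughly a 5% end-to-end improvement in the I/O-bound case and about 1.8x in the compute-bound case.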

3. Small Models with Few Ops

For tiny models (e.g., simple MLPs with 2–3 layers), the overhead that fusion eliminates is already minimal. Gains may be negligible.

4. Training with Frequent Weight Updates

In training mode, batch norm uses live batch statistics, so it cannot be folded into the preceding layer as a constant, and some fused operations (especially with quantization) may not be numerically identical to their unfused counterparts. Fusion is usually more aggressive in inference.
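The batch norm point can be seen with a few lines of plain Python. The sketch below normalizes the same batch once with statistics computed from the batch itself (training behavior) and once with stored running statistics (inference behavior). The values and helper names are hypothetical.

```python
import math

def batch_stats(values):
    """Mean and (biased) variance of the current batch."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, var

def normalize(values, mean, var, eps=1e-5):
    return [(v - mean) / math.sqrt(var + eps) for v in values]

batch = [2.0, 4.0, 6.0, 8.0]
running_mean, running_var = 0.0, 1.0  # hypothetical stored statistics

# Training mode: statistics depend on the batch, so they change every step.
train_out = normalize(batch, *batch_stats(batch))

# Inference mode: statistics are constants, so they can be folded ahead of time.
eval_out = normalize(batch, running_mean, running_var)
```

Because the training-mode mean and variance are recomputed for every batch, there is no fixed scale and shift to fold into the previous layer. In inference mode the statistics are constants, which is what makes folding valid.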

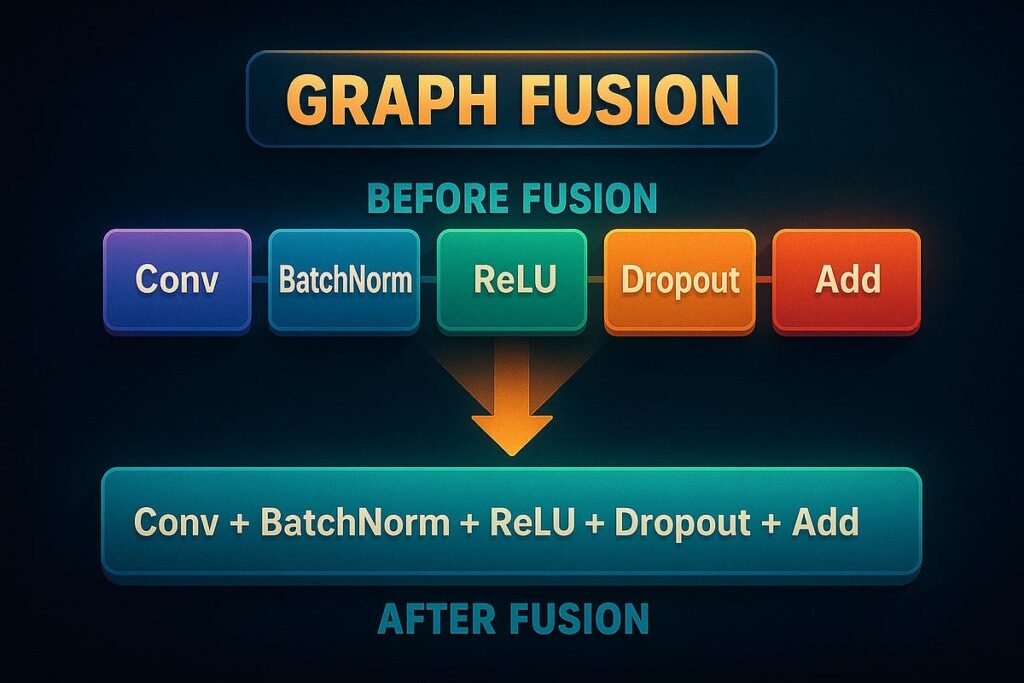

5. Ops with Side Effects

Certain operations like Dropout or custom loss functions can't always be fused, especially if they involve randomness or state.

6. Limited Fusion in Attention Blocks

In attention-based models, full fusion is often limited by operations like softmax and masking. However, earlier stages such as projection layers followed by activation functions are often fusible, especially if implemented in a standard way.