Large deep learning models, such as ChatGPT, Gemini, DeepSeek, and Grok, have made remarkable progress in artificial intelligence’s ability to understand and respond. However, their significant size consumes substantial computational resources, which in turn increases the cost of using them.

For this reason, companies are actively working to preserve the power of these models while reducing their size, both to lower costs and to make deployment easier. This is where knowledge distillation comes into play.

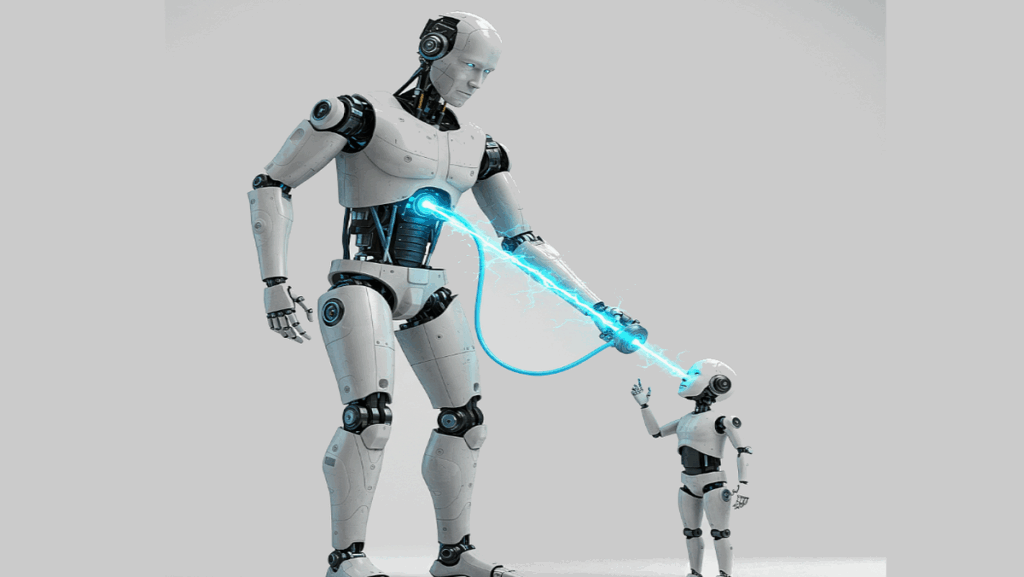

Knowledge distillation is the process of transferring knowledge from a large, complex model (the teacher) to a smaller, more efficient model (the student). Engineers build a smaller model and, instead of relying solely on the data the large model was trained on, they use the large model’s outputs to train the smaller one. This allows the smaller model to benefit from the implicit knowledge embedded within the larger model, which goes beyond what the raw training data alone conveys. The student learns to mimic the teacher’s responses rather than developing a deeper understanding independently, all while using computational resources more efficiently.
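To make the idea of training on the teacher’s outputs more concrete, here is a minimal sketch of one common way to set it up for a classification task: the student is penalized both for disagreeing with the teacher’s softened probabilities and for missing the true label. The article does not specify a loss function, so everything here is an assumption made for illustration, including the function names, the `temperature` and `alpha` parameters, and the example logits. Distilling a large language model may instead involve training the student on text generated by the teacher.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(student_logits, teacher_logits, true_label,
                      temperature=2.0, alpha=0.5):
    # Soft-target term: distance between the student's and the teacher's
    # softened distributions. Scaling by temperature**2 is a common convention
    # that keeps this term comparable in size to the hard-label term.
    teacher_soft = softmax(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    soft_loss = kl_divergence(teacher_soft, student_soft) * temperature ** 2

    # Hard-label term: ordinary cross-entropy against the ground-truth class.
    student_probs = softmax(student_logits)
    hard_loss = -math.log(student_probs[true_label])

    # alpha (an assumed hyperparameter) balances imitation against the true label.
    return alpha * soft_loss + (1 - alpha) * hard_loss

# Hypothetical logits for a single 3-class example.
teacher_logits = [4.0, 1.5, 0.2]
good_student = [3.8, 1.4, 0.3]   # close to the teacher
poor_student = [0.5, 2.5, 1.0]   # disagrees with the teacher

print(distillation_loss(good_student, teacher_logits, true_label=0))
print(distillation_loss(poor_student, teacher_logits, true_label=0))
```

The first printed loss is smaller than the second, because the first student’s predictions sit closer to both the teacher and the true label. In a real training loop, this loss would be minimized with respect to the student’s parameters while the teacher stays fixed.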

Companies use this technique to offer distilled artificial intelligence models that users can run directly on their own devices. When companies deploy these distilled models themselves, it also helps reduce costs and improve the response speed of AI applications for users.

Furthermore, because these models can run efficiently on user devices without a network connection or any data transfer over the internet, they help safeguard user privacy.

However, the distilled model (the student) will naturally not match the capabilities of the teacher model. Some knowledge is lost because of its smaller size, which may limit its ability to capture the full depth of knowledge possessed by the teacher. It may also struggle more with cases it has not been adequately trained on, leading to a reduced ability to generalize.

Moreover, training the distilled model to perform well can be a costly process and may not be worthwhile in many scenarios.

Despite these challenges, knowledge distillation is fundamental to the development, dissemination, and use of artificial intelligence. It makes the capabilities of the latest and most powerful AI models easier and faster to access using fewer resources. Consequently, researchers and companies continue to refine distillation techniques, aiming to improve the efficiency of distilled AI models while minimizing the knowledge gap relative to the teacher.