Let's explore the multi-classifier approach.

Objective

- Predict the price range (0 to 3, where 3 is high-end) from given smartphone specs

Method

- Analyze the distribution types of the input features

- Select classifiers

- Combine and finalize the results

- Evaluate the results

Dataset

Mobile Price Classification, Kaggle

- 3,000 samples

- 21 columns (plus an `id` column):

'battery_power', 'blue', 'clock_speed', 'dual_sim', 'fc', 'four_g', 'int_memory', 'm_dep', 'mobile_wt', 'n_cores', 'pc', 'px_height', 'px_width', 'ram', 'sc_h', 'sc_w', 'talk_time', 'three_g', 'touch_screen', 'wifi', 'price_range', 'id'

Visualizing the data

After removing the unnecessary column (`id`), we plot frequency histograms and quantile-quantile (Q-Q) plots against the normal distribution for each input feature.
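As a numeric counterpart to these plots, the sketch below computes, for each column, the histogram counts and the correlation r of the normal Q-Q fit returned by SciPy's `stats.probplot`. An r close to 1 suggests a roughly normal feature, and a column with only two distinct values is a binary candidate. `distribution_summary` is a hypothetical helper, and the demo frame is synthetic rather than the Kaggle data.

```python
import numpy as np
import pandas as pd
from scipy import stats


def distribution_summary(df: pd.DataFrame, bins: int = 10):
    """Per column: distinct-value count and normal Q-Q correlation r, plus histogram counts."""
    summary, hists = {}, {}
    for col in df.columns:
        values = df[col].to_numpy(dtype=float)
        # Frequency histogram: the numbers behind a histogram plot
        counts, _ = np.histogram(values, bins=bins)
        hists[col] = counts
        # probplot pairs sorted values with normal quantiles and fits a line;
        # r close to 1 means the Q-Q plot is nearly straight (roughly normal data)
        _, (_, _, r) = stats.probplot(values, dist="norm")
        summary[col] = {"n_unique": int(np.unique(values).size), "qq_r": float(r)}
    return pd.DataFrame.from_dict(summary, orient="index"), hists


# Synthetic stand-ins for a binary, a normal, and a uniform feature
rng = np.random.default_rng(0)
demo = pd.DataFrame({
    "binary_like": rng.integers(0, 2, size=1000),
    "normal_like": rng.normal(0.0, 1.0, size=1000),
    "uniform_like": rng.uniform(0.0, 1.0, size=1000),
})
summary, hists = distribution_summary(demo)
print(summary)
```

To draw the Q-Q plot itself, `stats.probplot` also accepts a `plot` argument, such as a Matplotlib `Axes`.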

After resampling, we obtained 250K data points per class.
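The resampling method is not described. One possible approach, sketched here as an assumption, is to bootstrap-upsample every class with scikit-learn's `resample`. `upsample_per_class` is a hypothetical helper, and the toy frame stands in for the real data.

```python
import numpy as np
import pandas as pd
from sklearn.utils import resample


def upsample_per_class(df: pd.DataFrame, target: str, n_per_class: int, seed: int = 42) -> pd.DataFrame:
    """Bootstrap-resample (with replacement) every class to exactly n_per_class rows."""
    parts = [
        resample(group, replace=True, n_samples=n_per_class, random_state=seed)
        for _, group in df.groupby(target)
    ]
    return pd.concat(parts, ignore_index=True)


# Tiny demo: 12 rows spread unevenly over 4 price classes
toy = pd.DataFrame({"ram": np.arange(12), "price_range": [0, 0, 0, 0, 0, 1, 1, 1, 2, 2, 3, 3]})
balanced = upsample_per_class(toy, "price_range", n_per_class=5)
print(balanced["price_range"].value_counts().sort_index())
```

On the real data this would be called as `upsample_per_class(df, 'price_range', 250_000)`. Because bootstrap upsampling duplicates rows, resampling before `train_test_split` can place identical rows in both the training and test sets, which tends to inflate test scores. Splitting first and resampling only the training portion avoids that.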

Creating train/test data

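```python
from sklearn.model_selection import train_test_split

# Separate the input features from the target label
X = df.drop('price_range', axis=1)
y = df['price_range']

# Hold out 10% of the data for testing
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.1, random_state=42)

print(X_train.shape, y_train.shape, X_test.shape, y_test.shape)
```

```
(898186, 20) (898186,) (99799, 20) (99799,)
```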

We'll train the following models, each matched to the distribution its input features follow given the class, P(x|y):

- GaussianNB

- BernoulliNB

- MultinomialNB
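For reference, these are the class-conditional likelihoods the three variants model, as given in the scikit-learn naive Bayes documentation. Each feature $x_i$ is assumed independent of the others given the class $y$:

$$
\begin{aligned}
\text{GaussianNB:}\quad & P(x_i \mid y) = \frac{1}{\sqrt{2\pi\sigma_y^2}} \exp\!\left(-\frac{(x_i - \mu_y)^2}{2\sigma_y^2}\right) \\
\text{BernoulliNB:}\quad & P(x_i \mid y) = P(i \mid y)\,x_i + \bigl(1 - P(i \mid y)\bigr)(1 - x_i) \\
\text{MultinomialNB:}\quad & P(\mathbf{x} \mid y) \propto \prod_i \theta_{yi}^{x_i}, \qquad \hat{\theta}_{yi} = \frac{N_{yi} + \alpha}{N_y + \alpha n} \\
\text{Prediction:}\quad & \hat{y} = \arg\max_y \; P(y)\, P(\mathbf{x} \mid y)
\end{aligned}
$$

Here $\mu_y$ and $\sigma_y^2$ are the per-class mean and variance of feature $i$, $P(i \mid y)$ is the probability that feature $i$ equals 1 in class $y$, $N_{yi}$ is the total count of feature $i$ in class $y$, $N_y = \sum_i N_{yi}$, $n$ is the number of features, and $\alpha$ is the smoothing parameter.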

First, we group the input features by distribution type: binary, multinomial, and Gaussian.

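```python
# Features that take only the values 0 and 1
binary = ['blue', 'dual_sim', 'four_g', 'three_g', 'touch_screen', 'wifi']

# Discrete, non-negative integer features
multinomial = ['fc', 'pc', 'sc_h', 'sc_w']

target = ['price_range']
# Placeholder: the original also excludes a `categorical` list whose contents are not shown
categorical = []

# Every remaining feature is treated as (approximately) Gaussian
gaussian = df.drop(columns=[*target, *binary, *categorical, *multinomial]).columns
```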

Train each NB model on its corresponding input features

```python
import numpy as np
from sklearn.metrics import accuracy_score, classification_report
from sklearn.naive_bayes import BernoulliNB, GaussianNB, MultinomialNB
from sklearn.preprocessing import MinMaxScaler


def train_nb_classifier(model, X_train, X_test, model_name):
    """Fit one NB model (using the global y_train/y_test), report accuracy, return predictions and probabilities."""
    model.fit(X_train, y_train)
    probabilities = model.predict_proba(X_test)
    # Pick the most probable class; map the column index back to its class label
    y_pred = model.classes_[np.argmax(probabilities, axis=1)]
    accuracy = accuracy_score(y_test, y_pred)
    print(f'--------- {model_name} ---------')
    print(f"Accuracy: {accuracy:.4f}")
    print(classification_report(y_test, y_pred))
    return y_pred, probabilities, model


# Gaussian: scale the continuous features (scaler fitted on training data only)
scaler = MinMaxScaler()
X_train_gaussian_scaled = scaler.fit_transform(X_train[gaussian])
X_test_gaussian_scaled = scaler.transform(X_test[gaussian])
y_pred_gnb, prob_gnb, gnb = train_nb_classifier(model=GaussianNB(), X_train=X_train_gaussian_scaled, X_test=X_test_gaussian_scaled, model_name='Gaussian')

# Bernoulli: binary features as-is
y_pred_bnb, prob_bnb, bnb = train_nb_classifier(model=BernoulliNB(), X_train=X_train[binary], X_test=X_test[binary], model_name='Bernoulli')

# Multinomial: non-negative integer features as-is
y_pred_mnb, prob_mnb, mnb = train_nb_classifier(model=MultinomialNB(), X_train=X_train[multinomial], X_test=X_test[multinomial], model_name='Multinomial')
```

Note that we only transformed the Gaussian features, to avoid distorting the other data types.

Combining results

We combine the results using a simple average and a weighted average of the predicted probabilities:

```python
# Combined (simple average). Each model's probability columns follow classes_ (0 to 3),
# so the argmax index equals the class label
prob_averaged = (prob_gnb + prob_bnb + prob_mnb) / 3
y_pred_averaged = np.argmax(prob_averaged, axis=1)
accuracy = accuracy_score(y_test, y_pred_averaged)
print('--------- Average ---------')
print(f"Averaged Probability Ensemble Accuracy: {accuracy:.4f}")
print(classification_report(y_test, y_pred_averaged))

# Combined (weighted average). The weights sum to 1, so each row still sums to 1
weight_gnb = 0.9  # higher weight for the Gaussian model
weight_bnb = 0.05
weight_mnb = 0.05
prob_weighted_average = (weight_gnb * prob_gnb + weight_bnb * prob_bnb + weight_mnb * prob_mnb)
y_pred_weighted = np.argmax(prob_weighted_average, axis=1)
accuracy_weighted = accuracy_score(y_test, y_pred_weighted)
print('--------- Weighted Average ---------')
print(f"Weighted Averaged Ensemble Accuracy: {accuracy_weighted:.4f}")
print(classification_report(y_test, y_pred_weighted))
```
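To see why the choice of weights matters, here is a toy illustration with three hypothetical probability rows (not taken from the dataset). Under equal weights, the Bernoulli and Multinomial rows outvote the Gaussian one; with the 0.9/0.05/0.05 weights, the Gaussian model decides. `combine_probabilities` is a hypothetical helper built on `np.average`.

```python
import numpy as np


def combine_probabilities(probs, weights=None):
    """(Weighted) average of per-model probability arrays, plus the argmax class per row."""
    stacked = np.stack(probs)  # shape: (n_models, n_samples, n_classes)
    combined = np.average(stacked, axis=0, weights=weights)  # weights=None gives a plain mean
    return combined, np.argmax(combined, axis=1)


# One hypothetical test row: probabilities over classes 0-3 from each model
p_gnb = np.array([[0.1, 0.6, 0.2, 0.1]])
p_bnb = np.array([[0.4, 0.1, 0.3, 0.2]])
p_mnb = np.array([[0.5, 0.1, 0.2, 0.2]])

avg, pred_avg = combine_probabilities([p_gnb, p_bnb, p_mnb])
wavg, pred_w = combine_probabilities([p_gnb, p_bnb, p_mnb], weights=[0.9, 0.05, 0.05])
print(pred_avg, pred_w)  # [0] [1]
```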

Stacking

Optionally, we can stack the results, using logistic regression as the meta-learner.

Logistic regression (LR) is a common choice of meta-learner because of its simplicity, its interpretability, its effectiveness with probability inputs from base classifiers, and its built-in regularization.

```python
from sklearn.linear_model import LogisticRegression

# Test meta-features: the base models' test probabilities side by side (4 + 4 + 4 columns)
X_meta_test = np.hstack((prob_gnb, prob_bnb, prob_mnb))

# Training meta-features: base-model probabilities on the training data
prob_train_gnb = gnb.predict_proba(X_train_gaussian_scaled)
prob_train_bnb = bnb.predict_proba(X_train[binary])
prob_train_mnb = mnb.predict_proba(X_train[multinomial])
X_meta_train = np.hstack((prob_train_gnb, prob_train_bnb, prob_train_mnb))

# The original used solver='liblinear' (one-vs-rest); newer scikit-learn releases
# deprecate liblinear for multiclass problems, so the default solver is used here
meta_learner = LogisticRegression(random_state=42, max_iter=1000)
meta_learner.fit(X_meta_train, y_train)

y_pred_stacked = meta_learner.predict(X_meta_test)
prob_stacked = meta_learner.predict_proba(X_meta_test)
accuracy_stacked = accuracy_score(y_test, y_pred_stacked)

print('--------- Meta learner (logistic regression) ---------')
print(f"Stacked Ensemble Accuracy: {accuracy_stacked:.4f}")
print(classification_report(y_test, y_pred_stacked))
```

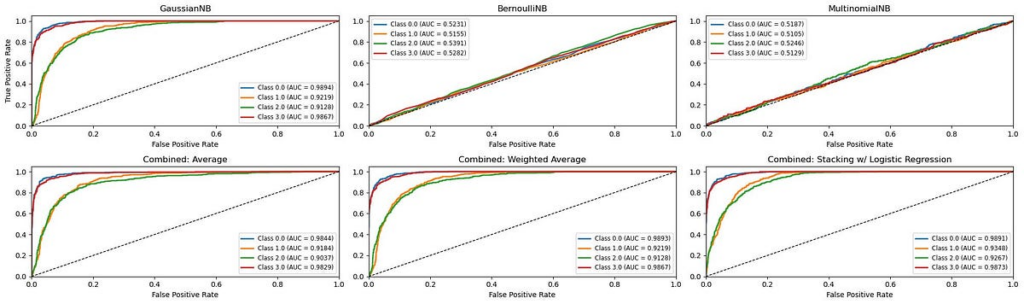

Stacking performs best, whereas the Multinomial and Bernoulli models on their own were not effective predictors of the final outcome.

This is mainly due to the argmax operation, in which a model picks the single class with the highest probability as its final decision.

In the process, the rest of each probability distribution produced by the Multinomial and Bernoulli models is discarded. As a result, these individual models are not effective on their own at producing a single, highly confident prediction.
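A small illustration of what argmax throws away, using two hypothetical probability vectors: both map to the same class, although one is nearly a coin flip and the other is confident. `top_margin` is a hypothetical helper measuring the gap between the two most likely classes, which is the kind of signal a meta-learner can still use.

```python
import numpy as np


def top_margin(p):
    """Gap between the largest and second-largest probability in a vector."""
    top_two = np.sort(p)[-2:]
    return top_two[1] - top_two[0]


# Two hypothetical probability vectors over price classes 0-3
unsure = np.array([0.30, 0.28, 0.22, 0.20])
confident = np.array([0.90, 0.05, 0.03, 0.02])

# argmax returns the same class for both...
print(np.argmax(unsure), np.argmax(confident))  # 0 0
# ...even though their confidence differs a lot
print(round(top_margin(unsure), 2), round(top_margin(confident), 2))  # 0.02 0.85
```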

Yet, when we combined the results with the meta-learner, it exploited the additional information in the Multinomial and Bernoulli probability distributions within the stacking ensemble.
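In the stacking code above, `X_meta_train` comes from base models scoring the very rows they were fitted on, so the meta-learner may learn from probabilities that are more confident than the ones it later sees on the test set. A common remedy, and the approach scikit-learn's `StackingClassifier` uses internally, is to train the meta-learner on out-of-fold probabilities from `cross_val_predict`. The sketch below swaps in that variant on synthetic data; `make_synthetic` and `oof_stacking` are hypothetical helpers, and the three feature groups are random stand-ins for the Gaussian, binary, and multinomial columns.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict, train_test_split
from sklearn.naive_bayes import BernoulliNB, GaussianNB, MultinomialNB
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler


def make_synthetic(n=2000, seed=0):
    """Hypothetical data: Gaussian, binary, and count feature groups that depend on a 4-class label."""
    rng = np.random.default_rng(seed)
    y = rng.integers(0, 4, size=n)
    X_gauss = rng.normal(loc=y[:, None], scale=1.5, size=(n, 3))
    X_bin = (rng.random((n, 2)) < 0.2 + 0.2 * y[:, None]).astype(int)
    X_count = rng.poisson(lam=1 + y[:, None], size=(n, 2))
    return [X_gauss, X_bin, X_count], y


def oof_stacking(feature_groups, y, cv=5, seed=42):
    """Stack three NB models with a logistic-regression meta-learner trained on out-of-fold probabilities."""
    idx_train, idx_test = train_test_split(
        np.arange(len(y)), test_size=0.2, random_state=seed, stratify=y
    )
    # The scaler sits inside a pipeline so each CV fold fits it on its own training rows
    base_models = [make_pipeline(MinMaxScaler(), GaussianNB()), BernoulliNB(), MultinomialNB()]
    meta_train, meta_test = [], []
    for model, X in zip(base_models, feature_groups):
        # Each training row is scored by a model fitted on the other folds only
        meta_train.append(
            cross_val_predict(model, X[idx_train], y[idx_train], cv=cv, method="predict_proba")
        )
        # Test rows are scored by the same model refitted on the whole training split
        meta_test.append(model.fit(X[idx_train], y[idx_train]).predict_proba(X[idx_test]))
    X_meta_train, X_meta_test = np.hstack(meta_train), np.hstack(meta_test)
    meta_learner = LogisticRegression(max_iter=1000, random_state=seed)
    meta_learner.fit(X_meta_train, y[idx_train])
    return meta_learner.score(X_meta_test, y[idx_test]), X_meta_train.shape


groups, labels = make_synthetic()
accuracy, meta_shape = oof_stacking(groups, labels)
print(f"Out-of-fold stacked accuracy: {accuracy:.4f}, meta-features: {meta_shape}")
```

On the real data, the three feature groups would be `X[gaussian]`, `X[binary]`, and `X[multinomial]` converted to NumPy arrays.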