Welcome back to my digital thought bubble, the place where tech meets “what am I doing again?” and somehow all of it turns into a blog post.

So here’s the deal: I’m currently interning (woohoo, real-world chaos unlocked), and my mentor gave me a task that sounded so innocent at first: “Hey, just explore different ways of implementing RAG and document them while you do it.”

That’s it.

Easy? No.

Terrifyingly open-ended? Completely.

And thus began the Great RAG Rabbit Hole.

Because the deeper I went, the more RAG felt like a secret society of frameworks, vector databases, embeddings, LLMs, and mysterious chains that all somehow talk to each other.

And me? I was just vibing with a blank Notion page and 10+ open tabs, praying I didn’t end up accidentally training a model on my Spotify Wrapped.

But I made it.

And now you’re getting the post that future-you can refer back to when RAG inevitably comes up in your ML/AI journey.

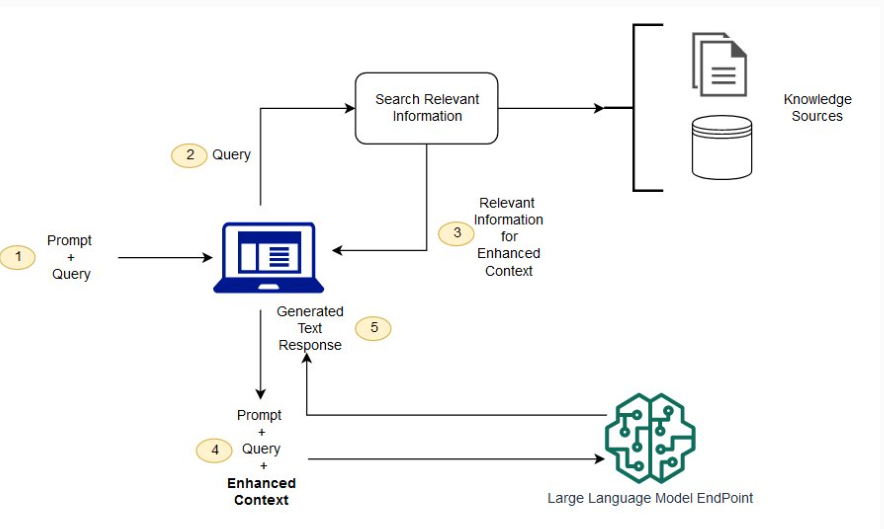

RAG = Retrieval-Augmented Generation.

Translation: you make your Large Language Model (LLM) a little less of a hallucinating storyteller and a little more of a fact-respecting librarian.

It’s like giving your AI a cheat sheet. Instead of making things up from its training data circa 2023 BC (Before ChatGPT), it retrieves real information from a knowledge base and generates its response based on that.

Retrieval.

Augmentation.

Generation.

(Basically: give it notes → it writes the essay.)

In plain English?

Step 1: You give the AI a question.

Step 2: It searches a knowledge base (documents, PDFs, databases, etc.) for relevant information.

Step 3: It uses that retrieved context to generate a more accurate answer.

Boom. Your LLM is no longer winging it from memory; it’s taking an open-book exam.
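To make those three steps concrete, here’s a tiny, library-free sketch in Python. It’s a toy built on loud assumptions: the “knowledge base” is three hard-coded strings, retrieval is plain word-overlap scoring (real systems use embeddings), and `fake_llm` is a hypothetical stand-in for an actual model call.

```python
import re

# Toy knowledge base; in a real system these would be chunks of your documents.
KNOWLEDGE_BASE = [
    "Ollama lets you run models like LLaMA or Mistral on your own machine.",
    "FAISS is a library for efficient vector similarity search.",
    "LangChain is a framework for building chains of LLM operations.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Step 2 (Retrieval): rank docs by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def augment(question: str, context: list[str]) -> str:
    """Augmentation: paste the retrieved context into the prompt."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {question}\nAnswer:"
    )


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model: just echoes the first context line."""
    for line in prompt.splitlines():
        if line.startswith("- "):
            return line[2:]
    return "I don't know."


def rag(question: str) -> str:
    context = retrieve(question, KNOWLEDGE_BASE)  # Step 2: retrieval
    prompt = augment(question, context)           # Step 3a: augmentation
    return fake_llm(prompt)                       # Step 3b: generation


print(rag("What is FAISS used for?"))
# prints: FAISS is a library for efficient vector similarity search.
```

Swap `fake_llm` for a real model and the word-overlap scorer for embeddings, and the shape stays the same: retrieve, stuff the context into the prompt, generate.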

There are several ways to set up a RAG system, ranging from drag-and-drop UIs to fully programmable frameworks. Here’s a breakdown of the best ones, ordered from easiest to most flexible.

This one’s for those who want RAG without writing a single line of code to get started.

What it is:

OpenWebUI is a beautiful local web interface that connects to LLMs through Ollama (which lets you run models like LLaMA or Mistral on your own machine).

How RAG works here:

Upload your files → it indexes them → you ask a question → it retrieves the relevant chunks → sends those chunks plus your question to the model → you get a context-aware answer.
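OpenWebUI does all of this for you, but if you’re curious what “sends those chunks plus your question to the model” roughly boils down to, here’s a sketch. It is not OpenWebUI’s code. It assumes Ollama is running on its default port (11434) and uses Ollama’s `/api/generate` endpoint; the model name, prompt wording, and the made-up chunk are my own assumptions. The request is only built, not sent, so it runs without a server.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_rag_request(question: str, chunks: list[str], model: str = "mistral") -> urllib.request.Request:
    """Package retrieved chunks + the user's question into one Ollama generate request."""
    context = "\n\n".join(chunks)
    prompt = (
        "Use the following context to answer the question.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Made-up chunk, standing in for whatever the retriever found in your uploaded files.
req = build_rag_request("What is our refund window?", ["Refunds are accepted within 30 days."])

# To actually send it (needs `ollama serve` running and the model pulled):
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read())["response"])
```

With `"stream": False`, Ollama replies with a single JSON object whose `response` field holds the generated text.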

Why it’s cool:

- No API keys or cloud dependencies

- Fully local = no data leaves your machine

- Quick and intuitive setup (especially using Docker)

Why it’s limited:

- Constrained by local resources (RAM, GPU)

- Limited to local models unless you configure an external provider

This is where you go from “I’m exploring RAG” to “I’m building my own RAG stack from scratch, baby.” If you’re a control freak who wants modularity and flexibility, this one is for you!

What is it?

LangChain is a Python/JavaScript framework for building chains of LLM operations, like retrieval, parsing, and generation.

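How RAG works here:

You use a RetrievalQA chain to combine a retriever (backed by a vector store like FAISS or Chroma) with a language model. You can choose your embedding model, chunking strategy, and even post-processing logic. (Heads-up: newer LangChain releases mark RetrievalQA as deprecated in favor of LCEL-based retrieval chains, so check the docs for your version.)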

Why it’s powerful:

- Supports tons of components: OpenAI, Cohere, HuggingFace models, Pinecone, FAISS, ChromaDB, etc.

- Has a dedicated abstraction called “Retrievers” that you can plug into any chain

- Includes tools like LCEL (LangChain Expression Language) for declarative pipelines
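LCEL’s big idea is composing steps with the pipe operator, something like `retriever | prompt | llm`. To show what that composition means without depending on any particular LangChain version, here’s a toy `Step` class that imitates the pipe with Python’s `__or__`. It is not LangChain’s `Runnable`; the retriever, prompt, and “llm” below are hypothetical stand-ins.

```python
from typing import Any, Callable


class Step:
    """Toy imitation of LCEL-style piping (NOT LangChain's Runnable)."""

    def __init__(self, fn: Callable[[Any], Any]) -> None:
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # `a | b` builds a new Step that feeds a's output into b.
        return Step(lambda x: other.fn(self.fn(x)))

    def invoke(self, x: Any) -> Any:
        return self.fn(x)


DOCS = ["Chroma is an open-source vector database.", "Cohere offers embedding models."]


def retrieve(question: str) -> dict:
    """Hypothetical retriever: keep docs sharing at least one word with the question."""
    words = set(question.lower().split())
    hits = [d for d in DOCS if words & set(d.lower().rstrip(".").split())]
    return {"question": question, "context": hits}


retriever = Step(retrieve)
prompt = Step(lambda d: f"Context: {' '.join(d['context'])}\nQuestion: {d['question']}")
llm = Step(lambda p: f"[model answer to]\n{p}")  # stand-in for a real chat model

chain = retriever | prompt | llm
print(chain.invoke("what is chroma"))
```

The nice part of this style is that each piece only agrees on what it passes along, so swapping the retriever or the model doesn’t touch the rest of the chain.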

Typical RAG setup:

- Load documents

- Split them into chunks and embed them (e.g., using OpenAI or Sentence Transformers)

- Store the embeddings in a vector DB (FAISS, Chroma, Pinecone)

- Use a retriever to fetch the chunks most similar to the query

- Pass the results to the LLM for generation
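Here’s that recipe as a dependency-free sketch. Big assumption: instead of OpenAI or Sentence Transformers embeddings, it uses bag-of-words count vectors, and `InMemoryVectorStore` is a hypothetical brute-force stand-in for FAISS/Chroma/Pinecone. The “document” is a hard-coded string split on sentence boundaries.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': bag-of-words counts (a real setup would call an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for FAISS/Chroma/Pinecone: brute-force search over all rows."""

    def __init__(self) -> None:
        self.rows: list[tuple[Counter, str]] = []

    def add(self, texts: list[str]) -> None:
        self.rows.extend((embed(t), t) for t in texts)

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        scored = sorted(self.rows, key=lambda row: cosine(q, row[0]), reverse=True)
        return [text for _, text in scored[:k]]


# 1. Load (a hard-coded "document") and split it into sentence-sized chunks.
raw_doc = (
    "Pinecone is a managed vector database. "
    "Sentence Transformers produce dense text embeddings. "
    "The cafeteria opens at nine."
)
chunks = re.split(r"(?<=\.)\s+", raw_doc)

# 2-3. Embed the chunks and store them.
store = InMemoryVectorStore()
store.add(chunks)

# 4. Retrieve the best match; 5. hand it to the LLM inside a prompt.
question = "Which library makes text embeddings?"
hits = store.search(question, k=1)
prompt = f"Context: {hits[0]}\nQuestion: {question}\nAnswer:"
print(prompt)
```

Real embeddings also catch paraphrases that share no words with the query, which bag-of-words can’t.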

Pro tip: Use ConversationalRetrievalChain for chatbot-style RAG systems.
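The trick behind conversational RAG (and, as far as I can tell, what ConversationalRetrievalChain does) is a rewrite step: the LLM first turns a follow-up like “Is it good for large datasets?” into a standalone question using the chat history, and only then does retrieval. Here’s a library-free sketch of that flow; the prompt wording and function names are mine, not LangChain’s, and the `rewrite`/`retrieve`/`answer` callables are hypothetical stubs.

```python
from typing import Callable


def condense_prompt(history: list[tuple[str, str]], follow_up: str) -> str:
    """Prompt asking the LLM to turn a follow-up into a standalone question."""
    transcript = "\n".join(f"Human: {q}\nAssistant: {a}" for q, a in history)
    return (
        "Given the conversation below, rephrase the follow-up question "
        "so it can be understood on its own.\n\n"
        f"{transcript}\nFollow-up: {follow_up}\nStandalone question:"
    )


def conversational_rag(
    history: list[tuple[str, str]],
    follow_up: str,
    rewrite: Callable[[str], str],              # e.g. an LLM call (hypothetical)
    retrieve: Callable[[str], list[str]],       # e.g. a vector-store search (hypothetical)
    answer: Callable[[str, list[str]], str],    # e.g. a normal RAG answer step (hypothetical)
) -> str:
    standalone = rewrite(condense_prompt(history, follow_up))  # 1. rewrite using history
    docs = retrieve(standalone)                                # 2. retrieve with the standalone question
    return answer(standalone, docs)                            # 3. answer as usual


# Usage with stubs so the flow is visible without a real model:
history = [("What is FAISS?", "A library for vector similarity search.")]
result = conversational_rag(
    history,
    "Is it good for large datasets?",
    rewrite=lambda prompt: "Is FAISS good for large datasets?",
    retrieve=lambda q: [f"(docs matching: {q})"],
    answer=lambda q, docs: f"Answer to '{q}' using {len(docs)} doc(s)",
)
print(result)
# prints: Answer to 'Is FAISS good for large datasets?' using 1 doc(s)
```

Without the rewrite, the retriever would search for “Is it good for large datasets?” on its own, and “it” matches nothing useful.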

This tool thrives on messy data: PDFs, Notion dumps, HTML blobs, SQL tables. If it looks messy, LlamaIndex eats it for breakfast.

What is it?

A framework that bridges your external data (structured or not) with LLMs. It handles document loading, chunking, indexing, and querying, making your data actually usable in prompts.

How RAG works here:

- Load documents from sources like PDFs, HTML, Notion, SQL, Google Docs, and APIs.

- Chunk them intelligently (semantic splitting, adjustable size/overlap).

- Index them using vector DBs (FAISS, Chroma, etc.) or keyword tables.

- Query: LlamaIndex retrieves relevant chunks → sends them to your LLM → returns grounded answers.
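“Adjustable size/overlap” is easier to see than to read. LlamaIndex’s own splitters work on tokens and sentences and are smarter than this, so treat the sketch below as an assumption-heavy illustration: it counts whitespace-separated words as a stand-in for tokens, and `chunk_words` is a hypothetical helper.

```python
def chunk_words(text: str, chunk_size: int = 5, overlap: int = 2) -> list[str]:
    """Split text into windows of `chunk_size` words; neighbors share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


print(chunk_words("one two three four five six seven eight nine"))
# prints: ['one two three four five', 'four five six seven eight', 'seven eight nine']
```

The overlap means text near a chunk boundary shows up in both neighbors, so a fact split across the cut has a better chance of being retrieved intact.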

Cool Features:

- Supports FAISS, Chroma, Weaviate, Pinecone, Qdrant, etc.

- Handles PDFs, Markdown, Notion, HTML, SQL, APIs, even mixed-source pipelines.

- Composable indexes: build multi-hop or hierarchical retrieval flows.

- Agent + LangChain integration out of the box.

- Streaming + callback support for real-time apps.

- Persistent index storage for production deployment.
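“Hierarchical retrieval” sounds fancy, but the core idea fits in a few lines: first route the query to the right document (using a summary), then search only inside that document. This is not LlamaIndex’s API, just a sketch of the idea; the corpus is made up and shared-word counting stands in for embeddings.

```python
import re

# Made-up corpus: each document has a short summary plus its chunks.
LIBRARY: dict[str, dict] = {
    "hr_handbook": {
        "summary": "vacation leave holidays employee policy",
        "chunks": ["Employees get 25 vacation days.", "Sick leave needs a doctor's note."],
    },
    "it_guide": {
        "summary": "laptop vpn password security setup",
        "chunks": ["Reset your password every 90 days.", "The VPN client is required off-site."],
    },
}


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def score(a: str, b: str) -> int:
    """Toy relevance score: number of shared words (a stand-in for embedding similarity)."""
    return len(words(a) & words(b))


def hierarchical_retrieve(query: str) -> tuple[str, str]:
    # Level 1: route the query to the document whose summary matches best.
    doc_id = max(LIBRARY, key=lambda d: score(query, LIBRARY[d]["summary"]))
    # Level 2: search only that document's chunks.
    best_chunk = max(LIBRARY[doc_id]["chunks"], key=lambda c: score(query, c))
    return doc_id, best_chunk


print(hierarchical_retrieve("How often should I change my password?"))
# prints: ('it_guide', 'Reset your password every 90 days.')
```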

Use it when:

- You’ve got a ton of content (legal docs, wikis, reports) and want retrieval to just work.

- You need a chatbot, search assistant, or RAG pipeline that understands your data.

- You want flexibility without building everything from scratch.

If LangChain is your playground, Haystack is your workshop. Built by deepset, it’s an open-source framework for building production-grade RAG apps, fast.

What is it?

Haystack is a Python framework that lets you connect LLMs to data pipelines using modular components like retrievers, converters, prompt builders, and generators. Think of it as LEGO blocks for LLM-powered search and Q&A systems.

How RAG works here:

Ingest documents → preprocess and split → embed and store → retrieve based on the query → pass to the LLM → generate grounded responses.

Why it’s cool:

- Component-based pipelines you can wire together and visualize

- Supports OpenAI, Cohere, Hugging Face, and more

- Handles everything from PDFs to HTML and APIs

- Works with vector stores like FAISS, Weaviate, Elasticsearch

- Easy to expose as REST APIs for production
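To get a feel for “wire components together,” here’s a toy pipeline where each component is a function returning a dict and you connect named outputs to named inputs. This is not Haystack’s `Pipeline` class (which handles graph ordering, validation, and much more); `MiniPipeline` and every component below are hypothetical, and this toy simply runs components in the order they were added.

```python
from typing import Callable


class MiniPipeline:
    """Toy component wiring (NOT Haystack's Pipeline).

    `connect("a.out", "b.inp")` routes output `out` of component a into input `inp` of b.
    Components run in insertion order, so add senders before receivers.
    """

    def __init__(self) -> None:
        self.components: dict[str, Callable[..., dict]] = {}
        self.edges: list[tuple[str, str, str, str]] = []

    def add_component(self, name: str, fn: Callable[..., dict]) -> None:
        self.components[name] = fn

    def connect(self, sender: str, receiver: str) -> None:
        s_name, s_out = sender.split(".")
        r_name, r_in = receiver.split(".")
        self.edges.append((s_name, s_out, r_name, r_in))

    def run(self, inputs: dict[str, dict]) -> dict[str, dict]:
        results: dict[str, dict] = {}
        for name, fn in self.components.items():
            kwargs = dict(inputs.get(name, {}))  # inputs supplied directly by the caller
            for s_name, s_out, r_name, r_in in self.edges:
                if r_name == name:
                    kwargs[r_in] = results[s_name][s_out]  # inputs wired from earlier components
            results[name] = fn(**kwargs)
        return results


DOCS = ["Weaviate is a vector database.", "Elasticsearch supports keyword search."]


def retriever(query: str) -> dict:
    words = set(query.lower().split())
    return {"documents": [d for d in DOCS if words & set(d.lower().rstrip(".").split())]}


def prompt_builder(documents: list[str], question: str) -> dict:
    return {"prompt": f"Context: {' '.join(documents)}\nQuestion: {question}"}


def generator(prompt: str) -> dict:
    return {"replies": [f"(model reply to: {prompt!r})"]}  # stand-in for a real LLM


pipe = MiniPipeline()
pipe.add_component("retriever", retriever)
pipe.add_component("prompt_builder", prompt_builder)
pipe.add_component("llm", generator)
pipe.connect("retriever.documents", "prompt_builder.documents")
pipe.connect("prompt_builder.prompt", "llm.prompt")

question = "what is weaviate"
out = pipe.run({"retriever": {"query": question}, "prompt_builder": {"question": question}})
print(out["llm"]["replies"][0])
```

Because every connection is explicit, the pipeline is just a graph you can inspect or draw, which is where the “visualize” part comes from.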

Why it’s worth trying:

You want something cleaner and more focused than LangChain, with great support for real-world deployments and full control over your data flow. Perfect if you’re serious about building an actual product, not just testing the waters.

Pro tip: Use InMemoryDocumentStore for quick prototyping, then switch to FAISS or Weaviate when you’re ready to scale.
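That prototype-then-swap move is easiest when your pipeline code only depends on a tiny store interface. Here’s a sketch of the pattern using `typing.Protocol`; `DocumentStore`, `InMemoryStore`, and `answer_context` are hypothetical names (Haystack’s document stores have their own interfaces), and the FAISS/Weaviate-backed class you’d swap in is left out.

```python
from typing import Protocol


class DocumentStore(Protocol):
    """The only methods our pipeline code relies on (a hypothetical interface)."""

    def write(self, docs: list[str]) -> None: ...
    def search(self, query: str, top_k: int) -> list[str]: ...


class InMemoryStore:
    """Prototype backend: keeps docs in a list and ranks them by shared-word count."""

    def __init__(self) -> None:
        self.docs: list[str] = []

    def write(self, docs: list[str]) -> None:
        self.docs.extend(docs)

    def search(self, query: str, top_k: int) -> list[str]:
        q = set(query.lower().split())
        ranked = sorted(self.docs, key=lambda d: len(q & set(d.lower().split())), reverse=True)
        return ranked[:top_k]


def answer_context(store: DocumentStore, question: str) -> str:
    """Pipeline code only sees the interface, so swapping backends doesn't touch it."""
    return "\n".join(store.search(question, top_k=2))


store: DocumentStore = InMemoryStore()
store.write(["faiss does fast similarity search", "weaviate is a vector database", "lunch is at noon"])
print(answer_context(store, "vector database options"))
```

When you’re ready to scale, you’d write a class with the same `write`/`search` methods over a real vector DB, and `answer_context` wouldn’t change.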

This is for the “I want to learn it all by hand” crowd: the open-source purists. No middleware, no magic, just raw power and total control.

What is it?

Use the HuggingFace Transformers library to pick your embedding model (e.g. via sentence-transformers) and generation model (like T5, GPT-2, or Mistral). Pair it with FAISS for fast, efficient vector similarity search.

How RAG works here:

Split your docs → embed the chunks → store the vectors in FAISS → embed the query → retrieve the top-N most similar chunks → pass context + query to the LLM → generate the answer.
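With FAISS, the “store vectors → retrieve top-N” part usually means L2-normalizing your vectors (`faiss.normalize_L2`), adding them to a `faiss.IndexFlatIP(dim)` index, and calling `index.search(query, k)`. To show what that exact search computes without needing FAISS installed, here’s a pure-Python equivalent. The 3-d vectors are hand-made toys, not real embeddings.

```python
import heapq
import math


def normalize(v: list[float]) -> list[float]:
    """L2-normalize so inner product equals cosine similarity (like faiss.normalize_L2)."""
    n = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / n for x in v]


def search_flat_ip(index: list[list[float]], query: list[float], k: int) -> list[tuple[float, int]]:
    """Brute-force exact top-k by inner product: the same ranking an IndexFlatIP produces."""
    scores = ((sum(a * b for a, b in zip(vec, query)), i) for i, vec in enumerate(index))
    return heapq.nlargest(k, scores)  # (score, chunk_id) pairs, best first


# Hand-made 3-d "embeddings" for three chunks (a real setup would get these from a model).
chunk_vectors = [normalize(v) for v in ([1.0, 0.0, 0.0], [0.7, 0.7, 0.0], [0.0, 0.0, 1.0])]
query_vector = normalize([1.0, 0.1, 0.0])
print(search_flat_ip(chunk_vectors, query_vector, k=2))
```

Normalizing first is what makes inner product behave like cosine similarity; skip it and longer vectors win just for being long.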

Why it’s cool:

- No external APIs = fully offline-capable once the models are downloaded

- Choose any open-source embedding or generation model

- Maximum control over every step: chunking, indexing, retrieval, prompting

- Scalable and production-ready if you set it up right

Why it’s not for everyone:

- No handholding or plug-and-play tools

- You’ll have to build your own pipelines, memory management, and prompting logic
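“Build your own prompting logic” in practice often means deciding how much retrieved context fits in the prompt. Here’s a minimal sketch of one common approach, greedily packing the best-ranked chunks under a budget. Assumptions: chunks arrive already sorted best-first, and characters stand in for tokens (a real pipeline would count tokens with the model’s tokenizer). `build_prompt` is a hypothetical helper.

```python
def build_prompt(question: str, ranked_chunks: list[str], max_chars: int = 200) -> str:
    """Greedily pack the highest-ranked chunks until a character budget is hit."""
    header = "Answer the question using only the context.\n\nContext:\n"
    footer = f"\nQuestion: {question}\nAnswer:"
    budget = max_chars - len(header) - len(footer)
    picked: list[str] = []
    for chunk in ranked_chunks:  # assumed sorted best-first by the retriever
        line = f"- {chunk}\n"
        if len(line) > budget:
            break  # stop at the first chunk that doesn't fit, keeping rank order intact
        picked.append(line)
        budget -= len(line)
    return header + "".join(picked) + footer


print(build_prompt("What is FAISS?", ["FAISS does vector similarity search.", "Unrelated chunk."]))
```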

Pro tip: Combine Instructor embedding models (the InstructorEmbedding package) for smarter semantic search with lightweight generation models like flan-t5-small (an encoder-decoder model) for fast, local generation.