AMD issued a raft of news at its Advancing AI 2025 event this week, an update on the company’s response to NVIDIA’s 90-plus percent market share dominance in the GPU and AI markets. The company also offered a sneak peek at what to expect from its next generation of EPYC CPUs and Instinct GPUs.

Here’s an overview of AMD’s major announcements:

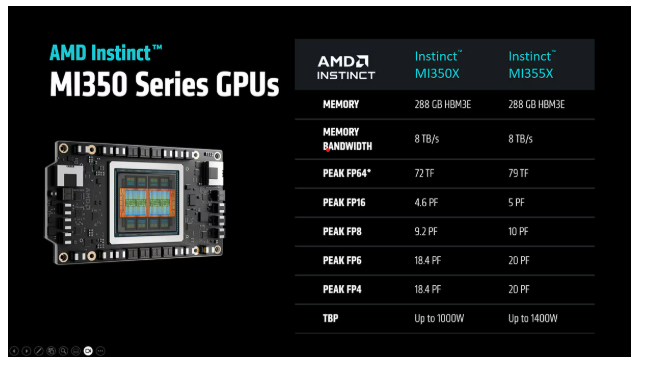

AMD MI350 Series GPUs

The headline announcement: AMD launched the Instinct MI350 Series, which the company said delivers up to a 4x generation-on-generation improvement in AI compute and up to a 35x leap in inferencing performance.

The GPUs offer 288GB of HBM3E memory capacity and up to 8TB/s of bandwidth, and come in air-cooled and direct liquid-cooled configurations.

They support up to 64 GPUs in an air-cooled rack and up to 128 GPUs in a direct liquid-cooled rack, delivering up to 2.6 exaFLOPS of FP4/FP6 performance in an industry standards-based infrastructure.
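As a rough sanity check, the rack-level figures above can be turned into per-GPU and per-rack numbers. The sketch below assumes the 2.6 exaFLOPS figure refers to the 128-GPU liquid-cooled rack and that each GPU carries the full 288GB; the function and constant names are illustrative, not AMD's.

```python
# Back-of-envelope arithmetic on the quoted MI350 Series rack figures.
# Assumption: 2.6 exaFLOPS applies to the 128-GPU liquid-cooled rack.
GPUS_PER_LIQUID_COOLED_RACK = 128
RACK_FP4_EXAFLOPS = 2.6
HBM3E_GB_PER_GPU = 288


def per_gpu_petaflops(rack_exaflops: float, gpus: int) -> float:
    """Peak petaFLOPS per GPU implied by a rack-level exaFLOPS figure."""
    return rack_exaflops * 1000 / gpus


def rack_memory_tb(gb_per_gpu: float, gpus: int) -> float:
    """Total HBM capacity of the rack in terabytes (1 TB = 1000 GB)."""
    return gb_per_gpu * gpus / 1000


pf = per_gpu_petaflops(RACK_FP4_EXAFLOPS, GPUS_PER_LIQUID_COOLED_RACK)
mem = rack_memory_tb(HBM3E_GB_PER_GPU, GPUS_PER_LIQUID_COOLED_RACK)
print(f"{pf:.1f} PFLOPS per GPU, {mem:.1f} TB HBM3E per rack")
```

Under those assumptions, the rack figure works out to roughly 20 petaFLOPS of peak FP4/FP6 throughput per GPU and about 37 TB of HBM3E per rack.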

AMD CEO Lisa Su

“With the MI350 series, we’re delivering the largest generational performance leap in the history of Instinct, and we’re already deep in development of MI400 for 2026,” AMD CEO Dr. Lisa Su said. “[The MI400] is really designed from the ground up as a rack-level solution.”

On that front, AMD announced its “Helios” rack-scale architecture, available next year, which will integrate the next generation of AMD technology, including:

- Next-Gen AMD Instinct MI400 Series GPUs, which are expected to offer up to 432 GB of HBM4 memory, 40 petaflops of FP4 performance and 300 gigabytes per second of scale-out bandwidth.

- Helios performance scales across 72 GPUs using the open standard UALink (Ultra Accelerator Link) to interconnect the GPUs and scale-out NICs. This is designed to let every GPU in the rack communicate as one unified system.

- 6th Gen AMD EPYC “Venice” CPUs, which will use the “Zen 6” architecture and are expected to offer up to 256 cores, up to 1.7X the performance and 1.6 TB/s of memory bandwidth.

- AMD Pensando “Vulcano” AI NICs, which are UEC (Ultra Ethernet Consortium) 1.0 compliant and support both PCIe and UALink interfaces for connectivity to CPUs and GPUs. They will also support 800G network throughput and an expected 8x the scale-out bandwidth per GPU compared to the previous generation.
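For a sense of scale, the per-GPU MI400 figures in the list can be multiplied out across a 72-GPU Helios rack. This is simple arithmetic on the "up to" numbers quoted above, assuming every GPU reaches its peak and the figures add linearly; the variable names are illustrative only.

```python
# Rack-level totals implied by the quoted per-GPU MI400 figures.
# Assumption: all 72 GPUs hit their "up to" numbers and totals add linearly.
HELIOS_GPUS = 72
HBM4_GB_PER_GPU = 432
FP4_PFLOPS_PER_GPU = 40
SCALE_OUT_GBPS_PER_GPU = 300  # gigabytes per second

total_memory_tb = HELIOS_GPUS * HBM4_GB_PER_GPU / 1000
total_fp4_exaflops = HELIOS_GPUS * FP4_PFLOPS_PER_GPU / 1000
total_scale_out_tbps = HELIOS_GPUS * SCALE_OUT_GBPS_PER_GPU / 1000

print(f"HBM4 per rack:       {total_memory_tb:.1f} TB")
print(f"Peak FP4 per rack:   {total_fp4_exaflops:.2f} exaFLOPS")
print(f"Scale-out bandwidth: {total_scale_out_tbps:.1f} TB/s")
```

On those assumptions a full rack would hold about 31 TB of HBM4 and peak at just under 2.9 exaFLOPS of FP4.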

ROCm 7 and Developer Cloud

A major area of advantage for NVIDIA is its dominance of the software development space: the overwhelming majority of AI application developers use NVIDIA’s CUDA programming platform. Developers who become adept at using CUDA tend to continue using… CUDA. Users of applications built on CUDA tend to want… servers using NVIDIA GPUs. Along with GPU performance, competing with NVIDIA on the AI software front is a major challenge for anyone trying to carve out a substantial share of the AI market.

This week, AMD introduced AMD ROCm 7 and the AMD Developer Cloud under what the company called a “developers first” mantra.

“Over the past year, we have shifted our focus to enhancing our inference and training capabilities across key models and frameworks and expanding our customer base,” said Anush Elangovan, VP of AI Software, AMD, in an announcement blog. “Leading models like Llama 4, Gemma 3, and DeepSeek are now supported from day one, and our collaboration with the open-source community has never been stronger, underscoring our commitment to fostering an accessible and innovative AI ecosystem.”

Elangovan emphasized ROCm 7’s accessibility and scalability, including “putting MI300X-class GPUs in the hands of anyone with a GitHub ID…, installing ROCm with a simple pip install…, going from zero to Triton kernel notebook in minutes.”

Generally available in Q3 2025, ROCm 7 will deliver more than 3.5X the inference capability and 3X the training power compared to ROCm 6. This stems from advances in usability, performance, and support for lower precision data types like FP4 and FP6, Elangovan said. ROCm 7 also offers “a robust approach” to distributed inference, the result of collaboration with the open-source ecosystem, including such frameworks as SGLang, vLLM and llm-d.

AMD’s ROCm Enterprise AI debuts as an MLOps platform designed for AI operations in enterprise settings and includes tools for model tuning with industry-specific data and integration with structured and unstructured workflows. AMD said this is facilitated by partnerships “within our ecosystem for developing reference applications like chatbots and document summarizations.”

Rack-Scale Energy Efficiency

On the pressing problem of AI energy demand outstripping energy supply, AMD said it exceeded its “30×25” efficiency goal, achieving a 38x increase in node-level energy efficiency for AI training and HPC, which the company said equates to a 97 percent reduction in energy for the same performance compared to systems from five years ago.

The company also set a 2030 goal to deliver a 20x increase in rack-scale energy efficiency from a 2024 base year, enabling a typical AI model that today requires more than 275 racks to be trained in under one rack by 2030, using 95 percent less electricity.

Combined with expected software and algorithmic advances, AMD said the new goal could enable up to a 100x improvement in overall energy efficiency.
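The efficiency multiples and the energy-reduction percentages in this section are two views of the same number: an N-fold efficiency gain means the same work needs 1/N of the energy, a reduction of 1 − 1/N. The short sketch below checks the quoted figures on that assumption; the helper name is illustrative and not drawn from AMD's material.

```python
def energy_reduction_pct(efficiency_gain: float) -> float:
    """Percent less energy needed for the same work after an N-fold efficiency gain."""
    if efficiency_gain <= 0:
        raise ValueError("efficiency gain must be positive")
    return (1 - 1 / efficiency_gain) * 100


# The 38x, 20x and 100x multiples quoted in this section.
for gain in (38, 20, 100):
    print(f"{gain}x efficiency -> {energy_reduction_pct(gain):.1f}% less energy")
```

By this arithmetic, 38x corresponds to about 97 percent less energy and 20x to 95 percent, matching the figures AMD cited, while a 100x gain would imply 99 percent.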

Open Rack Scale AI Infrastructure

AMD announced its rack architecture for AI encompassing its 5th Gen EPYC CPUs, Instinct MI350 Series GPUs, and scale-out networking solutions including the AMD Pensando Pollara AI NIC, integrated into an industry-standard Open Compute Project- and Ultra Ethernet Consortium-compliant design.

“By combining all our hardware components into a single rack solution, we’re enabling a new class of differentiated, high-performance AI infrastructure in both liquid and air-cooled configurations,” AMD said.