Understanding the mathematical basis that powers modern AI classification systems: a simplified explanation of cross-entropy loss.

If you've ever wondered how machine learning models learn to distinguish between cats and dogs, detect spam emails, or recognize handwritten digits, you've encountered the work of cross-entropy loss. This mathematical concept is the unsung hero behind most classification algorithms, quietly guiding neural networks toward better predictions with each training iteration.

Despite its fundamental importance, cross-entropy loss often remains shrouded in mathematical jargon that can intimidate newcomers. Today, we'll demystify this powerful concept through intuitive explanations, real-world examples, and practical insights that will transform your understanding of how AI systems learn.

Cross-entropy loss is a mathematical function that measures how far your model's predictions are from the actual correct answers. But unlike simple accuracy metrics that only care about right versus wrong, cross-entropy loss cares deeply about confidence.

Think of it this way: if you're taking a multiple-choice exam and you're 95% sure the answer is B, that's very different from being 51% sure the answer is B. Both might be “correct” if B is indeed the right answer, but your confidence levels tell very different stories about your understanding.

Cross-entropy loss captures this nuance by:

- Rewarding confident correct predictions with low loss values

- Penalizing uncertain correct predictions with moderate loss values

- Severely penalizing confident incorrect predictions with high loss values

Before diving into formulas, let's build intuition with a relatable scenario.

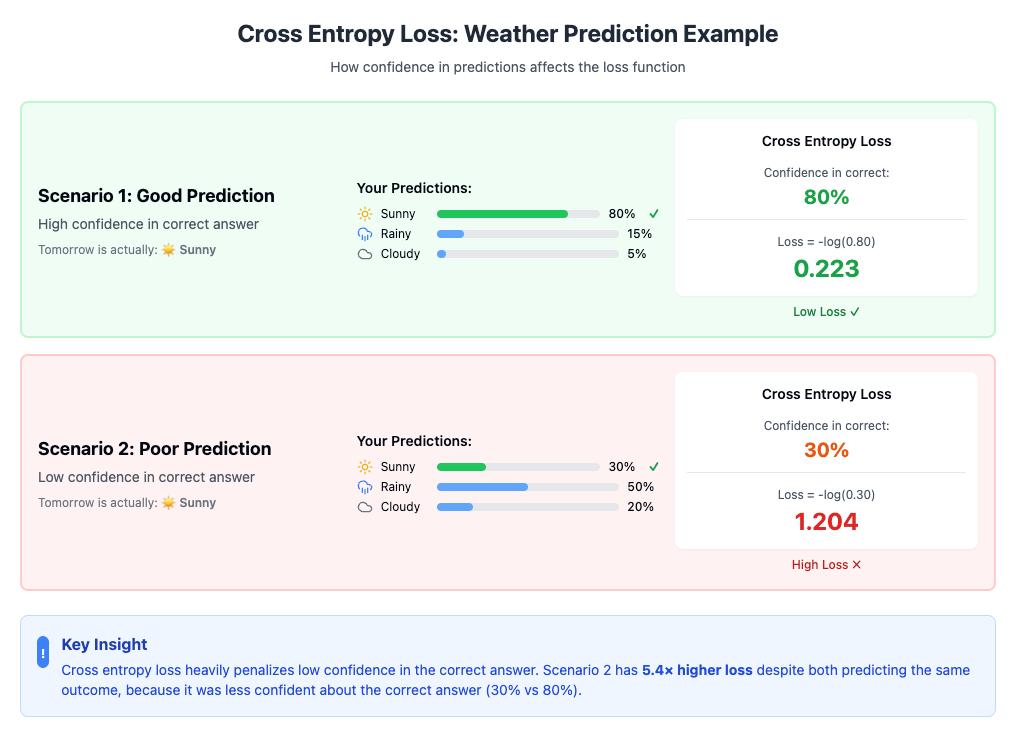

Imagine you're a weather forecaster predicting tomorrow's weather. There are three possibilities: Sunny, Rainy, or Cloudy. Instead of making a definitive prediction, you must give confidence percentages that sum to 100%.

Scenario 1: Tomorrow is actually Sunny

- Your prediction: 80% Sunny, 15% Rainy, 5% Cloudy

- Result: You were quite confident about the correct answer (Sunny), so your “loss” is relatively low

Scenario 2: Tomorrow is actually Sunny

- Your prediction: 30% Sunny, 50% Rainy, 20% Cloudy

- Result: You were most confident about the wrong answer (Rainy), so your “loss” is high
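To put numbers on the two scenarios, here is a minimal Python sketch. It previews the formula that follows. With a single true outcome, the loss for one forecast is the negative natural log of the probability you gave to what actually happened. `forecast_loss` is a hypothetical helper written for illustration.

```python
import math


def forecast_loss(forecast, actual):
    """Cross-entropy for one day: minus the log of the probability given to the actual outcome."""
    return -math.log(forecast[actual])


scenario_1 = {"Sunny": 0.80, "Rainy": 0.15, "Cloudy": 0.05}
scenario_2 = {"Sunny": 0.30, "Rainy": 0.50, "Cloudy": 0.20}

loss_1 = forecast_loss(scenario_1, "Sunny")
loss_2 = forecast_loss(scenario_2, "Sunny")
print(f"Scenario 1 loss: {loss_1:.2f}")  # 0.22
print(f"Scenario 2 loss: {loss_2:.2f}")  # 1.20
```

Only the probability on "Sunny" matters here. The 50% placed on "Rainy" in Scenario 2 hurts only because it leaves just 30% for the correct answer.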

Cross-entropy loss captures this relationship mathematically, ensuring that models are trained to be both accurate and appropriately confident.

The cross-entropy loss formula might look intimidating at first:

Loss = -Σ(y_i × log(p_i))

But let's decode it step by step:

- y_i: The true label (1 for the correct class, 0 for incorrect classes)

- p_i: The predicted probability for class i

- log: Natural logarithm

- Σ: Sum over all possible classes

- Negative sign: Converts the result to a positive loss value (the log of a probability is never positive)
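The formula translates almost line for line into code. This is a minimal pure-Python sketch that assumes `y_true` and `p_pred` are equal-length lists with the labels already one-hot encoded. The function name `cross_entropy` is our own choice, not a library API.

```python
import math


def cross_entropy(y_true, p_pred):
    """Loss = -sum_i y_i * log(p_i), summed over all classes."""
    if len(y_true) != len(p_pred):
        raise ValueError("labels and probabilities must have the same length")
    total = 0.0
    for y_i, p_i in zip(y_true, p_pred):
        if y_i != 0:  # terms with y_i = 0 contribute nothing (and this avoids log(0))
            total += y_i * math.log(p_i)
    return -total  # negate: the log of a probability is <= 0


# One-hot label: class 0 is correct
print(round(cross_entropy([1, 0, 0], [0.5, 0.3, 0.2]), 4))  # 0.6931
```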

The logarithm is the secret sauce that makes cross-entropy loss so effective. Here's why:

- log(1) = 0: Perfect confidence in the correct answer gives zero loss

- log(0.5) ≈ -0.69: Moderate confidence gives a moderate penalty

- log(0.1) ≈ -2.3: Low confidence gives a high penalty

- log(0.01) ≈ -4.6: Very low confidence gives a severe penalty

The logarithm makes the penalty grow harsher and harsher as your confidence in the correct answer decreases. Every tenfold drop in the predicted probability adds about 2.3 to the loss, and the loss grows without bound as that probability approaches zero.
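A short sketch reproduces this penalty table and checks the "tenfold drop adds about 2.3" pattern. Writing -log(p) as log(1/p) is just a small trick so that p = 1 prints as 0.00 rather than -0.00.

```python
import math


def penalty(p):
    """Cross-entropy penalty -log(p), written as log(1/p) so p = 1 gives exactly 0.0."""
    return math.log(1.0 / p)


for p in [1.0, 0.5, 0.1, 0.01, 0.001]:
    print(f"p = {p:<6} loss = {penalty(p):.2f}")

# Each tenfold drop in p adds the same amount, ln(10), to the loss
print(round(penalty(0.01) - penalty(0.1), 2))  # 2.3
```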

Let's walk through a concrete example of training a neural network to classify images of animals.

We're building a classifier for three categories: Cat, Dog, and Bird. Our model must output probabilities that sum to 1.0 for each image.

Ground Truth:

- Cat: 1 (correct class)

- Dog: 0 (incorrect class)

- Bird: 0 (incorrect class)

Model Predictions (early in training):

- Cat: 0.33 (33% confidence)

- Dog: 0.42 (42% confidence) ← Most confident, but wrong!

- Bird: 0.25 (25% confidence)

Cross-Entropy Calculation:

Loss = -(1 × log(0.33) + 0 × log(0.42) + 0 × log(0.25))

Loss = -log(0.33)

Loss = 1.11

What this means: The model is only 33% confident about the correct answer, resulting in a high loss of 1.11. The terms for Dog and Bird disappear because their true labels are 0.

Model Predictions (after further training):

- Cat: 0.70 (70% confidence) ← Correct and more confident

- Dog: 0.20 (20% confidence)

- Bird: 0.10 (10% confidence)

Cross-Entropy Calculation:

Loss = -log(0.70) = 0.36

Progress: The loss dropped from 1.11 to 0.36 as the model became more confident about the correct answer.

Model Predictions (late in training):

- Cat: 0.95 (95% confidence) ← Very confident and correct

- Dog: 0.03 (3% confidence)

- Bird: 0.02 (2% confidence)

Cross-Entropy Calculation:

Loss = -log(0.95) = 0.05

Success: The loss is now very low at 0.05, indicating the model has learned to be highly confident about the correct classification.
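The three hand calculations above can be checked in a few lines. This sketch keeps the full sum over all three classes so the zeroed-out Dog and Bird terms are visible. The stage names ("early", "further", "late") are just labels for the three prediction snapshots.

```python
import math

CLASSES = ["Cat", "Dog", "Bird"]
TRUE_CLASS = "Cat"

# Predicted probabilities at the three training stages in the example
stages = {
    "early": {"Cat": 0.33, "Dog": 0.42, "Bird": 0.25},
    "further": {"Cat": 0.70, "Dog": 0.20, "Bird": 0.10},
    "late": {"Cat": 0.95, "Dog": 0.03, "Bird": 0.02},
}


def loss_for(pred, true_class):
    # Full sum over classes with a one-hot label; only the true class term survives
    return -sum((1.0 if c == true_class else 0.0) * math.log(pred[c]) for c in CLASSES)


losses = {name: loss_for(pred, TRUE_CLASS) for name, pred in stages.items()}
for name, value in losses.items():
    print(f"{name:>8}: {value:.2f}")
```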

Some loss functions, such as mean squared error, score every predicted probability. With one-hot labels, cross-entropy loss instead zeroes in on how confident your model is about the correct answer. The probabilities assigned to incorrect classes don't directly contribute to the loss calculation. They matter only indirectly: raising them leaves less probability for the correct class.
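To see this concretely, the sketch below compares two predictions that give the correct class (index 0) the same 0.6 but split the remainder differently among the wrong classes. The squared-error function is included only as a contrast. Both helper names are hypothetical.

```python
import math


def one_hot_ce(p, correct):
    """Cross-entropy with a one-hot label: only p[correct] matters."""
    return -math.log(p[correct])


def squared_error(p, correct):
    """Sum of squared differences from the one-hot label, for comparison."""
    return sum(((1.0 if i == correct else 0.0) - p_i) ** 2 for i, p_i in enumerate(p))


concentrated = [0.6, 0.39, 0.01]
spread_out = [0.6, 0.2, 0.2]

print(one_hot_ce(concentrated, 0) == one_hot_ce(spread_out, 0))  # True
print(squared_error(concentrated, 0) == squared_error(spread_out, 0))  # False
```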

The logarithm creates a penalty system in which each further drop in confidence about the correct answer costs more than the last. Losing confidence therefore results in a disproportionately higher loss, which pushes models to be decisive when they have evidence.

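Cross-entropy loss has convenient mathematical properties that make it easy for gradient descent algorithms to optimize. When it is combined with softmax, its gradient with respect to the model's raw scores (logits) is simply the predicted probabilities minus the one-hot labels. That gradient is well-behaved and gives a clear direction for improvement.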
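The "probabilities minus labels" gradient is a standard result for softmax followed by cross-entropy. The sketch below computes it analytically and checks it against central finite differences. The logits `[2.0, 1.0, 0.1]` are arbitrary example values, and the finite-difference step `h` is an illustrative choice.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def loss_from_logits(logits, correct):
    """Cross-entropy of softmax(logits) for a one-hot label at index `correct`."""
    return -math.log(softmax(logits)[correct])


def analytic_grad(logits, correct):
    """Gradient of the loss with respect to each logit: p_i - y_i."""
    p = softmax(logits)
    return [p_i - (1.0 if i == correct else 0.0) for i, p_i in enumerate(p)]


def numeric_grad(logits, correct, h=1e-6):
    """Central finite differences, used only as an independent check."""
    grads = []
    for i in range(len(logits)):
        up = list(logits)
        up[i] += h
        down = list(logits)
        down[i] -= h
        diff = loss_from_logits(up, correct) - loss_from_logits(down, correct)
        grads.append(diff / (2 * h))
    return grads


logits = [2.0, 1.0, 0.1]
print([round(g, 4) for g in analytic_grad(logits, 0)])
print([round(g, 4) for g in numeric_grad(logits, 0)])
```

The gradient for the correct class is negative, so gradient descent raises that logit. The other classes get positive gradients in proportion to how much probability they took.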

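The loss function naturally encourages models to output well-calibrated probabilities. In other words, when a model says it's 80% confident, it should be right about 80% of the time. This follows because, on average, the loss is lowest when the predicted probabilities match the true frequencies of the outcomes.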
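This calibration property can be checked with a small experiment. Take the binary case for simplicity (an assumption) and suppose an event truly happens 80% of the time. If we always predict probability q, the average cross-entropy is lowest when q equals the true rate. The grid of candidate values below is illustrative. In practice, large networks trained with cross-entropy can still end up over-confident, so calibration is encouraged rather than guaranteed.

```python
import math


def expected_loss(q, true_rate):
    """Average binary cross-entropy if the event occurs with probability true_rate
    and the model always predicts probability q."""
    return -(true_rate * math.log(q) + (1 - true_rate) * math.log(1 - q))


true_rate = 0.8
candidates = [i / 100 for i in range(1, 100)]  # 0.01, 0.02, ..., 0.99
best_q = min(candidates, key=lambda q: expected_loss(q, true_rate))
print(best_q)  # 0.8
```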

While mean squared error (MSE) works well for regression problems, it's not ideal for classification because:

- It doesn't naturally handle probability distributions

- It can be too forgiving of confident wrong predictions, because its penalty is bounded while cross-entropy's is not

- Paired with a sigmoid or softmax output, it produces weak gradients when the model is confidently wrong, which can slow learning
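The "too forgiving" point shows up clearly in a two-class sketch where the model grows more and more confident in the wrong class. Here MSE is the squared error averaged over the classes (an assumption; summing instead just doubles its cap). MSE levels off below 1, while cross-entropy keeps climbing.

```python
import math


def mse(y, p):
    """Squared error averaged over classes."""
    return sum((y_i - p_i) ** 2 for y_i, p_i in zip(y, p)) / len(y)


def cross_entropy(y, p):
    """One-hot cross-entropy; zero-label terms are skipped."""
    return -sum(y_i * math.log(p_i) for y_i, p_i in zip(y, p) if y_i > 0)


y = [1.0, 0.0]  # class 0 is correct
for p_correct in [0.1, 0.01, 0.001]:
    p = [p_correct, 1.0 - p_correct]  # increasingly confident and wrong
    print(f"p_correct={p_correct}: MSE={mse(y, p):.3f}, CE={cross_entropy(y, p):.3f}")
```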

Hinge loss, used in Support Vector Machines:

- Only cares about getting the right answer by a sufficient margin, not about confidence levels

- Doesn't provide probability outputs

- Is less natural for multi-class problems, which require extensions such as one-vs-rest
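The margin behavior is easiest to see in the binary case. This sketch uses labels in {-1, +1} and a raw score from the model, which is the usual hinge-loss setup. It compares hinge loss with binary cross-entropy on a sigmoid output, written in the equivalent margin form log(1 + exp(-y·score)). Once the margin reaches 1, hinge loss stops caring, while cross-entropy keeps rewarding more confidence.

```python
import math


def hinge_loss(y, score):
    """Binary hinge loss with labels y in {-1, +1}: zero once y * score reaches 1."""
    return max(0.0, 1.0 - y * score)


def logistic_loss(y, score):
    """Binary cross-entropy on sigmoid(score), in margin form: log(1 + exp(-y * score)).
    math.exp can overflow for very negative margins; fine for the small scores here."""
    return math.log1p(math.exp(-y * score))


for score in [0.5, 2.0, 5.0]:
    print(f"score={score}: hinge={hinge_loss(1, score):.3f}, "
          f"cross-entropy={logistic_loss(1, score):.4f}")
```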

Cross-entropy loss strikes the right balance for classification tasks by combining accuracy requirements with confidence calibration.

When implementing cross-entropy loss, add a small epsilon (such as 1e-15) to prevent log(0):

loss = -np.log(predicted_probability + 1e-15)
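In plain Python the guard matters even more, because `math.log(0.0)` raises `ValueError`. By contrast, `np.log(0)` returns `-inf` with a warning. The sketch below is a variant that clips the probability into [eps, 1 - eps] instead of adding epsilon, so the value passed to the log stays a valid probability. The function name `safe_log_loss` is hypothetical. The 1e-15 constant is an illustrative choice, not a universal standard.

```python
import math

EPS = 1e-15  # illustrative guard value


def safe_log_loss(predicted_probability, eps=EPS):
    """-log(p) with p clipped into [eps, 1 - eps] so log(0) can never occur."""
    p = min(max(predicted_probability, eps), 1.0 - eps)
    return -math.log(p)


print(safe_log_loss(0.0))  # large but finite (about 34.54) instead of an error
print(safe_log_loss(0.9))
```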

In practice, cross-entropy loss is often combined with the softmax activation function, which ensures that predictions are positive and sum to 1.0.
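When softmax and cross-entropy are combined, a common way to stay numerically safe is to compute log-probabilities directly with the log-sum-exp trick rather than taking the log of softmax outputs. The sketch below is a pure-Python version under that approach. The helper names are our own. Many deep learning libraries provide a fused equivalent, but this is not any specific library's API.

```python
import math


def log_softmax(logits):
    """log(softmax(z))_i = z_i - logsumexp(z), computed stably by shifting by max(z)."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]


def softmax_cross_entropy(logits, correct):
    """Cross-entropy of softmax(logits) against a one-hot label, never forming log(0)."""
    return -log_softmax(logits)[correct]


probs = [math.exp(v) for v in log_softmax([2.0, 1.0, 0.1])]
print(round(sum(probs), 6))  # 1.0
print(softmax_cross_entropy([1000.0, 0.0, -1000.0], 1))  # 1000.0 (naive exp(1000.0) would overflow)
```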

For imbalanced datasets, consider weighted cross-entropy loss, which gives more importance to underrepresented classes.
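A minimal sketch of the weighting idea: scale each example's loss by a weight tied to its true class. The weights here come from inverse class frequency for a hypothetical dataset that is 90% class 0 and 10% class 1. That is one common heuristic, assumed for illustration. Libraries differ in details such as how weighted losses are averaged over a batch.

```python
import math


def weighted_cross_entropy(p, correct, class_weights):
    """One-hot cross-entropy scaled by the weight of the true class."""
    return -class_weights[correct] * math.log(p[correct])


# Hypothetical class counts and inverse-frequency weights
counts = [900, 100]
total = sum(counts)
class_weights = [total / (len(counts) * c) for c in counts]  # about [0.556, 5.0]

# Same 70% confidence in the true class, but the rare class costs 9x more when missed
common_loss = weighted_cross_entropy([0.7, 0.3], 0, class_weights)
rare_loss = weighted_cross_entropy([0.3, 0.7], 1, class_weights)
print(round(common_loss, 3), round(rare_loss, 3))
```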

Sometimes making targets slightly less “hard” can improve generalization. For example, use 0.9 instead of 1.0 for the correct class and spread the remaining 0.1 across the other classes. This technique is known as label smoothing.
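Here is a sketch of the smoothing scheme described above: the correct class gets 1 - smoothing and the other classes share the rest equally. Some libraries spread the smoothing mass over all classes, including the correct one, so their exact target values differ slightly. With smoothed targets, an extremely confident prediction now costs more than a moderately confident one.

```python
import math


def smooth_labels(correct, num_classes, smoothing=0.1):
    """Correct class gets 1 - smoothing; the other classes share `smoothing` equally."""
    off = smoothing / (num_classes - 1)
    return [1.0 - smoothing if i == correct else off for i in range(num_classes)]


def cross_entropy(y, p):
    """General cross-entropy -sum y_i * log(p_i); zero-target terms are skipped."""
    return -sum(y_i * math.log(p_i) for y_i, p_i in zip(y, p) if y_i > 0)


targets = smooth_labels(0, 3)
print(targets)  # [0.9, 0.05, 0.05]

overconfident = [0.999, 0.0005, 0.0005]
moderate = [0.9, 0.05, 0.05]
print(cross_entropy(targets, overconfident) > cross_entropy(targets, moderate))  # True
```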

If your model consistently outputs probabilities very close to 0 or 1, it might be overfitting. Consider:

- Adding regularization

- Using dropout

- Implementing label smoothing

If loss values become extremely large, check for:

- Learning rates that are too high

- Numerical instability issues

- Incorrect data preprocessing

Always monitor both training and validation loss to detect overfitting early.

Cross-entropy loss extends beyond simple classification:

When an image can have multiple labels (e.g., “cat” and “outdoor”), binary cross-entropy is applied to each label independently.
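A minimal multi-label sketch: each label gets its own sigmoid probability and its own binary cross-entropy, because the labels are not mutually exclusive and so don't compete through a softmax. The label names, targets, and logits are hypothetical values. Averaging over labels is one common convention, assumed here.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def binary_cross_entropy(y, p):
    """BCE for a single label with target y in {0, 1} and predicted probability p."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


labels = ["cat", "outdoor", "dog"]
targets = [1, 1, 0]        # image shows a cat, outdoors, and no dog
logits = [2.0, 0.5, -1.5]  # hypothetical raw model scores, one per label

per_label = [binary_cross_entropy(y, sigmoid(z)) for y, z in zip(targets, logits)]
for name, loss in zip(labels, per_label):
    print(f"{name}: {loss:.3f}")
print(f"mean: {sum(per_label) / len(per_label):.3f}")
```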

Language models use cross-entropy loss at each time step to predict the next word in a sequence.
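The per-time-step idea can be sketched with made-up numbers. Suppose a model, reading "the cat sat on the mat" one word at a time, assigned the probabilities below to each actual next word ("cat", "sat", "on", "the", "mat"). These values are hypothetical, not output from a real model. Each step contributes its own -log(p), and the sequence loss here is their average, which is a common but not universal choice.

```python
import math

# Hypothetical probabilities assigned to the actual next word at each step
next_word_probs = [0.20, 0.05, 0.30, 0.60, 0.70]

step_losses = [-math.log(p) for p in next_word_probs]
sequence_loss = sum(step_losses) / len(step_losses)  # average over time steps
print([round(loss, 2) for loss in step_losses])
print(round(sequence_loss, 3))
```

The step where the model gave the true word only 5% probability dominates the total, which mirrors the single-image examples earlier.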

Variational autoencoders and other generative models often incorporate cross-entropy loss as part of their training objectives.

Understanding cross-entropy loss illuminates a fundamental principle of machine learning: effective learning requires not just getting the right answer, but also being appropriately confident in it. By building confidence into the loss calculation, cross-entropy loss guides models toward well-calibrated predictions.

This principle extends beyond machine learning into human learning and decision-making. Just as cross-entropy loss rewards confident correct predictions while penalizing overconfident incorrect ones, we as people benefit from being appropriately confident in our knowledge while remaining humble about our limitations.

Cross-entropy loss might seem like just another mathematical formula, but it represents a sophisticated approach to learning that balances accuracy with appropriate confidence. By understanding how it works, from the intuitive weather forecasting analogy to the mathematical details, you're better equipped to debug training issues, choose appropriate architectures, and interpret your model's behavior.

The next time you see a neural network correctly classify an image or a language model generate coherent text, remember that cross-entropy loss is working behind the scenes, continually pushing the model toward better, more confident predictions. It's a beautiful example of how elegant mathematics can capture complex learning behaviors and drive the AI systems that increasingly shape our world.