As AI systems become ubiquitous in business and society, organizations are under pressure to deploy these technologies responsibly. Scandals over biased algorithms and opaque “black box” decisions have made it clear that ethical guardrails are essential. From a global perspective, experts have highlighted accountability, transparency, fairness (freedom from bias), privacy, and governance as key areas of concern in autonomous systems. In response, governments and industry bodies are releasing AI ethics guidelines and frameworks, such as the U.S. NIST AI Risk Management Framework and the EU’s AI Act, pushing companies to formalize how they govern AI ethically.

This blog outlines strategies and frameworks that ethics-conscious leaders can use to embed responsible AI principles into practice, ensuring their Autonomous and Intelligent Systems (AIS) are both innovative and worthy of trust.

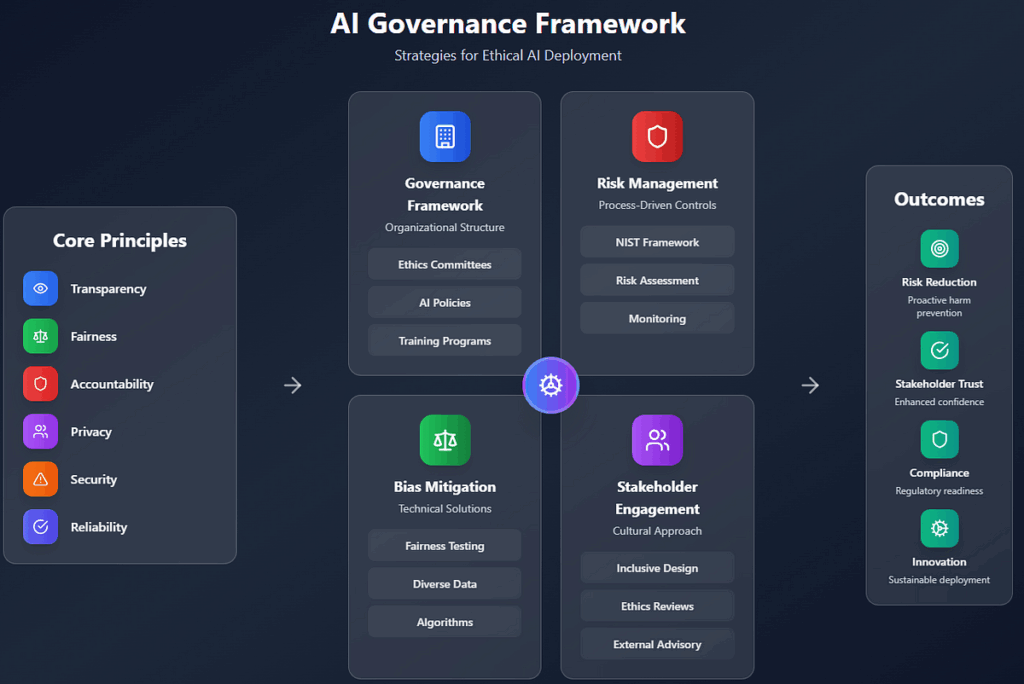

Any effective AI governance strategy begins by defining clear ethical principles to guide AI development and use. Many organizations have converged on a similar set of core principles:

- Transparency: being open about how AI systems work and how decisions are made.

- Fairness: actively mitigating bias to ensure equitable outcomes for all groups.

- Accountability: establishing responsibility for AI behavior and impacts.

- Privacy: protecting data and individuals’ rights in AI operations.

- Security: safeguarding AI systems against misuse and attacks.

- Reliability: ensuring the AI is robust and performs consistently as intended.

For example, Cisco’s Responsible AI framework emphasizes six principles (Transparency, Fairness, Accountability, Privacy, Security, and Reliability) as essential for safe, trustworthy AI. These principles should be more than lofty words; they need to be concretely defined for your context (e.g. what does fairness mean for your product?) and embedded into policies. Many companies form AI ethics guidelines or charters based on such principles, which serve as a north star for all AI projects. Aligning with widely recognized principles (like the OECD AI Principles or IEEE’s Ethically Aligned Design) can also help ensure your framework meets global expectations. In short, set the ethical “rules of the road” upfront: doing so provides a common language for developers, stakeholders, and regulators to discuss and evaluate your AI systems.

With principles in place, organizations need a governance structure to operationalize them. This typically involves:

- Dedicated oversight roles or committees: Assign clear ownership for AI ethics at the leadership level. Many companies have created AI ethics boards or Responsible AI committees that include senior executives across departments (engineering, legal, compliance, HR, etc.). For example, Cisco formed a Responsible AI Committee of senior leaders to oversee adoption of its AI framework and to review high-risk AI use cases for potential bias or misuse. Such a body provides guidance, makes tough calls on ethical issues, and signals top-down commitment.

- Policies and processes: Incorporate AI governance into existing workflows. This might mean updating the software development lifecycle to include ethical risk checkpoints, e.g. a mandatory ethics review before an AI model is deployed, similar to a security review. Define processes for escalation: how will concerns or incidents (like an AI error causing harm) be reported and handled? Cisco, for instance, requires assessments for new AI use cases and has an incident response system for AI issues integrated with its security and privacy incident processes.

- Training and awareness: Ensure that everyone involved in AI projects, from developers to product managers to executives, is trained on the ethical guidelines and knows their role in upholding them. Building an internal culture of responsibility is crucial: ethics shouldn’t be seen as a task for a separate team only, but as a shared duty.

- External advisory and audit: Depending on the scale and risk of your AI deployments, consider involving outside perspectives. This could mean having external ethicists or user representatives on your AI advisory board, or subjecting key systems to third-party audits or certifications for compliance with ethical standards.

A formal governance framework ties together the principles and the people. It ensures there are checks and balances throughout the AI lifecycle, not just at deployment. By setting up oversight and accountability mechanisms, organizations can better prevent ethical lapses and respond effectively if issues arise. Governance turns abstract principles into actionable guardrails that guide daily work on AI projects.

At the heart of AI governance lies risk management: identifying and mitigating the ways AI could cause harm or go wrong. Various frameworks exist to support organizations in systematic AI risk management. Notably, the NIST AI Risk Management Framework provides a comprehensive approach with four core functions: Map (context, stakeholders, and risk identification), Measure (analysis and assessment of risks), Manage (mitigation strategies and controls), and Govern (oversight to ensure alignment with organizational goals and values). Adopting such frameworks helps teams to:

- Proactively identify risks: Consider various risk domains (e.g. safety, bias, privacy, security, reputational) for each AI application. Scenario planning and impact assessments (like an “Algorithmic Impact Assessment”) are useful tools to map out potential failure modes or adverse impacts.

- Establish controls and safeguards: For each identified risk, decide how it will be managed. This could include technical controls (rate-limiting an AI agent, or adding adversarial example detection to counter security threats) and organizational controls (e.g. a human must sign off on any AI-driven decision that affects an individual’s rights). Embed these controls into the AI system’s design and into governance policies.

- Assign accountability: A key part of governance is deciding “who is responsible if something goes wrong?” Ensure that for each AI system, there is a designated “owner” (or a chain of responsibility) accountable for the system’s outcomes. This clarity drives better maintenance and ethical diligence. It also ties into legal compliance: regulators will expect to know who oversees the AI.

- Continuous monitoring and improvement: Risk management isn’t a one-time effort. Put in place monitoring (technical tools and oversight committees) to track AI performance and emerging risks. Collect metrics such as error rates, appeals of AI decisions, and bias measurements, and feed these back into improving the system. Periodic reviews should be mandated (similar to how financial audits are regular) to ensure the AI still aligns with ethical and regulatory expectations over time.

Organizations are also exploring risk tiering: categorizing AI systems by risk level (e.g. low, medium, high) and applying governance proportionate to the risk. This way, the most potentially harmful AI (say, an AI medical diagnosis tool) gets the most stringent oversight, while a low-risk AI (e.g. an internal expense report chatbot) gets a lighter touch. The end goal is to minimize harms while allowing beneficial innovation, cultivating trust in AI by demonstrating that risks are under control.
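
One way to make tiering concrete is a small lookup from system attributes to a tier and then to the controls that tier requires. The sketch below is illustrative only: the attribute names, the tiering rules, and the control names are all assumptions for the example, not taken from NIST, the EU AI Act, or any company's actual policy.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical controls per tier; a real mapping would be set by the
# organization's governance committee.
REQUIRED_CONTROLS = {
    RiskTier.LOW: ["owner_assigned"],
    RiskTier.MEDIUM: ["owner_assigned", "bias_testing", "periodic_review"],
    RiskTier.HIGH: [
        "owner_assigned",
        "bias_testing",
        "periodic_review",
        "ethics_committee_review",
        "human_sign_off",
    ],
}


@dataclass
class AISystem:
    name: str
    affects_individual_rights: bool  # e.g. hiring, lending, diagnosis
    safety_critical: bool
    external_facing: bool


def assign_tier(system: AISystem) -> RiskTier:
    """Assign a tier using simple, assumed rules (highest risk wins)."""
    if system.affects_individual_rights or system.safety_critical:
        return RiskTier.HIGH
    if system.external_facing:
        return RiskTier.MEDIUM
    return RiskTier.LOW


def required_controls(system: AISystem) -> list:
    """Return the governance controls required for the system's tier."""
    return REQUIRED_CONTROLS[assign_tier(system)]


diagnosis_tool = AISystem("medical diagnosis tool", True, True, True)
expense_bot = AISystem("internal expense report chatbot", False, False, False)
print(assign_tier(diagnosis_tool).value, required_controls(diagnosis_tool))
print(assign_tier(expense_bot).value, required_controls(expense_bot))
```

Keeping the rules in one function and the controls in one table means the policy can be reviewed and changed in a single place, which is the main point of tiering.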

Ensuring fair and unbiased AI is a cornerstone of responsible AI strategy. This goes beyond technical fixes; it requires a holistic approach:

- Set fairness goals and metrics: At project outset, define what it means for the AI to be fair in context. Is it equal performance across demographic groups? Avoiding disparate impacts on protected classes? Having this clarity lets you measure fairness. Use metrics (false positive/negative rates by group, calibration scores, etc.) to quantify bias and track improvements.

- Embed bias checks in development: Leverage tools and frameworks for bias detection (e.g. IBM’s AI Fairness 360 toolkit) during model training and evaluation. Conduct bias stress tests when validating models: for example, simulate inputs that represent different subpopulations to see if outputs differ unjustifiably. Make bias and fairness testing a standard step in the model development pipeline, just as you would do QA testing for bugs.

- Data diversity and quality: Many biases stem from the training data. Invest in improving data quality, which might involve curating more diverse datasets, balancing class distributions, or filtering out prejudiced content. If certain groups are under-represented in the data, use techniques like data augmentation or synthetic data to fill the gaps. Documentation of data lineage (datasheets) can help surface potential biases in upstream data collection.

- Algorithmic solutions: Use or develop algorithms that have fairness constraints. For instance, “in-processing” approaches can incorporate fairness criteria into the model’s objective function, so it directly optimizes for equitable performance. Additionally, consider ensemble or multi-model approaches where one model monitors another for biased decisions.

- Human oversight on fairness: Create review checkpoints where humans (including legal or ethics experts) examine decisions for bias. Also, engage those who might be adversely affected: for example, if deploying an AI in hiring, consult with employee resource groups or external advocates to get perspective on what fairness means in that context.
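
As a minimal sketch of the per-group metrics mentioned above, the following computes false positive and false negative rates by group for a binary classifier, plus the largest gap between groups. It uses only the standard library; the function names and the toy data are assumptions made for illustration, and a toolkit such as AI Fairness 360 offers far more complete metrics.

```python
from collections import defaultdict


def error_rates_by_group(y_true, y_pred, groups):
    """Return {group: {"fpr": ..., "fnr": ...}} for binary labels (0 or 1)."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1 and pred == 1:
            counts[group]["tp"] += 1
        elif truth == 1 and pred == 0:
            counts[group]["fn"] += 1
        elif truth == 0 and pred == 1:
            counts[group]["fp"] += 1
        else:
            counts[group]["tn"] += 1

    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]
        positives = c["fn"] + c["tp"]
        rates[group] = {
            # None marks a rate that is undefined for this group.
            "fpr": c["fp"] / negatives if negatives else None,
            "fnr": c["fn"] / positives if positives else None,
        }
    return rates


def max_gap(rates, metric):
    """Largest difference in a metric between any two groups."""
    values = [r[metric] for r in rates.values() if r[metric] is not None]
    return max(values) - min(values) if values else 0.0


# Toy data: two groups, "a" and "b", four examples each.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 0, 1, 1, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = error_rates_by_group(y_true, y_pred, groups)
print(rates)
print("FPR gap:", max_gap(rates, "fpr"), "FNR gap:", max_gap(rates, "fnr"))
```

A team could track these gaps over time and treat a gap above an agreed threshold as a trigger for review; what threshold is acceptable is a policy decision, not something the code can settle.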

Regulators and standards bodies are increasingly mandating such bias mitigation steps. The EU, FDA, and WHO have all called for stricter frameworks to identify and mitigate algorithmic bias, aiming to ensure AI doesn’t perpetuate discrimination. By building fairness into the design and governance of AI systems, organizations not only comply with emerging rules but also unlock AI’s benefits for a wider audience. Fair AI is effective AI: it is more reliable, legally sound, and broadly accepted.

Transparency and explainability are often-cited principles, but implementing them requires concrete practices:

- Transparency measures: Adopt a policy of “responsible disclosure” for your AI. This includes disclosing the use of AI to those impacted, providing plain-language descriptions of how the system works, and being open about the AI’s capabilities and limits. Some companies publish transparency reports or fact sheets about their AI services, detailing aspects like data sources, model accuracy, and steps taken to mitigate risks.

- Model documentation: As part of development, create documentation like Model Cards (which describe a model’s intended use, performance across groups, etc.) or Algorithmic Impact Assessments for high-impact systems. These documents can be shared with stakeholders, auditors, or regulators to demonstrate due diligence.

- User-facing explainability: Where AI makes decisions affecting people, provide explanations. This could be through explainable AI techniques (e.g. highlighting which factors led to a loan denial) or rule-based surrogate models that approximate the AI’s logic in understandable terms. The goal is to empower people to understand and, if needed, contest AI decisions. For instance, a healthcare AI might show doctors the top factors influencing its diagnosis recommendation, aiding informed decision-making.

- Stakeholder communication: Ensure there are channels for stakeholders (whether customers, employees, or regulators) to ask questions about the AI system and get meaningful answers. This might involve training customer support or field engineers to handle AI-related inquiries. Building understanding is part of transparency.

- Accountability and traceability: Implement technical logging so that you can trace why the AI did what it did. If an unexpected outcome occurs, there should be a record of the input, the model’s internal reasoning (if possible), and which rules or thresholds applied. This traceability is crucial for audits and for continuous improvement. Accountability also means that when something goes wrong, the organization takes responsibility and addresses it openly, rather than blaming the “AI” as if it were independent. Creating an internal “AI accountability report” for major deployments can be a useful exercise to review how well the system aligns with ethical expectations and what corrective actions were taken.
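
The traceability logging described in the last item could look roughly like the sketch below, which writes one JSON line per decision with the input, the output, the threshold applied, and the top contributing factors. The field names, the `build_decision_record` and `append_record` helpers, and the loan example are all hypothetical; a production system would also need access controls, retention rules, and care about logging personal data.

```python
import json
import uuid
from datetime import datetime, timezone


def build_decision_record(model_name, model_version, inputs, output,
                          threshold, top_factors):
    """Assemble one audit record for a single AI decision."""
    return {
        "decision_id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": {"name": model_name, "version": model_version},
        "inputs": inputs,            # consider redacting personal data
        "output": output,
        "threshold": threshold,      # rule or cutoff that was applied
        "top_factors": top_factors,  # e.g. from an explainability method
    }


def append_record(record, path):
    """Append the record as one JSON line so the log stays easy to audit."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")


record = build_decision_record(
    model_name="loan_model",  # hypothetical model
    model_version="1.2.0",
    inputs={"income": 42000, "debt_to_income": 0.61},
    output="deny",
    threshold=0.5,
    top_factors=["debt_to_income"],
)
append_record(record, "decisions.jsonl")
```

Recording the model version alongside each decision matters because it lets an auditor reproduce or contest an outcome against the exact model that produced it.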

Investing in transparency and explainability yields dividends in stakeholder trust. When people understand how an AI system arrives at outcomes and see that an organization is forthright about its AI’s workings, they are more likely to trust and accept it. Moreover, transparency is increasingly demanded by regulations (for example, the EU AI Act will likely require user notifications and explanations for high-risk AI). By operationalizing these practices now, organizations stay ahead of compliance and build a strong reputation for ethical AI leadership.

An often underappreciated aspect of AI governance is cultivating an ethical AI culture through stakeholder engagement. Technology doesn’t exist in a vacuum, and involving the people who design, use, and are affected by AI leads to better outcomes:

- Inclusive development: Engaging diverse stakeholders (e.g. people from different demographics, backgrounds, and expertise) in the AI design process helps surface blind spots. As Partnership on AI experts note, bringing in diverse stakeholder groups can help foresee and address risks and harms before they manifest, and steers product development toward outcomes meaningful to a broad user base. In practice, this could mean co-design sessions with end-users, consultations with disability advocates for an AI tool that affects them, or including frontline employees in the development loop of an AI system that will affect their work.

- Cross-functional ethics reviews: Encourage open dialogue about ethics across the company. Regular ethics review meetings (involving product managers, engineers, legal, ethics officers, etc.) for AI projects create a forum to voice concerns and debate solutions. Over time, this builds an internal culture where ethics is a shared priority. Leadership should champion these discussions, so teams know that doing the right thing is valued as much as shipping features.

- Training and empowerment: Provide ethics training that isn’t just check-the-box, but genuinely engages employees with real scenarios and dilemmas they might face when building or deploying AI. Empower employees at all levels to speak up if they notice ethical issues, perhaps through an anonymous reporting channel or by integrating ethics checkpoints into agile processes. An ethical culture means ethical thinking becomes part of everyone’s job, not an external mandate.

- External stakeholder communication: Maintaining dialogue with external stakeholders (customers, regulators, industry groups, and the public) is also key. Solicit feedback and listen to concerns about your AI products. Participate in industry efforts (forums, standards bodies) to share best practices and stay aligned with societal expectations. By engaging with the broader community, organizations can adapt their governance to emerging norms and demonstrate accountability.

Crucially, stakeholder engagement isn’t just about avoiding negatives; it positively drives innovation. Inclusive and ethical design often leads to more robust, user-friendly, and widely accepted AI systems. It also helps organizations anticipate public sentiment and regulatory shifts, making them more resilient in a fast-changing environment. In essence, an ethical AI culture grounded in stakeholder involvement becomes a competitive advantage: it is easier to trust and adopt AI that has been shaped with many voices at the table.

In the drive to harness AI’s potential, organizations must equally prioritize governance and ethics to ensure that potential doesn’t come at the cost of public trust or human rights. By establishing a strong ethical governance framework (grounded in clear principles like transparency, fairness, and accountability, and activated through concrete processes and stakeholder engagement), companies can confidently deploy Autonomous and Intelligent Systems that are effective and aligned with society’s values. The reward is twofold: reduced risk of harm or compliance violations, and an enhanced reputation as a responsible innovator. As guidelines and regulations tighten globally, those who have invested in ethical AI practices will find themselves well-positioned to thrive in an environment where trust is the currency of success. In summary, ethical governance isn’t a hindrance to AI progress; it is the strategy that ensures AI’s benefits are realized sustainably and broadly, securing the license to operate and innovate with AI for the long term.

1. NIST AI Risk Management Framework (AI RMF)

2. OECD Principles on Artificial Intelligence

3. Cisco’s Responsible AI Framework

4. IBM AI Fairness 360 Toolkit

5. Explainable AI (XAI) Methods: SHAP and LIME

6. Model Cards for Transparent AI Reporting (Google AI)

8. Partnership on AI — Stakeholder Inclusion for Responsible AI