Cerebras Systems today announced what it said is record-breaking performance for DeepSeek-R1-Distill-Llama-70B inference, reaching more than 1,500 tokens per second, which the company says is 57 times faster than GPU-based solutions.

Cerebras said this speed enables instant reasoning capabilities for one of the industry’s most sophisticated open-weight models, running entirely on U.S.-based AI infrastructure with zero data retention.

“DeepSeek R1 represents a new frontier in AI reasoning capabilities, and today we’re making it available at the industry’s fastest speeds,” said Hagay Lupesko, SVP of AI Cloud, Cerebras. “By reaching more than 1,500 tokens per second on our Cerebras Inference platform, we’re transforming minutes-long reasoning processes into near-instantaneous responses, fundamentally changing how developers and enterprises can leverage advanced AI models.”

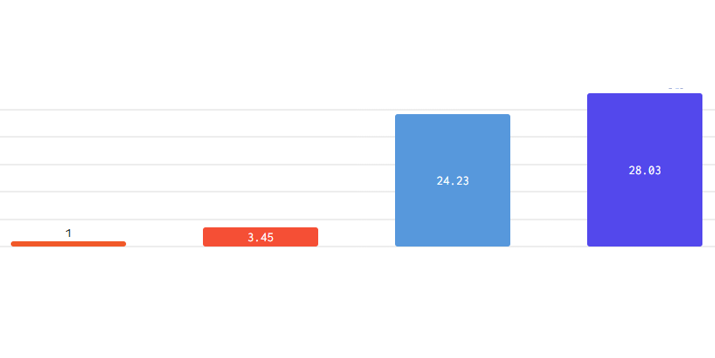

Powered by the Cerebras Wafer Scale Engine, the platform demonstrates real-world performance improvements. A standard coding prompt that takes 22 seconds on competitive platforms completes in just 1.5 seconds on Cerebras, a 15x improvement in time to result. This breakthrough enables practical deployment of sophisticated reasoning models that traditionally require extensive computation time.
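
The 15x figure follows from the two timings quoted above. As a quick check, here is a minimal Python sketch of the arithmetic. The helper name `speedup` is purely illustrative and is not part of any Cerebras API.

```python
# Timings quoted in the announcement: seconds to complete one coding prompt.
competitor_seconds = 22.0
cerebras_seconds = 1.5


def speedup(baseline_seconds: float, new_seconds: float) -> float:
    """Return how many times faster the new time is than the baseline."""
    if new_seconds <= 0:
        raise ValueError("new_seconds must be positive")
    return baseline_seconds / new_seconds


ratio = speedup(competitor_seconds, cerebras_seconds)
print(f"Speedup: {ratio:.1f}x")  # prints "Speedup: 14.7x"
```

The ratio is about 14.7, which the announcement rounds to 15x.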

DeepSeek-R1-Distill-Llama-70B combines the advanced reasoning capabilities of DeepSeek’s 671B parameter Mixture of Experts (MoE) model with Meta’s widely supported Llama architecture. Despite its efficient 70B parameter size, the model demonstrates superior performance on complex mathematics and coding tasks compared to larger models.

“Security and privacy are paramount for enterprise AI deployment,” continued Lupesko. “By processing all inference requests in U.S.-based data centers with zero data retention, we’re ensuring that organizations can leverage cutting-edge AI capabilities while maintaining strict data governance standards. Data stays in the U.S. 100% of the time and belongs solely to the customer.”

The DeepSeek-R1-Distill-Llama-70B model is available immediately through Cerebras Inference, with API access available to select customers through a developer preview program. For more information about accessing instant reasoning capabilities for applications, visit www.cerebras.ai/contact-us.