Install Ollama by downloading it from the official website for macOS (Apple M-series) or Windows, or use the command line on Linux:

```shell
curl -fsSL | sh
```

This installs the model runner with automatic GPU acceleration (Metal on Apple M-series, CUDA on NVIDIA).

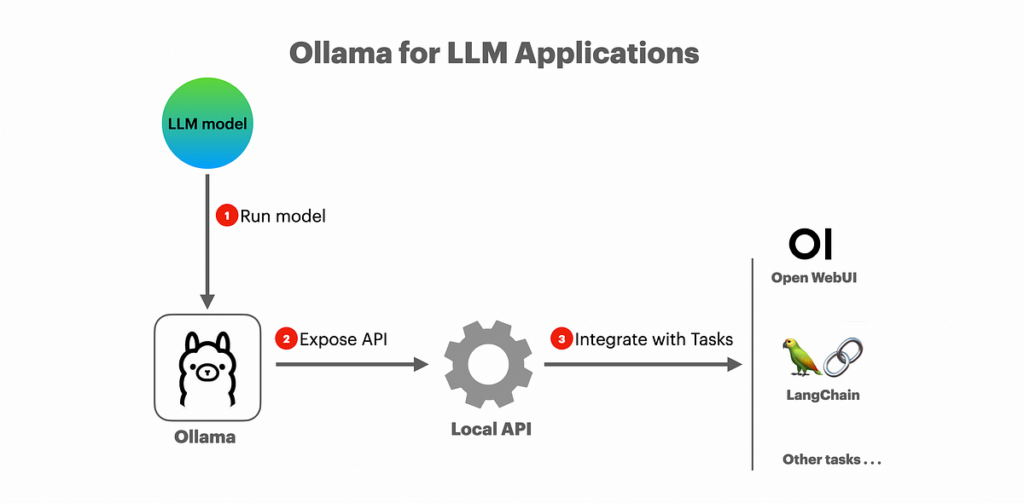

Think of Ollama as a tool that downloads the DeepSeek-R1 model and hosts it locally on your machine, so that other applications can use it.

Choose a model variant based on your hardware. You can find a list of DeepSeek-R1 versions at https://ollama.com/library/deepseek-r1. These versions are smaller than the original DeepSeek-R1 model because they are distilled versions: think of it as a teacher (the original model) passing knowledge to students (the smaller models).

While these compact versions retain much of the original model's capabilities, their smaller size means lower latency and reduced hardware requirements. Generally, larger models are more powerful, but for local hosting, choose one that fits within your GPU's memory (VRAM).
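To make "fits within your GPU's memory" concrete, here is a back-of-the-envelope sketch. It assumes roughly 4 bits per weight (about what Ollama's default quantized tags use) plus a hypothetical 20% allowance for the KV cache and runtime buffers. The tag list mirrors the library page at the time of writing and may change, and the helper names are illustrative, not part of Ollama.

```python
from typing import Optional

# Parameter counts (billions) for the DeepSeek-R1 tags listed on the Ollama
# library page at the time of writing; check the page for the current list.
DEEPSEEK_R1_TAGS = {
    "deepseek-r1:1.5b": 1.5,
    "deepseek-r1:7b": 7.0,
    "deepseek-r1:8b": 8.0,
    "deepseek-r1:14b": 14.0,
    "deepseek-r1:32b": 32.0,
    "deepseek-r1:70b": 70.0,
    "deepseek-r1:671b": 671.0,
}


def estimate_memory_gb(params_billion: float, bits_per_weight: float = 4,
                       overhead: float = 1.2) -> float:
    """Rough memory needed to load a model, in GB.

    Assumptions: weights quantized to about bits_per_weight bits each, plus a
    hypothetical 20% overhead for the KV cache and runtime buffers.
    """
    weight_bytes = params_billion * 1e9 * bits_per_weight / 8
    return weight_bytes * overhead / 1e9


def pick_largest_fitting(vram_gb: float) -> Optional[str]:
    """Return the largest tag whose estimate fits in vram_gb, or None."""
    fitting = [
        (params, tag)
        for tag, params in DEEPSEEK_R1_TAGS.items()
        if estimate_memory_gb(params) <= vram_gb
    ]
    # max() compares tuples by parameter count first
    return max(fitting)[1] if fitting else None
```

Under these assumptions the 1.5B model needs about 0.9GB, in line with the ~1GB figure below. With 8GB the sketch picks `deepseek-r1:8b`, but when that memory is shared with the operating system (as on an 8GB MacBook Air), a smaller tag is the safer choice. Treat the numbers as rough estimates, not guarantees.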

Fortunately, there is a compact model, DeepSeek-R1-Distill-Qwen-1.5B, that uses only ~1GB of VRAM and can even run on an M1 MacBook Air with 8GB of memory. We'll use this model as our example, but feel free to explore the larger models listed at https://ollama.com/library/deepseek-r1.

Pull and host the DeepSeek-R1 model with Ollama using this command:

```shell
ollama run deepseek-r1:1.5b  # Balanced speed/quality (~1.1GB VRAM)
```
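Ollama also serves pulled models over a local HTTP API, at http://localhost:11434 by default. A minimal standard-library sketch to confirm the model is available, assuming the `GET /api/tags` endpoint returns a JSON object with a `models` list whose entries carry a `name` field (`model_names` and `list_local_models` are hypothetical helpers):

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def model_names(tags_payload: dict) -> list[str]:
    """Extract model names from a GET /api/tags response body."""
    return [m["name"] for m in tags_payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask the running Ollama server which models have been pulled."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return model_names(json.load(resp))
```

Once the pull finishes, `"deepseek-r1:1.5b" in list_local_models()` should be `True`; `ollama list` on the command line shows the same information.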

If you want to experience the DeepSeek model as a ChatGPT-like chatbot, you can add a chat UI that works seamlessly with the locally hosted Ollama model.

Deploy Open WebUI with Docker for a user-friendly chatbot experience:

```shell
docker run -d -p 3000:8080 \
  -v ollama:/root/.ollama \
  -v open-webui:/app/backend/data \
  --add-host=host.docker.internal:host-gateway \
  --name open-webui \
  ghcr.io/open-webui/open-webui:main
```

Visit http://localhost:3000, create an account, and select deepseek-r1:1.5b from the model dropdown.

Using a local LLM with the chat UI offers several benefits:

- Easy access to try different open-source models

- Offline, local chat, so you can use a ChatGPT-like assistant to boost productivity even without internet access

- Enhanced privacy, since Ollama and Open WebUI keep all chat interactions local, preventing leaks of sensitive information

Once DeepSeek-R1 is hosted locally, you as a developer can easily integrate it into your applications, since Ollama exposes an OpenAI-compatible API at http://localhost:11434/v1:

```python
from openai import OpenAI

# Configure the client to use Ollama's local endpoint
client = OpenAI(
    base_url="http://localhost:11434/v1",  # Ollama server address
    api_key="no-api-key-needed",  # Ollama doesn't require an API key
)
```
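DeepSeek-R1 typically writes its reasoning inside `<think>...</think>` tags before the final answer, so the reply text returned by the client above usually contains both. A small sketch for separating them, assuming that tag format (`split_reasoning` is a hypothetical helper, not part of the OpenAI SDK):

```python
import re

# Assumes the reply embeds its reasoning as <think>...</think>;
# DOTALL lets the reasoning span multiple lines.
_THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(content: str) -> tuple[str, str]:
    """Split a DeepSeek-R1 reply into (reasoning, answer)."""
    match = _THINK_RE.search(content)
    if match is None:
        # No think block: treat the whole reply as the answer
        return "", content.strip()
    reasoning = match.group(1).strip()
    # Everything outside the think block is the final answer
    answer = (content[:match.start()] + content[match.end():]).strip()
    return reasoning, answer
```

For example, you could pass it `response.choices[0].message.content`, where `response = client.chat.completions.create(model="deepseek-r1:1.5b", messages=[{"role": "user", "content": "Why is the sky blue?"}])`. If your Ollama version already strips the tags, the helper simply returns an empty reasoning string.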

Or, with LangChain:

```python
from langchain_ollama import ChatOllama

# Configure the Ollama client to use the local endpoint
llm = ChatOllama(
    base_url="http://localhost:11434",  # Ollama server address
    model="deepseek-r1:1.5b",  # The model you host in Ollama
)
```
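If you would rather not install any client library, Ollama's native REST API can also be called with Python's standard library alone. A minimal sketch, assuming `POST /api/chat` with `"stream": false` returns a single JSON object whose `message.content` holds the reply; `build_chat_request` and `chat` are hypothetical helper names:

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # Ollama's native chat endpoint


def build_chat_request(prompt: str, model: str = "deepseek-r1:1.5b",
                       url: str = OLLAMA_CHAT_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for the /api/chat endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # one JSON object instead of a stream of chunks
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, model: str = "deepseek-r1:1.5b") -> str:
    """Send the prompt to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, model), timeout=120) as resp:
        reply = json.load(resp)
    return reply["message"]["content"]
```

`chat("Why is the sky blue?")` returns the model's reply, including any `<think>` section. Keeping request construction in its own function makes it easy to inspect or test without a running server.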

All the related code and setup can be found here:

Here's a quick overview of the process:

With Ollama and DeepSeek-R1, you can now run powerful AI locally with GPU acceleration, use a ChatGPT-like interface through Open WebUI, and integrate AI capabilities into your applications through standard APIs, all while keeping your data private and working offline.