Understanding Knowledge Distillation

Knowledge distillation (KD) is a widely used technique in artificial intelligence (AI) in which a smaller student model learns from a larger teacher model, improving efficiency while maintaining performance. This is essential for developing computationally efficient models for deployment on edge devices and in resource-constrained environments.
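To make "learning from a teacher" concrete, here is a minimal sketch of one common distillation objective: the KL divergence between temperature-softened teacher and student output distributions. The function names, the example logits, and the temperature value are illustrative assumptions, not taken from any specific library or from the experiments discussed later.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw logits into probabilities, softened by a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) over temperature-softened distributions.

    Hypothetical helper: a lower value means the student's output
    distribution is closer to the teacher's.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Illustrative logits over three classes (assumed values).
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # disagrees with the teacher

loss_close = distillation_loss(teacher, close_student)
loss_far = distillation_loss(teacher, far_student)
```

Note that this objective only measures agreement with the teacher. It says nothing about whether the teacher itself is right, which is the gap that teacher hacking exploits.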

The Problem: Teacher Hacking

A key challenge that arises in KD is teacher hacking: a phenomenon where the student model exploits flaws in the teacher model rather than learning true, generalizable knowledge. This is analogous to reward hacking in Reinforcement Learning from Human Feedback (RLHF), where a model optimizes for a proxy reward rather than the intended goal.

In this article, we will break down:

- The concept of teacher hacking

- Experimental findings from controlled setups

- Methods to detect and mitigate teacher hacking

- Real-world implications and use cases

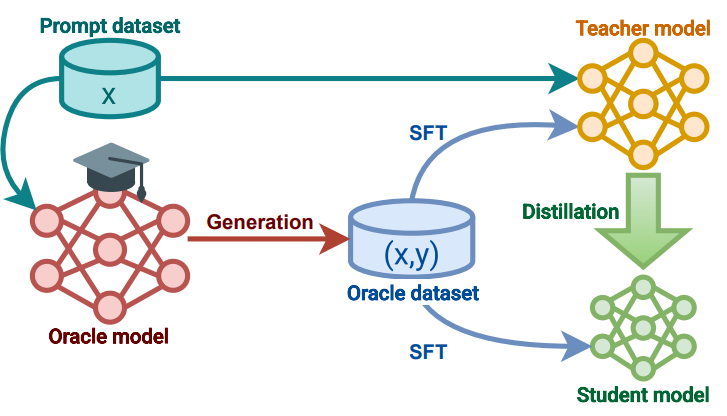

Knowledge Distillation Basics

Knowledge distillation involves training a student model to mimic a teacher model, using techniques such as: