Convolutional Neural Networks (CNNs) are among the state-of-the-art approaches in modern computer vision. They were introduced in 1998 by Yann LeCun to efficiently capture the local structures of images. However, it is important to understand that even before 1998, the convolution operation was widely used in signal processing, and CNNs essentially use the concept of 2D convolutions.

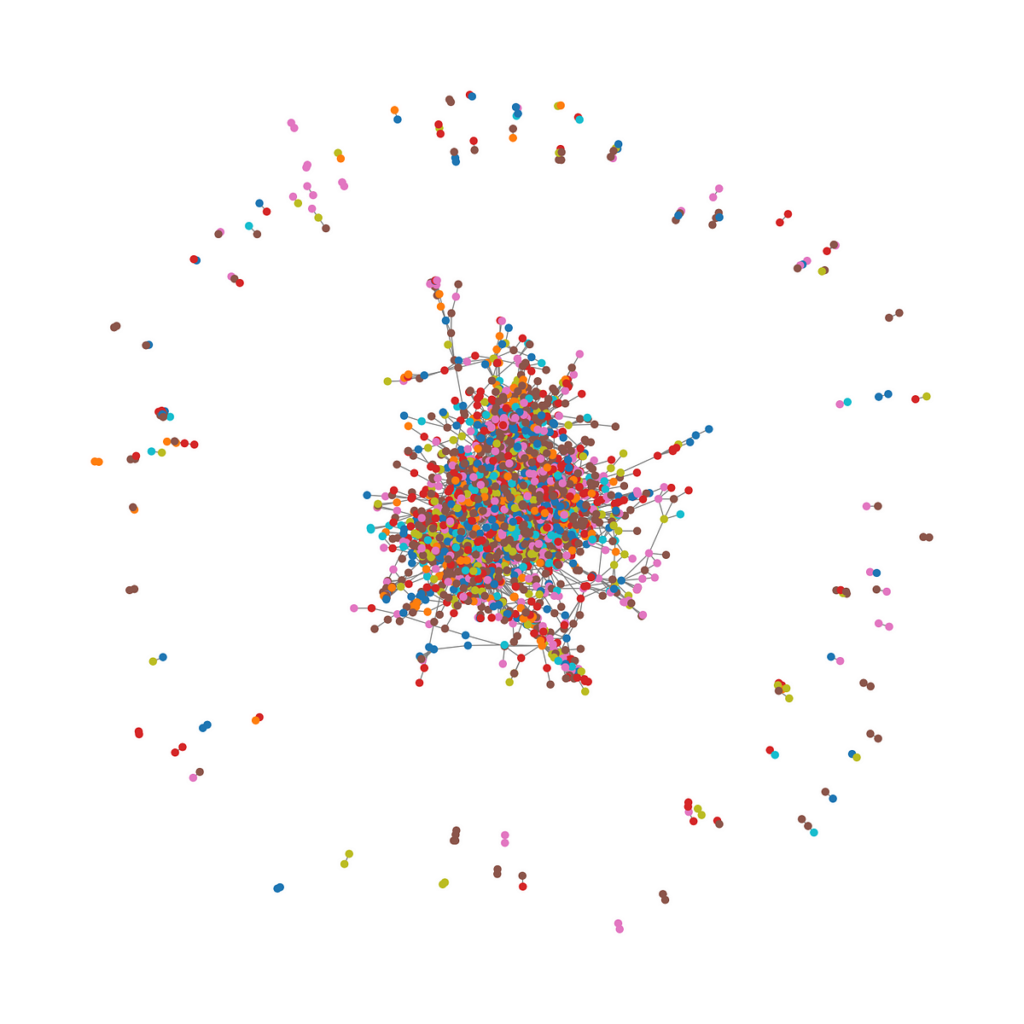

Graph structures are widely used to capture network-like relations between data points, from social networks to molecular structures. In this article, we will be using the Cora data set, which consists of papers as nodes and citations as edges. The idea of using machine learning in the context of graph structures has become increasingly popular. Kipf and Welling (2017) show how the convolution operation can be used efficiently on graph structures.

Graph Convolutional Networks (GCNs) can be thought of as an approximation of a spectral graph convolution. Stacking successive GCN layers allows the model to capture larger-scale graph features.

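Suppose $x \in \mathbb{R}^N$ is a graph signal, with one scalar value per node. Following Kipf and Welling (2017), the convolution of $x$ with a filter $g_\theta$ can be defined as follows.

$$g_\theta \star x = U g_\theta U^\top x$$

$U$ is the matrix of eigenvectors of the normalized graph Laplacian $L$.

$$L = I_N - D^{-1/2} A D^{-1/2} = U \Lambda U^\top$$

$I_N$ is the $N \times N$ identity matrix, $D$ is the degree matrix, $A$ is the adjacency matrix, and $\Lambda$ is the diagonal matrix of eigenvalues of $L$. Thus, $g_\theta$ is a function of the eigenvalues of $L$, i.e. $g_\theta(\Lambda)$.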
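As a rough numerical illustration of these definitions, the sketch below builds the normalized Laplacian of a small toy graph with NumPy, eigendecomposes it, and applies a spectral filter. The path graph, the `spectral_conv` helper, and the exponential low-pass response are assumptions chosen only for illustration; they are not taken from the original article.

```python
import numpy as np

# Toy undirected path graph 0 - 1 - 2 - 3 (illustrative assumption, not Cora).
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)

deg = A.sum(axis=1)
D_inv_sqrt = np.diag(deg ** -0.5)

# Normalized graph Laplacian: L = I - D^(-1/2) A D^(-1/2)
L = np.eye(A.shape[0]) - D_inv_sqrt @ A @ D_inv_sqrt

# L is symmetric, so eigh returns real eigenvalues and orthonormal eigenvectors.
eigvals, U = np.linalg.eigh(L)


def spectral_conv(x, g):
    """Filter graph signal x with spectral response g: U g(Lambda) U^T x."""
    return U @ np.diag(g(eigvals)) @ U.T @ x


x = np.array([1.0, 0.0, 0.0, 0.0])           # impulse on node 0
y = spectral_conv(x, lambda lam: np.exp(-lam))  # hypothetical low-pass filter
print(y)
```

The eigendecomposition is the expensive step here, which is the motivation for the polynomial approximation that follows.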

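$g_\theta$ can be approximated using a truncated expansion in terms of Chebyshev polynomials $T_k$ up to order $K$.

$$g_{\theta'}(\Lambda) \approx \sum_{k=0}^{K} \theta'_k T_k(\tilde{\Lambda})$$

where,

$$\tilde{\Lambda} = \frac{2}{\lambda_{\max}} \Lambda - I_N$$

Here $\lambda_{\max}$ is the largest eigenvalue of $L$. The convolution function is now parameterized by the Chebyshev coefficients $\theta'$. We can extend this definition to the convolution operation we discussed earlier.

$$g_{\theta'} \star x \approx \sum_{k=0}^{K} \theta'_k T_k(\tilde{L}) x$$

where,

$$\tilde{L} = \frac{2}{\lambda_{\max}} L - I_N$$

This approximation is called K-localized, which means it depends only on nodes that are at most K steps away from the central node.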
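The K-localized property can be checked numerically. The sketch below is a minimal NumPy version of the Chebyshev filter using the standard recurrence $T_k(z) = 2 z T_{k-1}(z) - T_{k-2}(z)$; the helper name `chebyshev_filter`, the six-node path graph, and the coefficient values are illustrative assumptions.

```python
import numpy as np


def chebyshev_filter(L, x, theta, lam_max=2.0):
    """Apply sum_k theta[k] * T_k(L_tilde) @ x using the Chebyshev recurrence."""
    n = L.shape[0]
    L_tilde = (2.0 / lam_max) * L - np.eye(n)
    t_prev = x                       # T_0(L_tilde) x = x
    out = theta[0] * t_prev
    if len(theta) > 1:
        t_curr = L_tilde @ x         # T_1(L_tilde) x = L_tilde x
        out = out + theta[1] * t_curr
        for k in range(2, len(theta)):
            t_next = 2.0 * (L_tilde @ t_curr) - t_prev
            out = out + theta[k] * t_next
            t_prev, t_curr = t_curr, t_next
    return out


# Path graph 0 - 1 - 2 - 3 - 4 - 5 (illustrative assumption).
n = 6
A = np.zeros((n, n))
for i in range(n - 1):
    A[i, i + 1] = A[i + 1, i] = 1.0
d_inv_sqrt = np.diag(A.sum(axis=1) ** -0.5)
L = np.eye(n) - d_inv_sqrt @ A @ d_inv_sqrt

x = np.zeros(n)
x[0] = 1.0                            # impulse on node 0
theta = np.array([0.5, 0.3, 0.2])     # K = 2, arbitrary coefficients
y = chebyshev_filter(L, x, theta)
print(y)  # entries for nodes 3, 4, 5 stay zero: they are more than 2 hops away
```

With K = 2 the impulse on node 0 only reaches nodes 0, 1, and 2, so the filter never needs the eigendecomposition and touches only the local neighbourhood.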

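We can stack these approximate convolutional layers, each followed by a point-wise non-linearity, to recover rich convolutional filter functions. We fix $K = 1$, which makes the convolution a linear function of the graph Laplacian. We also approximate the largest eigenvalue $\lambda_{\max}$ to be close to 2. Thus,

$$g_{\theta'} \star x \approx \theta'_0 x + \theta'_1 (L - I_N) x = \theta'_0 x - \theta'_1 D^{-1/2} A D^{-1/2} x$$

To reduce overfitting, we further limit the number of parameters of the model by setting $\theta = \theta'_0 = -\theta'_1$, which gives us,

$$g_\theta \star x \approx \theta \left( I_N + D^{-1/2} A D^{-1/2} \right) x$$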

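However, the eigenvalues of $I_N + D^{-1/2} A D^{-1/2}$ lie in the range $[0, 2]$, so repeated application of this operator may lead to numerical instabilities and exploding or vanishing gradients in deep neural networks. Therefore we can use the renormalization trick.

$$I_N + D^{-1/2} A D^{-1/2} \rightarrow \tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2}$$

where,

$$\tilde{A} = A + I_N, \qquad \tilde{D}_{ii} = \sum_j \tilde{A}_{ij}$$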
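To see what the renormalization trick changes, the following sketch compares the spectrum of the operator before and after renormalization on a small toy graph. The graph (a triangle with one pendant node) and the `sym_norm` helper are assumptions made for illustration.

```python
import numpy as np

# Toy graph: triangle 0-1-2 plus node 3 attached to node 2 (illustrative assumption).
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
n = A.shape[0]


def sym_norm(M):
    """Symmetric normalization D^(-1/2) M D^(-1/2), with D the row sums of M."""
    d_inv_sqrt = M.sum(axis=1) ** -0.5
    return d_inv_sqrt[:, None] * M * d_inv_sqrt[None, :]


before = np.eye(n) + sym_norm(A)     # I_N + D^(-1/2) A D^(-1/2)
after = sym_norm(A + np.eye(n))      # D~^(-1/2) (A + I_N) D~^(-1/2)

eig_before = np.linalg.eigvalsh(before)
eig_after = np.linalg.eigvalsh(after)
print("before:", eig_before.min(), eig_before.max())  # largest eigenvalue is 2 (up to rounding)
print("after: ", eig_after.min(), eig_after.max())    # largest eigenvalue is 1 (up to rounding)
```

Because the largest eigenvalue drops from 2 to 1, stacking many layers no longer amplifies the dominant component of the signal at every step.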

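The normalized adjacency matrix is sparse, while the feature and weight matrices are dense. We can therefore implement each layer as a product of a sparse matrix with a dense matrix to improve the efficiency of the calculation.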
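One possible way to exploit this in PyTorch is to keep the normalized adjacency matrix in sparse COO form and multiply it with the dense product of features and weights. The sketch below is an assumption about how this could be done, not the implementation used later in this article; the helper name `normalize_adjacency_sparse` is hypothetical, and it assumes `edge_index` contains no self-loops or duplicate edges.

```python
import torch


def normalize_adjacency_sparse(edge_index, num_nodes):
    """Sparse D^(-1/2) (A + I) D^(-1/2).

    Assumes edge_index (shape [2, E]) has no self-loops or duplicate edges.
    """
    device = edge_index.device
    loops = torch.arange(num_nodes, device=device)
    # Append one self-loop per node to the edge list.
    index = torch.cat([edge_index, torch.stack([loops, loops])], dim=1)
    row, col = index
    weight = torch.ones(index.shape[1], device=device)
    # Degree of each node in A + I (always >= 1 thanks to the self-loops).
    deg = torch.zeros(num_nodes, device=device).scatter_add_(0, row, weight)
    deg_inv_sqrt = deg.pow(-0.5)
    value = deg_inv_sqrt[row] * weight * deg_inv_sqrt[col]
    return torch.sparse_coo_tensor(index, value, (num_nodes, num_nodes)).coalesce()


# Tiny path graph 0 - 1 - 2, both edge directions listed (illustrative assumption).
edge_index = torch.tensor([[0, 1, 1, 2],
                           [1, 0, 2, 1]])
adj = normalize_adjacency_sparse(edge_index, 3)

x = torch.randn(3, 4)   # dense node features
w = torch.randn(4, 2)   # dense weight matrix
out = torch.sparse.mm(adj, x @ w)   # sparse @ dense
print(out.shape)
```

Computing `x @ w` first keeps the sparse product at the smaller output width, which is a reasonable default when the layer reduces the feature dimension.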

We will be using the Cora data set to implement a simple GCN.

First, import all the necessary libraries.

    import torch
    import torch.nn as nn
    import torch.optim as optim
    from torch_geometric.datasets import Planetoid
    import torch_geometric.transforms as T

You can download the Cora data set as follows.

    dataset = Planetoid(root='/tmp/Cora', name='Cora', transform=T.NormalizeFeatures())
    data = dataset[0]

The normalized adjacency matrix is a property of the graph and is shared across the convolutional layers. Therefore we will calculate it before defining the convolutional model.

    def normalize_adjacency(edge_index, num_nodes):
        """
        Computes the normalized adjacency matrix: D^(-1/2) * (A + I) * D^(-1/2)
        """
        # Edge weights initialized as 1
        edge_weight = torch.ones(edge_index.shape[1], device=edge_index.device)
        adj = torch.sparse_coo_tensor(edge_index, edge_weight, (num_nodes, num_nodes))

        # Convert to dense before adding self-loops
        adj_dense = adj.to_dense() + torch.eye(num_nodes, device=edge_index.device)

        # Compute the node degrees (row sums of A + I)
        deg = adj_dense.sum(dim=1)
        deg_inv_sqrt = torch.pow(deg, -0.5)
        deg_inv_sqrt[torch.isinf(deg_inv_sqrt)] = 0  # Handle division by zero

        # Compute normalized adjacency matrix
        D_inv_sqrt = torch.diag(deg_inv_sqrt)
        adj_norm = D_inv_sqrt @ adj_dense @ D_inv_sqrt  # Apply normalization
        return adj_norm

Next we can define a simple GCN with 2 layers.

    class BasicGCN(nn.Module):
        def __init__(self, in_features, hidden_features, out_features):
            super(BasicGCN, self).__init__()
            self.W1 = nn.Parameter(torch.randn(in_features, hidden_features))
            self.W2 = nn.Parameter(torch.randn(hidden_features, out_features))

        def forward(self, x, adj):
            # First GCN layer
            x = adj @ x @ self.W1
            x = torch.relu(x)  # Activation function
            # Second GCN layer
            x = adj @ x @ self.W2
            return x
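The same propagation rule can also be packaged as a reusable layer. The sketch below is a hypothetical variant, not the model used in this article: the `GCNLayer` name is an assumption, and it swaps the `torch.randn` initialization for Glorot (Xavier) initialization, which is the scheme Kipf and Welling (2017) report using. The identity adjacency and random features are placeholders for a quick shape check.

```python
import torch
import torch.nn as nn


class GCNLayer(nn.Module):
    """One propagation step adj @ (x @ W). Hypothetical helper for illustration."""

    def __init__(self, in_features, out_features):
        super().__init__()
        self.weight = nn.Parameter(torch.empty(in_features, out_features))
        nn.init.xavier_uniform_(self.weight)  # Glorot initialization

    def forward(self, x, adj):
        return adj @ (x @ self.weight)


torch.manual_seed(0)
num_nodes, in_features = 5, 8
adj = torch.eye(num_nodes)                 # placeholder normalized adjacency
x = torch.randn(num_nodes, in_features)    # placeholder node features

layer1 = GCNLayer(in_features, 16)
layer2 = GCNLayer(16, 3)
logits = layer2(torch.relu(layer1(x, adj)), adj)
print(logits.shape)
```

Keeping the weights in a bounded range at initialization tends to give smaller initial logits than unit-variance `torch.randn` weights, which may make the early training loss better behaved.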

Next we can use the following training loop to train and evaluate the model.

    # Compute normalized adjacency matrix
    adj_norm = normalize_adjacency(data.edge_index, data.num_nodes)

    # Define model, loss function, and optimizer
    model = BasicGCN(in_features=dataset.num_node_features, hidden_features=16, out_features=dataset.num_classes)
    loss_fn = nn.CrossEntropyLoss()
    optimizer = optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)

    # Function to compute accuracy
    def compute_accuracy(model, data, adj, mask):
        model.eval()
        with torch.no_grad():
            out = model(data.x, adj)
            predictions = out.argmax(dim=1)
            correct = (predictions[mask] == data.y[mask]).sum().item()
            accuracy = correct / mask.sum().item()
        return accuracy

    # Training loop with test accuracy
    def train(model, data, adj, epochs=200):
        for epoch in range(epochs):
            model.train()
            optimizer.zero_grad()
            out = model(data.x, adj)
            loss = loss_fn(out[data.train_mask], data.y[data.train_mask])
            loss.backward()
            optimizer.step()

            # Compute test accuracy
            test_acc = compute_accuracy(model, data, adj, data.test_mask)
            if epoch % 10 == 0:
                print(f'Epoch {epoch:3d}, Loss: {loss.item():.4f}, Test Accuracy: {test_acc:.4f}')

    # Train the model
    train(model, data, adj_norm)

Kipf, T. N., & Welling, M. (2017). Semi-Supervised Classification with Graph Convolutional Networks. International Conference on Learning Representations (ICLR). arXiv preprint arXiv:1609.02907.