These days, many web developers are losing jobs to AI, especially newcomers who barely know how to code properly. Meanwhile, AI jobs are at the top of the game, offering high salaries and strong career growth to people who can build advanced AI-automated apps. Learning AI can be challenging at first because of its many prerequisites and the math that works behind the scenes. Most web developers are also unfamiliar with how to write and understand code built with TensorFlow, NumPy, pandas, and Python around complex matrix data structures such as 2D arrays, or with how the architectures of deep neural networks, CNNs, RNNs, Transformers, other cutting-edge algorithms, and pretrained models work. In this blog, I will give you a proper guideline for learning it on your own, efficiently, from scratch to finish, including which code to study and analyze and which research papers to read so you can build more knowledge on top of that. I can confidently say it will feel magical once you get the hang of it.

Learn SD (standard deviation), median, mode, mean, outliers, probability distributions, the normal distribution, sets, and logic gates (XOR, OR, AND).
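As a quick warm-up, here is a small sketch that computes several of these statistics with Python’s built-in `statistics` module. The sample data is made up, and the 1.5 × IQR outlier rule is one common convention (a z-score cutoff is another), not the only option.

```python
import statistics

# A small, made-up sample with one suspiciously large value
data = [12, 15, 14, 10, 15, 18, 11, 95]

mean = statistics.mean(data)
median = statistics.median(data)
mode = statistics.mode(data)
std_dev = statistics.stdev(data)  # sample standard deviation (n - 1 in the denominator)

# Flag outliers with the 1.5 * IQR rule: anything far outside the middle 50% of the data
q1, _, q3 = statistics.quantiles(data, n=4)
iqr = q3 - q1
low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = [x for x in data if x < low or x > high]

print(f"mean={mean:.2f}, median={median}, mode={mode}, sd={std_dev:.2f}")
print("outliers:", outliers)
```

Notice how the single outlier pulls the mean far above the median, which is why the median is often preferred for skewed data.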

- Python Fundamentals: Introduction to Python syntax, variables, data types, loops, and functions.

- List Comprehension: Efficient list operations with Python’s one-line comprehension syntax.

- Lambda Functions: Anonymous functions and their use cases in Python.

- Dictionaries in Python: Working with key-value pairs, dictionary methods, and best practices.
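A minimal sketch of these three Python features, using made-up data:

```python
# List comprehension: squares of the even numbers from 0 to 9
squares_of_evens = [n * n for n in range(10) if n % 2 == 0]

# Lambda: an anonymous one-off function, here used as a sort key (sort by last letter)
words = ["banana", "kiwi", "apple", "fig"]
by_last_letter = sorted(words, key=lambda w: w[-1])

# Dictionary: count how many words have each length, using .get() for a default of 0
length_counts = {}
for w in words:
    length_counts[len(w)] = length_counts.get(len(w), 0) + 1

# Dictionary comprehension, then iterate over key-value pairs with .items()
word_to_len = {w: len(w) for w in words}
for word, size in word_to_len.items():
    print(f"{word}: {size}")
```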

- Matrix Addition & Multiplication: Performing basic matrix operations.

- Identity & Transpose: Understanding identity matrices and transposition.

- Matrix Inversion: Computing the inverse of a matrix.
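These matrix operations map directly onto NumPy. A small sketch with two made-up 2×2 matrices (note that `*` is element-wise, while `@` is true matrix multiplication):

```python
import numpy as np

A = np.array([[2.0, 1.0], [1.0, 3.0]])
B = np.array([[1.0, 0.0], [4.0, 2.0]])

added = A + B              # element-wise addition
product = A @ B            # matrix multiplication: rows of A times columns of B
elementwise = A * B        # NOT matrix multiplication: element-wise product
identity = np.eye(2)       # 2x2 identity matrix
transposed = B.T           # swap rows and columns
A_inv = np.linalg.inv(A)   # inverse exists because det(A) = 2*3 - 1*1 = 5 != 0

# A matrix times its inverse gives the identity (up to floating-point error)
print(np.allclose(A @ A_inv, identity))
print(product)
```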

- Pandas for Data Handling: DataFrames, Series, filtering, and data manipulation.

- Matplotlib for Visualization: Creating plots, graphs, and charts for data analysis.

- NumPy for Mathematical Operations: Handling arrays, linear algebra, and numerical computing.

- Scikit-Learn for Machine Learning: Implementing ML algorithms and model evaluation.

- Deep Learning with TensorFlow & PyTorch: Building neural networks and AI models.
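To get a feel for pandas, here is a short sketch of filtering, grouping, and adding a column. The toy DataFrame is hypothetical; a real dataset would usually be loaded with `pd.read_csv`.

```python
import pandas as pd

# Hypothetical toy data
df = pd.DataFrame({
    "city": ["A", "B", "A", "C", "B"],
    "temp_c": [31, 18, 29, 22, 21],
})

hot_days = df[df["temp_c"] > 25]                   # boolean filtering
avg_by_city = df.groupby("city")["temp_c"].mean()  # aggregate per group (returns a Series)
df["temp_f"] = df["temp_c"] * 9 / 5 + 32           # vectorized new column, no loop needed

print(hot_days)
print(avg_by_city)
```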

- Linear Regression (Numerical Prediction): Understanding linear regression, its mathematical foundation, and implementation in Python.

- Logistic Regression (Classification): How logistic regression works for binary and multi-class classification.

- Support Vector Machine (SVM) (Classification): Introduction to SVMs, the kernel trick, and hyperplane separation.

- Decision Tree (Classification): How decision trees work, entropy, Gini index, and pruning techniques.

- Random Forest (Ensemble, Classification): Improving classification performance using an ensemble of decision trees.

- K-Means Clustering (Clustering Algorithm): An unsupervised learning approach for grouping similar data points.

- Principal Component Analysis (PCA) (Dimensionality Reduction): Reducing data dimensions while retaining important information, often as a step before clustering or visualization.

- XGBoost (Classification, Regression & Strong Performance): Introduction to XGBoost, why it is powerful, and its use in ML competitions.
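To see the mathematical foundation of linear regression, the sketch below fits a line by least squares with NumPy and checks that scikit-learn’s `LinearRegression` finds the same slope and intercept. The data is synthetic, generated from y = 3x + 2 plus noise.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic data from y = 3x + 2 plus a little Gaussian noise
rng = np.random.default_rng(0)
x = rng.uniform(0, 10, size=50)
y = 3 * x + 2 + rng.normal(0, 0.5, size=50)

# Least squares: minimize ||Xw - y||^2, with a column of ones so w includes the intercept
X = np.column_stack([x, np.ones_like(x)])
(slope, intercept), *_ = np.linalg.lstsq(X, y, rcond=None)

# scikit-learn fits the same model (it expects a 2D feature array)
sk = LinearRegression().fit(x.reshape(-1, 1), y)

print(f"NumPy:   slope={slope:.3f}, intercept={intercept:.3f}")
print(f"sklearn: slope={sk.coef_[0]:.3f}, intercept={sk.intercept_:.3f}")
```

Both recover values close to the true slope 3 and intercept 2; the small gap comes from the added noise.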

- The Importance of Training Data (essential)

- Learning what train and test data are, for evaluation and accuracy checks

- Tuning hyperparameters for better results

- Automated hyperparameter tuning techniques (Grid Search)

- Evaluation metrics such as:
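Grid search simply tries every combination of hyperparameters with cross-validation and keeps the best one. A sketch using scikit-learn’s `GridSearchCV`, with the bundled Iris dataset and an SVM as an arbitrary example; the grid values are illustrative, not recommendations:

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Hold out a test set that the search never sees
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Every combination is scored with 5-fold cross-validation on the training set only
param_grid = {"C": [0.1, 1, 10], "kernel": ["linear", "rbf"], "gamma": ["scale", 0.1]}
search = GridSearchCV(SVC(), param_grid, cv=5)
search.fit(X_train, y_train)

print("best params:", search.best_params_)
print("cv accuracy:", round(search.best_score_, 3))
print("test accuracy:", round(search.score(X_test, y_test), 3))
```

Reporting the held-out test accuracy separately from the cross-validation score keeps the tuning step from leaking into the final evaluation.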

  - Mean Squared Error (MSE) (Regression Evaluation): Understanding MSE and its role in measuring regression model accuracy.

  - Precision, Recall, and F1-score (Classification Metrics): How these metrics work together to evaluate classification performance.

  - Confusion Matrix (Classification Evaluation): Visualizing model performance with a confusion matrix.

  - ROC Curve & AUC (Classification Performance): Understanding ROC curves and AUC for binary classification models.

  - Intersection over Union (IoU) (Image-based Evaluation): Measuring object detection accuracy with IoU.

  - Mean Average Precision (mAP) (Detection Accuracy Check): Evaluating object detection models with mAP.
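Most of these metrics are one function call in scikit-learn. The sketch below uses small made-up labels and scores so the numbers are easy to check by hand; the detection metrics (IoU, mAP) are not covered in this sketch.

```python
from sklearn.metrics import (
    confusion_matrix,
    f1_score,
    mean_squared_error,
    precision_score,
    recall_score,
    roc_auc_score,
)

# Regression: MSE is the average of the squared errors
mse = mean_squared_error([3.0, 5.0, 2.0], [2.5, 5.0, 3.0])

# Binary classification on made-up labels
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
y_score = [0.9, 0.1, 0.4, 0.8, 0.3, 0.7, 0.6, 0.2]  # predicted probability of class 1

precision = precision_score(y_true, y_pred)  # TP / (TP + FP)
recall = recall_score(y_true, y_pred)        # TP / (TP + FN)
f1 = f1_score(y_true, y_pred)                # harmonic mean of precision and recall
cm = confusion_matrix(y_true, y_pred)        # rows = true class, columns = predicted class
auc = roc_auc_score(y_true, y_score)         # ranks by scores, not hard labels

print(f"MSE={mse:.3f} precision={precision:.2f} recall={recall:.2f} F1={f1:.2f} AUC={auc:.4f}")
print(cm)
```

Here TP = 3, FP = 1, FN = 1, and TN = 3, so precision, recall, and F1 all come out to 0.75, while AUC uses the scores and rewards the good ranking even where the 0.5 threshold made mistakes.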

Make sure you understand what you are doing, why, and how. Work with proper datasets, do a literature review of how others have contributed to the topic, measure accuracy, fine-tune models, change hyperparameters, try different ML models, and present visuals and tables comparing all of these models on precision, recall, F1-score, confusion matrices, and other evaluation metrics.
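One way to build such a comparison table is sketched below: it trains a few of the classifiers listed earlier on scikit-learn’s bundled breast-cancer dataset and collects accuracy, precision, recall, and F1 into a pandas DataFrame. The dataset, split, and default hyperparameters are arbitrary choices for illustration.

```python
import pandas as pd
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Scale-sensitive models get a StandardScaler in front of them
models = {
    "Logistic Regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "SVM (RBF)": make_pipeline(StandardScaler(), SVC()),
    "Decision Tree": DecisionTreeClassifier(random_state=42),
    "Random Forest": RandomForestClassifier(random_state=42),
}

rows = []
for name, model in models.items():
    model.fit(X_train, y_train)
    pred = model.predict(X_test)
    rows.append({
        "model": name,
        "accuracy": accuracy_score(y_test, pred),
        "precision": precision_score(y_test, pred),
        "recall": recall_score(y_test, pred),
        "f1": f1_score(y_test, pred),
    })

results = pd.DataFrame(rows).set_index("model").round(3)
print(results.sort_values("f1", ascending=False))
```

The resulting DataFrame can be exported with `results.to_markdown()` or plotted with `results.plot.bar()` for the visuals mentioned above.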

Computers cannot understand raw text directly. You must first split it into tokens and then convert those tokens into numbers; for example, “I love coding” might end up as a vector like [0.2, 0.4, 0.6], a format a model can work with. Also, clean the text input properly before feeding it into ML models: remove stop words, tags, and symbols, and apply lemmatization or stemming.
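A minimal sketch of this cleaning-then-vectorizing step, using only the standard library. The stop-word list is a tiny hypothetical one, stemming and lemmatization are left out (libraries such as NLTK or spaCy provide them), and simple word counts stand in for the dense learned vectors like [0.2, 0.4, 0.6] mentioned above:

```python
import re
from collections import Counter

# Tiny illustrative stop-word list (hypothetical; real projects use a fuller list)
STOP_WORDS = {"i", "a", "an", "the", "is", "and", "to", "this"}

def clean_text(text):
    text = re.sub(r"<[^>]+>", " ", text)   # drop HTML/XML tags
    text = text.lower()
    text = re.sub(r"[^a-z\s]", " ", text)  # drop digits, punctuation, and symbols
    return [tok for tok in text.split() if tok not in STOP_WORDS]

docs = ["<p>I love coding!!</p>", "Coding is fun, and I love Python :)"]
tokenized = [clean_text(d) for d in docs]

# Vocabulary maps each token to a column index; each document becomes a vector of counts
vocab = {tok: idx for idx, tok in enumerate(sorted({t for doc in tokenized for t in doc}))}
vectors = []
for doc in tokenized:
    counts = Counter(doc)
    vectors.append([counts.get(tok, 0) for tok in vocab])

print(vocab)
print(vectors)
```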

- Build projects like job parsing, recommendations, PDF parsing and matching, or resume screening that matches resumes against job requirements (cosine similarity + NER)

- Build a simple spam/not-spam email classifier

- Build a sentiment-analysis app

- Build it with Flask or FastAPI, then deploy it to Render, Streamlit, etc.
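For the resume-screening idea, the cosine-similarity half can be sketched with scikit-learn’s TF-IDF vectorizer. The job description and resumes are made up, and the NER half (extracting skills, names, and so on) is not shown:

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity

job = "Python developer with machine learning, pandas and scikit-learn experience"
resumes = {
    "alice": "Built machine learning models in Python using pandas and scikit-learn",
    "bob": "Frontend developer experienced in React, CSS and UI design",
}

# Fit one vocabulary over the job and all resumes so their vectors are comparable
vectorizer = TfidfVectorizer(stop_words="english")
matrix = vectorizer.fit_transform([job] + list(resumes.values()))

# Row 0 is the job; compare it against every resume row
scores = cosine_similarity(matrix[0], matrix[1:]).ravel()
ranking = sorted(zip(resumes, scores), key=lambda pair: pair[1], reverse=True)

for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

TF-IDF only matches exact word forms, so in a real screener you would likely add stemming or sentence embeddings so that "experience" and "experienced" count as a match.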

- Understanding Neural Network Architecture: Overview of neurons, layers, and how a neural network is structured.

- Core Algorithm Behind Neural Networks: The mathematical foundation of neural networks.

- Weights & Biases in Neural Networks: How weights and biases influence model learning.

- Layers in Neural Networks: Understanding input, hidden, and output layers.

- Activation Functions in Neural Networks

  - Sigmoid & Softmax: When and where to use them.

  - Other Activation Functions: ReLU, Leaky ReLU, and Tanh.

- Backpropagation & Chain Rule: Understanding how neural networks learn through error correction.

- Differentiation in Neural Networks: The role of derivatives in optimizing models.

- Gradient Descent & Learning Rates: Exploring optimization techniques for model training.

- Advanced Optimization Techniques: Adam, RMSprop, Momentum, and other optimization algorithms.
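To connect these ideas, the sketch below implements a few activation functions and trains a single sigmoid neuron on the AND gate with plain gradient descent in NumPy. It is deliberately tiny (one neuron, no hidden layer, a fixed learning rate), but the forward pass, chain-rule gradient, and weight update are the same steps backpropagation repeats layer by layer in a deeper network.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    return np.maximum(0.0, z)

def leaky_relu(z, alpha=0.01):
    return np.where(z > 0, z, alpha * z)

def softmax(z):
    e = np.exp(z - np.max(z))  # subtract the max for numerical stability
    return e / e.sum()

# Train one sigmoid neuron on the AND gate (tanh is available as np.tanh)
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1], dtype=float)
w, b, lr = np.zeros(2), 0.0, 1.0

for epoch in range(5000):
    p = sigmoid(X @ w + b)        # forward pass: weighted sum + bias, then activation
    # For sigmoid + binary cross-entropy, the chain rule simplifies to dL/dz = p - y
    dz = (p - y) / len(y)
    w -= lr * (X.T @ dz)          # dL/dw = X^T dz
    b -= lr * dz.sum()            # dL/db = sum(dz)

predictions = (sigmoid(X @ w + b) > 0.5).astype(int)
print(predictions, w.round(2), round(b, 2))
```

XOR, by contrast, is not linearly separable, so a single neuron cannot learn it; that is exactly why hidden layers are needed.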

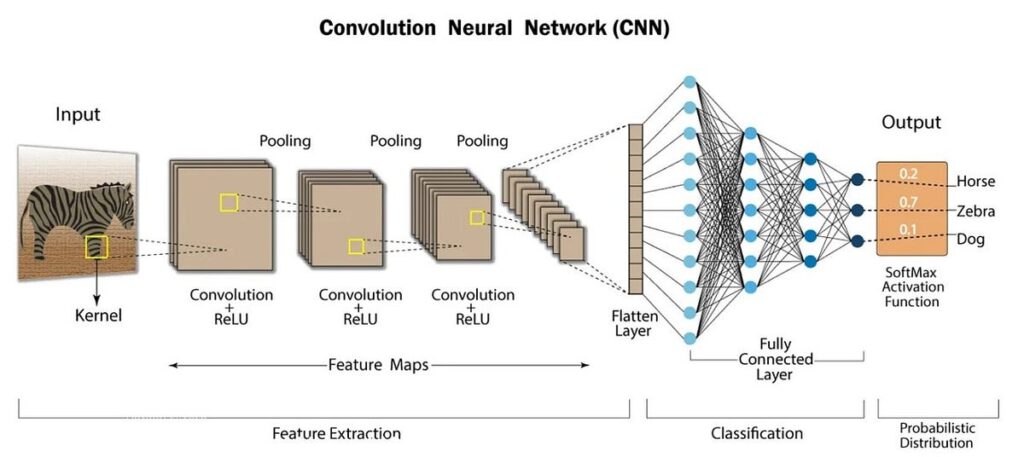

- Learn about CNNs (Convolutional Neural Networks)

- What pooling and padding are, and their use cases

- Input shapes, dimensions, dense layers, and the output layer

- Do basic projects such as cat vs. dog image classification with TensorFlow Keras

- Learn about epochs, batches, model evaluation, etc.
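Pooling and padding mostly change the spatial size of feature maps. The sketch below traces the sizes through a CIFAR-10-style model with two 3×3 convolutions (Keras’s default `'valid'` padding) and two 2×2 pooling layers, and implements 2×2 max pooling in NumPy:

```python
import numpy as np

def conv_output_size(n, kernel, stride=1, padding=0):
    # Standard formula: floor((n + 2*padding - kernel) / stride) + 1
    return (n + 2 * padding - kernel) // stride + 1

def max_pool_2x2(feature_map):
    # 2x2 max pooling with stride 2 on a single-channel (H, W) array; odd edges are dropped
    h, w = feature_map.shape[0] // 2 * 2, feature_map.shape[1] // 2 * 2
    fm = feature_map[:h, :w]
    return fm.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# Trace the spatial size through the model (32x32 input, 'valid' padding)
size = 32
size = conv_output_size(size, 3)   # Conv2D 3x3 -> 30
size = size // 2                   # MaxPooling2D 2x2 -> 15
size = conv_output_size(size, 3)   # Conv2D 3x3 -> 13
size = size // 2                   # MaxPooling2D 2x2 -> 6
flattened = size * size * 64       # 64 filters in the last conv layer
print(size, flattened)

# 'same' padding with a 3x3 kernel pads 1 pixel per side and keeps the size
print(conv_output_size(32, 3, padding=1))

print(max_pool_2x2(np.arange(16).reshape(4, 4)))
```

The flattened size is what the first `Dense` layer receives, which is why changing padding or pooling changes the number of parameters in the dense layers.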

```python
import matplotlib.pyplot as plt
from tensorflow.keras.datasets import cifar10
from tensorflow.keras.layers import Conv2D, Dense, Dropout, Flatten, MaxPooling2D
from tensorflow.keras.models import Sequential

# Load CIFAR-10 dataset
(x_train, y_train), (x_test, y_test) = cifar10.load_data()

# Normalize the pixel values to be between 0 and 1
x_train, x_test = x_train / 255.0, x_test / 255.0

# Check the shape of the dataset
print(f"Training data shape: {x_train.shape}")
print(f"Test data shape: {x_test.shape}")

# Build a simple CNN model
model = Sequential()

# First convolutional block
model.add(Conv2D(32, (3, 3), activation='relu', input_shape=(32, 32, 3)))  # Conv layer with 32 filters
model.add(MaxPooling2D(pool_size=(2, 2)))  # Max-pooling layer

# Second convolutional block
model.add(Conv2D(64, (3, 3), activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))

# Flatten the 2D feature maps into 1D
model.add(Flatten())

# Fully connected layers
model.add(Dense(128, activation='relu'))  # Fully connected layer with 128 neurons
model.add(Dropout(0.5))  # Randomly drop 50% of units during training to reduce overfitting

# Output layer (10 classes for CIFAR-10)
model.add(Dense(10, activation='softmax'))  # 10 classes, softmax activation

# Compile the model (integer labels, so sparse categorical cross-entropy)
model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

# Model summary
model.summary()

# Train the model
history = model.fit(x_train, y_train, epochs=10, batch_size=64, validation_data=(x_test, y_test))

# Plot training & validation accuracy
plt.plot(history.history['accuracy'], label='train accuracy')
plt.plot(history.history['val_accuracy'], label='validation accuracy')
plt.title('Model Accuracy')
plt.xlabel('Epoch')
plt.ylabel('Accuracy')
plt.legend()
plt.show()

# Plot training & validation loss
plt.plot(history.history['loss'], label='train loss')
plt.plot(history.history['val_loss'], label='validation loss')
plt.title('Model Loss')
plt.xlabel('Epoch')
plt.ylabel('Loss')
plt.legend()
plt.show()

# Evaluate the model on the test dataset
test_loss, test_accuracy = model.evaluate(x_test, y_test)
print(f"Test Accuracy: {test_accuracy * 100:.2f}%")
print(f"Test Loss: {test_loss:.2f}")

# Predict on the test dataset
predictions = model.predict(x_test)

# Display the first 5 test images along with predicted and actual labels
for i in range(5):
    plt.imshow(x_test[i])
    plt.title(f"Predicted: {predictions[i].argmax()}, Actual: {y_test[i][0]}")
    plt.show()
```

- Image Preprocessing with OpenCV for CNNs: Techniques like resizing, rotating, flipping, reshaping, normalization, and augmentation.

- Edge Detection & Feature Extraction: Using OpenCV for Sobel, Canny, and contour detection.

- Adding Text, Circles, and Rectangles: Using OpenCV to annotate and mark detected objects and highlight points of interest.

- Adding heatmaps on points of interest, and learning explainable computer vision with Grad-CAM

- Choosing a Pretrained Model for Building Detection: Exploring model architectures like ResNet, EfficientNet, U-Net, Mask R-CNN, and YOLO.

- Introduction to Building Detection with Deep Learning: Overview of detecting buildings in satellite/aerial images.

- Dataset Preparation for Building Detection: Using OpenCV for image preprocessing (resizing, normalization, augmentation).

- Inference and Object Detection with OpenCV: Using a trained CNN model to detect buildings in images.

- Post-processing Results (Bounding Boxes & Masks): Visualizing detection results with OpenCV’s drawing functions.

- Optimizing Model Performance (Thresholding and Filtering): Improving detection accuracy with non-maximum suppression (NMS) and confidence thresholds.

- Deploying the Building Detection Model in a Real-world Application: Running the model in a web or mobile app.

- Evaluating Model Accuracy (IoU & mAP for Detection Systems): Measuring model performance using Intersection over Union (IoU) and Mean Average Precision (mAP).

- Future Improvements (Fine-tuning & Transfer Learning): Customizing the pretrained model with domain-specific data for better accuracy, for example human emotion detection with custom data.
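IoU and non-maximum suppression are short enough to write by hand. A sketch in plain Python and NumPy, assuming boxes are given as (x1, y1, x2, y2) pixel corners; the boxes, scores, and thresholds are made up, and production code would more likely use a library implementation such as OpenCV’s `cv2.dnn.NMSBoxes` or torchvision’s `torchvision.ops.nms`:

```python
import numpy as np

def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    x1, y1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    x2, y2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, x2 - x1) * max(0, y2 - y1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def nms(boxes, scores, iou_threshold=0.5, score_threshold=0.3):
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it too much, repeat."""
    scores = np.asarray(scores)
    # Confidence threshold first, then sort the survivors by score (highest first)
    order = [int(i) for i in np.argsort(scores)[::-1] if scores[i] >= score_threshold]
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep

boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140), (0, 0, 5, 5)]
scores = [0.9, 0.8, 0.75, 0.2]
print(round(iou(boxes[0], boxes[1]), 3))
print(nms(boxes, scores))
```

Box 1 overlaps box 0 heavily and is suppressed, box 2 is a separate detection and survives, and box 3 is dropped by the confidence threshold.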

- Learn the basic LSTM, Bi-LSTM, and stacked LSTM models, and why their gates are so important

  - The gates decide what to forget, what to remember, and what to output.

- Text sequencing, sequence padding, and embeddings

- Do projects like next-word prediction, time-series prediction, text summarization, etc.

- Explore BERT, the Transformer architecture, encoding, decoding, positional encoding, self-attention, and the context mechanism
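The LSTM gates can be written out directly. Here is a sketch of one LSTM time step in NumPy with random weights; the gate ordering inside the stacked weight matrix is an arbitrary choice for this sketch (Keras uses its own internal ordering):

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_cell_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step. W has shape (4*hidden, input+hidden); b has shape (4*hidden,)."""
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([x_t, h_prev]) + b
    f = sigmoid(z[:hidden])                 # forget gate: how much old cell state to keep
    i = sigmoid(z[hidden:2 * hidden])       # input gate: how much new information to write
    g = np.tanh(z[2 * hidden:3 * hidden])   # candidate values to write
    o = sigmoid(z[3 * hidden:])             # output gate: how much of the cell to expose
    c_t = f * c_prev + i * g                # updated long-term memory (cell state)
    h_t = o * np.tanh(c_t)                  # new hidden state, also the step's output
    return h_t, c_t

rng = np.random.default_rng(0)
input_dim, hidden = 3, 4
W = rng.normal(0, 0.1, size=(4 * hidden, input_dim + hidden))
b = np.zeros(4 * hidden)
h, c = np.zeros(hidden), np.zeros(hidden)

sequence = rng.normal(size=(5, input_dim))  # 5 time steps of made-up inputs
for x_t in sequence:
    h, c = lstm_cell_step(x_t, h, c, W, b)
print(h.shape, c.shape)
```

If the forget gate saturates near 1 and the input gate near 0, the cell state passes through unchanged, which is how an LSTM can carry information across many time steps.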

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import LSTM, Dense, Embedding, SpatialDropout1D
from tensorflow.keras.preprocessing.text import Tokenizer
from tensorflow.keras.preprocessing.sequence import pad_sequences
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score, confusion_matrix, classification_report
import matplotlib.pyplot as plt

# Sample text data (positive and negative reviews)
texts = [
    "I love this product!", "This is a great product", "Worst purchase ever", "Not satisfied with the quality",
    "Totally amazing, I will buy again", "Very disappointing, waste of money", "Fantastic quality", "Horrible experience",
    "Will recommend to everyone", "Terrible product, not worth it"
]

# Corresponding labels (1: Positive, 0: Negative)
labels = [1, 1, 0, 0, 1, 0, 1, 0, 1, 0]

# Split the dataset into training and test sets
X_train, X_test, y_train, y_test = train_test_split(texts, labels, test_size=0.3, random_state=42)

# Preprocessing parameters
MAX_NB_WORDS = 50          # vocabulary size kept by the tokenizer
MAX_SEQUENCE_LENGTH = 10   # every sequence is padded/truncated to this length
EMBEDDING_DIM = 100        # size of each word's embedding vector

# Tokenizer for the text data (fit on the training text only)
tokenizer = Tokenizer(num_words=MAX_NB_WORDS)
tokenizer.fit_on_texts(X_train)

# Convert text to sequences of integers
X_train_seq = tokenizer.texts_to_sequences(X_train)
X_test_seq = tokenizer.texts_to_sequences(X_test)

# Pad sequences to ensure uniform length
X_train_pad = pad_sequences(X_train_seq, maxlen=MAX_SEQUENCE_LENGTH)
X_test_pad = pad_sequences(X_test_seq, maxlen=MAX_SEQUENCE_LENGTH)
print(f"Training data shape: {X_train_pad.shape}")
print(f"Test data shape: {X_test_pad.shape}")

# Build the LSTM model
model = Sequential()
model.add(Embedding(MAX_NB_WORDS, EMBEDDING_DIM, input_length=MAX_SEQUENCE_LENGTH))
model.add(SpatialDropout1D(0.2))  # Dropout for regularization
model.add(LSTM(100, dropout=0.2, recurrent_dropout=0.2))  # LSTM layer
model.add(Dense(1, activation='sigmoid'))  # Output layer for binary classification

# Compile the model
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

# Model summary
model.summary()

# Train the model
history = model.fit(X_train_pad, np.array(y_train), epochs=5, batch_size=2,
                    validation_data=(X_test_pad, np.array(y_test)))

# Plot training & validation loss
plt.plot(history.history['loss'], label='train_loss')
plt.plot(history.history['val_loss'], label='val_loss')
plt.legend()
plt.title('Training and Validation Loss')
plt.show()
```

- Explore vector databases, BERT, the Transformer architecture, encoding, decoding, positional encoding, self-attention, and the context mechanism

- Explore Hugging Face and open-source pretrained models like Llama 2 and Llama 3, and learn to use them with the Hugging Face `transformers` library to generate code, text, etc.

- Learn to fine-tune these pretrained models to make them more specific to your domain, and tune hyperparameters such as epochs, learning rate, and optimizers like Adam, Adagrad, etc.
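Self-attention and positional encoding are both a few lines of NumPy. The sketch below follows the scaled dot-product formulation and the sinusoidal encoding from the original Transformer paper, with random toy embeddings and weights standing in for learned ones:

```python
import numpy as np

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))  # subtract the max for stability
    return e / e.sum(axis=axis, keepdims=True)

def positional_encoding(seq_len, d_model):
    # Sinusoidal encoding: sin on even dimensions, cos on odd dimensions
    pos = np.arange(seq_len)[:, None]
    i = np.arange(d_model)[None, :]
    angles = pos / np.power(10000, (2 * (i // 2)) / d_model)
    return np.where(i % 2 == 0, np.sin(angles), np.cos(angles))

def self_attention(X, W_q, W_k, W_v):
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])  # how strongly each token attends to every token
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
seq_len, d_model = 4, 8
# Toy token embeddings plus position information
X = rng.normal(size=(seq_len, d_model)) + positional_encoding(seq_len, d_model)
W_q, W_k, W_v = (rng.normal(scale=0.3, size=(d_model, d_model)) for _ in range(3))

output, weights = self_attention(X, W_q, W_k, W_v)
print(output.shape)
print(weights.round(2))
```

Each output row is a weighted mix of all value vectors, which is how every token gets context from the whole sequence in one step, unlike an LSTM that reads tokens one at a time.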

- Introduction to LangChain: Understanding LangChain and its role in LLM-based applications.

- What Is RAG (Retrieval-Augmented Generation)? How RAG enhances LLMs with external knowledge.

- Setting Up LangChain in Python: Installing and configuring LangChain for development.

- Connecting to LLMs (OpenAI, Hugging Face, Local Models): Using different language models with LangChain.

- Building a Basic RAG Pipeline: Retrieving relevant data and generating responses with LLMs.

- Data Sources for RAG: Integrating PDFs, databases, APIs, and vector stores.

- Using Vector Databases (FAISS, ChromaDB, Pinecone): Storing and retrieving data efficiently for RAG.

- Improving Response Quality with Prompt Engineering: Optimizing query understanding and response generation.

- RAG-powered Chatbot or Q&A System: Creating an AI assistant that retrieves real-time data.

- RAG-powered Medical Assistant: Creating an AI assistant that retrieves and analyzes medical data to generate responses.

- Fine-tuning RAG for Specific Domains: Adapting the RAG framework for finance, healthcare, or education.

- Optimizing Performance (Caching, Indexing, Speed Improvements): Making RAG applications scalable and efficient.

- Quantization & Efficient Fine-tuning: Quantization, LoRA, and QLoRA (LoRA on a quantized model) to make models run efficiently on low-end PCs and GPUs.

- Deploying RAG Applications in Production: Hosting on cloud platforms and integrating with web apps.
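The core RAG idea (retrieve relevant text, then put it into the prompt) can be sketched without any framework. In this sketch, TF-IDF from scikit-learn stands in for an embedding model plus a vector database, the documents are made up, `retrieve` and `build_prompt` are hypothetical helpers, and the actual LLM call is left out:

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity

# Made-up knowledge base; in a real app these chunks would come from PDFs, databases, or APIs
chunks = [
    "Our store is open Monday to Friday from 9 am to 5 pm.",
    "Refunds are processed within 7 business days.",
    "Standard shipping takes 3 to 5 days.",
]

# TF-IDF stands in for an embedding model + vector database (FAISS, ChromaDB, Pinecone, ...)
vectorizer = TfidfVectorizer(stop_words="english")
chunk_vectors = vectorizer.fit_transform(chunks)

def retrieve(question, k=2):
    """Hypothetical helper: return the k chunks most similar to the question."""
    q_vec = vectorizer.transform([question])
    sims = cosine_similarity(q_vec, chunk_vectors).ravel()
    top = sims.argsort()[::-1][:k]
    return [chunks[i] for i in top]

def build_prompt(question, context_chunks):
    """Hypothetical helper: augment the user question with the retrieved context."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return (
        "Answer using only the context below. If the answer is not there, say you don't know.\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )

question = "When is the store open?"
prompt = build_prompt(question, retrieve(question, k=1))
print(prompt)
# The generation step would send `prompt` to an LLM (OpenAI, a Hugging Face model, a local model, ...)
```

Frameworks like LangChain wrap these same two steps (retrieval and prompt assembly) together with document loaders, vector-store integrations, and the LLM call.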

```python
import re

import torch
from transformers import T5ForConditionalGeneration, T5Tokenizer

# Load model and tokenizer
model = T5ForConditionalGeneration.from_pretrained("./saved_summary_model")
tokenizer = T5Tokenizer.from_pretrained("./saved_summary_model")
device = "cuda" if torch.cuda.is_available() else "cpu"
model = model.to(device)

# Clean text function
def clean_text_to_summarize(text):
    text = re.sub(r'[\r\n]+', ' ', text)  # Replace carriage returns and line breaks with a space
    text = re.sub(r'\s+', ' ', text)  # Collapse extra whitespace
    text = re.sub(r'<.*?>', '', text)  # Remove any XML/HTML tags
    text = text.strip().lower()  # Strip and convert to lower case
    return text

# Summarization function
def summarize_dialogue(dialogue):
    dialogue = clean_text_to_summarize(dialogue)
    inputs = tokenizer(dialogue, return_tensors="pt", truncation=True, padding="max_length", max_length=512)
    inputs = {key: value.to(device) for key, value in inputs.items()}

    # Generate the complete summary
    outputs = model.generate(
        inputs["input_ids"],
        attention_mask=inputs["attention_mask"],  # ignore the padding tokens added above
        max_length=150,
        num_beams=4,
        early_stopping=True
    )
    summary = tokenizer.decode(outputs[0], skip_special_tokens=True)
    return summary
```