We’ve all seen the hype around AI models like ChatGPT, DALL-E 2, and others. They can generate text, create images, and even write code with impressive skill. But behind the scenes, there’s a crucial technique that helps make many of these systems not just powerful, but also helpful, harmless, and aligned with what people actually want. That technique is Reinforcement Learning from Human Feedback, or RLHF.

In simple terms, RLHF is like teaching a dog tricks, but instead of treats, you’re using human preferences to guide the AI’s learning. Traditionally, AI models are trained on massive datasets and optimized against a fixed, automatically computed objective (for a language model, predicting the next word). However, this approach can lead to several issues:

Misalignment: The AI might excel at a task but produce outputs that are nonsensical, irrelevant, or even offensive.

Lack of Nuance: AI can struggle with subjective tasks where there’s no single “correct” answer, such as writing creative stories or engaging in nuanced conversations.

Unintended Consequences: An AI optimized for a specific goal might find loopholes or exploit edge cases, leading to undesirable outcomes.

RLHF addresses these issues by incorporating direct human feedback into the training process. It allows AI to learn not just what’s “technically correct,” but what is actually useful and desirable from a human perspective.
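A toy illustration of the unintended-consequences problem: when a hand-written objective stands in for what people actually want, optimizing it can reward the wrong thing. In this sketch the proxy objective is word count (an assumption chosen for clarity), and `true_quality` is a made-up score standing in for human judgment. Picking the highest-scoring candidate under the proxy selects a padded, wrong answer.

```python
# Candidate answers to "What is the capital of France?"
# true_quality is a made-up score standing in for a human judgment.
candidates = [
    {"text": "Paris.", "true_quality": 0.9},
    {"text": "The capital of France is Paris.", "true_quality": 1.0},
    {"text": "Great question! " * 20 + "It might be Lyon.", "true_quality": 0.0},
]


def proxy_reward(response):
    # Naive hand-designed objective: longer answers "look" more thorough.
    return len(response["text"].split())


def human_preference(response):
    # Stand-in for the signal RLHF tries to learn from people.
    return response["true_quality"]


def best_by(score_fn, items):
    # The "optimizer" simply picks whatever maximizes the given score.
    return max(items, key=score_fn)


picked = best_by(proxy_reward, candidates)
print(proxy_reward(picked), picked["true_quality"])
print(best_by(human_preference, candidates)["text"])
```

The proxy is trivially gamed by repetition; RLHF replaces it with a reward learned from people’s actual comparisons.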

The RLHF process usually looks something like this:

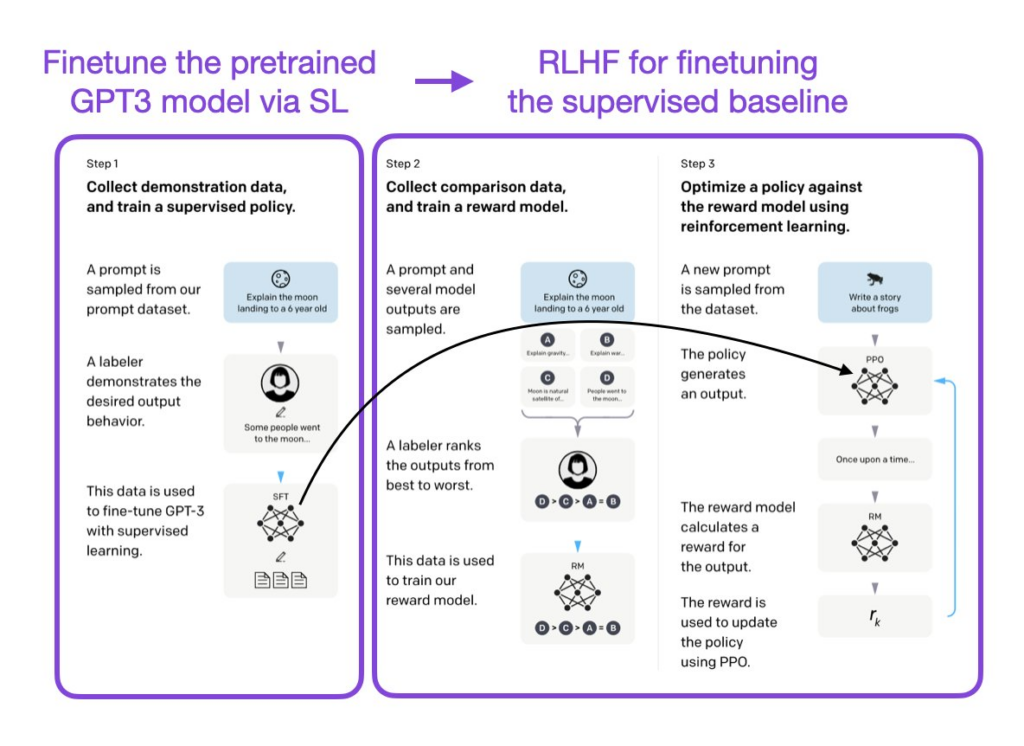

Pre-Training: Start with a large language model (LLM) that has been pre-trained on a massive dataset of text and code. This gives the model a broad understanding of language and the world.

Supervised Fine-Tuning (SFT): Further refine the model on a smaller, more curated dataset of prompts and desired responses. This helps the model learn to follow instructions and generate outputs in a specific format.

Reward Model Training: This is where the “human feedback” part comes in. Human evaluators are shown different outputs from the model and asked to rank them based on various criteria (helpfulness, relevance, safety, etc.). This data is then used to train a “reward model” that can predict how a human would rate a given output.

Reinforcement Learning: Finally, the fine-tuned LLM from the SFT step is trained further with reinforcement learning (typically an algorithm such as PPO), with the reward model providing the “reward” signal. The model learns to generate outputs that maximize the predicted reward, which pushes its behavior toward what human evaluators preferred.
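The reward-model and reinforcement-learning steps above can be sketched in plain Python. This is a toy under stated assumptions, not how a production LLM pipeline is built: each response is reduced to a hypothetical two-number feature vector (helpfulness, toxicity), the reward model is linear, and ranked pairs are fit with the pairwise Bradley-Terry loss, -log sigmoid(r(chosen) - r(rejected)), a common choice for reward models. `shaped_reward` shows the kind of per-sample reward often used in the RL step (OpenAI’s InstructGPT work, for example, combined PPO with a KL penalty toward the SFT model): the reward-model score minus `beta` times a single-sample estimate of how far the policy has drifted from the reference model. All function names and the `beta` value are illustrative.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def reward(w, x):
    # Hypothetical linear reward model: r(x) = w . x
    return sum(wi * xi for wi, xi in zip(w, x))


def train_reward_model(pairs, dim, lr=0.1, epochs=200, seed=0):
    """Fit w so that reward(chosen) > reward(rejected) for each ranked pair.

    Minimizes the pairwise (Bradley-Terry) loss
        L = -log sigmoid(r(chosen) - r(rejected))
    with plain stochastic gradient descent.
    """
    rng = random.Random(seed)
    pairs = list(pairs)
    w = [0.0] * dim
    for _ in range(epochs):
        rng.shuffle(pairs)
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            # dL/dmargin = -sigmoid(-margin); dmargin/dw = chosen - rejected,
            # so a descent step adds lr * sigmoid(-margin) * (chosen - rejected).
            scale = sigmoid(-margin)
            for i in range(dim):
                w[i] += lr * scale * (chosen[i] - rejected[i])
    return w


def shaped_reward(rm_score, logp_policy, logp_ref, beta=0.1):
    """Reward used in the RL step for one sampled response.

    logp_policy - logp_ref is a single-sample estimate of the KL divergence
    between the policy being trained and the frozen reference (SFT) model.
    """
    return rm_score - beta * (logp_policy - logp_ref)


# Usage: features are [helpfulness, toxicity] (made-up numbers).
preference_pairs = [
    ([0.9, 0.1], [0.4, 0.1]),  # more helpful answer preferred
    ([0.8, 0.0], [0.8, 0.9]),  # less toxic answer preferred
    ([0.7, 0.2], [0.3, 0.6]),  # more helpful and less toxic preferred
]
w = train_reward_model(preference_pairs, dim=2)
print(w)  # helpfulness weight ends up positive, toxicity weight negative
print(shaped_reward(rm_score=1.0, logp_policy=-2.0, logp_ref=-2.5))
```

The KL term is the design choice worth noticing: without it, the policy can drift toward odd outputs that happen to score well under an imperfect reward model.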

RLHF enhances AI safety by aligning AI behavior with human values and ethics. It steers AI systems away from unsafe, unethical, or undesirable behaviors that may not have been anticipated when a reward function was designed by hand. Human oversight and feedback help keep AI agents within safe boundaries and make their decisions more ethically sound and socially responsible.

Mechanisms of RLHF in Enhancing AI Safety

RLHF improves AI safety through several key mechanisms:

Alignment with Human Values: RLHF aligns AI behavior with complex human values and ethics. Traditional reinforcement learning relies on predefined reward functions that might not fully capture the spectrum of human values or nuanced decisions. RLHF allows AI systems to learn from human feedback, helping their actions align with what is considered acceptable, ethical, or desirable.

Mitigating Harmful Outputs: RLHF trains models to decline harmful or dangerous requests. It can also reduce bias in model outputs, guiding models to be harmless, honest, and helpful.

Enhancing Model Robustness: Human feedback in RLHF helps models avoid harmful or undesirable actions, improving their safety and robustness. This is achieved through repeated rounds of feedback and adjustment, which let the model adapt to changing human preferences or requirements.

Reducing Bias and Hallucinations: RLHF can reduce bias in large language models by incorporating human input during training so that outputs better match human values and preferences. It can also help reduce hallucinations, where AI models present inaccurate or misleading information, because evaluators rate biased or inaccurate outputs poorly and the model is trained away from them.

Addressing Ethical Considerations: RLHF helps align the AI’s learning process with human values and ethical standards. By having evaluators judge AI outputs against established ethical guidelines, RLHF helps create AI systems that are robust and well aligned with human ethical standards.

Providing Nuanced Understanding: RLHF enables AI systems to perform tasks that require a nuanced understanding of human preferences, ethics, or social norms, particularly in situations where defining the right or ethical action is complex and not easily quantifiable.

Potential Future Developments in RLHF Technology

Future developments in RLHF technology aim to improve its effectiveness, scalability, and safety. Some potential directions include:

Automated Feedback Systems: Combining RLHF with synthetic feedback from smaller models could reduce dependence on human annotators.

Improved Integration of Human Feedback: Developing more sophisticated methods for integrating human feedback into the learning process, such as real-time feedback mechanisms and better ways to interpret subjective human insights.

Scalability and Efficiency: Advances in RLHF may include automated ways to synthesize and generalize human feedback, reducing the dependence on human-generated data.

Enhanced Reward Modeling: Developing more advanced reward models that can accurately capture and predict human preferences while handling the complexity and often contradictory nature of human feedback.

Generalization and Transfer Learning: Improving the ability of RLHF-trained models to generalize across different tasks and domains, leveraging feedback from one domain to improve performance in another.

Cross-Modal Learning: Developing RLHF systems that can learn from cross-modal feedback, such as combining textual, visual, and auditory feedback. This could open new avenues for more holistic and context-aware AI systems.

Explainability and Transparency: Increasing the explainability and transparency of RLHF systems, so that users can understand how human feedback influences the AI’s learning process and decisions.

Policy and Regulation: Evolving policy and regulatory frameworks to address the unique challenges RLHF presents, including privacy, data security, and accountability in systems trained with human feedback.

Adaptive Performance Management Systems: RLHF could enable performance evaluation tools that go beyond traditional metrics.

Learning and Generalizing Feedback: Developing models capable of learning from feedback and generalizing it across tasks and domains.
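The often contradictory feedback mentioned under enhanced reward modeling can be made concrete. One simple option, sketched here as an assumption rather than a description of any particular system, is to keep annotator disagreement as a soft label `p` (the fraction of annotators who preferred response A) and train with a Bradley-Terry cross-entropy against `p` instead of a hard 0/1 label. That loss is minimized when sigmoid(r(A) - r(B)) equals `p`, so a 3-to-1 split asks the reward model for a modest gap of logit(0.75) = ln 3 rather than an arbitrarily large one. The function names are illustrative.

```python
import math


def soft_preference(votes):
    """Fraction of annotators who preferred response A (True) over B (False)."""
    if not votes:
        raise ValueError("need at least one vote")
    return sum(votes) / len(votes)


def log_sigmoid(z):
    # Numerically stable log(sigmoid(z)).
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def soft_bt_loss(margin, p):
    """Bradley-Terry cross-entropy with a soft target.

    margin = r(A) - r(B); p = aggregated probability that A is preferred.
    """
    return -(p * log_sigmoid(margin) + (1 - p) * log_sigmoid(-margin))


def target_margin(p):
    """Reward gap that minimizes soft_bt_loss, i.e. logit(p). Needs 0 < p < 1."""
    if not 0.0 < p < 1.0:
        raise ValueError("unanimous votes have no finite optimal margin")
    return math.log(p / (1.0 - p))


# Usage: four hypothetical annotators, three of whom prefer A.
p = soft_preference([True, True, True, False])
m = target_margin(p)
print(p, m, soft_bt_loss(m, p))
```

Compared with a majority vote, the soft label tells the reward model how confident people were, not just which side won.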

RLHF in Action: Real-World Examples

RLHF is already powering some of the most impressive AI systems we use today:

ChatGPT: OpenAI used RLHF extensively to train ChatGPT, resulting in a chatbot that is more conversational, more informative, and less likely to generate harmful content.

InstructGPT: Also from OpenAI, InstructGPT was specifically designed to follow instructions better than earlier models, thanks to RLHF.

Claude: Anthropic’s Claude is another example of a model that uses RLHF to understand complex human instructions and generate helpful and harmless responses.

The Challenges and Future of RLHF

While RLHF is a powerful technique, it’s not without its challenges:

Bias: Human feedback can be subjective and biased, and those biases can inadvertently be incorporated into the AI model.

Cost: Gathering high-quality human feedback can be expensive and time-consuming.

Scalability: Scaling RLHF to even larger models and more complex tasks remains a challenge.
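One common way to gauge how subjective a feedback task is, and therefore how much room there is for annotator bias, is to have two annotators label the same comparisons and measure how much they agree beyond chance. Cohen’s kappa is a standard statistic for this. The sketch below uses made-up labels (“A” or “B” for the preferred response); values near 1 indicate strong agreement, while values near 0 suggest the task or the labeling guidelines leave a lot to personal judgment.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: probability that both pick the same label independently,
    # given how often each annotator uses each label.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label throughout; treat as full agreement.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Usage: which of two responses each (hypothetical) annotator preferred on 8 prompts.
annotator_1 = ["A", "A", "B", "B", "A", "B", "A", "A"]
annotator_2 = ["A", "B", "B", "B", "A", "A", "A", "A"]
print(cohens_kappa(annotator_1, annotator_2))
```

Here the annotators agree on 6 of 8 prompts (75%), but because both mostly chose “A”, chance alone would produce about 53% agreement, so kappa is only about 0.47.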

Despite these challenges, RLHF is a crucial step toward creating AI systems that are not just intelligent, but also aligned with human values. As AI continues to evolve, RLHF and related techniques will likely play an increasingly important role in shaping its future.
