“If I say ‘I love coffee’ in Tamil, English, or Spanish, shouldn’t machines understand that I meant the same thing?”

Welcome to the world of multilingual sentence embeddings, where language boundaries dissolve into dense vectors of pure meaning.

Let’s start from scratch.

In traditional NLP systems, most models are monolingual: trained on a single language.

Suppose we’ve got:

English: “I love coffee”

Spanish: “Me encanta el café”

Tamil: “எனக்கு காபி பிடிக்கும்”

To a machine, these are just different byte sequences.

But as humans, we understand that they carry the same idea.

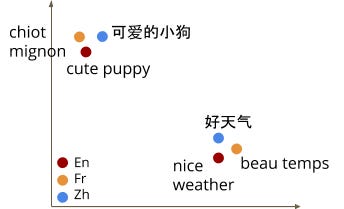

To bridge this gap, we want a system that projects all these sentences into a shared semantic vector space, where geometric proximity implies similarity of meaning.

That is, the cosine similarity between their vectors should be high (i.e., the angle between them should be close to 0°).
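For reference, this is the standard definition of cosine similarity between two $d$-dimensional embedding vectors $\mathbf{u}$ and $\mathbf{v}$. It ranges from $-1$ to $1$, and a value of $1$ means the vectors point in the same direction (an angle of 0°):

$$
\cos(\theta) = \frac{\mathbf{u} \cdot \mathbf{v}}{\lVert \mathbf{u} \rVert \, \lVert \mathbf{v} \rVert}
= \frac{\sum_{i=1}^{d} u_i v_i}{\sqrt{\sum_{i=1}^{d} u_i^2}\,\sqrt{\sum_{i=1}^{d} v_i^2}}
$$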

So we ask:

👉 Can we build a representation where “I love coffee” and “Me encanta el café” lie close together, regardless of language?

That’s the goal of multilingual sentence embeddings:

Map sentences from different languages into a shared, universal semantic space.

Let’s build up from the basics.

Think about a sentence like:

“I remember it all too well”

How can a machine understand this? Not just memorize the words, but understand what it means?

We turn this sentence into a vector, a list of numbers, like:

[0.2, -0.7, 0.3, 0.9, ..., 0.1]

This vector, the embedding, captures the semantics (meaning) of the entire sentence.

Two similar sentences should have embeddings that are close in vector space, even when the wording differs:
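How a model turns a sequence of words into one vector varies. One common approach is mean pooling, which averages the per-token vectors the model produces. The sketch below illustrates only that averaging step. It assumes made-up 4-dimensional token vectors in a hypothetical `TOKEN_VECTORS` table, and the `mean_pool` helper is likewise illustrative rather than any particular library's API.

```python
from typing import Dict, List

Vector = List[float]

# Hypothetical 4-dimensional token vectors, invented for illustration.
# A real encoder produces contextual vectors with hundreds of dimensions.
TOKEN_VECTORS: Dict[str, Vector] = {
    "i":        [0.1, -0.3, 0.2, 0.5],
    "remember": [0.4, -0.9, 0.1, 0.8],
    "it":       [0.0, 0.1, 0.3, 0.2],
    "all":      [0.2, 0.0, -0.1, 0.4],
    "too":      [0.1, 0.2, 0.0, 0.3],
    "well":     [0.3, -0.4, 0.5, 0.6],
}


def mean_pool(tokens: List[str], vectors: Dict[str, Vector]) -> Vector:
    """Average the token vectors element-wise into one sentence vector."""
    if not tokens:
        raise ValueError("need at least one token")
    dim = len(vectors[tokens[0]])
    total = [0.0] * dim
    for token in tokens:
        for i, value in enumerate(vectors[token]):
            total[i] += value
    return [value / len(tokens) for value in total]


embedding = mean_pool(["i", "remember", "it", "all", "too", "well"], TOKEN_VECTORS)
print(len(embedding))  # 4
```

On its own, averaging ignores word order. Transformer-based encoders compensate because each token vector is already computed in the context of the whole sentence.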

- “I remember it all too well.”

- “The memories are still vivid.”

We can then compare, search, or cluster them using simple math (like cosine similarity).

Here’s the hard part:
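As a minimal illustration of the "search" case, the sketch below ranks a small corpus by cosine similarity to a query vector. The 3-dimensional embeddings are invented for the example, and `search` is a hypothetical helper. In practice the vectors would come from a sentence encoder.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine_similarity(u: Vector, v: Vector) -> float:
    """cos(theta) = (u . v) / (|u| * |v|); assumes non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def search(query: Vector, corpus: Dict[str, Vector], top_k: int = 2) -> List[Tuple[str, float]]:
    """Rank corpus sentences by cosine similarity to the query, highest first."""
    scored = [(text, cosine_similarity(query, vec)) for text, vec in corpus.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical 3-dimensional embeddings, invented for illustration only.
corpus = {
    "I remember it all too well.": [0.9, 0.1, 0.2],
    "The memories are still vivid.": [0.8, 0.2, 0.3],
    "The train leaves at noon.": [0.1, 0.9, -0.4],
}
query = [0.85, 0.15, 0.25]  # a made-up vector for a query about remembering

for text, score in search(query, corpus):
    print(f"{score:.3f}  {text}")
```

Both "memory" sentences score close to 1 and come back first, even though they share almost no words. The unrelated sentence scores far lower.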

Different languages use different words, different grammar, and even different scripts.

So the challenge becomes:

Can we map sentences from any language into the same embedding space such that similar meanings are close together?

It’s like translating not from language to language, but from language to meaning.
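One simple way to check whether a shared space behaves this way is translation retrieval over a parallel corpus. For each English sentence, find the nearest Spanish sentence by cosine similarity and count how often it is the true translation. The sketch below uses invented 3-dimensional vectors rather than a real model. The `translation_retrieval_accuracy` helper is hypothetical, and the two extra Spanish sentences are illustrative translations.

```python
import math
from typing import List

Vector = List[float]


def cosine_similarity(u: Vector, v: Vector) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def translation_retrieval_accuracy(src: List[Vector], tgt: List[Vector]) -> float:
    """Fraction of source sentences whose nearest target (by cosine similarity)
    is the target at the same index, i.e. its translation in a parallel corpus."""
    hits = 0
    for i, s in enumerate(src):
        scores = [cosine_similarity(s, t) for t in tgt]
        best = max(range(len(tgt)), key=lambda j: scores[j])
        if best == i:
            hits += 1
    return hits / len(src)


# Hypothetical embeddings for a tiny English/Spanish parallel corpus:
# row i of `english` and row i of `spanish` are translations of each other.
english = [
    [0.9, 0.1, 0.1],   # "I love coffee"
    [0.1, 0.9, 0.2],   # "The train leaves at noon"
    [0.2, 0.1, 0.9],   # "The memories are still vivid"
]
spanish = [
    [0.85, 0.15, 0.05],  # "Me encanta el café"
    [0.15, 0.8, 0.25],   # "El tren sale al mediodía"
    [0.1, 0.2, 0.95],    # "Los recuerdos siguen vívidos"
]

print(translation_retrieval_accuracy(english, spanish))  # 1.0
```

If the space were not aligned across languages, nearest neighbours would tend to land on same-language or unrelated sentences, and this accuracy would drop.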

There are three core strategies to understand.