One of the most interesting aspects of agentic AI systems is the difference between building a single-agent and a multi-agent workflow, or perhaps the difference between working with more flexible and more controlled systems.

This article will help you understand what agentic AI is, how to build simple workflows with LangGraph, and the differences in results you can achieve with the different architectures. I’ll demonstrate this by building a tech news agent with various data sources.

As for the use case, I’m a bit obsessed with getting automated news updates, based on my preferences, without drowning in information overload every day.

Summarizing and gathering research is one of those areas where agentic AI can really shine.

So follow along while I keep trying to make AI do the grunt work for me, and we’ll see how single-agent compares to multi-agent setups.

I always keep my work jargon-free, so if you’re new to agentic AI, this piece should help you understand what it is and how to work with it. If you’re not new to it, you can scroll past some of the sections.

Agentic AI (& LLMs)

Agentic AI is about programming with natural language. Instead of using rigid, explicit code, you’re instructing large language models (LLMs) to route data and perform actions through plain language in order to automate tasks.

Using natural language in workflows isn’t new; we’ve used NLP for years to extract and process data. What’s new is the amount of freedom we can now give language models, allowing them to handle ambiguity and make decisions dynamically.

But just because LLMs can understand nuanced language doesn’t mean they inherently validate facts or maintain data integrity. I see them primarily as a communication layer that sits on top of structured systems and existing data sources.

I usually explain it like this to non-technical people: they work a bit like we do. If we don’t have access to clean, structured data, we start making things up. Same with LLMs. They generate responses based on patterns, not fact-checking.

So just like us, they do their best with what they’ve got. If we want better output, we need to build systems that give them reliable data to work with. So, with agentic systems we integrate ways for them to interact with different data sources, tools, and systems.

Now, just because we can use these larger models in more places doesn’t mean we should. LLMs shine when interpreting nuanced natural language: think customer service, research, or human-in-the-loop collaboration.

But for structured tasks, like extracting numbers and sending them somewhere, you should use traditional approaches. LLMs aren’t inherently better at math than a calculator. So, instead of having an LLM do calculations, you give an LLM access to a calculator.

So whenever you can build parts of a workflow programmatically, that will still be the better option.

Still, LLMs are great at adapting to messy real-world input and interpreting vague instructions, so combining the two can be a great way to build systems.
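
To make the calculator point concrete, here is a minimal sketch of what such a tool could look like in plain Python. The function name `calculator` and the set of supported operators are my own assumptions for illustration; it is not taken from the workflows in this article.

```python
import ast
import operator

# Arithmetic operators the tool is allowed to evaluate (an assumed, small set).
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def calculator(expression: str) -> float:
    """Evaluate a plain arithmetic expression without using eval()."""

    def _eval(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"Unsupported expression: {expression!r}")

    return _eval(ast.parse(expression, mode="eval").body)


# The LLM only decides that a calculation is needed and passes the expression
# along; the arithmetic itself stays deterministic.
print(calculator("1299 * 0.85 + 40"))
```

The split of responsibilities is the point: the model interprets the messy request, and ordinary code does the part that has a single right answer.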

Agentic Frameworks

I know a lot of people jump straight to CrewAI or AutoGen here, but I’d recommend checking out LangGraph, Agno, Mastra, and Smolagents. Based on my research, these frameworks have received some of the strongest feedback so far.

LangGraph is more technical and can be complex, but it’s the preferred choice for many developers. Agno is easier to get started with but less technical. Mastra is a solid option for JavaScript developers, and Smolagents shows a lot of promise as a lightweight alternative.

In this case, I’ve gone with LangGraph, which is built on top of LangChain, not because it’s my favorite, but because it’s becoming a go-to framework that more devs are adopting.

So, it’s worth being familiar with.

It has a lot of abstractions though, and you may want to rebuild some of them just to be able to control and understand it better.

I can’t go into detail on LangGraph here, so I built a quick guide for those who need a review.

As for this use case, you’ll be able to run the workflow without coding anything, but if you’re here to learn you may also want to understand how it works.

Choosing an LLM

Now, you might jump into this and wonder why I’m choosing certain LLMs as the base for the agents.

You can’t just pick any model, especially when working within a framework. They need to be compatible. Key things to look for are tool calling support and the ability to generate structured outputs.
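
To show why structured output matters, here is a small sketch of the kind of check a framework has to do on a model’s reply before it can run a tool. The tool name `trending_keywords`, its arguments, and the JSON shape are hypothetical; real frameworks define their own formats.

```python
import json
from dataclasses import dataclass


@dataclass
class ToolCall:
    name: str
    arguments: dict


# Hypothetical tool schema: tool name -> required argument names.
TOOL_SCHEMAS = {"trending_keywords": {"period", "category"}}


def parse_tool_call(raw: str) -> ToolCall:
    """Parse a model's JSON reply and check it against the tool schema."""
    data = json.loads(raw)
    name, args = data["name"], data.get("arguments", {})
    if name not in TOOL_SCHEMAS:
        raise ValueError(f"Unknown tool: {name}")
    missing = TOOL_SCHEMAS[name] - set(args)
    if missing:
        raise ValueError(f"Missing arguments: {sorted(missing)}")
    return ToolCall(name=name, arguments=args)


reply = '{"name": "trending_keywords", "arguments": {"period": "weekly", "category": "companies"}}'
print(parse_tool_call(reply))
```

A model that cannot reliably produce a reply in this kind of shape will fail at this step, which is why compatibility is worth checking before you pick one.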

I’d recommend checking HuggingFace’s Agent Leaderboard to see which models actually perform well in real-world agentic systems.

For this workflow, you should be fine using models from Anthropic, OpenAI, or Google. If you’re considering another one, just make sure it’s compatible with LangChain.

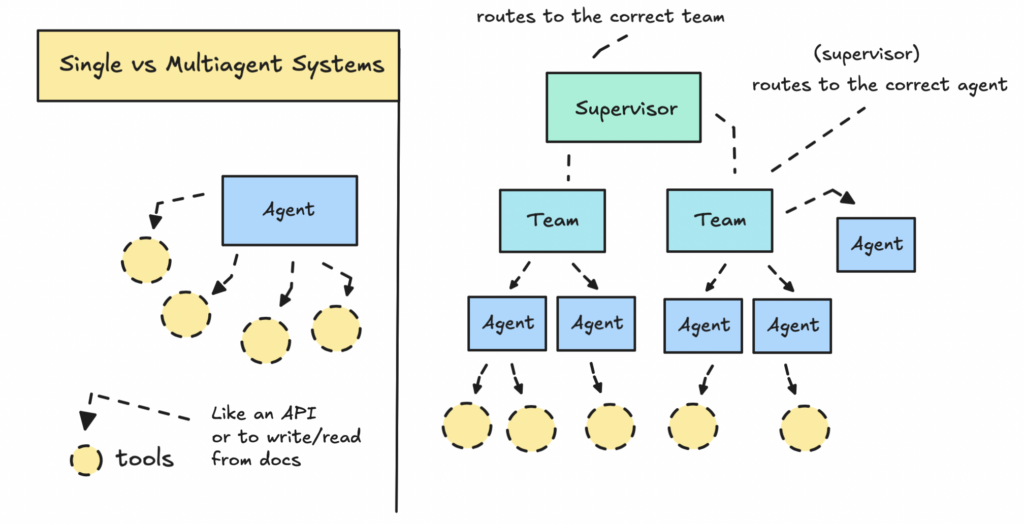

Single vs. Multi-Agent Systems

If you build a system around one LLM and give it a bunch of tools you want it to use, you’re working with a single-agent workflow. It’s fast, and if you’re new to agentic AI, it might seem like the model should just figure things out on its own.

But the thing is, these workflows are just another form of system design. Like any software project, you need to plan the process, define the steps, structure the logic, and decide how each part should behave.

This is where multi-agent workflows come in.

Not all of them are hierarchical or linear though; some are collaborative. Collaborative workflows also fall into the more flexible approach that I find harder to work with, at least with the capabilities that exist today.

However, collaborative workflows do also break different functions apart into their own modules.

Single-agent and collaborative workflows are great to start with when you’re just playing around, but they don’t always give you the precision needed for actual tasks.

For the workflow I’ll build here, I already know how the APIs should be used, so it’s my job to guide the system to use them the right way.

We’ll compare a single-agent setup with a hierarchical multi-agent system, where a lead agent delegates tasks across a small team, so you can see how they behave in practice.

Building a Single Agent Workflow

With a single thread, i.e., one agent, we give an LLM access to several tools. It’s up to the agent to decide which tool to use and when, based on the user’s question.

The challenge with a single agent is control.

No matter how detailed the system prompt is, the model may not follow our requests (this can happen in more controlled environments too). If we give it too many tools or options, there’s a good chance it won’t use all of them or even use the right ones.

To illustrate this, we’ll build a tech news agent that has access to several API endpoints with custom data, with several options as parameters in the tools. It’s up to the agent to decide how many to use and how to set up the final summary.
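
As a rough picture of that loop, here is a framework-free sketch. Everything in it is a stand-in: the two tools are hypothetical placeholders for the news API endpoints, and `fake_model` is a hard-coded function that plays the role of the LLM so the example can run without any API key.

```python
from typing import Callable


# Hypothetical tools standing in for the news API endpoints.
def trending_keywords(category: str) -> list[str]:
    return [f"trending-{category}-1", f"trending-{category}-2"]


def keyword_sources(keyword: str) -> list[str]:
    return [f"source about {keyword}"]


TOOLS: dict[str, Callable] = {
    "trending_keywords": trending_keywords,
    "keyword_sources": keyword_sources,
}


def fake_model(question: str, history: list[tuple]) -> dict:
    """Stand-in for the LLM: picks the next tool call or a final answer."""
    if not history:
        return {"tool": "trending_keywords", "args": {"category": "companies"}}
    if len(history) == 1:
        first_keyword = history[0][1][0]
        return {"tool": "keyword_sources", "args": {"keyword": first_keyword}}
    return {"final": f"Summary based on {len(history)} tool calls"}


def run_agent(question: str, max_steps: int = 5) -> str:
    """Let the model choose tools until it returns a final answer."""
    history: list[tuple] = []
    for _ in range(max_steps):
        decision = fake_model(question, history)
        if "final" in decision:
            return decision["final"]
        result = TOOLS[decision["tool"]](**decision["args"])
        history.append((decision["tool"], result))
    return "Stopped: step limit reached"


print(run_agent("What happened in tech this week?"))
```

Notice that nothing in `run_agent` forces the model to visit every tool or every category. Whatever the model decides is what runs, which is exactly the control problem described above.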

Remember, I build these workflows using LangGraph. I won’t go into LangGraph in depth here, so if you want to learn the basics to be able to tweak the code, go here.

You can find the single-agent workflow here. To run it, you’ll need LangGraph Studio and the latest version of Docker installed.

Once you’re set up, open the project folder on your computer, add your GOOGLE_API_KEY in a .env file, and save. You can get a key from Google here.
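
If you want to confirm the key is in place before launching anything, a small check like the one below can help. It is only a sketch: the `load_env` helper is my own, it assumes the file uses plain `KEY=VALUE` lines, and it is not part of the workflow code.

```python
import os
from pathlib import Path


def load_env(path: str = ".env") -> dict[str, str]:
    """Read KEY=VALUE lines from a .env file into os.environ."""
    loaded: dict[str, str] = {}
    env_file = Path(path)
    if not env_file.exists():
        return loaded
    for line in env_file.read_text().splitlines():
        line = line.strip()
        # Skip blanks, comments, and anything that is not KEY=VALUE.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        loaded[key.strip()] = value.strip().strip('"').strip("'")
    os.environ.update(loaded)
    return loaded


load_env()
if not os.environ.get("GOOGLE_API_KEY"):
    print("GOOGLE_API_KEY is not set; add it to the .env file")
```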

Gemini Flash 2.0 has a generous free tier, so running this shouldn’t cost anything (but you may run into errors if you use it too much).

If you want to switch to another LLM or other tools, you can tweak the code directly. But, again, remember the LLM needs to be compatible.

After setup, launch LangGraph Studio and select the correct folder.

This will boot up our workflow so we can test it.

If you run into issues booting this up, double-check that you’re using the latest version of Docker.

Once it’s loaded, you can test the workflow by entering a human message and hitting submit.

You can see me run the workflow below.

You can see the final response below.

For this prompt, it decided to check weekly trending keywords filtered by the category ‘companies’ only, and then it fetched the sources of those keywords and summarized them for us.

It had some trouble giving us a unified summary: it simply used the information it got last and failed to use all of the research.

In reality, we want it to fetch both trending and top keywords within several categories (not just companies), check sources, track specific keywords, and reason about and summarize it all nicely before returning a response.

We can of course probe it and keep asking it questions, but as you can imagine, if we need something more complex it will start to take shortcuts in the workflow.

The key thing is, an agent system isn’t just going to think the way we expect; we have to actually orchestrate it to do what we want.

So a single agent is great for something simple, but as you can imagine it may not think or behave as we expect.

This is why going for a more complex system, where each agent is responsible for one thing, can be really useful.

Testing a Multi-Agent Workflow

Building multi-agent workflows is a lot more difficult than building a single agent with access to some tools. To do this, you need to think carefully about the architecture beforehand and how data should flow between the agents.

The multi-agent workflow I’ll set up here uses two different teams, a research team and an editing team, with several agents under each.

Every agent has access to a specific set of tools.

We’re introducing some new tools, like a research pad that acts as a shared space: one team writes its findings, the other reads from it. The last LLM will read everything that has been researched and edited to make a summary.
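
The research pad idea can be sketched in a few lines. This is a simplified, in-memory stand-in, not the tool used in the workflow; the class name `ResearchPad` and the agent names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ResearchPad:
    """Shared space: the research team appends, the editing team reads."""

    notes: list[dict] = field(default_factory=list)

    def write(self, agent: str, finding: str) -> None:
        self.notes.append({"agent": agent, "finding": finding})

    def read(self) -> str:
        return "\n".join(f"[{n['agent']}] {n['finding']}" for n in self.notes)


pad = ResearchPad()
pad.write("trending_agent", "Keyword A is trending in the companies category")
pad.write("keyword_tracker", "Keyword B was mentioned more than last week")
print(pad.read())
```

In an agent setup, `write` and `read` would each be exposed as a tool, so the researchers only get the first and the editors and summarizer only get the second.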

An alternative to using a research pad is to store data in a scratchpad in state, isolating short-term memory for each team or agent. But that also means thinking carefully about what each agent’s memory should include.

I also decided to build out the tools a bit more to provide richer data upfront, so the agents don’t have to fetch sources for each keyword individually. Here I’m using normal programmatic logic because I can.

A key thing to remember: if you can use normal programming logic, do it.

Since we’re using multiple agents, you can lower costs by using cheaper models for most agents and reserving the more expensive ones for the important stuff.

Here, I’m using Gemini Flash 2.0 for all agents except the summarizer, which runs on OpenAI’s GPT-4o. If you want higher-quality summaries, you can use an even more advanced LLM with a larger context window.

The workflow is set up for you here. Before loading it, make sure to add both your OpenAI and Google API keys in a .env file.

In this workflow, the routes (edges) are set up dynamically instead of manually like we did with the single agent. It’ll look more complex if you peek into the code.

Once you boot up the workflow in LangGraph Studio (same process as before), you’ll see the graph with all these nodes ready.

LangGraph Studio lets us visualize how the system delegates work between agents when we run it, just like we saw in the simpler workflow above.

Since I understand the tools each agent is using, I can prompt the system in the right way. But regular users won’t know how to do this properly. So if you’re building something similar, I’d suggest introducing an agent that transforms the user’s query into something the other agents can actually work with.
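
To give a sense of what such a transformation step would produce, here is a sketch. Note the swap: in a real system this would be an LLM call with a structured output schema, whereas `transform_query` below is a rule-based stand-in so the example runs on its own. The `ResearchRequest` fields are my own assumption about what the downstream agents need.

```python
import re
from dataclasses import dataclass, field

KNOWN_CATEGORIES = ["companies", "people", "websites", "subjects"]


@dataclass
class ResearchRequest:
    period: str = "weekly"
    categories: list[str] = field(default_factory=list)
    keywords: list[str] = field(default_factory=list)


def transform_query(query: str) -> ResearchRequest:
    """Rule-based stand-in for an LLM that structures the user's query."""
    lowered = query.lower()
    period = "daily" if "today" in lowered else "weekly"
    categories = [c for c in KNOWN_CATEGORIES if c in lowered]
    keywords: list[str] = []
    match = re.search(r"keywords?:\s*(.+)", query, flags=re.IGNORECASE)
    if match:
        # Split on commas (optionally followed by "and") or a bare "and".
        parts = re.split(r",\s*(?:and\s+)?|\s+and\s+", match.group(1))
        keywords = [p.strip(" .") for p in parts if p.strip(" .")]
    # Fall back to every category when the user names none.
    return ResearchRequest(period, categories or KNOWN_CATEGORIES, keywords)


request = transform_query(
    "Update me on this week in tech (companies and people). "
    "Please also track these keywords: AI, Google, and Microsoft"
)
print(request)
```

The other agents then receive explicit fields instead of free text, so they no longer have to guess which categories or keywords the user meant.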

We can test it out by setting a message.

“I’m an investor and I’m interested in getting an update for what has happened within the week in tech, and what people are talking about (this means categories like companies, people, websites and subjects are interesting). Please also track these specific keywords: AI, Google, Microsoft, and Large Language Models”

Then choose “supervisor” as the Next parameter (we’d normally do this programmatically).
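
For intuition on what that Next value does, here is a stripped-down sketch of supervisor-style routing in plain Python. It does not use LangGraph, the team names are hypothetical, and the supervisor here follows fixed rules, whereas in the real workflow an LLM makes that choice.

```python
def supervisor(state: dict) -> dict:
    """Pick the next team based on which work is still missing."""
    if not state["research"]:
        return {**state, "next": "research_team"}
    if not state["edited"]:
        return {**state, "next": "editing_team"}
    return {**state, "next": "FINISH"}


def research_team(state: dict) -> dict:
    # Placeholder findings; always hands control back to the supervisor.
    return {**state, "research": ["finding 1", "finding 2"], "next": "supervisor"}


def editing_team(state: dict) -> dict:
    edited = [f.upper() for f in state["research"]]
    return {**state, "edited": edited, "next": "supervisor"}


NODES = {
    "supervisor": supervisor,
    "research_team": research_team,
    "editing_team": editing_team,
}


def run(state: dict) -> dict:
    """Follow the `next` field from node to node until it says FINISH."""
    visited = []
    while state["next"] != "FINISH":
        visited.append(state["next"])
        state = NODES[state["next"]](state)
    return {**state, "visited": visited}


final = run({"next": "supervisor", "research": [], "edited": []})
print(final["visited"])
```

Setting the starting value to "supervisor" is what the manual step in Studio does: it tells the graph which node gets the first turn.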

This workflow will take several minutes to run, unlike the single-agent workflow we ran earlier, which finished in under a minute.

So be patient while the tools are running.

In general, these systems take time to gather and process information, and that’s just something we need to get used to.

The final summary will look something like this:

You can read the whole thing here instead if you want to check it out.

The news will obviously vary depending on when you run the workflow. I ran it on the 28th of March, so the example report will be for this date.

It should save the summary to a text document, but if you’re running this inside a container, you likely won’t be able to access that file easily. It’s better to send the output elsewhere, like Google Docs or via email.

As for the results, I’ll let you decide for yourself the difference between using a more complex system versus a simple one, and how it gives us more control over the process.

Ending Notes

I’m working with a good data source here. Without that, you’d need to add a lot more error handling, which would slow everything down even more.

Clean and structured data is key. Without it, the LLM won’t perform at its best.

Even with solid data, it’s not perfect. You still need to work on the agents to make sure they do what they’re supposed to.

You’ve probably already noticed the system works, but it’s not quite there yet.

There are still several things that need improvement: parsing the user’s query into a more structured format, adding guardrails so agents always use their tools, summarizing more effectively to keep the research doc concise, improving error handling, and introducing long-term memory to better understand what the user actually needs.

State (short-term memory) is especially important if you want to optimize for performance and cost.

Right now, we’re just pushing every message into state and giving all agents access to it, which isn’t ideal. We really want to separate state between the teams. In this case, it’s something I haven’t done, but you can try it by introducing a scratchpad in the state schema to isolate what each team knows.
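
As a starting point for that experiment, a state schema with per-team scratchpads could look something like this. It is a sketch in plain Python using `TypedDict`; the field names and helper functions are my own assumptions, and wiring it into the actual graph is left out.

```python
from typing import TypedDict


class TeamScratchpad(TypedDict):
    notes: list[str]


class WorkflowState(TypedDict):
    messages: list[str]                     # shared between teams, kept short
    scratchpads: dict[str, TeamScratchpad]  # one private pad per team


def write_note(state: WorkflowState, team: str, note: str) -> WorkflowState:
    """Return a new state with the note added to one team's scratchpad only."""
    pads = dict(state["scratchpads"])
    existing = pads.get(team, {"notes": []})
    pads[team] = {"notes": [*existing["notes"], note]}
    return {"messages": state["messages"], "scratchpads": pads}


def visible_to(state: WorkflowState, team: str) -> list[str]:
    """What a team's agents see: shared messages plus their own notes."""
    own = state["scratchpads"].get(team, {"notes": []})["notes"]
    return [*state["messages"], *own]


state: WorkflowState = {"messages": ["user request"], "scratchpads": {}}
state = write_note(state, "research", "raw API response")
state = write_note(state, "editing", "draft outline")
print(visible_to(state, "editing"))
```

Each team’s prompt is then built from `visible_to` rather than the full message history, which keeps context windows smaller and cuts token cost.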

Regardless, I hope it was a fun experience to see the results we can get by building different agentic workflows.

If you want to see more of what I’m working on, you can follow me here but also on Medium, GitHub, or LinkedIn (though I’m hoping to move over to X soon). I also have a Substack, where I hope to publish shorter pieces.

❤️