Ever wondered how models like ChatGPT actually work under the hood? In this blog, we'll take a friendly but technically detailed tour through the inner workings of a GPT-style model: yes, the same kind of architecture that powers some of today's most advanced AI systems.

We won't just talk about theory; we'll also show how to implement a simplified version from scratch using PyTorch. Whether you're a machine learning enthusiast, a researcher trying to demystify transformers, or just curious about how these large language models are put together, this guide is for you.

Let's break down complex ideas into understandable pieces and build our very own tiny GPT.

To process natural language, the text must be broken down into smaller units, or tokens. This is handled by a tokenizer. In this implementation, torchtext's basic_english tokenizer splits the text into lowercase words and punctuation.

A vocabulary is then built from these tokens, assigning a unique integer index to each one. Special tokens like

tokenizer = get_tokenizer("basic_english")

vocab = build_vocab_from_iterator([tokenizer(text)], specials=['', '', ''])

Only at this point can we convert the textual data into a numerical format (input IDs) that the model can understand. These IDs are reshaped into a matrix of shape (batch_size, sequence_length) to facilitate batch processing during training. The vocabulary also provides out-of-vocabulary (OOV) handling and the token-to-ID transformation.

A Tokenizer class is designed to:

- Tokenize each line of input text

- Build a vocabulary using token frequency

- Transform tokens into numerical IDs

- Reshape the resulting token stream into a batch-friendly format

This forms the essential preprocessing pipeline for feeding data into a transformer.
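The four steps above can be sketched without any dependencies. This is a minimal illustration, not the article's actual Tokenizer class: the name `SimpleTokenizer`, the regex-based splitting (a rough stand-in for torchtext's basic_english), and the special token names `<unk>` and `<pad>` are all assumptions made for the example.

```python
import re
from collections import Counter


class SimpleTokenizer:
    """Hypothetical stand-in for the article's Tokenizer class (no torchtext needed)."""

    def __init__(self, specials=("<unk>", "<pad>")):
        # Special tokens get the lowest IDs; these names are illustrative assumptions.
        self.specials = list(specials)
        self.token_to_id = {}

    @staticmethod
    def tokenize(line):
        # Lowercase, then split into words and single punctuation marks.
        return re.findall(r"\w+|[^\w\s]", line.lower())

    def build_vocab(self, lines):
        counts = Counter(tok for line in lines for tok in self.tokenize(line))
        # Most frequent tokens first; ties broken alphabetically for determinism.
        ordered = sorted(counts, key=lambda t: (-counts[t], t))
        self.token_to_id = {t: i for i, t in enumerate(self.specials + ordered)}

    def encode(self, line):
        # Unknown tokens fall back to the "<unk>" ID (assumes "<unk>" is a special).
        unk = self.token_to_id["<unk>"]
        return [self.token_to_id.get(t, unk) for t in self.tokenize(line)]

    def batchify(self, ids, batch_size):
        # Drop the tail so the stream divides evenly, then cut it into rows.
        seq_len = len(ids) // batch_size
        return [ids[i * seq_len:(i + 1) * seq_len] for i in range(batch_size)]


tok = SimpleTokenizer()
lines = ["The cat sat.", "The dog sat!"]
tok.build_vocab(lines)
ids = [i for line in lines for i in tok.encode(line)]
batch = tok.batchify(ids, batch_size=2)   # 2 rows of 4 token IDs each
```

Any word that was not seen while building the vocabulary maps to the `<unk>` ID, which is the OOV handling mentioned earlier.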

Language models are trained to predict the next word in a sequence. To do this, we:

- Add special tokens at the beginning and at the end of each input sequence

- Create labels by shifting the input by one position, so that the label at each position is the token that follows it

We also apply masking to ignore padding in the loss:

y[:,0:-1] = x[:,1:]

y[:,-1] = -100 # Mask padding in loss

This shifted-label setup is a fundamental aspect of training autoregressive models like GPT. A second kind of masking is just as essential: a causal mask applied to the attention weights prevents the model from peeking at future tokens during training.
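To make both ideas concrete, here is a small sketch on a toy batch. The token IDs are made up, and building the causal mask with `torch.tril` is one common way to do it rather than necessarily what the original code does.

```python
import torch

# Toy batch of token IDs with shape (batch_size, sequence_length).
x = torch.tensor([[5, 8, 2, 9],
                  [7, 3, 6, 4]])

# Labels: position i holds the token found at position i + 1 of the input.
y = torch.full_like(x, -100)   # -100 is the default ignore_index of CrossEntropyLoss
y[:, :-1] = x[:, 1:]           # the last column stays -100 (no next token available)

# Causal mask: entry (i, j) is 1 when position i may attend to position j (j <= i).
seq_len = x.size(1)
causal_mask = torch.tril(torch.ones(seq_len, seq_len, dtype=torch.long))
```

Row `i` of `causal_mask` has ones only up to column `i`, so each position can see itself and everything before it, but nothing after.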

The core component of GPT is masked multi-head attention. This module projects input embeddings into query (Q), key (K), and value (V) vectors. The attention weights are computed by taking the dot product of Q and K, dividing by the square root of the key dimension, masking, and then applying a softmax.

Attention allows the model to weigh the relevance of other tokens in the sequence when predicting a token. In GPT, we use masked self-attention to ensure the model doesn't peek ahead at future tokens.

This is achieved via matrix multiplication of queries and keys, followed by scaling, masking, and a softmax:

scores = torch.matmul(Q, K.transpose(-2, -1)) / math.sqrt(d_k)

scores = scores.masked_fill(mask == 0, -1e9)

A softmax over the last dimension then turns the masked scores into attention weights:

attn = torch.softmax(scores, dim=-1)

Each attention head operates independently and captures a different contextual relationship; the outputs of all heads are concatenated and passed through a final linear projection. Multi-head attention thus allows the model to attend to different subspaces of information in parallel.

Masking ensures causal (left-to-right) processing: because masked positions receive a very large negative score, the softmax assigns attention weight only to visible (past and present) tokens.

Key design choices:

- Linear projections for Q, K, V for each head

- Scaled dot-product attention with masking

- Shared output projection to mix head outputs
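Putting these design choices together, a masked multi-head attention module might look like the sketch below. The class name, the use of one wide projection that is split into heads, and the layer names (`w_q`, `w_k`, `w_v`, `w_o`) are assumptions for illustration; the original module may be organized differently.

```python
import math
import torch
import torch.nn as nn


class MaskedMultiHeadAttention(nn.Module):
    def __init__(self, d_model, num_heads):
        super().__init__()
        assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
        self.num_heads = num_heads
        self.d_k = d_model // num_heads
        # One wide projection each for Q, K, V; it is split into heads below.
        self.w_q = nn.Linear(d_model, d_model)
        self.w_k = nn.Linear(d_model, d_model)
        self.w_v = nn.Linear(d_model, d_model)
        self.w_o = nn.Linear(d_model, d_model)  # shared output projection

    def _split(self, t):
        # (batch, seq, d_model) -> (batch, heads, seq, d_k)
        b, s, _ = t.shape
        return t.view(b, s, self.num_heads, self.d_k).transpose(1, 2)

    def forward(self, x):
        b, s, _ = x.shape
        Q = self._split(self.w_q(x))
        K = self._split(self.w_k(x))
        V = self._split(self.w_v(x))
        scores = torch.matmul(Q, K.transpose(-2, -1)) / math.sqrt(self.d_k)
        mask = torch.tril(torch.ones(s, s, device=x.device))   # 1 = visible
        scores = scores.masked_fill(mask == 0, -1e9)
        attn = torch.softmax(scores, dim=-1)
        out = torch.matmul(attn, V)                             # (batch, heads, seq, d_k)
        out = out.transpose(1, 2).contiguous().view(b, s, -1)   # concatenate heads
        return self.w_o(out)


torch.manual_seed(0)
mha = MaskedMultiHeadAttention(d_model=16, num_heads=4)
x = torch.randn(2, 5, 16)
out = mha(x)   # same shape as the input: (2, 5, 16)
```

A quick way to convince yourself the mask works: change only the last token of `x` and check that the outputs for all earlier positions stay the same.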

Each transformer block includes a position-wise feedforward network (FFN), which applies two linear transformations with a ReLU activation in between. This adds depth and non-linearity to the model, allowing it to model complex functions over the attention outputs.

Parameters:

- d_model: Dimension of embeddings

- d_ff: Hidden dimension of the FFN, usually 4x d_model for increased capacity

hidden = F.relu(self.fc1(x))

output = self.fc2(hidden)

This adds expressiveness to the model beyond what attention can capture alone.
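Wrapped in a module, the two lines above could look like this. The class name `PositionwiseFFN` and the default of `4 * d_model` for `d_ff` are assumptions for the sketch.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class PositionwiseFFN(nn.Module):
    def __init__(self, d_model, d_ff=None):
        super().__init__()
        d_ff = d_ff if d_ff is not None else 4 * d_model  # common 4x expansion
        self.fc1 = nn.Linear(d_model, d_ff)
        self.fc2 = nn.Linear(d_ff, d_model)

    def forward(self, x):
        hidden = F.relu(self.fc1(x))   # expand and apply the non-linearity
        return self.fc2(hidden)        # project back to d_model


ffn = PositionwiseFFN(d_model=16)
x = torch.randn(2, 5, 16)
out = ffn(x)   # shape is preserved: (2, 5, 16)
```

"Position-wise" means the same weights are applied to every token independently, so running the FFN on a single position gives the same result as slicing that position out of the full output.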

Since transformers have neither recurrence nor convolution, they need another way to capture the order of words. So we add positional encodings to the input embeddings to provide a sense of token order. The encoding uses a fixed sinusoidal pattern based on the token's position and dimension index.

Because the encoding differs at every position, it helps the model distinguish between identical tokens that appear in different positions.

position = torch.arange(seq_len).unsqueeze(1)

encoding = torch.sin(position * freq_tensor)
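For reference, here is a fuller sketch following the formulation from the original Transformer paper, which interleaves sines and cosines. That `freq_tensor` above corresponds to these frequencies is our assumption, and the function name `sinusoidal_encoding` is made up for the example.

```python
import math
import torch


def sinusoidal_encoding(seq_len, d_model):
    # Assumes d_model is even so that sine and cosine columns pair up.
    position = torch.arange(seq_len, dtype=torch.float32).unsqueeze(1)   # (seq_len, 1)
    freq = torch.exp(
        torch.arange(0, d_model, 2, dtype=torch.float32) * (-math.log(10000.0) / d_model)
    )                                                                     # (d_model / 2,)
    encoding = torch.zeros(seq_len, d_model)
    encoding[:, 0::2] = torch.sin(position * freq)   # even dimensions
    encoding[:, 1::2] = torch.cos(position * freq)   # odd dimensions
    return encoding


pe = sinusoidal_encoding(seq_len=10, d_model=16)   # one row per position
```

The result has shape (seq_len, d_model) and can be added directly to a batch of embeddings of shape (batch_size, seq_len, d_model) thanks to broadcasting.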

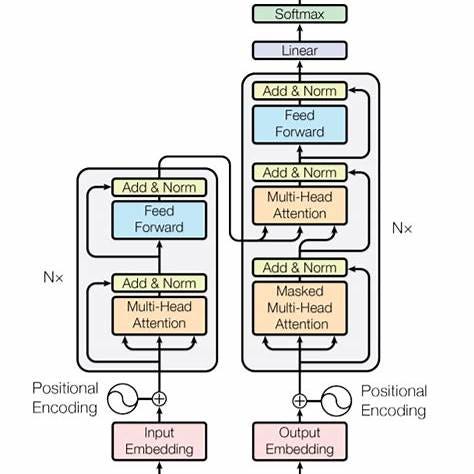

The model consists of a stack of decoder layers, each containing:

- Multi-head masked attention

- Add & LayerNorm

- Feedforward network

- Add & LayerNorm

Each decoder layer processes its input through self-attention followed by the FFN, with residual connections and normalization applied at each stage.

Each layer refines token representations by mixing in contextual information from previous tokens and updating the representation using learned weights. Layer normalization and residual connections help keep training stable.

x = self.layer_norm1(x + self.mhma(x))

x = self.layer_norm2(x + self.ffn(x))

This stacking allows the model to build increasingly abstract features at each level, and the architecture allows it to learn contextual representations that evolve across layers.
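A self-contained sketch of one such layer, and a small stack of them, is shown below. To keep it short we swap in PyTorch's built-in `nn.MultiheadAttention` with a causal mask in place of the article's own `mhma` module; the class name `DecoderLayer` and the sizes are assumptions for illustration.

```python
import torch
import torch.nn as nn


class DecoderLayer(nn.Module):
    def __init__(self, d_model, num_heads, d_ff):
        super().__init__()
        # Built-in attention used here in place of the article's mhma module.
        self.attn = nn.MultiheadAttention(d_model, num_heads, batch_first=True)
        self.ffn = nn.Sequential(
            nn.Linear(d_model, d_ff), nn.ReLU(), nn.Linear(d_ff, d_model)
        )
        self.layer_norm1 = nn.LayerNorm(d_model)
        self.layer_norm2 = nn.LayerNorm(d_model)

    def forward(self, x):
        seq_len = x.size(1)
        # For boolean masks, True marks positions that may NOT be attended to.
        causal = torch.triu(
            torch.ones(seq_len, seq_len, dtype=torch.bool, device=x.device), diagonal=1
        )
        attn_out, _ = self.attn(x, x, x, attn_mask=causal, need_weights=False)
        x = self.layer_norm1(x + attn_out)      # Add & LayerNorm after attention
        x = self.layer_norm2(x + self.ffn(x))   # Add & LayerNorm after the FFN
        return x


torch.manual_seed(0)
layers = nn.Sequential(*[DecoderLayer(d_model=16, num_heads=4, d_ff=64) for _ in range(2)])
x = torch.randn(2, 5, 16)
out = layers(x)   # shape is preserved through the stack: (2, 5, 16)
```

Because every layer maps (batch, seq, d_model) to the same shape, layers can be stacked to any depth without extra glue code.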

The input token IDs are first embedded using an nn.Embedding layer, which maps each token index to a learnable dense vector. Positional encoding is then added to retain order information.

The output of the last decoder layer is fed into a linear layer (PredictionHead) that maps the hidden states back to vocabulary space, producing logits for next-token prediction.

logits = self.pred(self.decoder(self.embed(x)))

These logits are used during training with a cross-entropy loss function.
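As a rough end-to-end picture, the sketch below wires embedding, positions, a decoder stack, and a prediction head into one module. It is not the article's implementation: for brevity it uses PyTorch's `nn.TransformerEncoder` with a causal mask as the decoder stack and a learned positional embedding instead of the sinusoidal one. The name `TinyGPT` and all hyperparameters are assumptions.

```python
import torch
import torch.nn as nn


class TinyGPT(nn.Module):
    def __init__(self, vocab_size, d_model=32, num_heads=4, d_ff=128, num_layers=2, max_len=64):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, d_model)
        # Learned positions are used here for brevity; the article uses sinusoidal ones.
        self.pos = nn.Embedding(max_len, d_model)
        layer = nn.TransformerEncoderLayer(
            d_model, num_heads, dim_feedforward=d_ff, dropout=0.0, batch_first=True
        )
        # An encoder stack plus a causal mask behaves like a decoder-only stack.
        self.decoder = nn.TransformerEncoder(layer, num_layers, enable_nested_tensor=False)
        self.pred = nn.Linear(d_model, vocab_size)   # plays the role of PredictionHead

    def forward(self, x):
        seq_len = x.size(1)
        positions = torch.arange(seq_len, device=x.device)
        h = self.embed(x) + self.pos(positions)
        causal = torch.triu(
            torch.ones(seq_len, seq_len, dtype=torch.bool, device=x.device), diagonal=1
        )
        h = self.decoder(h, mask=causal)
        return self.pred(h)   # (batch, seq_len, vocab_size)


model = TinyGPT(vocab_size=50)
tokens = torch.randint(0, 50, (2, 8))
logits = model(tokens)   # one score per vocabulary entry at every position
```

Each position in `logits` holds unnormalized scores over the whole vocabulary, which is exactly what a cross-entropy loss expects.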

We use CrossEntropyLoss, which works directly on logits and handles token-level classification. Padding tokens are masked using the ignore index:

loss_fn = nn.CrossEntropyLoss(ignore_index=-100)

loss = loss_fn(logits.view(-1, vocab_size), targets.view(-1))
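To see what the ignore index does, the sketch below uses random logits and targets (made-up data, not model output) and checks the loss against a manual computation that simply drops the ignored positions.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
batch_size, seq_len, vocab_size = 2, 4, 10
logits = torch.randn(batch_size, seq_len, vocab_size)
targets = torch.randint(0, vocab_size, (batch_size, seq_len))
targets[:, -1] = -100   # positions to skip, e.g. padding or the final step

loss_fn = nn.CrossEntropyLoss(ignore_index=-100)
loss = loss_fn(logits.view(-1, vocab_size), targets.view(-1))

# Same value computed by hand over the kept positions only.
keep = targets.view(-1) != -100
manual = nn.functional.cross_entropy(
    logits.view(-1, vocab_size)[keep], targets.view(-1)[keep]
)
```

The two values match because, with the default mean reduction, ignored positions contribute neither to the sum nor to the count being averaged over.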

By combining tokenization, masking, multi-head attention, feedforward layers, and embeddings, we construct a minimalist but functional GPT-style model. The architecture mimics the decoder stack of the original Transformer paper, with causal masking for language generation.

This implementation provides insight into how transformers process and predict language. While simplified, it reflects the core design principles of larger models like GPT-2/3.

This model can be extended with additional layers, dropout, and more sophisticated training techniques for improved performance and scalability.