Before diving into feature engineering, let’s first take a closer look at the H&M Fashion Recommendation dataset.

The dataset consists of three main tables: articles, customers, and transactions.

Below is how you can extract and inspect the data:

```python
from recsys.raw_data_sources import h_and_m as h_and_m_raw_data

# Extract the articles data
articles_df = h_and_m_raw_data.extract_articles_df()
print(articles_df.shape)
articles_df.head()

# Extract the customers data
customers_df = h_and_m_raw_data.extract_customers_df()
print(customers_df.shape)
customers_df.head()

# Extract the transactions data
transactions_df = h_and_m_raw_data.extract_transactions_df()
print(transactions_df.shape)
transactions_df.head()
```

🔗 Full code here → GitHub

This is what the data looks like:

1 — Customers Table

- Customer ID: A unique identifier for each customer.

- Age: Provides demographic information, which can help predict age-related purchasing behavior.

- Membership status: Indicates whether a customer is a member, which may impact buying patterns and preferences.

- Fashion news frequency: Reflects how often customers receive fashion news, hinting at their engagement level.

- Club member status: Shows whether the customer is an active club member, which can affect loyalty and purchase frequency.

- FN (fashion news score): A numeric score reflecting the customer’s engagement with fashion-related content.

2 — Articles Table

- Article ID: A unique identifier for each product.

- Product group: Categorizes products into groups like dresses, tops, or shoes.

- Color: Describes each product’s color, which is important for visual similarity recommendations.

- Department: Indicates the department to which the article belongs, providing context for the type of product.

- Product type: A more detailed classification within product groups.

- Product code: A unique identifier for each product variant.

- Index code: Represents product indexes, useful for segmenting similar items within the same category.

3 — Transactions Table

- Transaction ID: A unique identifier for each transaction.

- Customer ID: Links the transaction to a specific customer.

- Article ID: Links the transaction to a specific product.

- Price: Reflects the transaction amount, which helps analyze spending habits.

- Sales channel: Shows whether the purchase was made online or in-store.

- Timestamp: Records the exact time of the transaction, useful for time-based analysis.

🔗 Full code here → GitHub

The tables are linked through unique identifiers such as customer and article IDs. These connections are crucial for making the most of the H&M dataset:

- Customers to Transactions: By associating customer IDs with transaction data, we can create behavioral features like purchase frequency, recency, and total spending, which provide insights into customer activity and preferences.

- Articles to Transactions: Linking article IDs to transaction data helps us analyze product popularity, identify trends, and understand customer preferences for different types of products.

- Cross-Table Analysis: Combining data from multiple tables allows us to perform advanced feature engineering. For example, we can track seasonal product trends or segment customers based on purchasing behavior, enabling more personalized recommendations.
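As a rough illustration of the Customers-to-Transactions link, the sketch below derives purchase frequency, recency, and total spending per customer from raw transaction records. It is a minimal, hypothetical example in plain Python: the simplified `Transaction` record, its fields, and the `customer_behavior_features` helper are assumptions for illustration, not the course’s feature code (which works on Polars DataFrames).

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Transaction:
    # Hypothetical, simplified transaction record (not the dataset's full schema)
    customer_id: str
    article_id: str
    price: float
    t_dat: date


def customer_behavior_features(
    transactions: list[Transaction], reference_date: date
) -> dict[str, dict[str, float]]:
    """Compute purchase frequency, recency (in days), and total spending per customer."""
    stats: dict[str, dict[str, float]] = defaultdict(
        lambda: {"frequency": 0, "recency_days": float("inf"), "total_spent": 0.0}
    )
    for tx in transactions:
        s = stats[tx.customer_id]
        s["frequency"] += 1  # number of purchases
        s["total_spent"] += tx.price  # cumulative spending
        days_ago = (reference_date - tx.t_dat).days
        s["recency_days"] = min(s["recency_days"], days_ago)  # days since latest purchase
    return dict(stats)
```

Joining these per-customer values back onto the customer rows is exactly the kind of cross-table feature the list above describes.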

Table relationships provide a clearer picture of how customers interact with products, which helps improve the accuracy of the recommendation model in suggesting relevant items.

- The Customers table contains customer data, including unique customer IDs (Primary Key), membership status, and fashion news preferences.

- The Articles table stores product details like article IDs (Primary Key), product codes, and product names.

- The Transactions table links customers and articles through purchases, with fields for the transaction date, customer ID (Foreign Key), and article ID (Foreign Key).

The double-line notations between tables indicate one-to-many relationships: each customer can make multiple transactions, and each article can appear in multiple transactions.

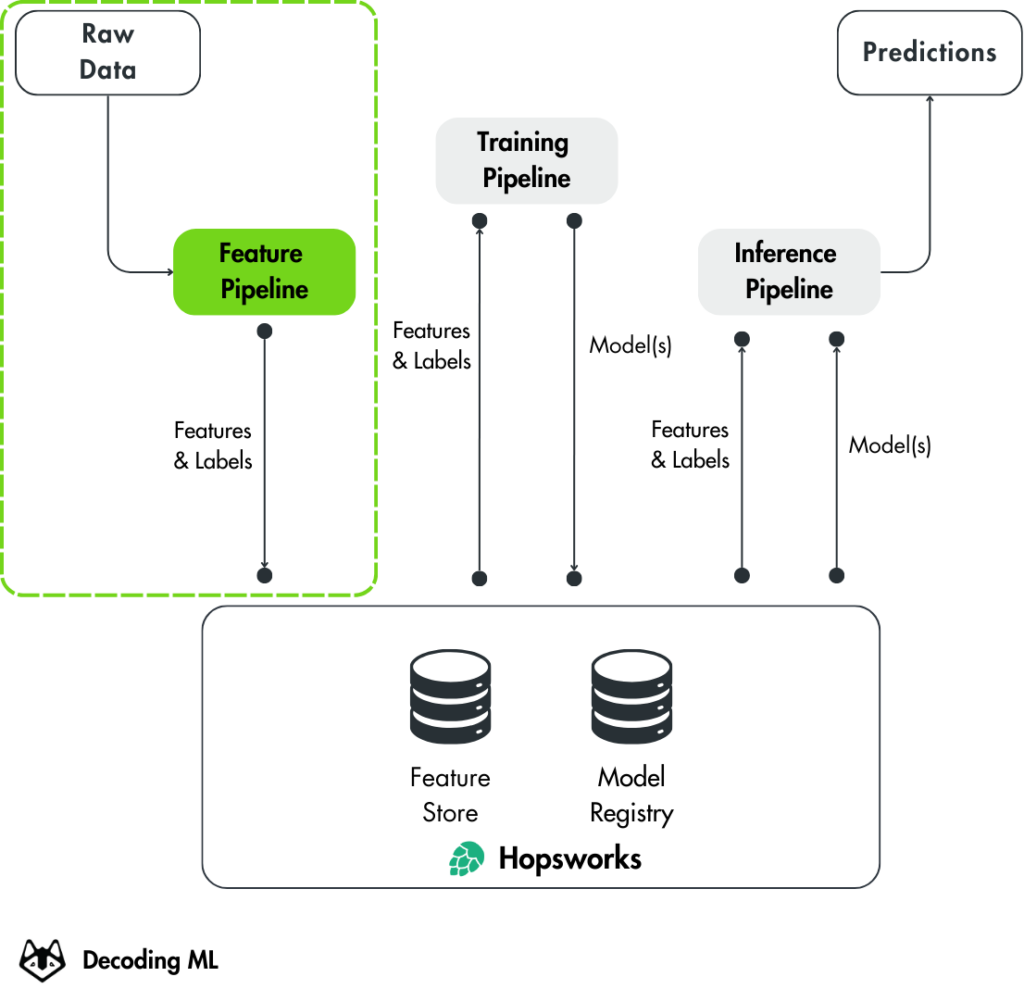

The feature pipeline takes raw data as input and outputs the features and labels used for training and inference.

📚 Read more about feature pipelines and their integration into ML systems [6].

Creating effective features for both the retrieval and ranking models is the foundation of a successful recommendation system.

Feature engineering for the two-tower model

The primary objective of the two-tower retrieval model is to learn user and item embeddings that capture the interaction patterns between customers and articles.

We use the transactions table as our source of ground truth: each purchase represents a positive interaction between a customer and an article.

This is the foundation for training the model to maximize the similarity between the embeddings of actual interactions (positive pairs).

The notebook imports the necessary libraries and modules for feature computation.

This snippet lists the default settings used throughout the notebook, such as model IDs, learning rates, and batch sizes.

It’s helpful for understanding how the feature pipeline and models are configured.

```python
from pprint import pprint

# `settings` is the project's configuration object, imported earlier in the notebook
pprint(dict(settings))
```

🔗 Full code here → GitHub

Training objective

The goal of the two-tower retrieval model is to use a minimal, strong feature set that is highly predictive without introducing unnecessary complexity.

The model aims to maximize the similarity between customer and article embeddings for purchased items while minimizing the similarity for non-purchased items.

This objective is achieved using a loss function such as the cross-entropy loss for sampled softmax, or a contrastive loss. The resulting embeddings are then optimized for nearest-neighbor search, which enables efficient filtering in downstream recommendation tasks.
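To make the sampled-softmax objective concrete, here is a minimal NumPy sketch of its common in-batch variant: each customer’s purchased article is the positive, and the other articles in the same batch act as sampled negatives. The function name and the in-batch negative strategy are illustrative assumptions; the course’s actual training code may use a different loss or sampling scheme.

```python
import numpy as np


def in_batch_softmax_loss(query_emb: np.ndarray, item_emb: np.ndarray) -> float:
    """Cross-entropy over in-batch candidates.

    Row i of `item_emb` is the purchased (positive) article for customer i;
    every other row in the batch acts as a sampled negative.
    """
    logits = query_emb @ item_emb.T  # (batch, batch) dot-product similarities
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))  # positives sit on the diagonal
```

Minimizing this loss pushes each customer embedding toward its purchased article and away from the other articles in the batch, which is what makes the embeddings useful for nearest-neighbor search.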

Feature selection

The two-tower retrieval model intentionally uses a minimal set of strong features to learn robust embeddings:

(1) Query features, used by the QueryTower (the customer encoder of the two-tower model):

- customer_id: A categorical feature that uniquely identifies each user. This is the backbone of the user embeddings.

- age: A numerical feature that can capture demographic patterns.

- month_sin and month_cos: Numerical features that encode cyclical patterns (e.g., seasonality) in user behavior.

(2) Candidate features, used by the ItemTower (the encoder for H&M fashion articles in the two-tower model):

- article_id: A categorical feature that uniquely identifies each item. This is the backbone of the item embeddings.

- garment_group_name: A categorical feature that captures high-level categories (e.g., “T-Shirts”, “Dresses”) to provide additional context about the item.

- index_group_name: A categorical feature that captures broader item groupings (e.g., “Menswear”, “Womenswear”) to provide further context.
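The month_sin and month_cos query features listed above can be derived from a purchase month roughly as follows. This is a minimal sketch that assumes months numbered 1 to 12 and a 12-month period; the helper name `encode_month` is hypothetical, and the course’s code may scale the month slightly differently.

```python
import math


def encode_month(month: int) -> tuple[float, float]:
    """Map a month (1-12) onto the unit circle so December sits next to January."""
    if not 1 <= month <= 12:
        raise ValueError(f"month must be in 1..12, got {month}")
    angle = 2 * math.pi * (month - 1) / 12
    return math.sin(angle), math.cos(angle)
```

As a raw integer, December (12) and January (1) look 11 units apart; on the circle they are exactly as close as January and February, which is the cyclical pattern these two features are meant to expose.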

The query and candidate features are passed through their respective towers to generate the query (user) and item embeddings, which are then used to compute similarities during retrieval.

The limited feature set is optimized for the retrieval stage, which focuses on quickly identifying candidate items through an approximate nearest neighbor (ANN) search.
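For intuition, retrieval boils down to scoring every article embedding against the query embedding and keeping the top k. The NumPy sketch below does this exactly, by brute force; a production ANN index approximates the same result much faster at scale. The function and argument names are illustrative assumptions.

```python
import numpy as np


def top_k_items(
    query: np.ndarray, item_embs: np.ndarray, item_ids: list[str], k: int
) -> list[str]:
    """Exact nearest-neighbor retrieval by cosine similarity (brute force)."""
    q = query / np.linalg.norm(query)
    items = item_embs / np.linalg.norm(item_embs, axis=1, keepdims=True)
    scores = items @ q  # cosine similarity for each item
    best = np.argsort(-scores)[:k]  # indices of the k highest scores
    return [item_ids[i] for i in best]
```

Brute force costs one dot product per catalog item for every query, which is why ANN structures are used once the catalog reaches hundreds of thousands of articles.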

This minimal retrieval setup aligns with the four-stage recommender system architecture, ensuring efficient and scalable item retrieval.

This snippet computes features for articles, such as product descriptions and metadata, and displays their structure:

```python
articles_df = compute_features_articles(articles_df)
print(articles_df.shape)
articles_df.head(3)
```

compute_features_articles() takes the articles DataFrame and transforms it into a dataset with 27 features across 105,542 articles.

```python
import polars as pl


def compute_features_articles(df: pl.DataFrame) -> pl.DataFrame:
    # get_article_id, create_prod_name_length, create_article_description and
    # get_image_url are helpers defined elsewhere in the project
    df = df.with_columns(
        [
            get_article_id(df).alias("article_id"),
            create_prod_name_length(df).alias("prod_name_length"),
            pl.struct(df.columns)
            .map_elements(create_article_description)
            .alias("article_description"),
        ]
    )

    # Add full image URLs.
    df = df.with_columns(image_url=pl.col("article_id").map_elements(get_image_url))

    # Keep only the columns that contain no null values
    df = df.select([col for col in df.columns if not df[col].is_null().any()])

    # Remove the 'detail_desc' and 'detail_desc_length' columns
    columns_to_drop = ["detail_desc", "detail_desc_length"]
    existing_columns = df.columns
    columns_to_keep = [col for col in existing_columns if col not in columns_to_drop]

    return df.select(columns_to_keep)
```

One standard technique when preparing text for a model is to embed it. Dense embeddings avoid the curse of dimensionality that comes with one-hot encoding and the information loss caused by hashing.

The following snippet generates embeddings for the article descriptions using a pre-trained SentenceTransformer model.

```python
import torch
from sentence_transformers import SentenceTransformer

# Pick the best available device: NVIDIA GPU, Apple Silicon GPU, or CPU
device = (
    "cuda" if torch.cuda.is_available()
    else "mps" if torch.backends.mps.is_available()
    else "cpu"
)
# `logger`, `settings` and `generate_embeddings_for_dataframe` come from the project
logger.info(f"Loading '{settings.FEATURES_EMBEDDING_MODEL_ID}' embedding model to {device=}")

# Load the embedding model
model = SentenceTransformer(settings.FEATURES_EMBEDDING_MODEL_ID, device=device)

# Generate embeddings for articles
articles_df = generate_embeddings_for_dataframe(
    articles_df, "article_description", model, batch_size=128
)
```

🔗 Full code here → GitHub

Feature engineering for the ranking model

The ranking model has a more complex objective: accurately predicting the probability of purchase for each retrieved item.

This model uses a combination of query and item features, along with labels, to predict the likelihood of interaction between users and items.

This feature set is designed to provide rich contextual and descriptive information, enabling the model to rank items effectively.

Generate features for customers:

Training objective

The model is trained to predict purchase probability, with actual purchases (from the Transactions table) serving as positive labels (1) and non-purchases as negative labels (0).

This binary classification objective helps order the retrieved items by their likelihood of purchase.
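A binary classification objective like this is typically optimized with log loss (binary cross-entropy). The sketch below computes it for a few predicted purchase probabilities and shows how sorting by predicted probability yields the final order. It is illustrative only: the ranking model itself is not shown in this section, and both helper names are hypothetical.

```python
import math


def log_loss(labels: list[int], probs: list[float], eps: float = 1e-12) -> float:
    """Mean binary cross-entropy between 0/1 purchase labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(labels)


def rank_by_probability(candidates: dict[str, float]) -> list[str]:
    """Order retrieved article IDs by predicted purchase probability, highest first."""
    return sorted(candidates, key=candidates.get, reverse=True)
```

Confident, correct predictions give a lower loss than hesitant ones, and the ranking stage only needs the relative order of the predicted probabilities.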

Feature selection

(1) Query features: identical to those used in the retrieval model to encode the customer.

(2) Item features: used to represent the articles in the dataset. These features describe the products’ attributes and help the model understand item properties and relationships:

- article_id: A categorical feature that uniquely identifies each item, forming the foundation of the item representation.

- product_type_name: A categorical feature that describes the specific type of product (e.g., “T-Shirts”, “Dresses”), providing detailed item-level granularity.

- product_group_name: A categorical feature for higher-level grouping of items, useful for capturing broader category trends.

- graphical_appearance_name: A categorical feature representing the visual style of the item (e.g., “Solid”, “Striped”).

- colour_group_name: A categorical feature that captures the color group of the item (e.g., “Black”, “Blue”).

- perceived_colour_value_name: A categorical feature describing the brightness or value of the item’s color (e.g., “Light”, “Dark”).

- perceived_colour_master_name: A categorical feature representing the master color of the item (e.g., “Red”, “Green”), providing additional color-related information.

- department_name: A categorical feature denoting the department to which the item belongs (e.g., “Menswear”, “Womenswear”).

- index_name: A categorical feature representing broader categories, providing a high-level grouping of items.

- index_group_name: A categorical feature that groups items into overarching divisions (e.g., “Divided”, “Ladieswear”).

- section_name: A categorical feature describing the specific section within the store or catalog.

- garment_group_name: A categorical feature that captures high-level garment categories (e.g., “Jackets”, “Trousers”), helping the model generalize across similar items.

(3) Label: a binary feature used for supervised learning

- `1` indicates a positive pair (the customer purchased the item).

- `0` indicates a negative pair (the customer did not purchase the item; randomly sampled).

This approach is designed for the ranking stage of the recommender system, where the focus shifts from generating candidates to fine-tuning recommendations with higher precision.

By incorporating both query and item features, the model helps ensure that recommendations are relevant and personalized.

Constructing the final ranking dataset

The ranking dataset is the final dataset used to train the scoring/ranking model in the recommendation pipeline.

It is computed by combining query (customer) features, item (article) features, and the interactions (transactions) between them.

The compute_ranking_dataset() function combines the different features from the Feature Groups:

- `trans_fg`: The transactions Feature Group, which provides the labels (`1` for positive pairs and `0` for negative pairs) and additional interaction-based features (e.g., recency, frequency).

- `articles_fg`: The articles Feature Group, which contains the engineered item features (e.g., product type, color, department).

- `customers_fg`: The customers Feature Group, which contains customer features (e.g., age, membership status, purchase behavior).

The resulting ranking dataset includes:

- Customer features: From `customers_fg`, representing the query.

- Item features: From `articles_fg`, representing the candidate items.

- Interaction features: From `trans_fg`, such as purchase frequency or recency, which capture behavioral signals.

- Label: A binary label (`1` for purchased items, `0` for negative samples).

The result is a dataset where each row represents a customer-item pair, with features and a label indicating whether the customer interacted with the item.

In practice, it looks like this:

```python
ranking_df = compute_ranking_dataset(
    trans_fg,
    articles_fg,
    customers_fg,
)
ranking_df.shape
```

Negative sampling for the ranking dataset

The ranking dataset includes both positive and negative samples.

This ensures the model learns to differentiate between relevant and irrelevant items:

- Positive samples (Label = 1): derived from the transactions Feature Group (`trans_fg`), where a customer purchased a specific item.

- Negative samples (Label = 0): generated by randomly sampling items the customer did not purchase. These represent items the customer is less likely to interact with and help the model better understand what is irrelevant to the user.

```python
# Check the label distribution in the ranking dataset
ranking_df.get_column("label").value_counts()
```

Outputs:

```
label  count
i32    u32
1      20377
0      203770
```

Negative samples can also be constrained to make them more realistic, for example by sampling items from the same category or department as the customer’s purchases, or by including popular items the customer hasn’t interacted with, simulating plausible alternatives.

For example, if the customer purchased a “T-shirt,” negative samples might include other “T-shirts” they didn’t buy.

The number of negative samples is usually kept at a fixed ratio to the positive ones, for example 1 to 5 negatives for every positive. This keeps negative pairs, which vastly outnumber positive ones in real-world data, from dominating training. The `compute_ranking_dataset()` implementation uses 10 negatives per positive, which matches the 20,377 positive and 203,770 negative labels shown above.

```python
import polars as pl


def compute_ranking_dataset(trans_fg, articles_fg, customers_fg) -> pl.DataFrame:
    ...  # More code (elided; defines `df`)

    # Create positive pairs
    positive_pairs = df.clone()

    # Calculate the number of negative pairs (10 negatives per positive)
    n_neg = len(positive_pairs) * 10

    # Create the negative pairs DataFrame
    article_ids = (
        df.select("article_id")
        .unique()
        .sample(n=n_neg, with_replacement=True, seed=2)
        .get_column("article_id")
    )

    customer_ids = (
        df.select("customer_id")
        .sample(n=n_neg, with_replacement=True, seed=3)
        .get_column("customer_id")
    )

    other_features = df.select(["age"]).sample(n=n_neg, with_replacement=True, seed=4)

    # Assemble negative pairs
    negative_pairs = pl.DataFrame(
        {
            "article_id": article_ids,
            "customer_id": customer_ids,
            "age": other_features.get_column("age"),
        }
    )

    # Add labels
    positive_pairs = positive_pairs.with_columns(pl.lit(1).alias("label"))
    negative_pairs = negative_pairs.with_columns(pl.lit(0).alias("label"))

    # Concatenate positive and negative pairs
    ranking_df = pl.concat(
        [
            positive_pairs,
            negative_pairs.select(positive_pairs.columns),
        ]
    )

    ...  # More code

    return ranking_df
```
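The implementation above samples customers and articles uniformly at random. The more realistic strategy described earlier (negatives drawn from the same category as the purchase, never an item the customer actually bought, a fixed number per positive) could be sketched in plain Python as follows. This is a hypothetical helper, not part of the course code; the `category_of` mapping and the `n_neg_per_pos` parameter are assumptions for illustration.

```python
import random
from collections import defaultdict


def sample_category_negatives(
    purchases: list[tuple[str, str]],
    category_of: dict[str, str],
    n_neg_per_pos: int = 3,
    seed: int = 42,
) -> list[tuple[str, str, int]]:
    """Return (customer_id, article_id, label) rows: positives plus same-category negatives."""
    rng = random.Random(seed)

    # Remember everything each customer bought so it is never used as a negative
    bought: dict[str, set[str]] = defaultdict(set)
    for customer_id, article_id in purchases:
        bought[customer_id].add(article_id)

    # Index articles by category so negatives resemble the purchased item
    by_category: dict[str, list[str]] = defaultdict(list)
    for article_id, category in category_of.items():
        by_category[category].append(article_id)

    rows = []
    for customer_id, article_id in purchases:
        rows.append((customer_id, article_id, 1))  # positive pair
        pool = [
            a for a in by_category[category_of[article_id]] if a not in bought[customer_id]
        ]
        k = min(n_neg_per_pos, len(pool))  # small categories may yield fewer negatives
        for neg_id in rng.sample(pool, k):
            rows.append((customer_id, neg_id, 0))  # negative pair
    return rows
```

Harder, same-category negatives force the ranker to learn finer distinctions than uniformly random ones, at the cost of an extra lookup per positive.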

Once the ranking dataset is computed, it’s uploaded to Hopsworks as a new Feature Group, with lineage information reflecting its dependencies on the parent Feature Groups (`articles_fg`, `customers_fg`, and `trans_fg`).

```python
logger.info("Uploading 'ranking' Feature Group to Hopsworks.")
rank_fg = feature_store.create_ranking_feature_group(
    fs,
    df=ranking_df,
    parents=[articles_fg, customers_fg, trans_fg],
    online_enabled=False,
)
logger.info("✅ Uploaded 'ranking' Feature Group to Hopsworks!!")
```

This lineage records which parent Feature Groups the ranking dataset depends on, so updates to them (e.g., new transactions or articles) can be traced and propagated to the ranking dataset, keeping it up to date and consistent.

The Hopsworks Feature Store is a centralized repository for managing features.

The following shows how to authenticate and connect to the feature store:

```python
from recsys import hopsworks_integration

# Connect to the Hopsworks Feature Store
project, fs = hopsworks_integration.get_feature_store()
```

🔗 Full code here → GitHub

Step 1: Define Feature Groups

Feature Groups are logical groupings of related features that can be used together in model training and inference.

For example:

1 — Customer Feature Group

Includes all customer-related features, such as demographic, behavioral, and engagement metrics.

- Demographics: Age, gender, membership status.

- Behavioral features: Purchase history, average spending, visit frequency.

- Engagement metrics: Fashion news frequency, club membership status.

2 — Article Feature Group

Includes features related to articles (products), such as descriptive attributes, popularity metrics, and image features.

- Descriptive attributes: Product group, color, department, product type, product code.

- Popularity metrics: Number of purchases, ratings.

- Image features: Visual embeddings derived from product images.

3 — Transaction Feature Group

Includes all transaction-related features, such as transactional details, interaction metrics, and contextual features.

- Transactional attributes: Transaction ID, customer ID, article ID, price.

- Interaction metrics: Recency and frequency of purchases.

- Contextual features: Sales channel, transaction timestamp.

Adding a feature group to Hopsworks:

```python
from recsys.hopsworks_integration.feature_store import create_feature_group

# Create a feature group for article features
create_feature_group(
    feature_store=fs,
    feature_data=article_features_df,
    feature_group_name="articles_features",
    description="Features for articles in the H&M dataset",
)
```

🔗 Full code here → GitHub

Step 2: Data ingestion

To ensure the data is correctly structured and ready for model training and inference, the next step is to load data from the H&M dataset into the respective Feature Groups in Hopsworks.

Here’s how it works:

1 — Data loading

Start by extracting data from the H&M source files, processing it into features, and loading those features into the correct Feature Groups.

2 — Data validation

After loading, check that the data is accurate and matches the expected structure.

- Consistency checks: Verify that the relationships between datasets are correct.

- Data cleaning: Handle any issues in the data, such as missing values, duplicates, or inconsistencies.

Luckily, Hopsworks supports integration with Great Expectations, adding a robust data validation layer during data loading.
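Before reaching for a full validation framework, the consistency and cleaning checks above can be prototyped in a few lines. The sketch below is a hypothetical, framework-free example (it does not use the Great Expectations or Hopsworks APIs): it counts transactions whose customer or article ID has no matching row in the parent table, exact duplicate rows, and rows with missing values. Representing records as plain dictionaries is an assumption.

```python
def validate_transactions(
    transactions: list[dict],
    customer_ids: set[str],
    article_ids: set[str],
) -> dict[str, int]:
    """Count foreign-key violations, exact duplicate rows, and rows with missing values."""
    issues = {"unknown_customer": 0, "unknown_article": 0, "duplicates": 0, "missing_values": 0}
    seen = set()
    for row in transactions:
        if any(value is None for value in row.values()):
            issues["missing_values"] += 1
        if row.get("customer_id") not in customer_ids:  # foreign key into Customers
            issues["unknown_customer"] += 1
        if row.get("article_id") not in article_ids:  # foreign key into Articles
            issues["unknown_article"] += 1
        key = tuple(sorted(row.items()))  # hashable fingerprint of the whole row
        if key in seen:
            issues["duplicates"] += 1
        seen.add(key)
    return issues
```

A report with any non-zero count would block the insert or trigger cleaning, which is the role an expectation suite plays in the Hopsworks integration.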

Step 3: Versioning and metadata management

Versioning and metadata management are essential for keeping your Feature Groups organized and ensuring models can be reproduced.

The key steps are:

- Version control: Track different versions of Feature Groups so you can recreate and validate models using specific data versions. For example, if there are significant changes to the Customer Feature Group, create a new version to reflect them.

- Metadata management: Document the details of each feature, including its definition, how it is transformed, and any dependencies it has on other features.

```python
rank_fg = fs.get_or_create_feature_group(
    name="ranking",
    version=1,
    description="Derived feature group for ranking",
    primary_key=["customer_id", "article_id"],
    parents=[articles_fg, customers_fg, trans_fg],
    online_enabled=online_enabled,
)
rank_fg.insert(df, write_options={"wait_for_job": True})

# Attach a human-readable description to each feature
for desc in constants.ranking_feature_descriptions:
    rank_fg.update_feature_description(desc["name"], desc["description"])
```

Defining Feature Groups, managing data ingestion, and tracking versions and metadata keep your features organized, reusable, and reliable, making it easier to maintain and scale your ML workflows.

View the results in Hopsworks Serverless: Feature Store → Feature Groups

Hopsworks Feature Groups are key to making machine learning workflows more efficient and organized.

Here’s how they help:

1 — Centralized repository

- Single source of truth: Feature Groups in Hopsworks provide a centralized place for all your feature data, ensuring everyone on your team uses the same, up-to-date data. This reduces the risk of inconsistencies and errors when different people use outdated or divergent datasets.

- Easier management: Keeping all features in one place streamlines updating, querying, and maintaining them, leading to higher productivity and smoother workflows.

2 — Feature reusability

- Cross-model consistency: Features stored in Hopsworks can be used across different models and projects, ensuring consistency in their definition and application. This eliminates the need to re-engineer features each time, saving time and effort.

- Faster development: Since you can reuse features, you don’t have to start from scratch. You can quickly leverage existing, well-defined features, speeding up the development and deployment of new models.

3 — Scalability

- Optimized performance: The platform ensures that queries and feature updates are performed quickly, even when dealing with large amounts of data. This is crucial for maintaining model performance in production.

4 — Versioning and lineage

- Version control: Hopsworks provides version control for Feature Groups, so you can keep track of changes made to features over time. This supports reproducibility, as you can go back to previous versions if needed.

- Data lineage: Tracking data lineage allows you to document how features are created and transformed. This adds transparency and helps you understand the relationships between features.

Learn more about feature groups [4] and how to integrate them into ML systems.

Imagine you’re running H&M’s online recommendation system, which delivers personalized product suggestions to millions of customers.

Currently, the system uses a static pipeline: embeddings for users and products are precomputed using a two-tower model and stored in an Approximate Nearest Neighbor (ANN) index.

When users interact with the site, similar products are retrieved, filtered (e.g., excluding seen or out-of-stock items), and ranked by a machine learning model.

While this approach works well offline, it struggles to adapt to real-time changes, such as shifts in user preferences or the launch of new products.

To make the recommendation system dynamic and responsive, you need to shift to a streaming data pipeline.

Step 1: Integrating real-time data

The first step is to introduce real-time data streams into your pipeline. To begin, think about the types of events your system needs to handle:

- User behavior: Real-time interactions such as clicks, purchases, and searches, to keep up with evolving preferences.

- Product updates: Stream data on new arrivals, price changes, and stock updates to ensure recommendations reflect the most up-to-date catalog.

- Embedding updates: Continuously recalculate user and product embeddings to maintain the accuracy and relevance of the recommendation model.

Step 2: Updating the retrieval stage

In a static pipeline, retrieval relies on a precomputed ANN index that matches user and item embeddings based on similarity.

However, as embeddings evolve, keeping the retrieval process synchronized with these changes is crucial to maintaining accuracy and relevance.

Hopsworks supports updating the ANN index. This simplifies embedding updates and keeps the retrieval process aligned with the latest embeddings.

Here’s how to upgrade the retrieval stage:

- Upgrade the ANN index: Switch to a system capable of incremental updates, like FAISS, ScaNN, or Milvus. These libraries support real-time similarity searches and can incorporate new and updated embeddings on the fly.

- Stream embedding updates: Integrate a message broker like Kafka to feed updated embeddings into the system. As a user’s preferences change or new items are added, their corresponding embeddings should be updated in real time.

- Ensure freshness: Build a mechanism to prioritize the latest embeddings during similarity searches. This ensures that recommendations are always based on the most current user preferences and available content.
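To illustrate incremental updates and freshness, here is a toy in-memory index in which an upsert simply overwrites an item’s previous embedding, so searches always see the latest vector. It is a hypothetical, brute-force stand-in written with NumPy, not the FAISS, ScaNN, Milvus, or Hopsworks API; a real system would consume these updates from a stream such as Kafka and use an actual ANN structure.

```python
import numpy as np


class UpsertableIndex:
    """Toy vector index: upserts replace stale embeddings; search uses cosine similarity."""

    def __init__(self) -> None:
        self._vectors: dict[str, np.ndarray] = {}

    def upsert(self, item_id: str, embedding: np.ndarray) -> None:
        # Overwrite any previous embedding so searches only see the freshest vector
        self._vectors[item_id] = embedding / np.linalg.norm(embedding)

    def delete(self, item_id: str) -> None:
        self._vectors.pop(item_id, None)  # e.g., an item removed from the catalog

    def search(self, query: np.ndarray, k: int) -> list[str]:
        if not self._vectors:
            return []
        ids = list(self._vectors)
        matrix = np.stack([self._vectors[i] for i in ids])
        scores = matrix @ (query / np.linalg.norm(query))  # cosine similarities
        order = np.argsort(-scores)[:k]
        return [ids[i] for i in order]
```

Keying vectors by item ID is what guarantees freshness here: there is never more than one embedding per item, so a stale vector cannot outrank its replacement.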

Step 3: Updating the filtering stage

After retrieving a list of candidate items, the next step is to filter out irrelevant or unsuitable options. In a static pipeline, filtering relies on precomputed data, such as whether a customer has already bought an item or whether it is available in their region.

However, in a real-time system, filtering must adapt instantly to new data.

Here’s how to update the filtering stage:

- Track recent customer activity: Use a stream processing framework like Apache Flink or Kafka Streams to maintain a real-time record of customer interactions.

- Dynamic stock availability: Continuously update item availability based on real-time inventory data. If an item goes out of stock, it should be filtered out immediately.

- Personalized filters: Apply personalized rules in real time, such as excluding items that don’t match a customer’s size, color preferences, or browsing history.
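The three filtering rules above can be combined into a single pass over the retrieved candidates. In the hypothetical sketch below, the `recently_bought` set, the `in_stock` mapping, and the `customer_sizes` preference stand in for the state a stream processor such as Flink or Kafka Streams would keep up to date; the `Candidate` record and its `size` field are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    article_id: str
    size: str  # hypothetical attribute used by the personalized filter


def filter_candidates(
    candidates: list[Candidate],
    recently_bought: set[str],
    in_stock: dict[str, bool],
    customer_sizes: set[str],
) -> list[Candidate]:
    """Drop already-bought, out-of-stock, and wrong-size items, preserving retrieval order."""
    return [
        c
        for c in candidates
        if c.article_id not in recently_bought  # recent customer activity
        and in_stock.get(c.article_id, False)  # unknown stock counts as unavailable
        and c.size in customer_sizes  # personalized rule
    ]
```

Because the filter only reads the current state, the recommendations change as soon as the stream processor updates that state, without recomputing any embeddings.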

First, you need to create an account on the Hopsworks Serverless platform. Both creating an account and running our code are free.

Then you have 3 main options to run the feature pipeline:

- In a local Notebook or Google Colab: access instructions

- As a Python script from the CLI: access instructions

- GitHub Actions: access instructions

View the results in Hopsworks Serverless: Feature Store → Feature Groups

We recommend using GitHub Actions if you have a poor internet connection and keep getting timeout errors when loading data to Hopsworks. This happens because we push millions of items to Hopsworks.

In this lesson, we covered the essential components of the feature pipeline, from understanding the H&M dataset to engineering features for both the retrieval and ranking models.

We also introduced Hopsworks Feature Groups, emphasizing their importance in organizing, managing, and reusing features effectively.

Finally, we covered the transition to a real-time streaming pipeline, which is crucial for making recommendation systems adapt to evolving user behavior.

With this foundation, you can manage and optimize features for high-performing machine learning systems that deliver personalized, high-impact user experiences.

In Lesson 3, we’ll dive into the training pipeline, focusing on training, evaluating, and managing the retrieval and ranking models using the Hopsworks model registry.

💻 Explore all the lessons and the code in our freely available GitHub repository.

If you have questions or need clarification, feel free to ask. See you in the next session!

The H&M Real-Time Personalized Recommender course is part of Decoding ML’s open-source series of end-to-end AI courses.

For more free courses on production AI, GenAI, information retrieval, and MLOps systems, consider checking out our available courses.

Also, we provide a free weekly newsletter on AI that works in production.