Before I begin, I invite you to memorize the following string:

firstsecondthirdfourthfifthsixthseventheighthninthtenth

Got it? Let’s start.

Over the past four months, I built a data compressor that can reduce the size of English text by 80%. My project was a replication of some of the experiments performed by Grégoire Delétang et al. in the 2023 paper titled “Language Modeling is Compression”. The paper demonstrates that an accurate large language model (LLM), such as the one behind the well-known ChatGPT, can also be used to compress text data losslessly and very effectively.

I had always thought of computer science as closer to math and engineering than to science. After all, there wasn’t much science in building 2D platformer video games. This project is perhaps, strictly speaking, my very first computer science project. It made me realize that computer science simply uses mathematics and engineering as tools to conduct fundamentally scientific experiments.

To form hypotheses about how my compressor would behave under different models, I applied mathematics such as information theory and statistics. Software engineering was used to build the compressor itself. As a whole, however, this project ultimately ran experiments on the end product of that software engineering. I graphed data, analyzed error, and drew conclusions in a way not unlike school chemistry or statistics labs.

In data compression, a compression algorithm converts a large file into a smaller one. For the system to be considered lossless, the algorithm must be designed so that another algorithm can perfectly reproduce the large file solely from the smaller file.
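The lossless requirement can be checked with a round trip. The sketch below uses Python’s standard `zlib` module (a general-purpose DEFLATE compressor, not the LLM-based compressor from this project) on a made-up sample text. It compresses the text, decompresses it using only the compressed bytes, and confirms that every byte comes back.

```python
import zlib

# Hypothetical sample input; zlib stands in for any lossless compressor here.
original = ("English text tends to repeat itself. " * 50).encode("utf-8")

compressed = zlib.compress(original, level=9)  # the "smaller file"
restored = zlib.decompress(compressed)         # rebuilt from the smaller file alone

# Lossless: the round trip reproduces the original exactly, byte for byte.
assert restored == original
print(len(original), "->", len(compressed), "bytes")
```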

Compression involves identifying information in the data that can be discarded without being lost for good. The first step of data compression is to capture a predictable pattern in the data. Whatever is predictable is discardable, because we can simply restore it during decompression.
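To see that predictability is what makes data discardable, compare two inputs of the same length. One repeats a pattern; the other is random bytes from `os.urandom`, which have no pattern to exploit. Again, `zlib` is only a convenient general-purpose stand-in, and the sample strings are illustrative assumptions.

```python
import os
import zlib

n = 10_000
predictable = (b"first second third " * n)[:n]  # a repeating, predictable pattern
unpredictable = os.urandom(n)                    # random bytes: nothing to predict

for name, data in [("predictable", predictable), ("unpredictable", unpredictable)]:
    size = len(zlib.compress(data, level=9))
    print(f"{name}: {n} -> {size} bytes")
```

The patterned input shrinks dramatically. The random input does not shrink at all, because there is nothing a decompressor could reconstruct on its own.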

This predictable pattern can look like different things, depending on the data being compressed:

- Many programming languages like to end lines with semicolons “;”.

- English text tends to use Latin letters and follow correct English grammar.

- Pixels in an image are likely to be similar in color to their neighbours.

Intelligence is, arguably, compression power.

Using information theory, you can show that the accuracy of a language model and the compression ratio (% file reduction) of my data compressor can both be measured with the same metric: the cross-entropy.

Thus, a smarter language model corresponds to a better data compressor. A more detailed explanation can be found in the project repository on GitHub.
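The link between prediction and compression can be made concrete. If a model assigns probability q(x_i | x_1, ..., x_{i-1}) to each next symbol, encoding the whole text costs the sum of -log2 q(x_i | x_1, ..., x_{i-1}) bits. Divided by the number of symbols, that sum is the model’s cross-entropy in bits per symbol, and an ideal arithmetic coder driven by the model gets within about 2 bits of the total.

The sketch below swaps GPT-2 for a toy adaptive byte-frequency model with add-one smoothing. That substitution is an assumption made for illustration, and the function names are hypothetical rather than taken from the project repository. It computes the ideal code length and the implied percent file reduction.

```python
import math
from collections import Counter

def code_length_bits(data: bytes) -> float:
    """Ideal code length of `data` in bits under a toy adaptive model.

    Toy stand-in for an LLM (assumption): predict each byte from the counts
    of bytes seen so far, with add-one (Laplace) smoothing over 256 values.
    The sum of -log2 q is the model's total cross-entropy on the data.
    """
    counts = Counter()
    seen = 0
    bits = 0.0
    for b in data:
        q = (counts[b] + 1) / (seen + 256)  # model's probability for this byte
        bits += -math.log2(q)               # cost of encoding it
        counts[b] += 1                      # the decoder can make the same update
        seen += 1
    return bits

def reduction_percent(data: bytes, bits: float) -> float:
    """Percent file reduction relative to storing 8 bits per byte."""
    return 100.0 * (1.0 - bits / (8 * len(data)))

text = b"firstsecondthirdfourthfifthsixthseventheighthninthtenth"
uniform_bits = 8.0 * len(text)        # a model that predicts nothing: q = 1/256
adaptive_bits = code_length_bits(text)
print(reduction_percent(text, uniform_bits))   # 0.0
print(reduction_percent(text, adaptive_bits))
```

Even this crude predictor beats the uniform model. A far better predictor such as a large language model lowers the cross-entropy much further, which is where large reductions like the 80% above come from.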

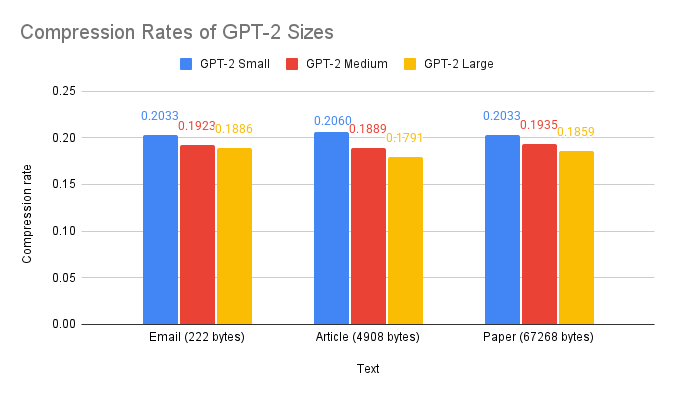

Through experiments, I have also found that large language models that score better on standard test metrics also tend to compress data better. Here, I show that larger, smarter GPT-2 models, when used to build my text compressor, also achieve better compression rates.

Finally, this claim can be explained intuitively. To compress data, a system needs to capture its patterns, and one can argue that acquiring patterns is intelligence. Here’s an example.

At the beginning of this article, I asked you to memorize a string of characters. Most readers should have been able to spot a pattern in the text. We turned the long string into an easily memorized idea, compressing it, if you will, so that it could fit more compactly in our brains. The process required you to understand basic counting, as well as English words such as “first” and “second”. In some sense, that’s intelligence.
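The memorization trick can be written as a tiny decompressor. This is an illustrative sketch: the names `ORDINALS` and `decompress` are hypothetical. The list of ordinal words plays the role of shared knowledge that both sides already have, loosely analogous to a language model’s learned weights. Given that knowledge, the message to remember shrinks to the rule plus a single number.

```python
# Shared knowledge (assumption for illustration): English ordinal words in
# counting order, known to both the "compressor" and the "decompressor".
ORDINALS = ["first", "second", "third", "fourth", "fifth",
            "sixth", "seventh", "eighth", "ninth", "tenth"]

def decompress(n: int) -> str:
    """Expand the message n ("the first n ordinals") into the full string."""
    return "".join(ORDINALS[:n])

original = "firstsecondthirdfourthfifthsixthseventheighthninthtenth"
message = 10  # with the shared knowledge, this is all that must be remembered
assert decompress(message) == original
print(len(original), "characters recovered from the message", message)
```

A reader without the shared knowledge (someone who cannot count in English) would have to memorize all 55 characters, much as a weaker model yields a longer compressed file.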

So if you still remember that piece of text, congratulations: you are probably “intelligent”.