Before diving into my recent posts about dimensionality reduction techniques, I should ideally have started right here, with the concept of the “Curse of Dimensionality.” At the moment, I’m reading the incredible book “Why Machines Learn” by Anil Ananthaswamy, and I really can’t recommend it enough! A detailed review of this insightful book is definitely on its way, but I was so excited by its clear explanation of the Curse of Dimensionality that I couldn’t wait to share it.

All of the insights I’m presenting here are drawn heavily from Ananthaswamy’s excellent explanation, and the credit belongs fully to him for simplifying such a complex topic.

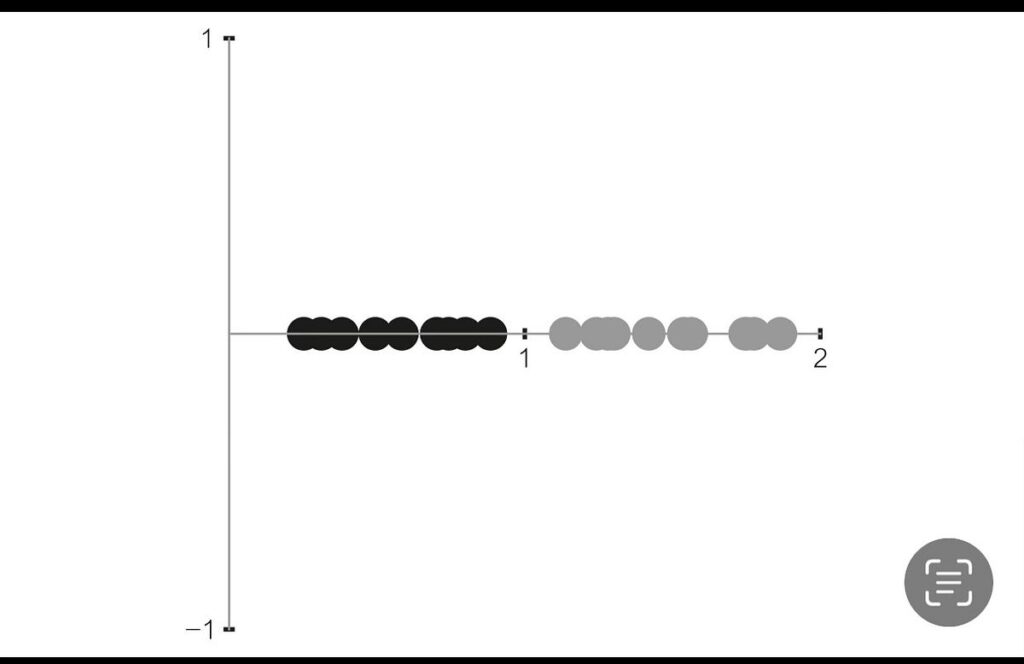

There are several intuitive ways to understand this “curse.” Let’s start with a simple scenario. Imagine a dataset described by a single feature that ranges uniformly between 0 and 2, meaning any value within this range is equally likely. If we take twenty samples from this distribution, we’d see a roughly even spread across the range from 0 to 2, with about half of them falling between 0 and 1.

Now, suppose we have two features, each independently ranging uniformly between 0 and 2. If we again sample twenty points, but now from a 2D space (a 2×2 square), we’d find something different. The region where both features fall between 0 and 1 (the unit square) now covers only a quarter of our total space. Thus, we might find fewer points, perhaps only 4 or 5 out of the original 20, in this region.

Extending this to three features, each uniformly distributed between 0 and 2, we now have a cubic volume. The unit cube (where each feature is between 0 and 1) occupies only an eighth of the total volume. If we again sample twenty points, we might end up with even fewer, perhaps just two, inside our unit cube.
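To check this intuition numerically, here is a minimal sketch of my own (it is not from the book). It assumes NumPy is available, and the helper name `count_in_unit_cube` is made up for illustration. It draws twenty points uniformly from the range 0 to 2 in each dimension and counts how many land in the unit cube, alongside the expected fraction of 0.5 raised to the number of dimensions.

```python
import numpy as np

def count_in_unit_cube(n_samples, n_dims, rng):
    """Sample points uniformly from [0, 2]^n_dims and count those in [0, 1)^n_dims.

    Returns the observed count and the expected fraction (0.5 ** n_dims).
    """
    points = rng.uniform(0.0, 2.0, size=(n_samples, n_dims))
    # A point is inside the unit cube only if every coordinate is below 1.
    inside = np.all(points < 1.0, axis=1)
    return int(inside.sum()), 0.5 ** n_dims

rng = np.random.default_rng(42)  # fixed seed so runs are repeatable
for n_dims in (1, 2, 3, 10):
    count, expected_fraction = count_in_unit_cube(20, n_dims, rng)
    print(f"dims={n_dims:2d}  in unit cube: {count:2d}/20  "
          f"expected fraction: {expected_fraction:.4f}")
```

The exact counts vary from sample to sample, but the expected fraction halves with every added feature, so by ten dimensions twenty points will usually leave the unit cube empty.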

This illustrates a fundamental challenge in machine learning: as the number of dimensions (features) increases, the volume of space we must consider grows exponentially, causing data points to become increasingly sparse. With thousands or tens of thousands of dimensions, finding data points close to each other becomes nearly impossible unless the number of samples is extremely large. As Julie Delon from Université Paris–Descartes humorously puts it, “In high-dimensional spaces, nobody can hear you scream.”
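One way to see this sparsity is to keep the number of samples fixed and watch how far each point is from its nearest neighbor as dimensions are added. The sketch below is my own illustration under simple assumptions (uniform data on the range 0 to 2, Euclidean distance, NumPy available); the function name `mean_nearest_neighbor_distance` is hypothetical.

```python
import numpy as np

def mean_nearest_neighbor_distance(n_samples, n_dims, rng):
    """Average Euclidean distance from each point to its closest other point."""
    points = rng.uniform(0.0, 2.0, size=(n_samples, n_dims))
    # Pairwise squared distances via ||a - b||^2 = ||a||^2 + ||b||^2 - 2 a.b
    sq_norms = np.sum(points ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * (points @ points.T)
    sq_dists = np.maximum(sq_dists, 0.0)   # clip tiny negatives from rounding
    np.fill_diagonal(sq_dists, np.inf)     # ignore each point's distance to itself
    return float(np.sqrt(sq_dists.min(axis=1)).mean())

rng = np.random.default_rng(0)
for n_dims in (1, 2, 10, 100, 1000):
    distance = mean_nearest_neighbor_distance(200, n_dims, rng)
    print(f"dims={n_dims:4d}  mean nearest-neighbor distance: {distance:.3f}")
```

With the sample size held at 200, the nearest neighbor drifts steadily farther away as the dimension grows, which is the sparsity described above.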

Increasing the number of data points might alleviate the problem, but this too becomes impractical very quickly. This challenge is precisely why dimensionality reduction techniques become invaluable. Techniques like Principal Component Analysis (PCA), t-distributed Stochastic Neighbor Embedding (t-SNE), and autoencoders help us simplify high-dimensional data by identifying and retaining its most informative structure. By reducing dimensions, these techniques not only mitigate the issues caused by data sparsity but also improve computational efficiency, enabling machine learning algorithms to perform better. Essentially, dimensionality reduction allows us to better navigate the complexities of high-dimensional spaces, helping algorithms hear those important signals hidden amidst the noise.
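As a rough illustration of the idea, here is a minimal PCA sketch built on NumPy’s singular value decomposition. It is my own toy example, not code from the book: the helper `pca_reduce` is a made-up name, and the data is synthetic, constructed so that 50 observed features are driven by only 2 hidden factors plus a little noise.

```python
import numpy as np

def pca_reduce(X, n_components):
    """Project X onto its top principal components.

    Returns the reduced data and the fraction of total variance
    captured by each kept component.
    """
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by singular value.
    _, singular_values, Vt = np.linalg.svd(X_centered, full_matrices=False)
    variance_ratio = singular_values ** 2 / np.sum(singular_values ** 2)
    reduced = X_centered @ Vt[:n_components].T
    return reduced, variance_ratio[:n_components]

# Synthetic data (an assumption for this demo): 2 hidden factors, 50 features.
rng = np.random.default_rng(0)
hidden = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 50))
X = hidden @ mixing + 0.01 * rng.normal(size=(200, 50))

Z, kept_variance = pca_reduce(X, n_components=2)
print("original shape:", X.shape, "reduced shape:", Z.shape)
print("variance kept by 2 components:", round(float(kept_variance.sum()), 4))
```

Because this data really does live near a 2D plane inside the 50-dimensional space, two components retain almost all of the variance. Real datasets are rarely this clean, so how many components to keep is a judgment call.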

Reference: All the images included here are borrowed from Anil Ananthaswamy’s book, “Why Machines Learn.”