All models were trained using a batch size between 512 and 16,384, depending on memory constraints. We employed a 70/30 train/test random split and used Adam optimization with a learning rate between 3×10⁻⁴ and 1×10⁻³. Further hyperparameter details are given in the following sections.
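
As an illustration of the data handling described above, the following minimal sketch produces a 70/30 random split and iterates over mini-batches. The function names, the seed, and the sample count of 1,000 are hypothetical and chosen only for the example; the batch size of 512 is the lower end of the range stated above.

```python
import random


def train_test_split_indices(n_samples, train_fraction=0.7, seed=0):
    """Shuffle sample indices and split them into train and test subsets."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    cut = int(round(n_samples * train_fraction))
    return indices[:cut], indices[cut:]


def iter_batches(indices, batch_size):
    """Yield consecutive chunks of at most batch_size indices."""
    for start in range(0, len(indices), batch_size):
        yield indices[start:start + batch_size]


# Hypothetical dataset of 1,000 samples, split 70/30.
train_idx, test_idx = train_test_split_indices(1000, train_fraction=0.7)
batches = list(iter_batches(train_idx, batch_size=512))
```

Fixing the seed keeps the split identical across runs, which matters when comparing models that differ in a single hyperparameter.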

Fully-Connected Neural Network

The fully-connected feed-forward network, based on the architecture described in Section 3.2, was evaluated at several dropout rates (Bebis and Georgiopoulos, 1994). We first used a dropout of 0, which led to some overfitting, but as we increased dropout to 0.1 and 0.2, we noticed that train and test loss became comparable, demonstrating effective mitigation of overfitting, as seen in panel (b) of Figure 2. The model achieving the highest classification accuracy was trained with 0.1 dropout.
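
For reference, the effect of the dropout rate can be sketched with the standard "inverted dropout" formulation, in which surviving activations are rescaled by 1/(1 − rate) during training and the layer is an identity at evaluation time. This is a generic illustration written without any framework and is not necessarily the exact layer used in the experiments; the function name and the seed are hypothetical.

```python
import random


def dropout(activations, rate, training=True, rng=None):
    """Inverted dropout: zero each activation with probability `rate`
    and rescale the survivors so the expected value is unchanged."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)  # identity at evaluation time
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


# With rate 0.1, roughly 10% of activations are zeroed during training.
rng = random.Random(0)
dropped = dropout([1.0] * 10000, rate=0.1, rng=rng)
zero_fraction = sum(1 for a in dropped if a == 0.0) / len(dropped)
mean_activation = sum(dropped) / len(dropped)
```

Because the survivors are rescaled, the mean activation stays close to its original value, so no additional scaling is needed at test time.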

Transformer Model with Multi-head Self-Attention

We conducted two experiments on the attention-based model: varying the number of attention heads and scaling the hidden dimensions. Using Equation 4, we trained the Transformer classifier with different head counts (e.g., 1, 2, 4, 8) while keeping other hyperparameters fixed. Each head captures a unique projection of the embedding, revealing distinct feature relationships; however, too many heads led to overfitting and longer training times. We found that using 4 heads provided an optimal balance.

In addition, we experimented with the hidden dimension size. While larger dimensions can offer richer representations, they also risk over-parameterization, whereas smaller dimensions may fail to capture sufficient data variance. Our results indicate that a hidden dimension of 256, the same as the autoencoder's latent embedding dimension, effectively prevents overfitting while preserving essential information.
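
To make the interaction between head count and hidden dimension concrete, the sketch below implements generic scaled dot-product multi-head self-attention in plain Python. It assumes the common convention that heads split the hidden dimension evenly, so a hidden dimension of 256 with 4 heads would give each head a 64-dimensional slice. The helper names are hypothetical, the output projection is omitted for brevity, the demo uses a small dimension of 8 to stay fast, and the formulation may differ in detail from Equation 4.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def multi_head_self_attention(x, w_q, w_k, w_v, num_heads):
    """x has shape (tokens, d_model); each w_* has shape (d_model, d_model)."""
    d_model = len(x[0])
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    out = [[] for _ in x]
    for h in range(num_heads):
        lo, hi = h * d_head, (h + 1) * d_head
        qh = [row[lo:hi] for row in q]
        kh = [row[lo:hi] for row in k]
        vh = [row[lo:hi] for row in v]
        # Scaled dot-product scores between every pair of tokens.
        scores = [[sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_head)
                   for kj in kh] for qi in qh]
        weights = [softmax(r) for r in scores]
        # Concatenate this head's output onto each token's result.
        for i, row in enumerate(matmul(weights, vh)):
            out[i].extend(row)
    return out


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
d_model, num_heads, tokens = 8, 4, 3  # small demo sizes, not the paper's
x = random_matrix(tokens, d_model, rng)
w_q, w_k, w_v = (random_matrix(d_model, d_model, rng) for _ in range(3))
y = multi_head_self_attention(x, w_q, w_k, w_v, num_heads)
```

Increasing `num_heads` at a fixed hidden dimension shrinks each head's slice, which is one way to see why very high head counts give diminishing returns at a fixed model width.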

Graph Convolutional Network

To construct graph representations of the embedded data while staying within memory constraints, we set the cosine similarity threshold to 0.4 and the maximum number of edges to 1,000. We found that using a hidden dimension size of 128 and a dropout rate of 0.3 yielded the best performance.
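
One way such a graph could be built is sketched below. The text does not specify whether the edge limit applies per graph or per node, nor how edges are chosen when the limit is exceeded. The sketch therefore assumes an undirected graph, a global cap, an inclusive threshold, and retention of the highest-similarity pairs. The function names and the toy embeddings are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity of two vectors; 0.0 if either has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def build_edges(embeddings, threshold=0.4, max_edges=1000):
    """Return undirected edges (i, j), i < j, whose cosine similarity is
    at least `threshold`, keeping only the `max_edges` most similar pairs."""
    candidates = []
    for i in range(len(embeddings)):
        for j in range(i + 1, len(embeddings)):
            s = cosine_similarity(embeddings[i], embeddings[j])
            if s >= threshold:
                candidates.append((s, i, j))
    candidates.sort(reverse=True)  # most similar pairs first
    return [(i, j) for _, i, j in candidates[:max_edges]]


# Toy 2-D embeddings: only nodes 0 and 1 point in a similar direction.
toy = [[1.0, 0.0], [1.0, 0.1], [0.0, 1.0], [-1.0, 0.0]]
edges = build_edges(toy, threshold=0.4, max_edges=1000)
```

The all-pairs loop is quadratic in the number of nodes, which is itself a memory and time constraint for larger datasets; a batched or approximate nearest-neighbour search would be the usual substitute.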

Table 1: Classification Results on Hematopoietic Multipotent Progenitor Cells

| Model Type | Train Accuracy | Test Accuracy | Monocytes | Lymphocytes |
| --- | --- | --- | --- | --- |
| FFN (dropout 0.0) | 100.00% | 94.20% | 0.6945 | 0.6122 |
| FFN (dropout 0.1) | 95.03% | 95.24% | 0.6912 | 0.6409 |
| Transformer Self-Attention | 97.19% | 94.59% | 0.6727 | 0.5126 |

Improving Downstream Classification Prognosis

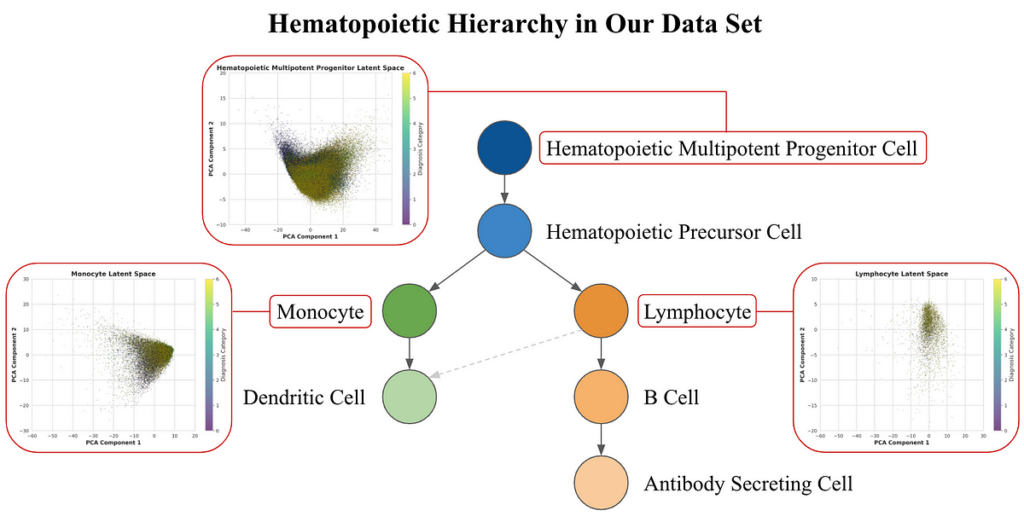

Initially, we trained the above architectures on the embeddings for hematopoietic multipotent progenitor cells only. This yielded the learning curves in Figure 2. Concurrently, we evaluated the zero-shot performance of the model on downstream monocytes and lymphocytes. The classification of the progenitor cells was robust on its own, as seen in Table 1, but we also wanted to assess its applicability to downstream cells in a zero-shot setting. The baseline performance yielded binary classification scores of 0.6416 for lymphocytes and 0.7252 for monocytes, and exceeded 0.7 F1-score for lymphocytes. Although these results are not clinical-grade by any means, they demonstrate that progenitor cell genetic profiles can indeed provide a foundation for predicting disease in differentiated cells, and that there is inherent shared information along the hematopoietic lineage that our autoencoder and downstream classifiers learned.
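
For readers unfamiliar with the metrics, the sketch below shows how accuracy and F1-score can be computed for a binary classifier from predicted and true labels. The function name and the toy labels are hypothetical and are not drawn from the experimental data.

```python
def binary_metrics(y_true, y_pred):
    """Compute accuracy and F1-score for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "f1": f1}


# Toy example: five cells, three of them truly positive.
metrics = binary_metrics(y_true=[1, 1, 0, 0, 1], y_pred=[1, 0, 0, 1, 1])
```

Accuracy and F1 can diverge when classes are imbalanced, so the two numbers are not directly interchangeable when comparing results.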

Table 2: Classification Results on Downstream Cell Types

| Model Type | Monocytes Train Accuracy | Monocytes Test Accuracy | Lymphocytes Train Accuracy | Lymphocytes Test Accuracy |
| --- | --- | --- | --- | --- |
| FFN (dropout 0.1) | 89.84% | 83.01% | 74.09% | 63.41% |
| Self-Attention | 90.31% | 83.05% | 83.59% | 64.35% |
| GCN | 81.22% | 79.53% | 94.11% | 65.33% |

Consequently, to improve classification results on monocytes and lymphocytes, we applied the same architectures (and largely the same hyperparameters) to the embeddings for these cell types, rather than training solely on the progenitors. Notably, the self-attention transformer improved monocyte prediction to 83.05%, while lymphocyte classification reached only 64.35%, surprisingly below the baseline FFN zero-shot results (Table 2). Moreover, the reduced dataset, stemming from latent space dimensionality reduction and computational constraints with the GCN, highlights the challenges of transferring progenitor insights to lymphocyte disease prediction.

Our results show that while progenitor-derived predictions enhance monocyte disease classification, extending these insights to lymphocytes remains challenging. This highlights the need for targeted methods to capture the complex genetic patterns in lymphocytes and points to promising future research directions for improving cross-cell-type generalization. Nevertheless, our zero-shot approach was able to match, and even surpass, the classification accuracy of the models trained directly on lymphocyte latent embeddings.