In this article, I’ll introduce you to hierarchical Bayesian (HB) modelling, a flexible approach to automatically combine the results of multiple sub-models. This method enables estimation of individual-level effects by optimally combining information across different groupings of data through Bayesian updating. This is particularly valuable when individual units have limited observations but share common characteristics/behaviors with other units.

The following sections will introduce the concept, implementation, and alternative use cases for this method.

The Problem with Traditional Approaches

As an application, imagine that we’re a large grocery store trying to maximize product-level revenue by setting prices. We would need to estimate the demand curve (elasticity) for each product, then optimize some profit-maximization function. As the first step in this workstream, we would need to estimate the price elasticity of demand (how responsive demand is to a 1% change in price) given some longitudinal data with $i \in N$ products over $t \in T$ periods. Remember that the price elasticity of demand is defined as:

$$\beta=\frac{\partial \log{\textrm{Units}}_{it}}{\partial \log \textrm{Price}_{it}}$$

Assuming no confounders, we can use a log-linear fixed-effect regression model to estimate our parameter of interest:

$$\log(\textrm{Units}_{it})= \beta \log(\textrm{Price})_{it} +\gamma_{c(i),t}+ \delta_i+ \epsilon_{it}$$

$\gamma_{c(i),t}$ is a set of category-by-time dummy variables that capture the average demand in each category-time period, and $\delta_i$ is a set of product dummies that capture the time-invariant demand shifter for each product. This “fixed-effect” formulation is standard and common in many regression-based models to control for unobserved confounders. This (pooled) regression model allows us to recover the average elasticity $\beta$ across all $N$ units. This would mean that the store could target an average price level across all products in the store to maximize revenue:

$$\underset{\textrm{Price}_t}{\max} \;\;\; \textrm{Price}_{t}\cdot\mathbb{E}(\textrm{Quantity}_{t} | \textrm{Price}_{t}, \beta)$$

If these units have a natural grouping (product categories), we might be able to identify the average elasticity of each category by running separate regressions for each category using only units from that category (or by interacting the price elasticity with the product category). This would mean that the store could target average prices in each category to maximize category-specific revenue, such that:

$$\underset{\textrm{Price}_{c(i),t}}{\max} \;\;\; \textrm{Price}_{c(i),t}\cdot\mathbb{E}(\textrm{Quantity}_{c(i),t} | \textrm{Price}_{c(i),t}, \beta_{c(i)})$$

With sufficient data, we could even run separate regressions for each individual product to obtain more granular elasticities.

However, real-world data often presents challenges: some products have minimal price variation, short sales histories, or class imbalance across categories. Under these restrictions, running separate regressions to identify product elasticity would likely lead to large standard errors and weak identification of $\beta$. HB models address these issues by allowing us to obtain granular estimates of the coefficient of interest by sharing statistical power across different groupings while preserving heterogeneity. With the HB formulation, it’s possible to run one single regression (similar to the pooled case) while still recovering elasticities at the product level, allowing for granular optimizations.
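To make the trade-off concrete, here is a minimal sketch in plain Python (standard library only). It is not the model used later in this article: it assumes a toy setting with a single shared elasticity, a shared intercept, no fixed effects, and only 8 observations per product. All names and numbers (`TRUE_BETA`, `N_PRODUCTS`, `T`, the noise level) are assumptions made for illustration. It compares the pooled log-log slope with slopes from separate per-product regressions.

```python
import math
import random


def ols_slope(x, y):
    """Closed-form OLS slope of y on x (regression with an intercept)."""
    x_bar = sum(x) / len(x)
    y_bar = sum(y) / len(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    return sxy / sxx


rng = random.Random(0)
TRUE_BETA = -2.0          # assumed common elasticity for this toy example
N_PRODUCTS, T = 200, 8    # many products, short sales histories

per_product = []
all_x, all_y = [], []
for _ in range(N_PRODUCTS):
    log_price = [math.log(rng.uniform(1.0, 10.0)) for _ in range(T)]
    log_units = [5.0 + TRUE_BETA * lp + rng.gauss(0.0, 0.5) for lp in log_price]
    per_product.append(ols_slope(log_price, log_units))  # separate regression
    all_x += log_price
    all_y += log_units

pooled = ols_slope(all_x, all_y)  # one regression on all observations
spread = (sum((b - TRUE_BETA) ** 2 for b in per_product) / N_PRODUCTS) ** 0.5
print(f"pooled slope: {pooled:.3f}")
print(f"RMSE of per-product slopes around the truth: {spread:.3f}")
```

The pooled slope is precise but says nothing about individual products, while the per-product slopes are individually noisy. HB modelling aims to sit between these two extremes.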

Understanding Hierarchical Bayesian Models

At its core, HB is about recognizing the natural structure in our data. Rather than treating all observations as completely independent (many separate regressions) or forcing them to follow identical patterns (one pooled regression), we acknowledge that observations can cluster into groups, with products within each group sharing similar patterns. The “hierarchical” aspect refers to how we organize our parameters into different levels. In its most basic form, we could have:

- A global parameter that applies to all data.

- Group-level parameters that apply to observations inside that group.

- Individual-level parameters that apply to each specific individual.

This methodology is flexible enough to add or remove hierarchies as needed, depending on the desired level of pooling. For example, if we think there are no similarities across categories, we could remove the global parameter. If we think that these products have no natural groupings, we could remove the group-level parameters. If we only care about the group-level effect, we can remove the individual-level parameter and have the group-level coefficients as our most granular parameter. If there are subgroups nested within the groups, we can add another hierarchical layer. The possibilities are endless!

The “Bayesian” aspect refers to how we update our beliefs about these parameters based on observed data. We first start with a proposed prior distribution that represents our initial belief about these parameters, then update it iteratively to recover a posterior distribution that incorporates the information from the data. In practice, this means that we use the global-level estimate to inform our group-level estimates, and the group-level parameters to inform the unit-level parameters. Units with a larger number of observations are allowed to deviate more from the group-level means, while units with a limited number of observations are pulled closer to the means.
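The pull toward the group mean can be illustrated with the textbook Normal-Normal update, where the posterior mean is a precision-weighted average of the group mean and the unit’s own estimate. This is a simplified sketch with a known noise level, not the Poisson model fitted later. The function name `shrink` and all numbers are hypothetical.

```python
def shrink(unit_estimate, n_obs, noise_sd, group_mean, group_sd):
    """Posterior mean and sd of a unit-level parameter.

    Assumes a Normal(group_mean, group_sd) prior and n_obs Normal
    observations with known noise_sd whose average is unit_estimate.
    """
    prior_precision = 1.0 / group_sd ** 2
    data_precision = n_obs / noise_sd ** 2
    post_precision = prior_precision + data_precision
    post_mean = (prior_precision * group_mean
                 + data_precision * unit_estimate) / post_precision
    return post_mean, post_precision ** -0.5


group_mean, group_sd = -2.0, 1.0      # hypothetical category-level elasticity
unit_estimate, noise_sd = -3.0, 2.0   # hypothetical product-level estimate

sparse_mean, sparse_sd = shrink(unit_estimate, 1, noise_sd, group_mean, group_sd)
rich_mean, rich_sd = shrink(unit_estimate, 100, noise_sd, group_mean, group_sd)
print(f"1 observation:    mean={sparse_mean:.3f}, sd={sparse_sd:.3f}")
print(f"100 observations: mean={rich_mean:.3f}, sd={rich_sd:.3f}")
```

With a single observation the estimate stays close to the group mean of -2. With 100 observations it moves almost all the way to the unit’s own estimate of -3, and its posterior standard deviation shrinks.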

Let’s formalize this with our price elasticity example, where we (ideally) want to recover the unit-level price elasticity. We estimate:

$$\log(\textrm{Units}_{it})= \beta_i \log(\textrm{Price})_{it} +\gamma_{c(i),t} + \delta_i + \epsilon_{it}$$

Where:

- $\beta_i \sim \textrm{Normal}(\beta_{c\left(i\right)},\sigma_i)$

- $\beta_{c(i)}\sim \textrm{Normal}(\beta_g,\sigma_{c(i)})$

- $\beta_g\sim \textrm{Normal}(\mu,\sigma)$

The only difference from the first equation is that we replace the global $\beta$ term with product-level betas $\beta_i$. We specify that the unit-level elasticity $\beta_i$ is drawn from a normal distribution centered around the category-level elasticity average $\beta_{c(i)}$, which is in turn drawn from a shared global elasticity $\beta_g$ for all groups. For the spread of the distribution $\sigma$, we can assume a hierarchical structure for that too, but in this example, we just set basic priors for them to maintain simplicity. For this application, we assume a prior belief of: $\{ \mu= -2, \sigma= 1, \sigma_{c(i)}=1, \sigma_i=1\}$. This formulation of the prior assumes that the global elasticity is elastic, with roughly 95% of its prior mass falling between -4 and 0, and a standard deviation of 1 at each hierarchical level. To test whether these priors are correctly specified, we could do prior predictive checks (not covered in this article) to see whether our prior beliefs can recover the data that we observe.
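As a quick sanity check on what these priors imply at the product level, the sketch below draws from the three-level prior in plain Python. It is only an illustration of the prior stated above, not a full prior predictive check, and the helper name `draw_product_elasticity` is hypothetical. Because three independent Normal layers with standard deviation 1 are stacked, the implied product-level prior has a standard deviation of $\sqrt{3} \approx 1.73$, wider than any single layer.

```python
import math
import random

rng = random.Random(0)
MU, SIGMA_G, SIGMA_C, SIGMA_I = -2.0, 1.0, 1.0, 1.0  # prior values stated above


def draw_product_elasticity():
    """One draw from the implied product-level prior (global -> category -> product)."""
    beta_g = rng.gauss(MU, SIGMA_G)       # global elasticity
    beta_c = rng.gauss(beta_g, SIGMA_C)   # category elasticity
    return rng.gauss(beta_c, SIGMA_I)     # product elasticity


draws = [draw_product_elasticity() for _ in range(20_000)]
mean = sum(draws) / len(draws)
sd = math.sqrt(sum((d - mean) ** 2 for d in draws) / (len(draws) - 1))
share_positive = sum(d > 0 for d in draws) / len(draws)
print(f"implied product-level prior: mean={mean:.2f}, sd={sd:.2f}")
print(f"share of prior draws with a positive elasticity: {share_positive:.1%}")
```

A non-trivial share of prior draws lands above zero, which is one reason a prior predictive check is worth running before trusting the defaults.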

This hierarchical structure allows information to flow between products in the same category and across categories. If a particular product has limited price variation data, its elasticity will be pulled toward the category elasticity $\beta_{c(i)}$. Similarly, categories with fewer products will be influenced more by the global elasticity, which derives its mean from all category elasticities. The beauty of this approach is that the degree of “pooling” happens automatically based on the data. Products with a lot of price variation will maintain estimates closer to their individual data patterns, while those with sparse data will borrow more strength from their group.

Implementation

In this section, we implement the above model using the NumPyro package in Python, a lightweight probabilistic programming language powered by JAX for autograd and JIT compilation to GPU/TPU/CPU. We start off by generating our synthetic data, defining the model, and fitting the model to the data. We close out with some visualizations of the results.

Data Generating Process

We simulate sales data where demand follows a log-linear relationship with price and the product-level elasticity is generated from a Gaussian distribution $\beta_i \sim \textrm{Normal}(-2, 0.7)$. We add in a random price change every time period with a $50\%$ probability, category-specific time trends, and random noise. These components enter multiplicatively to generate our log expected demand. From the log expected demand, we exponentiate to get the actual demand, and draw realized units sold from a Poisson distribution. We then filter to keep only products with more than 100 units sold (this helps the accuracy of the estimates but is not a necessary step), and are left with $N=11,798$ products over $T = 156$ periods (weekly data for 3 years). In this dataset, the true global elasticity is $\beta_g = -1.6$, with category-level elasticities ranging from $\beta_{c(i)} \in [-1.68, -1.48]$.

Keep in mind that this DGP ignores a lot of real-world intricacies. We do not model any factors that could jointly affect both prices and demand (such as promotions), and we do not model any confounders. This example is simply meant to show that we can recover the product-specific elasticity under a well-specified model, and does not aim to cover how to correctly identify that price is exogenous. However, I suggest that readers refer to Causal Inference for the Brave and True for an introduction to causal inference.

import numpy as np
import pandas as pd

def generate_price_elasticity_data(N: int = 1000,
                                   C: int = 10,
                                   T: int = 50,
                                   price_change_prob: float = 0.2,
                                   seed = 42) -> pd.DataFrame:
    """
    Generate synthetic data for price elasticity of demand analysis.
    Data is generated by
    """
    if seed is not None:
        np.random.seed(seed)

    # Category demand and trends
    category_base_demand = np.random.uniform(1000, 10000, C)
    category_time_trends = np.random.uniform(0, 0.01, C)
    category_volatility = np.random.uniform(0.01, 0.05, C)  # Random volatility for each category
    category_demand_paths = np.zeros((C, T))
    category_demand_paths[:, 0] = 1.0
    shocks = np.random.normal(0, 1, (C, T-1)) * category_volatility[:, np.newaxis]
    trends = category_time_trends[:, np.newaxis] * np.ones((C, T-1))
    cumulative_effects = np.cumsum(trends + shocks, axis=1)
    category_demand_paths[:, 1:] = category_demand_paths[:, 0:1] + cumulative_effects

    # Product effects
    product_categories = np.random.randint(0, C, N)
    product_a = np.random.normal(-2, .7, size=N)
    product_a = np.clip(product_a, -5, -.1)

    # Initial prices for each product
    initial_prices = np.random.uniform(100, 1000, N)
    prices = np.zeros((N, T))
    prices[:, 0] = initial_prices

    # Generate random values and whether prices changed
    random_values = np.random.rand(N, T-1)
    change_mask = random_values < price_change_prob
    # [The remainder of this function is truncated in the source.]

def filter_dataframe(df, min_units = 100):
    # Keep only products whose total units sold exceed min_units
    temp = df[['product','units_sold']].groupby('product').sum().reset_index()
    unit_filter = temp[temp.units_sold>min_units]['product'].unique()
    filtered_df = df[df['product'].isin(unit_filter)].copy()

    # Summary
    original_product_count = df['product'].nunique()
    remaining_product_count = filtered_df['product'].nunique()
    filtered_out = original_product_count - remaining_product_count
    print(f"Filtering summary:")
    print(f"- Original number of products: {original_product_count}")
    print(f"- Products with > {min_units} units: {remaining_product_count}")
    print(f"- Products filtered out: {filtered_out} ({filtered_out/original_product_count:.1%})")

    # Global and category elasticity
    global_elasticity = filtered_df['product_elasticity'].mean()
    filtered_df['global_elasticity'] = global_elasticity

    # Category elasticity
    category_elasticities = filtered_df.groupby('category')['product_elasticity'].mean().reset_index()
    category_elasticities.columns = ['category', 'category_elasticity']
    filtered_df = filtered_df.merge(category_elasticities, on='category', how='left')

    # Summary
    print(f"\nElasticity Info:")
    print(f"- Global elasticity: {global_elasticity:.3f}")
    print(f"- Category elasticities range: {category_elasticities['category_elasticity'].min():.3f} to {category_elasticities['category_elasticity'].max():.3f}")
    return filtered_df

df = generate_price_elasticity_data(N = 20000, T = 156, price_change_prob=.5, seed=42)
df = filter_dataframe(df)
df.loc[:,'cat_by_time'] = df['category'].astype(str) + '-' + df['time_period'].astype(str)
df.head()

Filtering summary:

- Original number of products: 20000

- Products with > 100 units: 11798

- Products filtered out: 8202 (41.0%)

Elasticity Info:

- Global elasticity: -1.598

- Category elasticities range: -1.681 to -1.482

| product | category | time_period | price | units_sold | product_elasticity | category_elasticity | global_elasticity | cat_by_time |

|---|---|---|---|---|---|---|---|---|

| 0 | 8 | 0 | 125.95 | 550 | -1.185907 | -1.63475 | -1.597683 | 8-0 |

| 0 | 8 | 1 | 125.95 | 504 | -1.185907 | -1.63475 | -1.597683 | 8-1 |

| 0 | 8 | 2 | 149.59 | 388 | -1.185907 | -1.63475 | -1.597683 | 8-2 |

| 0 | 8 | 3 | 149.59 | 349 | -1.185907 | -1.63475 | -1.597683 | 8-3 |

| 0 | 8 | 4 | 176.56 | 287 | -1.185907 | -1.63475 | -1.597683 | 8-4 |

Model

We begin by creating indices for products, categories, and category-time combinations using pd.factorize(). This allows us to select the correct parameter for each observation. We then convert the price (logged) and units series into JAX arrays, then create plates that correspond to each of our parameter groups. These plates store the parameter values for each level of the hierarchy, along with the parameters representing the fixed effects.

The model uses NumPyro’s plate to define the parameter groups:

- global_a: 1 global price elasticity parameter with a $\textrm{Normal}(-2, 1)$ prior.

- category_a: $C=10$ category-level elasticities with priors centered on the global parameter and standard deviation of 1.

- product_a: $N=11,798$ product-specific elasticities with priors centered on their respective category parameters and standard deviation of 1.

- product_effect: $N=11,798$ product-specific baseline demand effects with a standard deviation of 3.

- time_cat_effects: $(T=156)\cdot(C=10)$ time-varying effects specific to each category-time combination with a standard deviation of 3.

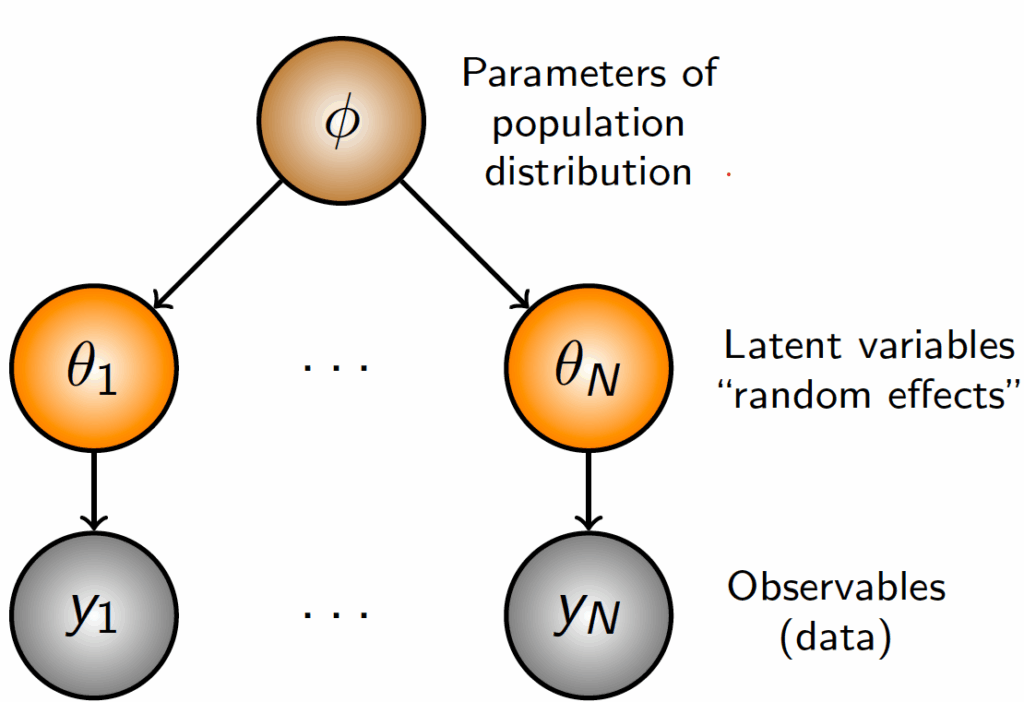

We then reparameterize the parameters using the LocScaleReparam() argument to improve sampling efficiency and avoid funneling. After creating the parameters, we calculate log expected demand, then convert it back to a rate parameter with clipping for numerical stability. Finally, we call on the data plate to sample from a Poisson distribution with the calculated rate parameter. The optimization algorithm will then find the values of the parameters that best fit the data using stochastic gradient descent. Below is a graphical representation of the model to show the relationship between the parameters.
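The idea behind a location-scale reparameterization can be sketched in plain Python. Instead of sampling a parameter directly from Normal(loc, scale), we sample a standard Normal variable and then shift and scale it deterministically. Both routes give the same distribution, but the second removes the dependence between the sampled variable and its parent parameters, which tends to help gradient-based inference. The sketch shows only the fully non-centered special case with hypothetical values for `loc` and `scale`. With its default arguments, NumPyro’s LocScaleReparam learns a degree of centering between the two forms, which this sketch does not reproduce.

```python
import random
import statistics

rng = random.Random(1)
loc, scale = -2.0, 0.7   # hypothetical category mean and product-level spread

# Centered form: sample the parameter directly
centered = [rng.gauss(loc, scale) for _ in range(10_000)]

# Non-centered form: sample a standard Normal, then shift and scale it
z = [rng.gauss(0.0, 1.0) for _ in range(10_000)]
non_centered = [loc + scale * zi for zi in z]

for name, draws in [("centered", centered), ("non-centered", non_centered)]:
    print(f"{name}: mean={statistics.mean(draws):.3f}, sd={statistics.stdev(draws):.3f}")
```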

import jax
import jax.numpy as jnp
import numpyro
import numpyro.distributions as dist
from numpyro.infer.reparam import LocScaleReparam

def model(df: pd.DataFrame, outcome=None):
    # Define indexes
    product_idx, unique_product = pd.factorize(df['product'])
    cat_idx, unique_category = pd.factorize(df['category'])
    time_cat_idx, unique_time_cat = pd.factorize(df['cat_by_time'])

    # Convert the price and units series to jax arrays
    log_price = jnp.log(df.price.values)
    outcome = jnp.array(outcome) if outcome is not None else None

    # Generate mapping from each product index to its category index
    product_to_category = jnp.array(pd.DataFrame({'product': product_idx, 'category': cat_idx}).drop_duplicates().category.values, dtype=np.int16)

    # Create the plates to store parameters
    category_plate = numpyro.plate("category", unique_category.shape[0])
    time_cat_plate = numpyro.plate("time_cat", unique_time_cat.shape[0])
    product_plate = numpyro.plate("product", unique_product.shape[0])
    # Size the data plate from the inputs so the model also runs when outcome is None
    data_plate = numpyro.plate("data", size=log_price.shape[0])

    # DEFINING MODEL PARAMETERS
    global_a = numpyro.sample("global_a", dist.Normal(-2, 1), infer={"reparam": LocScaleReparam()})

    with category_plate:
        category_a = numpyro.sample("category_a", dist.Normal(global_a, 1), infer={"reparam": LocScaleReparam()})

    with product_plate:
        product_a = numpyro.sample("product_a", dist.Normal(category_a[product_to_category], 2), infer={"reparam": LocScaleReparam()})
        product_effect = numpyro.sample("product_effect", dist.Normal(0, 3))

    with time_cat_plate:
        time_cat_effects = numpyro.sample("time_cat_effects", dist.Normal(0, 3))

    # Calculating expected demand
    def calculate_demand():
        log_demand = product_a[product_idx]*log_price + time_cat_effects[time_cat_idx] + product_effect[product_idx]
        expected_demand = jnp.exp(jnp.clip(log_demand, -4, 20))  # clip for stability
        return expected_demand

    demand = calculate_demand()

    with data_plate:
        numpyro.sample(
            "obs",
            dist.Poisson(demand),
            obs=outcome
        )

numpyro.render_model(
    model=model,
    model_kwargs={"df": df,"outcome": df['units_sold']},
    render_distributions=True,
    render_params=True,
)

Estimation

While there are multiple ways to estimate this equation, we use Stochastic Variational Inference (SVI) for this particular application. As an overview, SVI is a gradient-based optimization method that minimizes the KL-divergence between a proposed posterior distribution and the true posterior distribution by maximizing the ELBO (equivalently, minimizing the negative ELBO, which is the loss we track). This is a different estimation technique from Markov-Chain Monte Carlo (MCMC), which draws samples that converge to the true posterior distribution. In real-world applications, SVI is typically more efficient and scales easily to large datasets. For this application, we set a random seed, initialize the guide (the family of the posterior distribution, assumed to be a Diagonal Normal), define the learning rate schedule and optimizer in Optax, and run the optimization for 1,000,000 iterations (takes ~1 hour). While the model might have converged beforehand, the loss still improves by a minor amount even after running the optimization for 1,000,000 iterations. Finally, we plot the (log) losses.
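The link between the ELBO and the KL-divergence can be made explicit. Writing $q_\phi(\theta)$ for the guide with variational parameters $\phi$ and $p(\theta \mid y)$ for the true posterior (notation introduced here for illustration), the log evidence decomposes as:

$$\log p(y) = \underbrace{\mathbb{E}_{q_\phi}\left[\log p(y, \theta) - \log q_\phi(\theta)\right]}_{\textrm{ELBO}(\phi)} + \textrm{KL}\left(q_\phi(\theta) \,\|\, p(\theta \mid y)\right)$$

Because $\log p(y)$ does not depend on $\phi$, raising the ELBO lowers the KL-divergence by the same amount. This is why the optimizer can work on the negative ELBO as its loss without ever evaluating the true posterior.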

from numpyro.infer import SVI, Trace_ELBO, autoguide, init_to_sample
import optax
import matplotlib.pyplot as plt

rng_key = jax.random.PRNGKey(42)
guide = autoguide.AutoNormal(model, init_loc_fn=init_to_sample)

# Define a learning rate schedule
learning_rate_schedule = optax.exponential_decay(
    init_value=0.01,
    transition_steps=1000,
    decay_rate=0.99,
    staircase = False,
    end_value = 1e-5,
)

# Define the optimizer
optimizer = optax.adamw(learning_rate=learning_rate_schedule)
svi = SVI(model, guide, optimizer, loss=Trace_ELBO(num_particles=8, vectorize_particles = True))

# Run SVI
svi_result = svi.run(rng_key, 1_000_000, df, df['units_sold'])
plt.semilogy(svi_result.losses);

Recovering Posterior Samples

Once the model has been trained, we can recover the posterior distribution of the parameters by feeding in the resulting parameters and the initial dataset. We cannot use the parameters in svi_result.params directly since NumPyro uses an affine transformation on the back end for non-Normal distributions. Therefore, we sample 1000 times from the posterior distribution and calculate the mean and standard deviation of each parameter in our model. The final part of the following code creates a dataframe with the estimated elasticity for each product at each hierarchical level, which we then join back to our original dataframe to test whether the algorithm recovers the true elasticity.

predictive = numpyro.infer.Predictive(
    autoguide.AutoNormal(model, init_loc_fn=init_to_sample),
    params=svi_result.params,
    num_samples=1000
)
samples = predictive(rng_key, df, df['units_sold'])

# Extract means and std dev
results = {}
excluded_keys = ['product_effect', 'time_cat_effects']
for k, v in samples.items():
    if k not in excluded_keys:
        results[f"{k}"] = np.mean(v, axis=0)
        results[f"{k}_std"] = np.std(v, axis=0)

# Product elasticity estimates
# unique() keeps first-appearance order, matching pd.factorize in the model
prod_elasticity_df = pd.DataFrame({
    'product': df['product'].unique(),
    'product_elasticity_svi': results['product_a'],
    'product_elasticity_svi_std': results['product_a_std'],
})
result_df = df.merge(prod_elasticity_df, on='product', how='left')

# Category elasticity estimates
cat_elasticity_df = pd.DataFrame({
    'category': df['category'].unique(),
    'category_elasticity_svi': results['category_a'],
    'category_elasticity_svi_std': results['category_a_std'],
})
result_df = result_df.merge(cat_elasticity_df, on='category', how='left')

# Global elasticity estimates
result_df['global_a_svi'] = results['global_a']
result_df['global_a_svi_std'] = results['global_a_std']
result_df.head()

| product | category | time_period | price | units_sold | product_elasticity | category_elasticity | global_elasticity | cat_by_time | product_elasticity_svi | product_elasticity_svi_std | category_elasticity_svi | category_elasticity_svi_std | global_a_svi | global_a_svi_std |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 8 | 0 | 125.95 | 550 | -1.185907 | -1.63475 | -1.597683 | 8-0 | -1.180956 | 0.000809 | -1.559872 | 0.027621 | -1.5550271 | 0.2952548 |

| 0 | 8 | 1 | 125.95 | 504 | -1.185907 | -1.63475 | -1.597683 | 8-1 | -1.180956 | 0.000809 | -1.559872 | 0.027621 | -1.5550271 | 0.2952548 |

| 0 | 8 | 2 | 149.59 | 388 | -1.185907 | -1.63475 | -1.597683 | 8-2 | -1.180956 | 0.000809 | -1.559872 | 0.027621 | -1.5550271 | 0.2952548 |

| 0 | 8 | 3 | 149.59 | 349 | -1.185907 | -1.63475 | -1.597683 | 8-3 | -1.180956 | 0.000809 | -1.559872 | 0.027621 | -1.5550271 | 0.2952548 |

| 0 | 8 | 4 | 176.56 | 287 | -1.185907 | -1.63475 | -1.597683 | 8-4 | -1.180956 | 0.000809 | -1.559872 | 0.027621 | -1.5550271 | 0.2952548 |

Results

The following code plots the true and estimated elasticities for each product. Each point is ranked by its true elasticity value (black), and the estimated elasticity from the model is also shown. We can see that the estimated elasticities follow the path of the true elasticities, with a Mean Absolute Error of around 0.0724. Points in purple represent products whose 95% CI does not contain the true elasticity, while points in blue represent products whose 95% CI contains the true elasticity. Given that the global mean is -1.598, this represents an average error of 4.5% at the product level. We can see that the SVI estimates closely follow the pattern of the true elasticities but with some noise, particularly as the elasticities become more and more negative. In the top right panel, we plot the relationship between the error of the estimated elasticities and the true elasticity values. As true elasticities become more and more negative, our model becomes less accurate.

For the category-level and global-level elasticities, we can create the posteriors using two methods. We can either bootstrap all product-level elasticities within the category, or we can get the category-level estimates directly from the posterior parameters. When we look at the category-level elasticity estimates in the bottom left, we can see that both the category-level estimates recovered from the model and the bootstrapped samples from the product-level elasticities are also slightly biased towards zero, with an MAE of ~0.033. However, the confidence interval given by the category-level parameter covers the true parameter, unlike the bootstrapped product-level estimates. This suggests that when identifying group-level elasticities, we should directly use the group-level parameters instead of bootstrapping the more granular estimates. When looking at the global level, both methods contain the true parameter within the 95% confidence bounds, with the global parameter outperforming the product-level bootstrapping, at the cost of having larger standard errors.
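The two summary metrics used in this section, the Mean Absolute Error and the share of 95% intervals that contain the true value, can be computed with a few lines of plain Python. This is a minimal sketch with a hypothetical helper name and made-up numbers for three products. It builds intervals as the estimate plus or minus 1.96 standard deviations, the same construction used in the plotting code.

```python
def interval_coverage_and_mae(true_values, estimates, std_devs, z=1.96):
    """Return (coverage, mae) for intervals of the form estimate +/- z * std."""
    covered = 0
    abs_errors = []
    for truth, est, sd in zip(true_values, estimates, std_devs):
        lower, upper = est - z * sd, est + z * sd
        covered += lower <= truth <= upper   # True counts as 1
        abs_errors.append(abs(truth - est))
    n = len(abs_errors)
    return covered / n, sum(abs_errors) / n


# Hypothetical values for three products
true_elasticity = [-1.0, -2.0, -3.0]
estimated = [-1.1, -2.0, -2.5]
posterior_sd = [0.1, 0.1, 0.1]

coverage, mae = interval_coverage_and_mae(true_elasticity, estimated, posterior_sd)
print(f"coverage={coverage:.2f}, MAE={mae:.2f}")
```

Here the third product has an error five times its reported standard deviation, so its interval misses the truth. This is the pattern to look for when posterior standard deviations are too small.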

Considerations

- SVI underestimates posterior variance: One drawback of using SVI for the estimation is that it underestimates the posterior variance. While we’ll cover this topic in detail in a later article, the objective function for SVI only takes into account the difference in expectation between our posited distribution and the true distribution. This means that it doesn’t consider the full correlation structure between parameters in the posterior. The mean-field approximation commonly used in SVI assumes conditional (on the previous hierarchy’s draw) independence between parameters, which ignores any covariances between products within the same hierarchy. This means that if there are any spillover effects (such as cannibalization or cross-price elasticity), they would not be accounted for in the confidence bounds. Because of this mean-field assumption, the uncertainty estimates tend to be overly confident, resulting in confidence intervals that are too narrow and fail to capture the true parameter values at the expected rate. We can see in the top left figure that only 9.7% of the product-level 95% intervals cover their true elasticity. In a later post, we’ll include some solutions to this problem.

- Importance of priors: When using HB, priors matter significantly more compared to standard Bayesian approaches. While large datasets typically allow the likelihood to dominate priors when estimating global parameters, hierarchical structures change this dynamic and reduce the effective sample sizes at each level. In our model, the global parameter only sees 10 category-level observations (not the full dataset), categories only draw from their contained products, and products rely only on their own observations. This reduced effective sample size causes shrinkage, where outlier estimates (like very negative elasticities) get pulled toward their category means. This highlights the importance of prior predictive checks, since misspecified priors can have an outsized influence on the results.

def elasticity_plots(result_df, results=None):
    # Create the figure with 2x2 grid
    fig = plt.figure(figsize=(12, 10))
    gs = fig.add_gridspec(2, 2)

    # Product elasticity
    ax1 = fig.add_subplot(gs[0, 0])

    # Data prep
    df_product = result_df[['product','product_elasticity','product_elasticity_svi','product_elasticity_svi_std']].drop_duplicates()
    df_product['product_elasticity_svi_lb'] = df_product['product_elasticity_svi'] - 1.96*df_product['product_elasticity_svi_std']
    df_product['product_elasticity_svi_ub'] = df_product['product_elasticity_svi'] + 1.96*df_product['product_elasticity_svi_std']
    df_product = df_product.sort_values('product_elasticity')
    mae_product = np.mean(np.abs(df_product.product_elasticity-df_product.product_elasticity_svi))

    # Colour each product by whether its 95% interval contains the true elasticity
    colors = []
    for i, row in df_product.iterrows():
        if (row['product_elasticity'] >= row['product_elasticity_svi_lb'] and
            row['product_elasticity'] <= row['product_elasticity_svi_ub']):
            ...  # [The remainder of the plotting code is truncated in the source.]

Conclusion

Alternate Uses: Aside from estimating price elasticity of demand, HB models also have a variety of other uses in Data Science. In retail, HB models can forecast demand for existing stores and solve the cold-start problem for new stores by borrowing information from stores/networks that have already been established and are clustered within the same hierarchy. For recommendation systems, HB can estimate user-level preferences from a combination of user and item-level characteristics. This structure enables relevant recommendations to new users based on cohort behaviors, gradually transitioning to individualized recommendations as user history accumulates. If no cohort groupings are readily available, K-means can be used to group similar units based on their characteristics.

Finally, these models can also be used to combine results from experimental and observational studies. Scientists can use historical observational uplift estimates (ads uplift) and supplement them with newly developed A/B tests to reduce the required sample size for experiments by incorporating prior knowledge. This approach creates a continuous learning framework where each new experiment builds upon previous findings rather than starting from scratch. For teams facing resource constraints, this means faster time-to-insight (especially when combined with surrogate models) and more efficient experimentation pipelines.

Closing Remarks: While this introduction has highlighted several applications of hierarchical Bayesian models, we’ve only scratched the surface. We haven’t deep dived into granular implementation aspects such as prior and posterior predictive checks, formal goodness-of-fit assessments, computational scaling, distributed training, performance of estimation strategies (MCMC vs. SVI), and non-nested hierarchical structures, each of which deserves its own post.

Nevertheless, this overview should provide a practical starting point for incorporating hierarchical Bayesian models into your toolkit. These models offer a framework for handling (usually) messy, multi-level data structures that are often seen in real-world business problems. As you begin implementing these approaches, I’d love to hear about your experiences, challenges, successes, and new use cases for this class of model, so please reach out with questions, insights, or examples through my email or LinkedIn. If you have any feedback on this article, or would like to request another topic in causal inference/machine learning, please also feel free to reach out. Thank you for reading!

Note: All images used in this article are generated by the author.