Hello friends! It’s Day-14 of learning machine learning. We’ve learned about Decision Trees (Day-9), Random Forests (Day-10), and how to make models better with Cross-Validation (Day-11) and Hyperparameter Tuning (Day-13). Today, we’ll explore a new model called Gradient Boosting. It’s like building a team of Decision Trees that learn from each other’s mistakes to make even better predictions!

Gradient Boosting

Gradient Boosting is a way for the computer to make predictions by building many Decision Trees, but in a different way than Random Forests. In Random Forests (Day-10), the trees are built independently, and they all vote on the final answer. In Gradient Boosting, the trees are built one after another, and each new tree tries to fix the mistakes (the gaps between the actual answers and the current predictions) left by the previous ones.
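To make "each new tree fixes the mistakes of the previous ones" concrete, here is a minimal from-scratch sketch for a regression problem with squared error, where the "mistakes" are simply the residuals (actual minus predicted). It assumes NumPy and scikit-learn's `DecisionTreeRegressor`; the helper names `fit_boosted_trees` and `predict_boosted`, the toy `sin(x)` data, and the settings (learning rate 0.1, depth-2 trees) are hypothetical choices for illustration. For yes/no questions like our party example, libraries fit each tree to the gradient of a classification loss instead of plain residuals, but the one-tree-after-another loop is the same idea.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def fit_boosted_trees(X, y, n_trees=50, learning_rate=0.1, max_depth=2):
    """Build trees one after another; each new tree is trained on the current mistakes."""
    start = float(np.mean(y))                 # simple first guess for every example
    prediction = np.full(len(y), start)
    trees = []
    for _ in range(n_trees):
        residuals = y - prediction            # how far off the team currently is
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
        tree.fit(X, residuals)                # new tree learns to predict those mistakes
        prediction += learning_rate * tree.predict(X)  # small step toward the answers
        trees.append(tree)
    return start, learning_rate, trees


def predict_boosted(model, X):
    """Start from the first guess and add every tree's (scaled) correction."""
    start, learning_rate, trees = model
    prediction = np.full(len(X), start)
    for tree in trees:
        prediction += learning_rate * tree.predict(X)
    return prediction


# Toy regression data, made up for illustration: y = sin(x)
X = np.linspace(0, 10, 50).reshape(-1, 1)
y = np.sin(X).ravel()

mse_by_trees = {}
for n in (1, 10, 50):
    model = fit_boosted_trees(X, y, n_trees=n)
    mse_by_trees[n] = float(np.mean((y - predict_boosted(model, X)) ** 2))
    print(f"{n:>2} trees -> training MSE {mse_by_trees[n]:.4f}")
```

The training error shrinks as trees are added, because every tree only has to correct what the earlier ones got wrong. The small `learning_rate` keeps each correction modest, which is one common way to stop the team from simply memorizing the training data.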

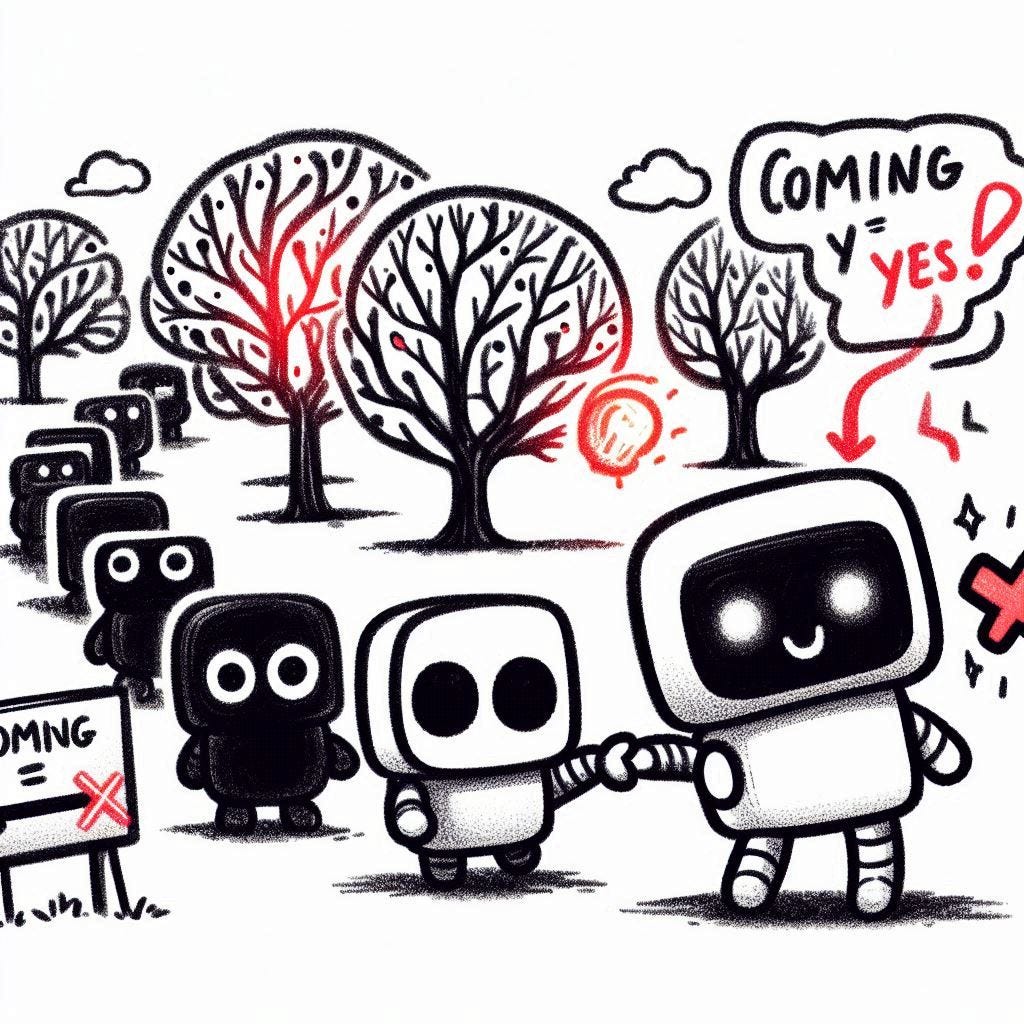

Imagine you’re trying to guess whether your friends will come to your party. You ask one friend for advice, but they guess wrong. So, you ask another friend to focus on fixing that mistake, and then another friend to fix the next mistake, and so on. Each friend learns from the previous one’s mistakes, and together, they make a good guess. That’s how Gradient Boosting works: it builds trees that learn from each other to get better and better.

Example of How Gradient Boosting Works

Let’s use our party data to see how Gradient Boosting works. We’ll use the same data from earlier days:

- Friend 1: Age 20, Likes Pizza 1, Coming 1.

- Friend 2: Age 22, Likes Pizza 0, Coming 0.

- Friend 3: Age 25, Likes Pizza 1, Coming 1.
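Before walking through the steps by hand, here is a quick sketch of fitting a ready-made gradient boosting model to these three friends. It assumes scikit-learn's `GradientBoostingClassifier`; the settings (10 trees, learning rate 0.1, one-split trees) and the new friend aged 23 who likes pizza are hypothetical choices for illustration, and three rows are far too few for a real model.

```python
from sklearn.ensemble import GradientBoostingClassifier

# Party data: [age, likes_pizza] for each friend, and whether they came
X = [[20, 1], [22, 0], [25, 1]]
y = [1, 0, 1]

# Trees are added one after another; each new one corrects the earlier ones
model = GradientBoostingClassifier(
    n_estimators=10,   # number of trees built in sequence
    learning_rate=0.1, # how much each tree's correction counts
    max_depth=1,       # tiny trees with a single split
    random_state=0,
)
model.fit(X, y)

print("Predictions for our friends:", model.predict(X))
print("New friend (age 23, likes pizza):", model.predict([[23, 1]]))
```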

Step 1: Start with a Simple Guess

Gradient Boosting starts by making a very simple guess for everyone. A common starting point is to guess the most common answer in the data:

- We have 2 friends coming (Coming = 1) and 1 not coming (Coming = 0).

- Most common: Coming = 1.

- First Guess for Everybody: Coming = 1.
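Step 1 can be sketched in a few lines of plain Python. Real libraries often start from a number rather than a yes/no label; for example, scikit-learn's `GradientBoostingClassifier` by default starts from the share of "Coming = 1" answers, turned into log-odds. That is why this sketch also computes the share and its log-odds (the variable names are just illustrative).

```python
import math
from collections import Counter

# Party data: (age, likes_pizza, coming)
friends = [(20, 1, 1), (22, 0, 0), (25, 1, 1)]
coming = [c for _, _, c in friends]

# The most common answer becomes the first guess for everyone
first_guess = Counter(coming).most_common(1)[0][0]
first_predictions = [first_guess] * len(friends)

# Numeric version of the same starting point: share of friends coming, as log-odds
share_coming = sum(coming) / len(coming)
log_odds = math.log(share_coming / (1 - share_coming))

print("First guess for everyone:", first_guess)
print("Share coming:", round(share_coming, 3), "log-odds:", round(log_odds, 3))
```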

Let’s see how this guess does:

- Friend 1: Actual 1, Guess 1 (Correct).