Documented, tested, and co-written by &

Getting your machine ready for GPU-accelerated machine learning on Linux sounds easy, until you’re knee-deep in mismatched library versions, obscure error messages, and conflicting installation guides.

This article is part guide and part dev log. On April 24, 2025, we configured and tested a complete ML environment using CUDA 12.6, cuDNN 9.6, PyTorch 2.7, and TensorFlow 2.18 on Ubuntu 24.04 via WSL2. Every step was documented and validated that day, so what you’re reading isn’t just theory; it’s a working setup. This guide is the result of that experience: a clear, tested, and fully functional setup that goes beyond surface-level instructions.

Although all of this is documented for Ubuntu 24.04 on WSL2, you can use all of the steps on a native, bare-metal installation of Ubuntu as well.

Before installing anything, we started with a basic but crucial question: which versions are even compatible with our system?

Everything in the CUDA ecosystem starts with your NVIDIA driver version. The driver determines which versions of the CUDA SDK you can install. So our first step was to confirm exactly which driver was installed.

nvidia-smi
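If you want to capture the driver details in a script rather than read them off the screen, a minimal sketch along these lines may help. It assumes the usual `nvidia-smi` header layout with `Driver Version:` and `CUDA Version:` fields. The function names and the sample header line are illustrative, not part of our original setup.

```python
import re
import shutil
import subprocess

# Illustrative header line in the layout nvidia-smi prints; the numbers are examples only.
SAMPLE_HEADER = "| NVIDIA-SMI 560.35.02    Driver Version: 560.94    CUDA Version: 12.6  |"


def parse_nvidia_smi(text):
    """Return (driver_version, max_cuda_version) as strings; None for a missing field."""
    driver = re.search(r"Driver Version:\s*([\d.]+)", text)
    cuda = re.search(r"CUDA Version:\s*([\d.]+)", text)
    return (driver.group(1) if driver else None,
            cuda.group(1) if cuda else None)


def read_nvidia_smi():
    """Run nvidia-smi if it is on PATH and return its output, else None."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(["nvidia-smi"], capture_output=True, text=True, check=False)
    return result.stdout


if __name__ == "__main__":
    output = read_nvidia_smi()
    # Fall back to the sample line when no NVIDIA driver is visible.
    print(parse_nvidia_smi(output if output is not None else SAMPLE_HEADER))
```

The "CUDA Version" field reports the highest CUDA runtime the driver supports, not the toolkit that is installed.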

WSL2 Driver Behavior

What’s worth noting, especially if you’re new to WSL, is that WSL2 shares the NVIDIA driver installed on your Windows host system. You don’t need to install a separate GPU driver inside Ubuntu (unlike native Linux). This simplifies the setup a bit, but it also means that your CUDA SDK must be compatible with your host system’s driver version.

Based on this driver version and NVIDIA’s support matrix, we determined that CUDA 12.6 was the best choice, as newer versions would risk compatibility issues with cuDNN and the frameworks we planned to use.

We chose CUDA 12.6 because it was the highest confirmed compatible version for our driver and had stable support in both PyTorch 2.7 and TensorFlow 2.18.
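The version reasoning above can be expressed as a small check. This is a conservative rule of thumb, not NVIDIA's full compatibility policy: it assumes a toolkit is safe when its major.minor version does not exceed the CUDA version the driver reports. The helper names are hypothetical.

```python
def as_tuple(version):
    """Turn a dotted version string such as '12.6.3' into a tuple of ints."""
    return tuple(int(part) for part in version.split("."))


def toolkit_fits_driver(toolkit_version, driver_cuda_version):
    """True if the toolkit's major.minor is not newer than what the driver reports."""
    return as_tuple(toolkit_version)[:2] <= as_tuple(driver_cuda_version)[:2]


if __name__ == "__main__":
    print(toolkit_fits_driver("12.6.3", "12.6"))  # toolkit matches the driver's limit
    print(toolkit_fits_driver("12.8", "12.6"))    # toolkit is newer than the driver supports
```

Comparing integer tuples rather than strings matters once minor versions reach two digits, since "12.10" sorts before "12.6" as text.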

Once we confirmed that CUDA 12.6 was the best compatible version for our driver, the next step was to install the SDK.

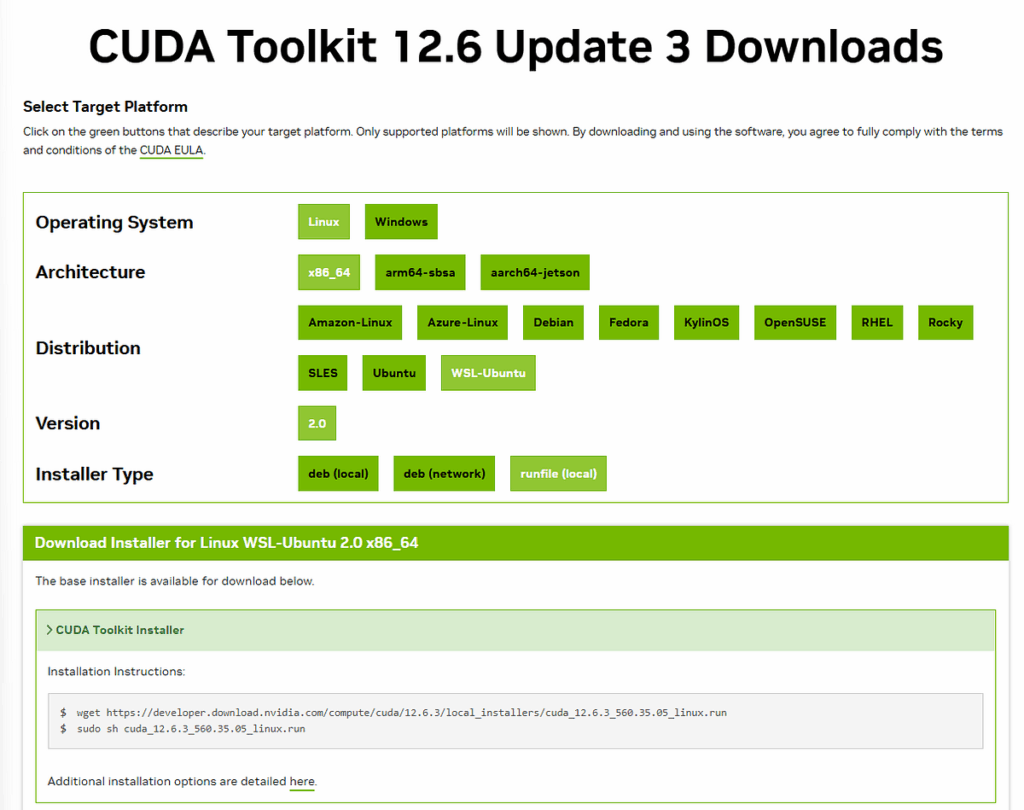

We navigated to the CUDA 12.6.3 archive page and selected the appropriate settings for our environment.

Note: It’s important to use the WSL-specific SDK to avoid missing dependencies or driver mismatches. The installation instructions differ slightly from those for native Ubuntu setups, but the same steps can also be used on native Ubuntu.

We opted for the runfile-based installation instead of a package manager (like apt) for greater control. While package managers are convenient, they often include additional dependencies or automatic driver installations, which we didn’t need because WSL uses the Windows GPU driver directly.

Go to the following link to download the CUDA SDK Toolkit:

# Website Link

https://developer.nvidia.com/cuda-12-6-3-download-archive?target_os=Linux&target_arch=x86_64&Distribution=WSL-Ubuntu&target_version=2.0&target_type=runfile_local

# Download CUDA 12.6.3 SDK for WSL2

wget https://developer.download.nvidia.com/compute/cuda/12.6.3/local_installers/cuda_12.6.3_560.35.05_linux.run

# Run the installer (skip driver installation if prompted)

sudo sh cuda_12.6.3_560.35.05_linux.run

Once the installation was complete, we added the following lines to our ~/.bashrc file so that the system recognizes the CUDA binaries and libraries.

Open .bashrc in a text editor:

nano ~/.bashrc

Then add these two lines to the end of the file:

export PATH=/usr/local/cuda-12.6/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/cuda-12.6/lib64:$LD_LIBRARY_PATH

Save and exit the file (Ctrl + O, Enter, then Ctrl + X), and apply the changes:

source ~/.bashrc
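To confirm that the two exports took effect in the current shell, a short check like the following can be run from Python. It is a sketch that assumes the default runfile install prefix `/usr/local/cuda-12.6`. The helper names are hypothetical.

```python
import os

CUDA_HOME = "/usr/local/cuda-12.6"  # assumed install prefix from the runfile installer


def has_entry(path_value, entry):
    """True if `entry` is one of the colon-separated directories in `path_value`."""
    parts = [p.rstrip("/") for p in path_value.split(":") if p]
    return entry.rstrip("/") in parts


def check_cuda_env(environ=None, cuda_home=CUDA_HOME):
    """Report whether PATH and LD_LIBRARY_PATH contain the CUDA directories."""
    environ = os.environ if environ is None else environ
    return {
        "PATH": has_entry(environ.get("PATH", ""), cuda_home + "/bin"),
        "LD_LIBRARY_PATH": has_entry(environ.get("LD_LIBRARY_PATH", ""), cuda_home + "/lib64"),
    }


if __name__ == "__main__":
    print(check_cuda_env())
```

A `False` for either key usually means the shell was not reloaded or the lines were added to a different startup file.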

Once we had updated .bashrc and reloaded our shell, we verified that the CUDA toolkit was properly installed by checking the version of nvcc, the CUDA compiler:

nvcc --version
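The release number can also be pulled out of the `nvcc --version` output programmatically. The sketch below assumes the output contains a line of the form `release <major>.<minor>`. The sample line is illustrative and the function names are hypothetical.

```python
import re
import shutil
import subprocess

# Illustrative line in the layout nvcc prints; the build number is an example only.
SAMPLE_LINE = "Cuda compilation tools, release 12.6, V12.6.85"


def parse_nvcc_release(text):
    """Return (major, minor) from nvcc --version output, or None if not found."""
    match = re.search(r"release\s+(\d+)\.(\d+)", text)
    return (int(match.group(1)), int(match.group(2))) if match else None


def installed_nvcc_release():
    """Run nvcc --version if nvcc is on PATH; return (major, minor) or None."""
    if shutil.which("nvcc") is None:
        return None
    result = subprocess.run(["nvcc", "--version"], capture_output=True, text=True, check=False)
    return parse_nvcc_release(result.stdout)


if __name__ == "__main__":
    print(installed_nvcc_release())
```

If this prints `None` while the toolkit is installed, the PATH export is the first thing to recheck.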

After setting up the CUDA toolkit, the next essential component was cuDNN (CUDA Deep Neural Network library). cuDNN provides optimized primitives for deep learning workloads and is essential for frameworks like PyTorch and TensorFlow to use the GPU efficiently.

We chose cuDNN 9.6 because it is fully compatible with CUDA 12.6 (per NVIDIA’s support matrix).

Download and Setup

We followed instructions from the cuDNN 9.6.0 archive for Ubuntu 24.04 (local deb installer).

Before installation, we added the dependencies required to compile the cuDNN samples:

sudo apt-get install libfreeimage3 libfreeimage-dev

Then, we downloaded and installed cuDNN from the following link:

https://developer.nvidia.com/cudnn-9-6-0-download-archive?target_os=Linux&target_arch=x86_64&Distribution=Ubuntu&target_version=24.04&target_type=deb_local

# Install commands for cuDNN

wget https://developer.download.nvidia.com/compute/cudnn/9.6.0/local_installers/cudnn-local-repo-ubuntu2404-9.6.0_1.0-1_amd64.deb

sudo dpkg -i cudnn-local-repo-ubuntu2404-9.6.0_1.0-1_amd64.deb

sudo cp /var/cudnn-local-repo-ubuntu2404-9.6.0/cudnn-*-keyring.gpg /usr/share/keyrings/

sudo apt-get update

sudo apt-get -y install cudnn-cuda-12

This installed cuDNN libraries linked specifically against CUDA 12, ensuring compatibility with our toolkit and ML frameworks.
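One way to confirm which cuDNN the dynamic loader actually picks up is to call `cudnnGetVersion()` through `ctypes`. This sketch assumes the shared library is named `libcudnn.so.9` and that cuDNN 9 encodes its version as major*10000 + minor*100 + patch. The wrapper functions are hypothetical.

```python
import ctypes


def decode_cudnn_version(value):
    """Split a cuDNN 9 style version integer (e.g. 90600) into (major, minor, patch)."""
    return (value // 10000, (value // 100) % 100, value % 100)


def loaded_cudnn_version(library="libcudnn.so.9"):
    """Load cuDNN and return its version tuple, or None if the library cannot be loaded."""
    try:
        lib = ctypes.CDLL(library)
    except OSError:
        return None
    lib.cudnnGetVersion.restype = ctypes.c_size_t  # cudnnGetVersion returns size_t
    return decode_cudnn_version(lib.cudnnGetVersion())


if __name__ == "__main__":
    print(loaded_cudnn_version())
```

A `None` result means the loader could not find the library, which usually points back to the package install or the library search path.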

Optional: Install and Test cuDNN Samples

To test the installation and explore sample projects, we also installed cuDNN’s sample code:

sudo apt-get -y install libcudnn9-samples

# The samples package installs under /usr/src; copy it to a writable location
mkdir -p ~/source

cp -r /usr/src/cudnn_samples_v9/ ~/source/cudnn_samples_v9

cd ~/source/cudnn_samples_v9

Note: These commands were run and tested on April 24, 2025, inside Ubuntu 24.04 on WSL2. All sample code compiled successfully without errors.

With CUDA and cuDNN installed and working, we moved on to installing PyTorch 2.7, the latest stable release that supports CUDA 12.6 as of April 2025.

We started by heading over to the official PyTorch installation page and selecting the correct configuration for our system.

Before running any installation commands, we double-checked version support using the compatibility matrix in PyTorch’s RELEASE.md. This confirmed that PyTorch 2.7 and CUDA 12.6 would work smoothly together, and showed which cuDNN version PyTorch expected internally.

Setting Up the Conda Environment

We used Miniconda to manage isolated environments. Here’s how we created the environment and installed PyTorch:

# Optional: Install ipykernel in your base conda env first

pip install ipykernel

# Create a new environment with Python 3.11

conda create -n torch27 python=3.11

conda activate torch27

# Install PyTorch 2.7 with CUDA 12.6

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu126

Verifying PyTorch Installation

Once installed, we opened Python inside the environment and ran:

import torch

print(torch.cuda.is_available())

If the output is True, your PyTorch setup is successfully using the GPU via CUDA.
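For a slightly fuller picture than a single True/False, the following sketch collects the CUDA and cuDNN versions PyTorch was built with and the name of the first GPU. The function name `torch_gpu_report` is hypothetical. The import is guarded so the script still runs, and says so, in an environment without PyTorch.

```python
def torch_gpu_report():
    """Summarize PyTorch's view of CUDA; safe to call even if torch is missing."""
    try:
        import torch
    except ImportError:
        return {"torch": None, "cuda_available": False}

    available = torch.cuda.is_available()
    return {
        "torch": torch.__version__,
        "cuda_build": torch.version.cuda,  # CUDA version the wheel was built against
        "cudnn": torch.backends.cudnn.version() if available else None,
        "cuda_available": available,
        "device": torch.cuda.get_device_name(0) if available else None,
    }


if __name__ == "__main__":
    for key, value in torch_gpu_report().items():
        print(f"{key}: {value}")
```

Run it inside the activated environment so that it reports on the PyTorch build installed there.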

Why We Created Separate Environments for PyTorch

Before diving into the TensorFlow installation, it’s important to explain why we use a different Conda environment for each ML framework: PyTorch and TensorFlow.

During our setup, we initially tried installing both frameworks in the same environment (ml) to save time and simplify management. But very quickly, version conflicts began to surface:

- PyTorch 2.7 requires cuDNN 9.5 internally.

- TensorFlow 2.18 (planned) relied on cuDNN 9.3 and expected slightly different CUDA bindings.

- Even the Python and dependency versions overlapped or clashed (e.g., one needed ninja, the other didn’t).

These incompatibilities made it clear that trying to maintain both frameworks in a single environment could lead to frustrating, hard-to-debug issues, especially when testing GPU availability and runtime behavior.
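The kind of clash described above can be illustrated with a toy check. It is deliberately simplified: real package resolvers handle version ranges and bundled libraries. The requirement table only restates the cuDNN versions mentioned in this article, and `find_conflicts` is a hypothetical helper.

```python
# cuDNN versions each framework expected, as noted in this article.
REQUIREMENTS = {
    "pytorch": {"cudnn": "9.5"},
    "tensorflow": {"cudnn": "9.3"},
}


def find_conflicts(requirements):
    """Return {library: {framework: version}} for libraries the frameworks disagree on."""
    by_library = {}
    for framework, libraries in requirements.items():
        for library, version in libraries.items():
            by_library.setdefault(library, {})[framework] = version
    return {
        library: wanted
        for library, wanted in by_library.items()
        if len(set(wanted.values())) > 1
    }


if __name__ == "__main__":
    print(find_conflicts(REQUIREMENTS))
```

Any non-empty result means a single shared environment would have to satisfy two different pins at once.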

Lesson Learned: Isolate Environments

So instead, we adopted a well-known best practice:

Use isolated environments per framework: torch27 for PyTorch and tf218 for TensorFlow.

This ensures stable setups and easier upgrades/downgrades per framework without cross-contamination of dependencies.

We created a clean Conda environment for PyTorch:

conda create -n torch27 python=3.11

conda activate torch27

We’ll soon repeat the same for TensorFlow (in a separate env like tf218), keeping installations clean, versioned, and easy to manage.

This also gives us the flexibility to try out experimental builds or downgrade specific packages without worrying about one framework breaking the other.
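To check that both environments exist, the JSON output of `conda env list --json` can be parsed. The sketch below assumes that output has an `envs` key holding a list of environment paths. The sample paths and helper names are illustrative.

```python
import json
import os

# Illustrative output shape of `conda env list --json`; the paths are examples only.
SAMPLE_JSON = json.dumps({
    "envs": [
        "/home/user/miniconda3",
        "/home/user/miniconda3/envs/torch27",
        "/home/user/miniconda3/envs/tf218",
    ]
})


def env_names(json_text):
    """Return the last path component of every environment conda reports."""
    paths = json.loads(json_text).get("envs", [])
    return [os.path.basename(path.rstrip("/")) for path in paths]


def missing_envs(json_text, expected=("torch27", "tf218")):
    """Return the expected environment names that conda does not list."""
    names = set(env_names(json_text))
    return [name for name in expected if name not in names]


if __name__ == "__main__":
    print(missing_envs(SAMPLE_JSON))
```

In practice the JSON text would come from running `conda env list --json` and capturing its standard output.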

With PyTorch working perfectly in its own environment, we moved on to setting up TensorFlow 2.18 in its own dedicated Conda environment to avoid version clashes and package conflicts.

Note: We’re keeping TensorFlow separate because PyTorch and TensorFlow often require different cuDNN versions, build flags, and dependencies. Installing both in the same environment is risky and can break one or both frameworks.

Creating the TensorFlow Environment

We created a clean Conda environment using Python 3.11:

conda create -n tf218 python=3.11

conda activate tf218

Then we installed TensorFlow 2.18 with GPU support:

python3 -m pip install tensorflow[and-cuda]==2.18

The [and-cuda] extra ensures that TensorFlow pulls in the necessary CUDA and cuDNN libraries directly, rather than relying on global system installations.

Verifying TensorFlow GPU Access

Once installed, we tested the setup using this command:

python3 -c "import tensorflow as tf; print(tf.config.list_physical_devices('GPU'))"

Expected Output:

[PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]

This confirmed that TensorFlow could load its CUDA libraries and use the GPU. Some warnings about plugin registration and performance optimizations appeared, but they are common and non-blocking unless you’re compiling TensorFlow from source. In our runs they did not reappear the next time the code was run, so you can safely ignore them.
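To see which CUDA and cuDNN versions the installed TensorFlow wheel was built against, `tf.sysconfig.get_build_info()` can be queried alongside the device list. This is a sketch with a hypothetical function name. The build-info keys are read with `.get()` because CPU-only builds may not include them.

```python
def tensorflow_gpu_report():
    """Summarize TensorFlow's GPU view; safe to call even if tensorflow is missing."""
    try:
        import tensorflow as tf
    except ImportError:
        return {"tensorflow": None, "gpus": []}

    build = tf.sysconfig.get_build_info()
    return {
        "tensorflow": tf.__version__,
        "cuda_build": build.get("cuda_version"),    # CUDA version the wheel targets
        "cudnn_build": build.get("cudnn_version"),  # cuDNN major version the wheel targets
        "gpus": [device.name for device in tf.config.list_physical_devices("GPU")],
    }


if __name__ == "__main__":
    for key, value in tensorflow_gpu_report().items():
        print(f"{key}: {value}")
```

An empty `gpus` list with a populated `cuda_build` suggests the wheel has GPU support but cannot reach the driver or its libraries.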

We now had both PyTorch 2.7 and TensorFlow 2.18 installed, tested, and GPU-accelerated, each in its own stable sandbox.

To test the environments visually and run ML code interactively, we set up Jupyter Notebooks with each Conda environment:

pip install ipykernel

python -m ipykernel install --user --name=tf218 --display-name "TensorFlow 2.18"

We used VS Code’s Jupyter extension to open .ipynb files and select either the Torch or TensorFlow kernel, depending on what we were working on.
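If a kernel does not show up in the picker, it can help to list what Jupyter has registered. The sketch below parses the output of `jupyter kernelspec list --json`, assuming the usual shape with a top-level `kernelspecs` mapping. The sample data and function name are illustrative.

```python
import json

# Illustrative output shape of `jupyter kernelspec list --json`; the path is an example only.
SAMPLE_JSON = json.dumps({
    "kernelspecs": {
        "tf218": {
            "resource_dir": "/home/user/.local/share/jupyter/kernels/tf218",
            "spec": {"display_name": "TensorFlow 2.18", "language": "python"},
        }
    }
})


def kernel_display_names(json_text):
    """Map each registered kernel name to its display name."""
    specs = json.loads(json_text).get("kernelspecs", {})
    return {name: info.get("spec", {}).get("display_name") for name, info in specs.items()}


if __name__ == "__main__":
    print(kernel_display_names(SAMPLE_JSON))
```

The key is the value passed to `--name` and the display name is what appears in the kernel picker.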

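Here’s what we installed and tested so far: CUDA 12.6.3, cuDNN 9.6, PyTorch 2.7 (in torch27), and TensorFlow 2.18 (in tf218), all on Ubuntu 24.04 via WSL2.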

Setting up ML environments with GPU support isn’t always simple, especially when managing multiple frameworks, SDK versions, and deep learning libraries.

But documenting as we went, co-debugging, cross-referencing NVIDIA support matrices, and verifying everything step by step helped us build confidence and clarity. And now, everything is running smoothly, with clean environments, GPU acceleration, and no hidden conflicts.

Whether you’re working solo or with a friend (like we did), having a repeatable, validated dev log is your best defense against future setup pain.

Happy experimenting, training, and deploying!