It’s a common occurrence: I’ll sit down and try to decide on a film from one of the many streaming services such as Netflix or Amazon. Even with the amount of choice and the great recommendation algorithms, I still find myself endlessly browsing the apps to find a hidden gem.

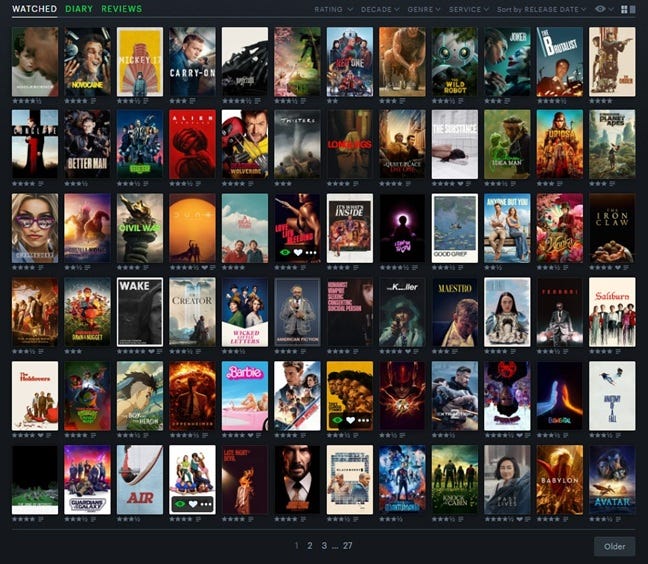

For more than 10 years I’ve been rating and reviewing films, and I have accumulated around 1,800 ratings on Letterboxd. This should be a reasonable sample size to gain insight into what kinds of films I prefer.

Choosing a film to watch can be thought of as a classification problem, and it could be decided using features of past ratings. Let’s say the target is whether the predicted film rating is High (>3) or Low (≤3), and the features used to inform this prediction are factors like year of release, genre or runtime. (Note that other people may use ratings differently and might class a 2 or 3 as worth watching. This comes down to personal preference.)
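Turning each past rating into this High/Low target could look like the sketch below. The film titles, years and ratings are invented placeholders, not real Letterboxd data. `rating_class` is a hypothetical helper, and ratings are assumed to be on a 0.5 to 5 star scale.

```python
# Hypothetical (title, release_year, star_rating) rows; not the author's real data.
films = [
    ("Film A", 1994, 4.5),
    ("Film B", 2015, 3.0),
    ("Film C", 2019, 3.5),
    ("Film D", 1987, 2.0),
]

HIGH_THRESHOLD = 3  # ratings strictly above 3 count as High


def rating_class(rating: float) -> str:
    """Map a star rating to the binary target: High (> 3) or Low (<= 3)."""
    return "High" if rating > HIGH_THRESHOLD else "Low"


# Replace each rating with its class label, keeping the candidate feature (year).
labelled = [(title, year, rating_class(rating)) for title, year, rating in films]
for row in labelled:
    print(row)
```

A rating of exactly 3 falls into Low, matching the ≤3 definition above.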

One method that can be used for classification (and regression) problems is a Decision Tree. A Decision Tree is a machine-learning method that creates binary splits on features and maps each observation to one of the regions those splits define.

The main advantage of a decision tree is that it is a quick and flexible way to model, and it can help interpret the effects of features simply. Another advantage is that each split is only a simple threshold on one feature, yet the tree can still capture non-linear relationships because many splits can be combined. One downside is that it can overfit very easily. When that happens, the tree may be irrelevant or wrong when we apply it to new films the model has not seen before.

Visualising the decision tree usually starts at the root node, where we split on a feature. It passes through other internal nodes and ends at the terminal nodes, called leaf nodes. Each leaf predicts the class held by the majority of the observations in that node.
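The root, internal and leaf structure can be sketched with two small node types. This is a minimal illustration, not how any particular library stores its trees. The `Leaf`, `Split` and `predict` names and the leaf counts are hypothetical; only the 2012 threshold comes from this article.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    counts: dict  # class label -> number of training observations that reached this leaf

    def predict(self) -> str:
        # A leaf predicts the majority class of its observations.
        return max(self.counts, key=self.counts.get)


@dataclass
class Split:
    feature: str
    threshold: float
    left: "Node"   # branch for observations with feature <= threshold
    right: "Node"  # branch for observations with feature > threshold


Node = Union[Leaf, Split]


def predict(node: Node, x: dict) -> str:
    # Start at the root and follow the binary splits until a leaf is reached.
    while isinstance(node, Split):
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.predict()


# A one-split tree on release year; the leaf counts are made up for illustration.
root = Split(
    feature="year",
    threshold=2012,
    left=Leaf({"Low": 60, "High": 40}),
    right=Leaf({"Low": 30, "High": 70}),
)

print(predict(root, {"year": 1999}))  # Low
print(predict(root, {"year": 2018}))  # High
```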

Let’s look at the release year as an example. One hypothesis is that, on average, I prefer newer films to older ones.

There is no single year that cleanly separates good films from bad ones. However, newer films do seem more likely to have a higher rating.

If we create a decision tree that predicts the rating using only one split, with training and test data, we get:

The accuracy of this model with just one split is 60%. Although poor, this is an improvement over randomly choosing Low or High. (High makes up 54% of the ratings, so even always guessing High would only be right about 54% of the time.)

How has the decision tree decided that the best possible split is after 2012? In classification, the main goal is to create a model that accurately predicts the class, minimising the error of getting those predictions wrong.

One approach is to use the misclassification error rate as the criterion for each split. This is defined as:

$$\text{Misclassification rate} = \frac{\text{Incorrect predictions}}{\text{Total predictions}}$$

We can look at the test data in a confusion matrix, which compares the predicted labels against the true labels, shown below.

In this case, our misclassification error rate is:

$$\frac{39 + 147}{114 + 147 + 39 + 166} = \frac{186}{466} \approx 0.399$$

We can also get the accuracy, which is 1 minus the misclassification rate, so 0.601.
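The same arithmetic can be reproduced from the four confusion-matrix counts. This is a minimal sketch with two assumptions. It places the correct predictions (114 and 166) on the diagonal, with rows as true classes and columns as predicted classes. The assignment of the two errors (147 and 39) to specific off-diagonal cells is also assumed, but the error rate does not depend on it.

```python
# Confusion matrix for the test set: rows = true class, columns = predicted class.
# The diagonal holds the correct predictions (114 and 166); which off-diagonal
# cell holds 147 versus 39 is an assumption and does not affect the rate.
confusion = [
    [114, 147],
    [39, 166],
]

total = sum(sum(row) for row in confusion)
correct = sum(confusion[i][i] for i in range(len(confusion)))
misclassification_rate = (total - correct) / total
accuracy = 1 - misclassification_rate

print(f"misclassification rate = {misclassification_rate:.3f}")  # 0.399
print(f"accuracy = {accuracy:.3f}")                              # 0.601
```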

The model could try splitting the data many times to find the lowest possible misclassification rate. However, a major downside is that this criterion does not distinguish between two kinds of split: those that produce nodes containing observations from only one class, and those that produce mixed nodes. This property is called the purity of a node.

There are two main criteria commonly used to decide where to split the chosen feature: the Gini Index and Entropy. Both give a better measure of how pure a node is. Another reason not to use the misclassification rate is that it is not differentiable, unlike Gini and Entropy.
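To see why purity matters, compare two candidate splits that have the same misclassification rate. The sketch uses the Gini Index and Entropy, which are defined in the next few paragraphs. The counts are hypothetical: 800 films, 400 High and 400 Low. Split A leaves two mixed nodes, while split B isolates one perfectly pure node.

```python
import math


def proportions(counts):
    n = sum(counts)
    return [c / n for c in counts]


def misclassification(counts):
    # Error rate if the node predicts its majority class.
    return 1 - max(proportions(counts))


def gini(counts):
    return 1 - sum(p * p for p in proportions(counts))


def entropy(counts):
    # Empty classes (p == 0) contribute 0 by convention.
    return -sum(p * math.log2(p) for p in proportions(counts) if p > 0)


def weighted(criterion, children):
    # Average the child-node scores, weighted by how many observations each holds.
    n = sum(sum(c) for c in children)
    return sum(sum(c) / n * criterion(c) for c in children)


# Hypothetical splits of 800 films (400 High, 400 Low); counts are [High, Low].
split_a = [[300, 100], [100, 300]]  # two mixed nodes
split_b = [[200, 400], [200, 0]]    # one mixed node, one pure node

for name, split in [("A", split_a), ("B", split_b)]:
    print(name,
          round(weighted(misclassification, split), 3),
          round(weighted(gini, split), 3),
          round(weighted(entropy, split), 3))
# A 0.25 0.375 0.811
# B 0.25 0.333 0.689
```

Both splits misclassify 25% of the films, but Gini and Entropy both score split B lower (better) because it produces a pure node.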

The Gini Index is calculated using the proportion of each class in each node:

$$\text{Gini} = 1 - \sum_{k} p_k^2$$

where $p_k$ is the proportion of observations in the node that belong to class $k$.

For the Low-class leaf node in the decision tree above, this gives:

$$1 - \left(\frac{423}{917}\right)^2 - \left(\frac{494}{917}\right)^2 \approx 0.497$$
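A short check of this calculation follows. `gini_index` is a hypothetical helper that takes a node's class counts. The first call uses the Low leaf's counts from the text, and the two extra calls show the extremes for a two-class node.

```python
def gini_index(counts):
    """Gini index: 1 minus the sum of squared class proportions in the node."""
    n = sum(counts)
    return 1 - sum((c / n) ** 2 for c in counts)


# Class counts in the Low leaf node from the text (423 + 494 = 917 observations).
low_leaf = [423, 494]
print(round(gini_index(low_leaf), 3))  # 0.497
print(gini_index([458, 458]))          # 0.5: an evenly split two-class node
print(gini_index([917, 0]))            # 0.0: a pure node
```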

The Gini Index is also called Gini impurity. It can be interpreted as the chance of misclassifying a random sample from the node if it were labelled at random according to the node’s class proportions, so we want to minimise it.

With two classes, an index of 0.5 means an equal number of each class in the node, which is the worst possible case. For the Low leaf node this is not a good result, as we have a very impure node to predict with.

Similarly, Entropy is defined as:

$$\text{Entropy} = -\sum_{k} p_k \log_2 p_k$$

Calculating this for the Low-class leaf node gives about 0.996.
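The entropy of the same leaf can be checked in a few lines. `entropy` is a hypothetical helper using base-2 logarithms, so the result is in bits. Classes with zero count are skipped, because their contribution to the sum is taken to be 0.

```python
import math


def entropy(counts):
    """Entropy in bits: -sum(p * log2(p)) over the non-empty classes in the node."""
    n = sum(counts)
    return -sum((c / n) * math.log2(c / n) for c in counts if c > 0)


low_leaf = [423, 494]  # class counts in the Low leaf node from the text
print(round(entropy(low_leaf), 3))  # 0.996
print(entropy([458, 458]))          # 1.0: maximum uncertainty for two classes
```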

Entropy can be thought of as the amount of uncertainty in the node. It is linked to information gain: the reduction in entropy that a split achieves.
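Putting the pieces together, a one-split tree can be found by brute force. Try every candidate year threshold and keep the one that most reduces the weighted impurity. For Entropy, this reduction is the information gain. The sketch below is only illustrative. The years and labels are hypothetical toy data, not the author’s ratings, and `best_split` is a hypothetical helper. Real implementations usually sort once and update class counts incrementally rather than rebuilding lists for each threshold.

```python
import math


def gini(counts):
    n = sum(counts)
    return 1 - sum((c / n) ** 2 for c in counts)


def entropy(counts):
    n = sum(counts)
    return -sum((c / n) * math.log2(c / n) for c in counts if c > 0)


def counts(labels):
    # Class counts for a node, in a fixed order: [High, Low].
    return [labels.count("High"), labels.count("Low")]


def best_split(years, labels, criterion):
    """Return (threshold, impurity decrease) for the best split 'year <= threshold'."""
    n = len(labels)
    parent = criterion(counts(labels))
    best_t, best_drop = None, -1.0
    # Every distinct year except the largest is a candidate (the largest would leave the right side empty).
    for t in sorted(set(years))[:-1]:
        left = [lab for y, lab in zip(years, labels) if y <= t]
        right = [lab for y, lab in zip(years, labels) if y > t]
        children = len(left) / n * criterion(counts(left)) + len(right) / n * criterion(counts(right))
        drop = parent - children
        if drop > best_drop:
            best_t, best_drop = t, drop
    return best_t, best_drop


# Hypothetical release years and High/Low labels; not the author's Letterboxd data.
years = [1985, 1992, 1999, 2005, 2010, 2014, 2016, 2018, 2020, 2022]
labels = ["Low", "High", "Low", "Low", "Low", "High", "High", "Low", "High", "High"]

for criterion in (gini, entropy):
    t, drop = best_split(years, labels, criterion)
    print(f"{criterion.__name__}: split at year <= {t}, impurity decrease {drop:.3f}")
# gini: split at year <= 2010, impurity decrease 0.180
# entropy: split at year <= 2010, impurity decrease 0.278
```

On this toy data both criteria choose the same threshold, but that need not hold in general.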

What each of these tells us is that the model is poor: it uses only one split and one feature. Further splits on year alone did not prove useful, so finding more information and features about the rated films may produce a better model.

We explored how a decision tree tries to predict whether to watch a film, and how the tree chooses its splits based on either the Gini Index or Entropy.

For further reading about Decision Trees, I recommend The Elements of Statistical Learning.