Linear Regression is one of the most widely used and simplest Machine Learning algorithms. It is a fundamental algorithm to start your AI learning with.

What’s Linear Regression?

Linear regression models the relationship between the input variables and the output by fitting a straight line in 2D, a plane in 3D, or a hyperplane in higher dimensions. The number of input (independent) variables decides which of these we fit: one input gives a line, two give a plane, and more give a hyperplane.

For better understanding, let's keep things simple.

Let's consider one input variable and one output variable. For example, take the height and weight of a group of people. Suppose we have a list of sample height and weight data. Now, if we want to predict the weight of a person given their height, how would we do it?

We might try to guess the weight based on the existing data, but that would just be a random guess.

Wait, is there a way to calculate it mathematically?

Of course, this is where linear regression comes into the picture.

Sample Data:

Let's try to plot this data on a graph.

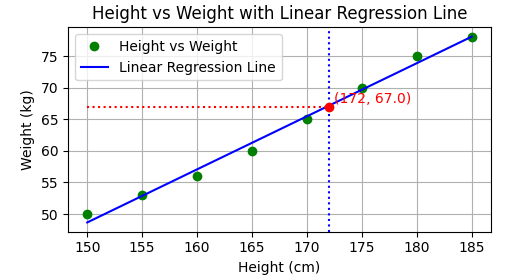

We have plotted the points on a graph. Now we will try to draw a line that is as close as possible to all the points.

We have drawn a best-fit straight line that passes closest to all the sample points.

Using this graph, let's try predicting the weight for a height of 172 cm. We draw a vertical line from 172 on the x-axis up to our best-fit line. The y value (weight) at that point is our prediction.

Hurray!! We have found the predicted weight, which is 67 kg. We cannot claim that this is the exact weight of the person. Instead, we can say that this is roughly what a person of that height would weigh, based on the sample data provided.

Great!! You've learned linear regression. But you might be wondering where the complex algorithm is; it's just a simple line, right?

Let me break it down for you.

In 2D (we have two quantities, height and weight), linear regression is all about a line. But how do we arrive at a best-fit line that passes close to all the sample points? Once we know this, we have mastered linear regression.

Equation of a straight line:

$$y = mx + c$$

As per our graph, y is the weight and x is the height. If we find the optimal values of m (slope) and c (intercept), we can easily compute y for any x.
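The article finds m and c with gradient descent, which is covered in the training section. For a single input variable there is also a closed-form answer, ordinary least squares: $m = \mathrm{cov}(x, y)/\mathrm{var}(x)$ and $c = \bar{y} - m\bar{x}$. Below is a minimal Python/NumPy sketch of that swapped-in technique. The height/weight numbers are made up for illustration and are not the article's sample data, and `fit_line` is a hypothetical helper name.

```python
import numpy as np

def fit_line(x: np.ndarray, y: np.ndarray) -> tuple[float, float]:
    """Closed-form ordinary least squares for y = m*x + c (one input)."""
    x_mean, y_mean = x.mean(), y.mean()
    # Slope: covariance of x and y divided by the variance of x
    m = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
    # Intercept: the least-squares line always passes through (x_mean, y_mean)
    c = y_mean - m * x_mean
    return float(m), float(c)

# Made-up sample data: heights in cm, weights in kg (illustrative only)
heights = np.array([150.0, 160.0, 165.0, 170.0, 180.0, 185.0])
weights = np.array([50.0, 56.0, 61.0, 64.0, 72.0, 77.0])

m, c = fit_line(heights, weights)
print(f"m = {m:.3f}, c = {c:.3f}")
print(f"predicted weight at 172 cm: {m * 172 + c:.1f} kg")
```

With different sample data the fitted m and c, and therefore the prediction at 172 cm, will change.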

Let's rewrite the equation:

$$y = w_1 x + w_0$$

I've just renamed the variables: $w_1$ takes the place of m and $w_0$ the place of c. In the ML world, we call these weights.

If we have multiple features, the equation becomes:

$$y = w_0 + w_1 x_1 + w_2 x_2 + \dots + w_n x_n$$

This is nothing but the equation of a hyperplane.

Finding the optimal values for the weights is called training. In this context, the model is simply the equation of the best-fit line with those weights.

TRAINING THE MODEL:

1. We initialize the weights with random values and plot the resulting line. Obviously, this line will not be close to all the points, so we need to change the values of the weights to bring it closer to the data points.

We now have a random line from the randomly initialized weights, and we will try to adjust the weights. The red dotted lines show how far the predicted line is from the actual outputs; this distance is the error. The longer the red line, the larger the error. We need to shorten the red dotted lines.

2. Calculate the error: the difference between each actual value and its predicted value.

With randomly initialized weights, the error will be high. The goal of training is to reduce the error by adjusting the weights.

3. Choose the model parameters that minimize the error. Machine learning approaches find the best parameters for the linear model by defining a cost function and minimizing it through gradient descent.
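Steps 1 and 2 can be sketched in a few lines of NumPy. This sketch assumes the single-input model $\hat{y} = w_1 x + w_0$, made-up height/weight values, and a fixed random seed so the run is repeatable. Step 3 is handled by the cost function and gradient descent.

```python
import numpy as np

rng = np.random.default_rng(seed=0)   # fixed seed so the run is repeatable

# Made-up sample data: heights in cm (x) and weights in kg (y)
x = np.array([150.0, 160.0, 165.0, 170.0, 180.0, 185.0])
y = np.array([50.0, 56.0, 61.0, 64.0, 72.0, 77.0])

# Step 1: random initial weights for the line y_hat = w1 * x + w0
w1, w0 = rng.normal(size=2)
y_hat = w1 * x + w0

# Step 2: error for each point; its absolute value is the length of
# the red dotted line between the point and the line
errors = y - y_hat
print("initial weights:", w1, w0)
print("errors:", np.round(errors, 2))
```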

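Cost Function

Think of it as an error function; here, the error function serves as the cost function. It could be Mean Squared Error (MSE), Root Mean Squared Error (RMSE), etc.

Let's take MSE as the cost function. The equation of MSE is:

$$\text{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

where $n$ is the number of data points, $y_i$ is the actual output and $\hat{y}_i$ is the predicted output for the $i$-th point.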
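Both cost functions translate directly into code. Here is a minimal sketch with hypothetical function names `mse` and `rmse` and toy values.

```python
import numpy as np

def mse(y: np.ndarray, y_hat: np.ndarray) -> float:
    """Mean Squared Error: average of the squared differences."""
    return float(np.mean((y - y_hat) ** 2))

def rmse(y: np.ndarray, y_hat: np.ndarray) -> float:
    """Root Mean Squared Error: square root of the MSE, in the units of y."""
    return float(np.sqrt(mse(y, y_hat)))

y = np.array([3.0, 5.0, 7.0])       # actual outputs
y_hat = np.array([2.0, 5.0, 9.0])   # predicted outputs
print(mse(y, y_hat), rmse(y, y_hat))
```

RMSE is in the same units as the output (kg in the height/weight example), which often makes it easier to interpret than MSE.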

Let's plot the cost function against the value of w. The MSE is a convex function of the weights, so the plot is bowl-shaped, with the lowest error at the bottom of the bowl.
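The sketch below evaluates the MSE over a grid of w values to show the convex shape numerically. To keep the picture one-dimensional, it assumes a model without an intercept, $\hat{y} = w x$, and made-up data generated from $y = 2x$, so the lowest cost should sit at w = 2. The plotting step is commented out because it needs matplotlib.

```python
import numpy as np

# Made-up data generated from y = 2x, so the best w is 2
x = np.array([1.0, 2.0, 3.0, 4.0])
y = 2.0 * x

# MSE of the no-intercept model y_hat = w * x for many candidate w values
w_grid = np.linspace(-2.0, 6.0, 81)   # steps of 0.1
costs = np.array([np.mean((y - w * x) ** 2) for w in w_grid])

best_w = w_grid[np.argmin(costs)]
print("w with the lowest MSE on the grid:", best_w)

# A convex curve has non-negative second differences everywhere
print("convex on this grid:", bool(np.all(np.diff(costs, n=2) >= -1e-9)))

# To see the bowl shape (requires matplotlib):
# import matplotlib.pyplot as plt
# plt.plot(w_grid, costs); plt.xlabel("w"); plt.ylabel("MSE"); plt.show()
```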

We need to reduce the error to its minimum. To do this, we adjust the value of w based on the error.

The process of repeatedly adjusting w in the direction that reduces the error (opposite to the gradient of the cost function) is called Gradient Descent.

Let's now look at how model training works, that is, how the value of w changes according to the cost function. At each iteration, w is updated as:

$$w := w - \alpha \frac{\partial\,\text{MSE}}{\partial w}$$

where α is the learning rate (alpha).

The learning rate controls how much the parameters change at each iteration. If the learning rate is too high, the updates overshoot the minimum: the model fails to converge, and the cost jumps between good and bad values or even grows without bound. If the learning rate is too low, the model takes too long to converge to the minimum error.
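A small experiment shows the effect of α concretely. It uses the same assumed no-intercept model $\hat{y} = w x$ on made-up data from $y = 2x$. For this data, MSE = 7.5·(w − 2)² and the gradient is 15·(w − 2), so each update multiplies the distance from 2 by (1 − 15α). The updates therefore only converge for α below 2/15 ≈ 0.133. The three α values are arbitrary picks.

```python
import numpy as np

# Made-up data from y = 2x; model y_hat = w * x; cost = MSE
x = np.array([1.0, 2.0, 3.0, 4.0])
y = 2.0 * x

def run_gd(alpha: float, steps: int = 20, w: float = 0.0) -> float:
    """Run plain gradient descent on the MSE and return the final w."""
    for _ in range(steps):
        grad = -2.0 * np.mean(x * (y - w * x))  # d(MSE)/dw
        w = w - alpha * grad
    return w

for alpha in (0.001, 0.05, 0.2):
    w = run_gd(alpha)
    print(f"alpha={alpha}: w={w:.4f}, MSE={np.mean((y - w * x) ** 2):.4g}")
```

After 20 steps, α = 0.05 lands almost exactly on w = 2, and α = 0.001 is still far from it. With α = 0.2, each update overshoots further than the last.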

So, we stop training after a certain number of iterations, or once the loss stops changing (assuming it has converged).
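The sketch below combines the three training steps, the update rule and the stopping rule into one loop. The function name `train`, the data, and the defaults for `alpha`, `max_iters` and `tol` are illustrative assumptions, not values from the article. The input range here is small on purpose. With raw heights in cm, the gradients would be much larger, and a far smaller learning rate (or rescaled inputs) would be needed.

```python
import numpy as np

def train(x, y, alpha=0.05, max_iters=10_000, tol=1e-12, seed=0):
    """Fit y_hat = w1 * x + w0 by gradient descent on the MSE.

    Stops after max_iters iterations, or earlier once the MSE changes by
    less than tol between two iterations (treated as converged).
    """
    rng = np.random.default_rng(seed)
    w1, w0 = rng.normal(size=2)           # step 1: random initial weights
    prev_cost = np.inf
    for _ in range(max_iters):
        err = y - (w1 * x + w0)           # step 2: error for each point
        cost = np.mean(err ** 2)          # MSE
        if abs(prev_cost - cost) < tol:   # loss has stopped changing
            break
        prev_cost = cost
        # Partial derivatives of the MSE with respect to w1 and w0
        grad_w1 = -2.0 * np.mean(err * x)
        grad_w0 = -2.0 * np.mean(err)
        # Step 3: move each weight a small step against its gradient
        w1 -= alpha * grad_w1
        w0 -= alpha * grad_w0
    return w1, w0

# Made-up data, roughly following y = 2x + 1 (illustrative only)
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([3.1, 4.9, 7.2, 8.8, 11.1])

w1, w0 = train(x, y)
print(f"w1 = {w1:.3f}, w0 = {w0:.3f}")
```

The result should agree closely with the closed-form least-squares line for the same data.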

Now we have the optimal values of the weights, which we can use to predict results for new data. Is our model ready? Yes, the model is just a set of weights.

Performance Metrics

Now that our model is ready, we need to measure how well it performs. There are several metrics we can use for this; one of them is the R² (R-squared) score.

R² measures the fraction of the variance in the output that the model explains. Its best possible value is 1, and the closer it is to 1, the better the fit. It usually lies between 0 and 1, but it can become negative when the model does worse than always predicting the mean.
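R² is commonly defined as $R^2 = 1 - \frac{SS_{res}}{SS_{tot}}$. Here $SS_{res}$ is the sum of squared errors, and $SS_{tot}$ is the sum of squared deviations of the actual outputs from their mean. This minimal sketch uses a hypothetical `r2_score` helper and toy values to show a perfect fit, the mean-only baseline, and a negative score.

```python
import numpy as np

def r2_score(y: np.ndarray, y_hat: np.ndarray) -> float:
    """R^2 = 1 - SS_res / SS_tot."""
    ss_res = np.sum((y - y_hat) ** 2)        # variation the model misses
    ss_tot = np.sum((y - np.mean(y)) ** 2)   # total variation around the mean
    return float(1.0 - ss_res / ss_tot)

y = np.array([3.0, 5.0, 7.0, 9.0])
print(r2_score(y, y))                          # perfect predictions → 1.0
print(r2_score(y, np.full_like(y, y.mean())))  # always the mean → 0.0
print(r2_score(y, y[::-1]))                    # worse than the mean → -3.0
```

scikit-learn provides the same metric as `sklearn.metrics.r2_score`.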

Regularization

Regularization is a technique used in machine learning to prevent overfitting by penalizing complex models.

It adds a penalty to the loss function for having large weights (coefficients).
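To make "adding a penalty" concrete, the function below adds either an L1 or an L2 term to the MSE. Several details are assumptions for illustration: the name `regularized_mse`, the strength parameter `lam` (often written λ), and leaving the intercept unpenalized. Textbooks and libraries also scale these terms differently.

```python
import numpy as np

def regularized_mse(X, y, w0, w, lam, penalty="l2"):
    """MSE plus a penalty on the size of the weights (intercept w0 not penalized).

    penalty="l1" adds lam * sum(|w|)    (the Lasso-style penalty)
    penalty="l2" adds lam * sum(w**2)   (the Ridge-style penalty)
    """
    mse = np.mean((y - (w0 + X @ w)) ** 2)
    if penalty == "l1":
        return mse + lam * np.sum(np.abs(w))
    if penalty == "l2":
        return mse + lam * np.sum(w ** 2)
    raise ValueError("penalty must be 'l1' or 'l2'")

# Toy case where the predictions are perfect, so only the penalty remains
X = np.array([[1.0, 0.0], [0.0, 1.0]])
y = np.array([1.0, 2.0])
w = np.array([1.0, 2.0])
print(regularized_mse(X, y, 0.0, w, lam=0.5, penalty="l1"))
print(regularized_mse(X, y, 0.0, w, lam=0.5, penalty="l2"))
```

Larger weights raise the loss even when the fit is perfect, so training is pushed toward smaller weights.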

Lasso Regression:

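- Uses an L1 penalty (the sum of the absolute values of the weights), which can shrink some weights to exactly zero.

- Used for feature selection (each weight belongs to one feature, so a zero weight drops that feature).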

Ridge Regression:

- Uses an L2 penalty (the sum of the squared weights), which shrinks the weights toward zero but usually does not make them exactly zero.

- Helps avoid overfitting.
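The difference between the two shows up on made-up data where only two of three features matter. This sketch makes three assumptions: there is no intercept, because the data are generated with mean zero; Ridge is solved in closed form, $w = (X^\top X + \lambda I)^{-1} X^\top y$; and Lasso is solved with proximal gradient descent (ISTA), a swapped-in solver chosen because it fits in a few lines. The λ values are arbitrary, and the two objectives are scaled differently, so their λ values are not directly comparable.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100
X = rng.normal(size=(n, 3))
# Made-up target: only features 0 and 1 matter; feature 2 contributes nothing
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 0.0 * X[:, 2] + rng.normal(scale=0.5, size=n)

def ridge(X, y, lam):
    """Closed-form Ridge: minimize ||y - Xw||^2 + lam * ||w||^2 (no intercept)."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)

def soft_threshold(z, t):
    """Shrink each entry toward zero by t; entries smaller than t become exactly 0."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def lasso(X, y, lam, iters=5000):
    """Lasso via ISTA: minimize (1/(2n)) * ||y - Xw||^2 + lam * ||w||_1 (no intercept)."""
    n, d = X.shape
    step = 1.0 / np.linalg.eigvalsh(X.T @ X / n).max()  # 1 / Lipschitz constant
    w = np.zeros(d)
    for _ in range(iters):
        grad = X.T @ (X @ w - y) / n                 # gradient of the squared-error part
        w = soft_threshold(w - step * grad, step * lam)
    return w

w_ols = ridge(X, y, lam=0.0)     # lam = 0 is plain least squares
w_ridge = ridge(X, y, lam=50.0)
w_lasso = lasso(X, y, lam=0.3)
print("OLS:  ", np.round(w_ols, 3))
print("Ridge:", np.round(w_ridge, 3))
print("Lasso:", np.round(w_lasso, 3))
```

Ridge pulls all three weights toward zero, while Lasso drives the weight of the irrelevant third feature to exactly zero. For real work, scikit-learn provides `Ridge` and `Lasso` in `sklearn.linear_model`; its `Lasso` uses coordinate descent rather than ISTA.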