Learn the mechanics of neural networks with a step-by-step example in R, applied to a real marketing problem, and see how it stacks up against traditional models.

Neural networks, more commonly referred to today as deep learning, seem to be everywhere right now, including marketing. They power recommendation engines, automate ad targeting, analyze sentiment, and even generate content. Neural networks are often mentioned and rarely explained.

This article takes a practical, hands-on look at what neural networks really are, how they fit into the larger framework of artificial intelligence, and how marketers can use them in meaningful ways. We’ll walk through the core concepts and build a simple neural network step by step using R. We’ll also compare the results to another machine learning model called logistic regression to see how it stacks up.

Along the way, I’ll share some of my own journey with neural networks, starting back in the early ’90s, long before they were trendy. Whether you’re looking to sharpen your data skills or just better understand the tools behind modern marketing, this post is designed to give you both insight and practical examples.

For full code and dataset, go to my GitHub repo: https://github.com/joedom99/neural-networks-in-marketing-using-r

My introduction to neural networks didn’t come from a data science course; it came from the TV show Star Trek: The Next Generation. The android “Data” had a positronic brain powered by a neural network, and being the kind of person who enjoys digging into the science behind the fiction, I looked it up. To my surprise, neural networks weren’t just sci-fi; they were real. First proposed in 1957 by Dr. Frank Rosenblatt, the “perceptron” was designed to mimic the way human neurons process information.

I revisited neural networks more seriously during graduate school in the early 1990s as part of Georgia State University’s Decision Sciences course, what we’d now call data science or analytics. After class one day, I mentioned to my professor, Dr. Alok Srivastava, that I had programming experience in C and thought some of our spreadsheet forecasting models could be automated. That’s when he asked, “What do you know about Backpropagation Neural Networks?” That conversation led us to build early neural network models with hand-coded C programs, using a now-classic reference book, Neural Networks in C++ by Adam Blum.

At the time, neural networks were still a niche concept, overshadowed soon after by the rise of the internet and caught in what many called an AI winter: a period when interest and funding in artificial intelligence cooled significantly due to limited results. Like many others, I set AI aside to focus on Internet technologies.

But now, decades later, neural networks have reemerged at the core of modern AI. The same pattern recognition techniques we explored back then are now powering product recommendations, image classification, and tools like ChatGPT. What once felt experimental is now embedded in the digital tools marketers use every day.

To understand neural networks, it helps to see where they fit in the bigger picture of Artificial Intelligence (AI). These terms often get used interchangeably, but they actually represent different layers in a hierarchy of technology.

- Artificial Intelligence (AI) is the broadest category. It refers to machines performing tasks that typically require human intelligence, such as decision-making, language understanding, or visual recognition.

- Machine Learning (ML) is a subset of AI. It involves training algorithms to improve at a task by learning from data rather than following a fixed set of rules.

- Neural Networks are one type of machine learning model. Inspired by how the human brain processes information, they’re designed to detect patterns and relationships in data, especially when those patterns are too complex for traditional models to capture.

- Deep Learning is the term used when neural networks become more layered and complex. These deep neural networks are capable of handling tasks like facial recognition, natural language processing, and image generation. Essentially, all deep learning is done using neural networks, but not all neural networks are “deep.”

- Generative AI, such as ChatGPT or image generators like DALL·E, builds on deep learning and adds models trained specifically to generate new content: text, images, audio, and beyond.

For marketers, this distinction matters. Many of the tools we use (predictive analytics, customer segmentation, content generation) fall somewhere along this spectrum. And at the center of it all, you’ll often find neural networks doing the heavy lifting behind the scenes.

At their core, neural networks are algorithms designed to recognize patterns. They’re modeled loosely after the way the human brain works, with interconnected “neurons” organized in layers that process information together.

At the heart of every neural network is a simple concept: a mathematical model called a perceptron. This model mimics how biological neurons work by taking multiple inputs, applying a set of weights to those inputs, summing them up, and passing the result through an activation function to produce an output.

This diagram shows how a single artificial neuron (or perceptron) works:

- Inputs (𝑥₁ to 𝑥ₙ) are multiplied by corresponding weights (𝑤₁ to 𝑤ₙ).

- These products are summed together (Σ), then passed through an activation function (𝜑), which determines the final output (𝑦₁).

- This process allows the model to learn relationships between inputs and outputs, adjusting weights as needed through training.
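The steps in the list above can be sketched in a few lines of base R. The input values, weights, and bias below are made-up numbers for illustration, the bias term is an addition the diagram does not show, and the logistic (sigmoid) function is just one common choice of activation.

```r
# A single perceptron: weighted sum of inputs passed through an activation.
# All numbers below are hypothetical, chosen only for illustration.
sigmoid <- function(z) 1 / (1 + exp(-z))   # logistic activation (one common choice)

perceptron <- function(x, w, b = 0) {
  z <- sum(x * w) + b   # multiply inputs by weights and sum them (the sigma step)
  sigmoid(z)            # the activation function produces the output y
}

x <- c(0.5, 0.2, 0.9)    # inputs x1..x3
w <- c(0.8, -0.4, 0.3)   # weights w1..w3
y <- perceptron(x, w, b = -0.1)
print(y)
```

Changing any weight changes the output, which is exactly the lever that training pulls.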

Now imagine stacking many of these perceptrons together in layers. That’s how a neural network is formed.

A basic neural network consists of three types of layers:

- Input Layer: Where the data enters (e.g., customer age, budget, ad duration).

- Hidden Layers: Where internal neurons process the inputs, detect patterns, and adjust weights. There can be one or many hidden layers depending on the complexity of the task.

- Output Layer: Where the model makes its prediction (e.g., probability of campaign success).
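As a rough sketch of how data flows through the three layer types, the base R snippet below pushes one hypothetical campaign through a network with three inputs, two hidden neurons, and one output. The weights are random placeholders rather than trained values, so the output is not a meaningful prediction.

```r
sigmoid <- function(z) 1 / (1 + exp(-z))

set.seed(42)                                          # reproducible placeholder weights
x <- c(age = 0.35, budget = 0.60, duration = 0.25)    # input layer (hypothetical, scaled 0-1)

W1 <- matrix(rnorm(2 * 3), nrow = 2)   # hidden layer: 2 neurons x 3 inputs
b1 <- rnorm(2)                         # one bias per hidden neuron
W2 <- matrix(rnorm(1 * 2), nrow = 1)   # output layer: 1 neuron x 2 hidden neurons
b2 <- rnorm(1)                         # bias for the output neuron

hidden <- sigmoid(W1 %*% x + b1)       # hidden layer activations (2 x 1)
output <- sigmoid(W2 %*% hidden + b2)  # output layer: a value between 0 and 1
print(as.numeric(output))
```

Each layer is just a matrix multiplication followed by an activation, which is why adding layers is cheap to express but adds many more weights to learn.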

Each connection between neurons has a weight that influences how signals are passed through the network. The model learns by adjusting these weights using a process called backpropagation, which applies calculus to minimize prediction errors during training.
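To make the weight-adjustment idea concrete, here is a minimal sketch of gradient descent on a single logistic neuron, the simplest case of what backpropagation does across a whole network. The toy data, learning rate, and iteration count are arbitrary assumptions for illustration, and this is not how the neuralnet package is implemented internally.

```r
sigmoid <- function(z) 1 / (1 + exp(-z))

# Toy data: one input feature and a binary target (hypothetical values)
x <- c(0.1, 0.3, 0.5, 0.7, 0.9)
y <- c(0,   0,   1,   1,   1)

w <- 0; b <- 0   # start with zero weight and bias
lr <- 0.5        # learning rate (arbitrary choice)

# Cross-entropy error for the current weight and bias
loss <- function(w, b) {
  p <- sigmoid(w * x + b)
  -mean(y * log(p) + (1 - y) * log(1 - p))
}

loss_before <- loss(w, b)
for (i in 1:500) {
  p <- sigmoid(w * x + b)        # forward pass: current predictions
  grad_w <- mean((p - y) * x)    # derivative of the error with respect to the weight
  grad_b <- mean(p - y)          # derivative of the error with respect to the bias
  w <- w - lr * grad_w           # step against the gradient
  b <- b - lr * grad_b
}
loss_after <- loss(w, b)
cat("loss before:", loss_before, " loss after:", loss_after, "\n")
```

In a multi-layer network the same derivative calculation is chained backward through every layer, which is where the name backpropagation comes from.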

Neural networks are especially good at learning nonlinear relationships: situations where inputs interact in complex ways that can’t be captured by simple equations. This makes them well-suited for tasks like image recognition, speech processing, and customer behavior prediction.

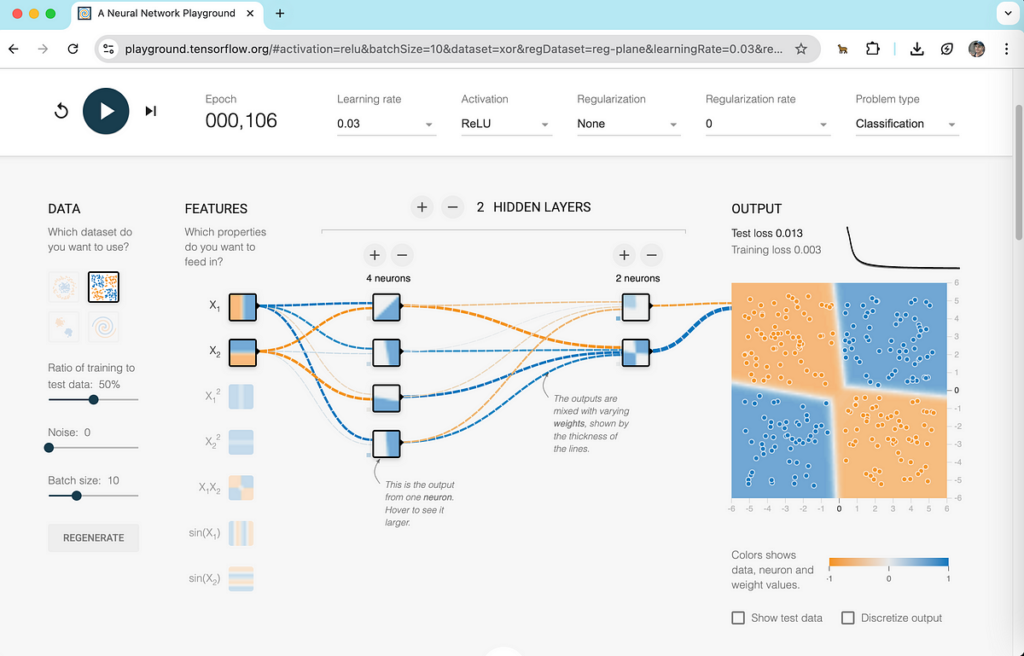

👉 Try it yourself: Google’s Neural Network Playground is a fun, interactive way to see neural networks in action.

In marketing, a neural network acts like a super-smart spreadsheet. But instead of relying on fixed formulas, it learns patterns from data. You feed it examples (past campaigns, clicks, conversions) and it starts to “figure out” what combinations of inputs lead to success.

The trade-off? Neural networks are often called black box models because it can be hard to understand exactly how they make decisions. Still, as we’ll see in this example, even a small network can deliver powerful insights when used correctly.

Neural networks might sound like something reserved for tech giants or research labs, but they’re already baked into many of the tools marketers use every day. Whether you realize it or not, if you’ve run a digital ad campaign, sent an email blast with predictive send times, or used a platform that recommends content, there’s a good chance a neural network was involved. Many of the AI uses I discussed in my article I Own a Marketing Agency. Here’s How We Use (and Don’t Use) AI Like ChatGPT in 2025 are based on neural networks.

Here are some of the most common ways neural networks are showing up in marketing today:

- Customer Segmentation and Targeting: Neural networks excel at finding patterns in large, messy datasets. They can group customers based on subtle behaviors, such as browsing habits, purchase timing, or content interactions, to help marketers create smarter audience segments.

- Predicting Customer Behavior: Whether it’s forecasting churn, predicting future purchases, or estimating lifetime value, neural networks can learn from historical data to anticipate what someone might do next. These predictions can drive everything from email personalization to retention strategies.

- Personalized Recommendations: Recommendation engines like the ones used by Amazon, Netflix, or Spotify are powered by neural networks. In marketing, similar models can be used to suggest products, services, or content tailored to each user, improving relevance and boosting conversions.

- Image and Video Recognition: Visual content is everywhere in marketing, and neural networks are behind much of the image recognition technology used to classify, tag, or analyze that content. For example, you might use a model to automatically identify brand logos or detect which social posts feature your products.

- Sentiment Analysis and Social Listening: Neural networks can also process language, helping brands understand the mood or emotion behind customer reviews, support tickets, or social media comments. This kind of analysis helps teams respond faster and with more context.

- Optimizing Ad Performance: Ad platforms use deep learning models to predict click-through rates, bid on impressions in real time, and dynamically adjust creative. While you don’t see the neural network itself, it’s often optimizing things behind the scenes.

These aren’t just theoretical use cases. They’re things marketers are doing today, often without needing to build the models themselves. But as we’ll see in the next sections, understanding how these models work (and even building one yourself) gives you a serious advantage when it comes to interpreting results, troubleshooting performance, or creating something custom for your own campaigns.

Neural networks are already behind the scenes in many of the marketing platforms we rely on. You may not always get to choose whether to use one, because they’re often built into the tools. But in some cases, especially when building custom models, you do have a choice. In a previous article, for example, we built a recommender system using collaborative filtering, a completely different approach that doesn’t rely on neural networks. We could have used one, but it wasn’t necessary.

That’s the point of this section: understanding the strengths and trade-offs of neural networks helps you decide when they’re worth building yourself, and when a simpler or more transparent model might be a better fit.

✅ What Neural Networks Are Good At

- Finding complex patterns: Neural networks are great at handling messy, nonlinear relationships between inputs, especially when there are lots of variables involved.

- Scaling with big data: They shine in environments where large amounts of data are flowing in and need to be processed or predicted quickly, like in e-commerce or programmatic ad bidding.

- Delivering high predictive accuracy: With enough data and proper tuning, neural networks often outperform traditional models on classification and prediction tasks.

- Working with unstructured data: Unlike models that only work on numbers and categories, neural networks can be trained on images, audio, text, and more, making them versatile across many marketing applications.

⚠️ When a Neural Network Might Not Be the Right Tool

- You need to explain the results: Neural networks can be a “black box,” which makes them harder to interpret. If you need to justify results to clients, stakeholders, or regulators, a more transparent model may be better.

- Your dataset is small or simple: Neural networks usually need a fair amount of data to train effectively. Simpler models like logistic regression or decision trees often work just as well, and are easier to manage, on smaller datasets.

- You’re short on time or computing power: Training and tuning a neural network takes more time and resources than running a quick regression or clustering model. If turnaround time is critical, that could be a dealbreaker.

- You risk overfitting: Without careful validation, neural networks can learn patterns too well, memorizing the training data instead of generalizing. This makes model performance look great on the training data but fail in real-world use.

In short, neural networks are a powerful option when the problem is complex, the data is rich, and performance is a priority. But they’re not always necessary. Sometimes a simpler model will give you what you need faster, with fewer headaches. Let’s explore an example.

Let’s imagine you work at a small marketing agency that runs digital ad campaigns for a variety of clients. These campaigns can have different goals: some are designed to generate leads, others to drive sales, and some are just about getting a message in front of the right audience. Regardless of the specific objective, at the end of the day, each client wants to know: Was the campaign a success?

The tricky part is that success can look different from one client to the next. For one, it might be a minimum number of qualified leads. For another, it could be a return on investment that justifies the spend. In practice, businesses define success based on a mix of metrics: conversion rates, revenue, engagement, and cost-efficiency. For this exercise, we’re going to simplify things a bit while still capturing the business logic behind the scenes.

In our example, a campaign will be considered successful if it generates estimated revenue that’s at least 1.5 times the budget. This rule of thumb is a stand-in for a client-defined performance goal and reflects the idea that a good campaign should deliver more than it costs. We’ll call this our Success variable, set to 1 if the rule is met and 0 otherwise.
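As a small illustration, the rule can be written as a single ifelse() call in base R. The three rows below reuse the budget and revenue values from the first three rows of the head() output shown in Step 2; the data frame name campaigns is just a placeholder.

```r
# First three campaigns from the head() output in Step 2
campaigns <- data.frame(
  Budget           = c(960, 3872, 3192),
  EstimatedRevenue = c(3171.12, 4909.19, 7864.67)
)

# Success = 1 when revenue is at least 1.5 times the budget, otherwise 0
campaigns$Success <- ifelse(campaigns$EstimatedRevenue >= 1.5 * campaigns$Budget, 1, 0)
print(campaigns)
```

The second campaign falls short because 4,909.19 is below 1.5 × 3,872 = 5,808, which matches the Success values listed in the dataset preview.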

To make things concrete, we’ve created a small synthetic dataset that includes variables you’d typically track for a digital campaign. You can download this dataset from GitHub.

- Budget: Total spend allocated to the campaign

- Platform: The ad platform used (e.g., Facebook, Google, LinkedIn)

- AudienceSize: Number of people targeted

- Duration: Campaign length in days

- EstimatedRevenue: Projected revenue generated. We’re going to leave this out of the model because we probably wouldn’t have an accurate number here ahead of time.

- Success: A binary target: 1 = success, 0 = not successful. We’d have some indication of the revenue (and other success metrics), so this is included in the model and is actually what we’re trying to determine.

At 10,000 rows, this dataset is small enough to work with in R but realistic enough to demonstrate how a neural network processes input variables to learn patterns and make predictions. We’ll start by building a neural network in R, then compare its performance to a logistic regression model, giving us both predictive power and interpretability.

We’ll now walk through the R code used to build and evaluate a neural network for predicting the marketing campaign success described in the previous section. There are several ways to implement a neural network in R, but we’ve chosen to use the powerful neuralnet package.

You can follow along using the code and dataset from my GitHub repo.

🧩 Step 1: Load Required Libraries

We start by loading the R packages needed for data manipulation, modeling, and preprocessing. If you haven’t installed these packages before, you can uncomment the install.packages() lines to install them.

```r
# -------------------------------------------------------
# STEP 1: Load required libraries
# -------------------------------------------------------
# Only run install.packages() once if not already installed
# install.packages("tidyverse")
# install.packages("readr")
# install.packages("neuralnet")
# install.packages("fastDummies")

library(tidyverse)    # Load core data manipulation and visualization packages
library(readr)        # Load functions for fast CSV file reading
library(neuralnet)    # Load the package for training and visualizing neural networks
library(fastDummies)  # Load helper functions to create one-hot encoded dummy variables
```

These packages set us up to read the data, transform it into a usable format, and build a neural network model with minimal setup.

📁 Step 2: Load and Preview the Dataset

The dataset consists of 10,000 synthetic marketing campaigns. Each row represents a digital campaign with information like budget, platform, audience size, duration, estimated revenue, and whether or not it was considered a success.

Let’s load it into R and take a quick look at the first few rows and the structure:

```r
# -------------------------------------------------------
# STEP 2: Load and preview the dataset
# -------------------------------------------------------
# Load your synthetic 10,000-row marketing campaign dataset
# NOTE: the file name below is a placeholder; use the CSV from the GitHub repo
data <- read_csv("marketing_campaigns.csv")

# Quickly check structure and data types
head(data)
glimpse(data)
```

Running these commands gives us a preview of the data and confirms that all columns loaded correctly. This step also helps us verify that the dataset includes both numeric and categorical variables, which we’ll need to preprocess before modeling.

```
# A tibble: 6 × 6
  Budget Platform  AudienceSize Duration EstimatedRevenue Success
   <dbl> <chr>            <dbl>    <dbl>            <dbl>   <dbl>
1    960 Google           81224       21            3171.       1
2   3872 Instagram        24457       39            4909.       0
3   3192 Instagram        71572       44            7865.       1
4    566 LinkedIn         30960       26            1479.       1
5   4526 TikTok           88695       33            7284.       1
6   3544 Google            8910       14            7440.       1
```

The output above is produced by the head() function, which displays the first six rows of the dataset. It gives us a quick look at the structure and values we’ll be working with, and it is a helpful checkpoint to confirm the data loaded as expected.

```
Rows: 10,000
Columns: 6
$ Budget           <dbl> 960, 3872, 3192, 566, 4526, 3544, 3271, 3019, 230, 17…
$ Platform         <chr> "Google", "Instagram", "Instagram", "LinkedIn", "TikT…
$ AudienceSize     <dbl> 81224, 24457, 71572, 30960, 88695, 8910, 3693, 16858,…
$ Duration         <dbl> 21, 39, 44, 26, 33, 14, 22, 39, 20, 11, 34, 23, 27, 1…
$ EstimatedRevenue <dbl> 3171.12, 4909.19, 7864.67, 1479.04, 7284.10, 7439.58,…
$ Success          <dbl> 1, 0, 1, 1, 1, 1, 1, 0, 1, 0, 1, 1, 1, 1, 0, 1, 0, 1,…
```

The glimpse() function (used to produce the output above) provides a compact summary of the entire dataset. It shows us how many rows and columns there are (10,000 and 6, respectively), along with each column’s name, data type, and a sample of values. This confirms that we’re working with the right types of data: numeric values in every column except Platform, which holds text.

📊 Step 3: Explore Summary Statistics

Before training a model, it’s a good idea to explore the dataset more formally. Summary statistics give us a sense of scale, variation, and overall patterns.

The following code calculates:

- Overall averages and standard deviations for numeric fields

- The overall campaign success rate

- A breakdown of averages and success rates by advertising platform

```r
# -------------------------------------------------------
# STEP 3: Generate summary statistics
# -------------------------------------------------------
# Overall stats for numeric variables
summary_stats <- data %>%
  summarise(
    Count = n(),
    Budget_Mean = mean(Budget),
    Budget_SD = sd(Budget),
    AudienceSize_Mean = mean(AudienceSize),
    AudienceSize_SD = sd(AudienceSize),
    Duration_Mean = mean(Duration),
    Duration_SD = sd(Duration),
    Revenue_Mean = mean(EstimatedRevenue),
    Revenue_SD = sd(EstimatedRevenue),
    Success_Rate = mean(Success)
  )

print("=== Overall Summary Statistics ===")
print(summary_stats)

# Grouped summary by advertising platform
success_by_platform <- data %>%
  group_by(Platform) %>%
  summarise(
    Count = n(),
    Avg_Budget = mean(Budget),
    Avg_Duration = mean(Duration),
    Avg_Audience = mean(AudienceSize),
    Avg_Revenue = mean(EstimatedRevenue),
    Success_Rate = mean(Success)
  )

print("=== Summary by Platform ===")
print(success_by_platform)
```

```
=== Overall Summary Statistics ===
# A tibble: 1 × 10
  Count Budget_Mean Budget_SD AudienceSize_Mean AudienceSize_SD Duration_Mean
1 10000       2570.     1410.            50123.          28848.          25.1
```

The output above confirms that we’re working with a 10,000-row dataset based on realistic small business marketing campaigns. The average campaign budget is just over 2,500, with a wide spread (SD = 1,410), and campaigns typically target around 50,000 audience members. On average, campaigns run for 25 days. These statistics provide useful context for modeling, especially when thinking about which inputs might influence whether a campaign is successful.

```
=== Summary by Platform ===
# A tibble: 5 × 7
  Platform  Count Avg_Budget Avg_Duration Avg_Audience Avg_Revenue Success_Rate
1 Facebook   1947      2518.         25.1       50116.       5676.        0.880
2 Google     1997      2579.         25.0       50245.       5734.        0.857
3 Instagram  2028      2596.         24.9       51100.       5818.        0.867
4 LinkedIn   1970      2603.         24.9       50050.       5776.        0.860
5 TikTok     2058      2551.         25.4       49119.       5725.        0.864
```

The breakdown above shows that the campaigns are fairly evenly distributed across platforms. Average budgets, durations, and audience sizes are all in a similar range, which suggests a balanced dataset. While the differences are subtle, we can see that Facebook has the highest success rate at 88%, while Google has the lowest at around 86%. These small differences might seem minor to us, but to a neural network, they could represent learnable patterns that influence prediction accuracy.

Now that we’ve explored the dataset and understand the range of inputs across platforms, we’re ready to start preparing the data for modeling. The first step in that process is converting the Platform column into a format that a neural network can understand.

🧮 Step 4: One-Hot Encode the Platform Column

Neural networks can only work with numeric input, so we need to convert the Platform column, which is categorical, into a numerical format. One of the best ways to do this is one-hot encoding, which creates a new column for each platform (e.g., Platform_Google, Platform_TikTok, etc.).

We’ll omit one platform to act as the baseline. This avoids redundancy and multicollinearity in our model.

```r
# -------------------------------------------------------
# STEP 4: One-hot encode the categorical 'Platform' column
# -------------------------------------------------------
# Convert 'Platform' into binary columns for each platform (minus one for baseline)
data_encoded <- dummy_cols(data,
                           select_columns = "Platform",
                           remove_first_dummy = TRUE,
                           remove_selected_columns = TRUE)
```

After one-hot encoding with the dummy_cols() function from the fastDummies package, the original Platform column has been replaced with four new binary columns: Platform_Google, Platform_Instagram, Platform_LinkedIn, and Platform_TikTok. A value of 1 indicates that the campaign was run on that platform, and 0 means it wasn’t.

We intentionally omit one platform (in this case, Facebook) to serve as the baseline. This avoids redundant information and lets the model compare each platform against the omitted category.

The dummy_cols() function automates this process, quickly generating these new binary columns and removing the original categorical one. This is the essence of one-hot encoding: representing a single categorical variable as multiple binary flags so that machine learning models can process them numerically.
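To see what the encoding does without any extra packages, here is a sketch that swaps in base R’s model.matrix() in place of dummy_cols(). The five-row data frame is a hypothetical stand-in for the real dataset, and the Platform_ column names are set by hand to mirror the ones described above.

```r
# Toy data frame with a categorical Platform column (hypothetical rows)
toy <- data.frame(
  Budget   = c(960, 3872, 566, 4526, 1200),
  Platform = c("Google", "Instagram", "LinkedIn", "TikTok", "Facebook")
)

# model.matrix() expands the factor into 0/1 columns and treats the first
# level (Facebook, alphabetically) as the baseline
platform_factor <- factor(toy$Platform)
dummies <- model.matrix(~ platform_factor)[, -1]   # drop the intercept column
colnames(dummies) <- paste0("Platform_", levels(platform_factor)[-1])

encoded <- cbind(toy["Budget"], dummies)
print(encoded)
```

The Facebook row ends up with zeros in all four platform columns, which is how the baseline category is represented.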

Now that our categorical data is properly formatted, the next step is to normalize the numeric columns so that each feature contributes on a similar scale during training.

📏 Step 5: Normalize Numeric Inputs

Neural networks are sensitive to the scale of numeric input. Features like Budget or AudienceSize might have very different ranges, which can throw off the training process. To fix that, we normalize all numeric inputs to a common scale between 0 and 1. This ensures that each feature contributes proportionally during training.

```r
# -------------------------------------------------------
# STEP 5: Normalize numeric input variables
# -------------------------------------------------------
# Normalize inputs to a 0-1 scale to help the neural network train efficiently
data_scaled <- data_encoded %>%
  mutate(across(c(Budget, AudienceSize, Duration, EstimatedRevenue),
                ~ (. - min(.)) / (max(.) - min(.))))
```

After normalization, the values in the numeric columns all fall between 0 and 1.

Budget, AudienceSize, Duration, and EstimatedRevenue are now all expressed as relative values. This makes it easier for the neural network to learn patterns without being biased toward features with larger numeric scales. Categorical columns (Platform_*) remain untouched, since they’re already binary and don’t require scaling.
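The min-max formula is easy to check by hand. The sketch below applies it to the six Budget values from the head() output in Step 2; in the real pipeline the minimum and maximum come from all 10,000 rows, so the actual scaled values will differ. The helper name min_max is an illustrative choice.

```r
# Min-max scaling: maps the smallest value to 0 and the largest to 1
min_max <- function(v) (v - min(v)) / (max(v) - min(v))

budget <- c(960, 3872, 3192, 566, 4526, 3544)   # first six Budget values from head()
scaled <- min_max(budget)
print(round(scaled, 3))
```

One caveat worth knowing: a column whose values are all identical would cause a division by zero, so it is worth checking for constant columns before scaling.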

Now that our data is encoded and scaled, we’re ready to split it into training and testing sets so we can evaluate how well the model performs.

🧪 Step 6: Split into Train/Test Sets

Before training the model, we need to divide the data into two parts:

- Training set (80%): used to train the neural network.

- Test set (20%): used to evaluate how well the model performs on unseen data.

This is a standard practice in machine learning to detect overfitting and to simulate how the model would behave in a real-world deployment.

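```r
# -------------------------------------------------------
# STEP 6: Split the data into training and test sets
# -------------------------------------------------------
set.seed(123)

# Randomly assign 80% of the rows to training; the remaining 20% form the test set
train_indices <- sample(1:nrow(data_scaled), size = 0.8 * nrow(data_scaled))
train_data <- data_scaled[train_indices, ]
test_data  <- data_scaled[-train_indices, ]
```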

Setting the seed ensures that your results are reproducible: you’ll get the same split every time you run the code. At this point, we have two clean datasets: one for training the neural network and another for testing how well it generalizes to new campaigns.
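The effect of the seed is easy to verify on a small stand-in dataset. In this sketch, the 100-row data frame and the helper split_80_20() are hypothetical names introduced only for illustration.

```r
# Hypothetical 100-row stand-in for the prepared dataset
toy <- data.frame(id = 1:100)

split_80_20 <- function(df, seed = 123) {
  set.seed(seed)   # fix the random draw so the split is repeatable
  idx <- sample(seq_len(nrow(df)), size = floor(0.8 * nrow(df)))
  list(train = df[idx, , drop = FALSE],
       test  = df[-idx, , drop = FALSE])
}

first  <- split_80_20(toy)
second <- split_80_20(toy)

print(nrow(first$train))                               # rows in the training set
print(nrow(first$test))                                # rows in the test set
print(identical(first$train$id, second$train$id))      # same seed, same split
```

Because the test rows are selected with negative indexing, no campaign can appear in both sets.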

Now we’re ready to define the neural network model and start training it using the training set.

🧠 Step 7: Define the Model Formula

In this step, we define the formula that tells the neural network which variables to use as inputs and what to predict. Our target variable is Success, and we’re using three numeric variables (Budget, AudienceSize, Duration) along with the four one-hot encoded platform columns.

```r
# -------------------------------------------------------
# STEP 7: Define the neural network model formula
# -------------------------------------------------------
# Note: EstimatedRevenue is excluded
formula <- as.formula("Success ~ Budget + AudienceSize + Duration +
                       Platform_Google + Platform_Instagram + Platform_LinkedIn + Platform_TikTok")
```

When I first created this model, I included EstimatedRevenue as one of the inputs. It made intuitive sense at first, but the model quickly overfit and achieved nearly perfect accuracy. That’s because Success is directly based on revenue, so including it gave the model an unfair advantage.

In real-world scenarios, you typically wouldn’t know the revenue before the campaign runs, so it’s best to exclude it from the inputs. If you ever want to test it for comparison or educational purposes, just add EstimatedRevenue back into the formula.
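If you want to switch inputs in and out without retyping the formula string, one option is base R’s reformulate(), which builds a formula from a character vector. This is an alternative sketch rather than the code used in the original program, and the names predictors, model_formula, and leaky_formula are illustrative.

```r
# Input columns used by the model (EstimatedRevenue deliberately left out)
predictors <- c("Budget", "AudienceSize", "Duration",
                "Platform_Google", "Platform_Instagram",
                "Platform_LinkedIn", "Platform_TikTok")

# reformulate() turns the vector into: Success ~ Budget + AudienceSize + ...
model_formula <- reformulate(predictors, response = "Success")
print(model_formula)

# For the leakage experiment only: add EstimatedRevenue back in
leaky_formula <- reformulate(c(predictors, "EstimatedRevenue"), response = "Success")
print(leaky_formula)
```

Keeping the predictor list in one vector also makes it easy to confirm at a glance that the leaky column is not among the inputs.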

Now that the model knows which variables to work with, we can train the neural network.

🤖 Step 8: Train the Neural Network

Now it’s time to train our neural network using the neuralnet() function. In this example, we use a simple architecture: one hidden layer with three neurons and a logistic (binary) output.

```r
# -------------------------------------------------------
# STEP 8: Train the neural network
# -------------------------------------------------------
# Using 3 hidden neurons and a binary (logistic) output
nn_model <- neuralnet(formula,
                      data = train_data,
                      hidden = 3,
                      linear.output = FALSE,
                      stepmax = 1e6)
```

Let’s break down what each parameter does:

- formula: This defines the structure of the model: what we’re predicting (Success) and which features we’re using as inputs.

- data: The training dataset the model will learn from.

- hidden = 3: This specifies one hidden layer with 3 neurons. You can change this to a vector (e.g., c(4, 2)) for multiple hidden layers.

- linear.output = FALSE: Since we’re predicting a binary outcome (0 or 1), we set this to FALSE to apply a logistic activation function to the output layer.

- stepmax = 1e6: This sets the maximum number of steps (iterations) the training process can take. It keeps training from running indefinitely if convergence is slow.
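To get a feel for how the hidden argument affects model size, the sketch below counts the weights in a fully connected network, including one intercept per neuron. The helper count_weights() is a hypothetical function written for this illustration, not part of the neuralnet package.

```r
# Count the weights (including one intercept per neuron) in a fully
# connected network with the given layer sizes
count_weights <- function(n_inputs, hidden, n_outputs = 1) {
  sizes <- c(n_inputs, hidden, n_outputs)
  # each layer has (previous layer size + 1 intercept) * (its own size) weights
  sum((head(sizes, -1) + 1) * tail(sizes, -1))
}

print(count_weights(7, hidden = 3))         # 7 inputs -> 3 hidden -> 1 output
print(count_weights(7, hidden = c(4, 2)))   # 7 inputs -> 4 -> 2 -> 1 output
```

With our 7 inputs and hidden = 3, the count is (7 + 1) × 3 + (3 + 1) × 1 = 28, which matches the number of weight rows in the result matrix shown later in this step.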

The model works by adjusting the internal weights to minimize prediction error on the training data. Under the hood, this process uses backpropagation, a technique that updates weights by using calculus to compute how much each neuron contributed to the overall error. Once the model finishes training, we can visualize its structure to better understand what’s going on inside. (Note: really large models can take a long time to run.)

Although I didn’t include this in the original program, you can inspect the internal structure and weights of the network using:

```r
nn_model$result.matrix
```

This output provides an in depth have a look at the ultimate coaching state by displaying the end result matrix as follows:

> nn_model$end result.matrix

                                        [,1]
error                           3.972053e+02
reached.threshold               9.886200e-03
steps                           3.290000e+03
Intercept.to.1layhid1          -5.510213e+00
Budget.to.1layhid1              1.719789e+01
AudienceSize.to.1layhid1        2.405900e+00
Duration.to.1layhid1            2.305527e+00
Platform_Google.to.1layhid1    -1.043216e+00
Platform_Instagram.to.1layhid1  1.138820e+00
Platform_LinkedIn.to.1layhid1   1.771528e+00
Platform_TikTok.to.1layhid1     1.684564e+00
Intercept.to.1layhid2           1.658392e+00
Budget.to.1layhid2              6.855963e-01
AudienceSize.to.1layhid2       -5.861810e+00
Duration.to.1layhid2           -4.882796e-01
Platform_Google.to.1layhid2     6.385049e-01
Platform_Instagram.to.1layhid2  2.040862e-01
Platform_LinkedIn.to.1layhid2   5.573260e-01
Platform_TikTok.to.1layhid2     2.059042e-01
Intercept.to.1layhid3          -1.168566e+00
Budget.to.1layhid3             -8.427645e+00
AudienceSize.to.1layhid3        4.578910e+00
Duration.to.1layhid3           -2.181978e-01
Platform_Google.to.1layhid3     6.396035e-03
Platform_Instagram.to.1layhid3 -4.211105e-01
Platform_LinkedIn.to.1layhid3  -3.692508e-01
Platform_TikTok.to.1layhid3    -4.161335e-01
Intercept.to.Success           -1.310614e-01
1layhid1.to.Success             2.336759e+00
1layhid2.to.Success            -1.982403e+00
1layhid3.to.Success             2.198383e+01

- error shows the final error after training. Lower is better.

- reached.threshold is the largest absolute partial derivative of the error function when training stopped. Here it is about 0.0099, just under neuralnet’s default threshold of 0.01, which confirms that training stopped because the model converged.

- steps tells us how many iterations it took to train the model: in this case, 3,290. That’s well below the stepmax of 1,000,000 we set earlier, which means the model was able to converge efficiently without hitting the upper limit.

- Below those are the actual weights connecting each input to the hidden neurons and each hidden neuron to the output. These are what the model “learned” from the data.

For example, take this weight from the output above:

Budget.to.1layhid1 = 17.20: This means the normalized Budget has a strong positive influence on the first hidden neuron. In other words, as budget increases (on a scale from 0 to 1), it increases the activation of this neuron, which in turn can increase the likelihood of the model predicting a campaign as successful.

Now compare that to:

Budget.to.1layhid3 = -8.43: This shows that the same input, Budget, has a negative influence on another neuron. That’s the beauty of neural networks: they don’t rely on just one interpretation. Different neurons capture different patterns in the data.

We also see input from platform indicators, such as:

Platform_TikTok.to.1layhid1 = 1.68: This means campaigns run on TikTok are nudging the first hidden neuron positively. This could reflect subtle correlations in the data that the model is picking up.

These weights represent the “learning” that takes place during training. While we don’t interpret each one manually in practice, they do help demystify what’s happening behind the scenes. That said, this complexity is exactly why a neural network is often considered a black box: it’s difficult to fully explain how all the weights interact to produce a prediction.
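To see how these weights combine into a single prediction, the sketch below runs a manual forward pass in base R using the weights from the result matrix (rounded to four decimals). It assumes logistic activations in both the hidden and output layers, which matches neuralnet’s defaults with linear.output = FALSE. The function name predict_success and the example campaign values are hypothetical, and an all-zero platform vector is assumed to stand for the platform left out of the one-hot encoding.

```r
# Weights copied from the result matrix above (rounded to 4 decimals).
# Row order: Intercept, Budget, AudienceSize, Duration,
#            Platform_Google, Platform_Instagram, Platform_LinkedIn, Platform_TikTok
sigmoid <- function(z) 1 / (1 + exp(-z))

W_hidden <- cbind(
  h1 = c(-5.5102, 17.1979,  2.4059,  2.3055, -1.0432,  1.1388,  1.7715,  1.6846),
  h2 = c( 1.6584,  0.6856, -5.8618, -0.4883,  0.6385,  0.2041,  0.5573,  0.2059),
  h3 = c(-1.1686, -8.4276,  4.5789, -0.2182,  0.0064, -0.4211, -0.3693, -0.4161)
)
w_out <- c(intercept = -0.1311, h1 = 2.3368, h2 = -1.9824, h3 = 21.9838)

# Hypothetical helper: inputs are assumed to be normalized to the 0-1 range
predict_success <- function(budget, audience, duration, platform = c(0, 0, 0, 0)) {
  x <- c(1, budget, audience, duration, platform)   # leading 1 multiplies the intercept
  hidden <- sigmoid(as.vector(x %*% W_hidden))      # activations of the 3 hidden neurons
  sigmoid(sum(w_out * c(1, hidden)))                # predicted probability of success
}

# Hypothetical campaign: mid-range budget, audience, and duration, run on TikTok
p_tiktok <- predict_success(0.5, 0.5, 0.5, platform = c(0, 0, 0, 1))

# Same budget and duration on the baseline platform, small vs. large audience
p_small <- predict_success(0.5, 0.1, 0.5)
p_large <- predict_success(0.5, 0.9, 0.5)
cat("Small audience:", round(p_small, 3), " Large audience:", round(p_large, 3), "\n")
```

Because the hidden neurons interact, the effect of changing one input depends on the values of the others, which is part of why individual weights are hard to read in isolation.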

💡 Sidebar: Neural Networks and Generative AI

While this blog post focuses on a simple neural network for marketing predictions, the same fundamental principles are used in tools like ChatGPT, DALL·E, and other generative AI models.

These models are powered by deep neural networks, often with millions or even billions of parameters, trained to recognize patterns in text, images, and more. Just like in our example, they learn by adjusting weights between layers of neurons. But at that scale, the interactions become so complex that even developers can’t always explain exactly why the model generated a particular output.

That’s why these systems are often called black boxes: powerful and effective, but hard to interpret. The model may give you the right answer, but understanding the “why” behind it is part of an ongoing challenge in the field of explainable AI.

🧬 Step 9: Visualize the Network

Since our neural network is small (just three hidden neurons and a handful of inputs), we can actually visualize the model in a reasonably meaningful way. That isn’t practical with larger, more complex networks. In our case it gives us a helpful peek into what’s happening behind the scenes.

# -------------------------------------------------------
# STEP 9: Visualize the trained network
# -------------------------------------------------------
plot(nn_model)

Running this command produces the following diagram:

Here’s what you’re seeing in the neural network pictured above:

- Left side: The input layer contains the seven input features (3 numeric and 4 one-hot encoded platforms).

- Middle: Three hidden neurons make up the single hidden layer.

- Right side: The output neuron labeled Success, which makes the final prediction.

- Numbers on arrows: These are the learned weights that represent how strongly one node influences another.

- Blue arrows: Bias terms, the constant offsets (the Intercept rows in the result matrix) that the model adds at each layer to improve flexibility.

For example, the arrow from Budget to the first hidden neuron shows a large positive weight (~17.20), meaning Budget heavily influences that neuron’s activation. You can also see how each hidden neuron contributes differently to the final prediction.
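As a quick sanity check on the diagram, the number of arrows can be worked out from the layer sizes alone. The sketch below assumes a fully connected network with one bias per receiving neuron; count_weights is a hypothetical helper, not part of neuralnet.

```r
# Count the trainable weights in a fully connected network.
# layer_sizes is a hypothetical argument: inputs, hidden layer(s), output.
count_weights <- function(layer_sizes) {
  n_in  <- head(layer_sizes, -1)   # neurons feeding each layer
  n_out <- tail(layer_sizes, -1)   # neurons in each receiving layer
  sum((n_in + 1) * n_out)          # +1 for the bias (intercept) of each receiving neuron
}

n_weights <- count_weights(c(7, 3, 1))   # 7 inputs, 3 hidden neurons, 1 output
cat("Weights in this network:", n_weights, "\n")   # prints 28
```

That total of 28 matches the number of weight rows in the result matrix: 8 per hidden neuron (7 inputs plus an intercept) and 4 feeding the output.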

Keep in mind that these weights are all the result of training: the model learned them automatically based on patterns in the data. Next, we’ll use the test data to evaluate how well the model above performs in practice.

📈 Step 10: Make Predictions on the Test Set

Now that the model is trained, it’s time to see how well it performs on unseen data: the test set we set aside earlier. We’ll pass in just the input features and compare the model’s predictions to the actual outcomes.

# -------------------------------------------------------
# STEP 10: Predict using the test set
# -------------------------------------------------------
test_inputs <- select(test_data, -Success)
predictions <- compute(nn_model, test_inputs)$net.result
predicted_class <- ifelse(predictions > 0.5, 1, 0)
actual <- test_data$Success

Here’s what each line does:

- select(test_data, -Success): Removes the target variable (Success) so we only feed inputs into the model.

- compute(nn_model, test_inputs)$net.result: Uses the trained model to generate predicted probabilities between 0 and 1.

- ifelse(predictions > 0.5, 1, 0): Converts probabilities into binary predictions (1 for predicted success, 0 otherwise).

- predicted_class: These are the model’s predictions, a vector of 1s and 0s based on the threshold of 0.5 set above. A 1 means the model predicts the campaign will be successful; a 0 means it predicts failure.

- actual: These are the true outcomes from the test set. Each value is either 1 (the campaign was actually successful) or 0 (it wasn’t). We’ll compare these against predicted_class to evaluate the model’s performance.

This step mimics how the model would be used in a real-world setting, where we don’t know in advance whether a campaign will succeed, but we want the model to make its best guess based on the available inputs. Next, we’ll evaluate these predictions to see how accurate, and useful, they really are.
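The thresholding step is easy to try in isolation. The sketch below uses a handful of made-up probabilities in place of the real compute() output and a hypothetical helper, classify, to show how the cutoff decides which campaigns are labeled as predicted successes.

```r
# Hypothetical predicted probabilities, standing in for compute(...)$net.result
probs <- c(0.92, 0.48, 0.51, 0.07, 0.65)

# Hypothetical helper: label a campaign 1 when its probability exceeds the cutoff
classify <- function(p, threshold = 0.5) ifelse(p > threshold, 1, 0)

default_classes <- classify(probs)                   # cutoff 0.5 -> 1 0 1 0 1
strict_classes  <- classify(probs, threshold = 0.7)  # cutoff 0.7 -> 1 0 0 0 0

print(default_classes)
print(strict_classes)
```

Raising the cutoff makes the model more conservative about predicting success, which generally trades recall for precision.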

✅ Step 11: Evaluate Neural Network Performance

With the predictions in hand, we can now assess how well the model performed. We’ll calculate several common classification metrics:

- Accuracy: The percentage of total predictions that were correct.

- Precision: Among the campaigns the model predicted as successful, how many actually were?

- Recall: Among the campaigns that were actually successful, how many did the model correctly identify?

- F1 Score: A harmonic mean of precision and recall that balances both metrics.

- Confusion Matrix: A breakdown of true vs. false positives and negatives.

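# -------------------------------------------------------
# STEP 11: Evaluate model performance
# -------------------------------------------------------
# Accuracy
accuracy <- mean(predicted_class == actual)
cat("Accuracy:", round(accuracy, 3), "\n")

# Confusion matrix (rows = predicted class, columns = actual class)
conf_matrix <- table(Predicted = predicted_class, Actual = actual)
print("=== Confusion Matrix ===")
print(conf_matrix)

# Precision, Recall, and F1 Score
TP <- conf_matrix["1", "1"]
TN <- conf_matrix["0", "0"]
FP <- conf_matrix["1", "0"]
FN <- conf_matrix["0", "1"]

precision <- TP / (TP + FP)
recall <- TP / (TP + FN)
f1_score <- 2 * (precision * recall) / (precision + recall)

cat("Precision:", round(precision, 3), "\n")
cat("Recall:", round(recall, 3), "\n")
cat("F1 Score:", round(f1_score, 3), "\n")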

Accuracy: 0.867
=== Confusion Matrix ===
         Actual
Predicted    0    1
        0    3    6
        1  260 1731
Precision: 0.869
Recall: 0.997
F1 Score: 0.929

The model achieved an accuracy of 0.867, which means it correctly predicted the outcome for about 87% of the 2,000 campaigns in the test set. The confusion matrix helps break that down further:

- True Positives (TP): 1,731. The model correctly predicted that these campaigns would be successful, and they were.

- False Positives (FP): 260. The model predicted success, but these campaigns actually failed. This is where the model is overconfident.

- True Negatives (TN): 3. These campaigns were correctly predicted as failures.

- False Negatives (FN): 6. These campaigns were actually successful, but the model incorrectly predicted failure.

From the confusion matrix, we can extract some important summary stats that give us a clearer picture of the model’s strengths and weaknesses.

- Precision = 0.869 → When the model predicted a campaign would succeed, it was right about 87% of the time. Precision = TP / (TP + FP).

- Recall = 0.997 → It successfully identified almost every actual success. Recall = TP / (TP + FN).

- F1 Score = 0.929 → This harmonic mean balances precision and recall, showing the model performs well overall. F1 Score = 2 * (Precision * Recall) / (Precision + Recall).
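The arithmetic behind these numbers can be reproduced directly from the four confusion matrix counts. The sketch below does that in base R and, as an extra point of reference that is not part of the original program, also computes the share of test campaigns that actually succeeded, which is the accuracy a naive “always predict success” rule would achieve.

```r
# Counts copied from the neural network confusion matrix reported above
TP <- 1731; FP <- 260; TN <- 3; FN <- 6
total <- TP + FP + TN + FN

accuracy  <- (TP + TN) / total
precision <- TP / (TP + FP)
recall    <- TP / (TP + FN)
f1_score  <- 2 * (precision * recall) / (precision + recall)

# Share of test campaigns that actually succeeded: the accuracy of a
# naive rule that predicts "success" for every campaign (added for context)
baseline_accuracy <- (TP + FN) / total

print(round(c(accuracy = accuracy, precision = precision, recall = recall,
              f1 = f1_score, baseline = baseline_accuracy), 3))
```

Because roughly 87% of the test campaigns were successes, accuracy on its own says little here; precision, recall, and the very small number of predicted failures are worth reading alongside it.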

These metrics help you understand not just whether the model is accurate, but how it’s accurate, and where it’s more likely to make errors. To put these results in context, it’s helpful to compare them against a more traditional approach.

⚖️ Step 12: Compare with Logistic Regression

To evaluate whether the neural network is actually giving us better performance, or just added complexity, we’ll compare it against a more traditional model: logistic regression.

Logistic regression is a commonly used classification algorithm that estimates the probability of a binary outcome: in this case, whether a marketing campaign will be successful (1) or not (0). It works by assigning weights to each input feature and applying a logistic (sigmoid) function to generate a probability between 0 and 1.

Unlike neural networks, logistic regression is linear, fast, and highly interpretable, making it an excellent baseline model.

# =======================================================
# STEP 12: Logistic Regression Comparison
# =======================================================
# Train logistic regression model (same formula, same inputs)
log_model <- glm(formula,
                 data = train_data,
                 family = binomial)

# View logistic regression model summary
summary(log_model)

# Predict probabilities on test set
log_probs <- predict(log_model, newdata = test_data, type = "response")

# Convert probabilities to binary class predictions
log_predicted_class <- ifelse(log_probs > 0.5, 1, 0)

# Actual values
log_actual <- test_data$Success

# Accuracy
log_accuracy <- mean(log_predicted_class == log_actual)
cat("Logistic Regression Accuracy:", round(log_accuracy, 3), "\n")

# Confusion Matrix (rows = predicted class, columns = actual class)
log_conf_matrix <- table(Predicted = log_predicted_class, Actual = log_actual)
print("=== Logistic Regression Confusion Matrix ===")
print(log_conf_matrix)

# Precision, Recall, F1
TP <- log_conf_matrix["1", "1"]
TN <- log_conf_matrix["0", "0"]
FP <- log_conf_matrix["1", "0"]
FN <- log_conf_matrix["0", "1"]

log_precision <- TP / (TP + FP)
log_recall <- TP / (TP + FN)
log_f1_score <- 2 * (log_precision * log_recall) / (log_precision + log_recall)

cat("Logistic Regression Precision:", round(log_precision, 3), "\n")
cat("Logistic Regression Recall:", round(log_recall, 3), "\n")
cat("Logistic Regression F1 Score:", round(log_f1_score, 3), "\n")

After training the logistic regression model with the glm() function, we get the following summary output. Here’s what the key parts mean:

Call:
glm(formula = formula, family = binomial, data = train_data)

Coefficients:
                   Estimate Std. Error z value Pr(>|z|)
(Intercept)         1.69731    0.13378  12.687  < 2e-16 ***
Budget             -2.20795    0.13125 -16.822  < 2e-16 ***
AudienceSize        3.58266    0.14703  24.367  < 2e-16 ***
Duration            0.25069    0.11984   2.092  0.03645 *
Platform_Google    -0.29435    0.11339  -2.596  0.00943 **
Platform_Instagram -0.08592    0.11651  -0.737  0.46084
Platform_LinkedIn  -0.20151    0.11483  -1.755  0.07929 .
Platform_TikTok    -0.12462    0.11425  -1.091  0.27537
---
Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for binomial family taken to be 1)

    Null deviance: 6328.8  on 7999  degrees of freedom
Residual deviance: 5280.5  on 7992  degrees of freedom
AIC: 5296.5

Number of Fisher Scoring iterations: 6

The first (Call) line simply shows the formula used to run the regression. This confirms the model was fit using logistic regression (binomial family) with the formula we defined earlier. Next, the coefficients are shown.

For the significance codes, the more asterisks (*), the stronger the statistical evidence that the feature is related to the outcome. For example, AudienceSize and Budget are highly significant, meaning they have a strong and reliable relationship with campaign success. Note the signs: the AudienceSize coefficient is positive (3.58), while the Budget coefficient is negative (-2.21). Some platform indicators, like Instagram and TikTok, don’t show statistically significant effects, at least not in this dataset.

While no single platform stood out, this output reinforces a key takeaway: reaching a large audience and managing your budget effectively matters more than which social media platform you choose.
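One way to read these coefficients is to plug them into the logistic function by hand. The sketch below uses the estimates from the summary output above to score a hypothetical campaign (the input values are made up and assumed to be on the same normalized 0 to 1 scale as the training data), then changes only the audience size to see how the predicted probability moves.

```r
# Coefficient estimates copied from the summary(log_model) output above
coefs <- c(intercept = 1.69731, Budget = -2.20795, AudienceSize = 3.58266,
           Duration = 0.25069, Platform_Google = -0.29435,
           Platform_Instagram = -0.08592, Platform_LinkedIn = -0.20151,
           Platform_TikTok = -0.12462)

sigmoid <- function(z) 1 / (1 + exp(-z))

# Hypothetical campaign on normalized (0 to 1) inputs, run on Google.
# The leading 1 multiplies the intercept.
campaign <- c(1, Budget = 0.5, AudienceSize = 0.5, Duration = 0.5,
              Platform_Google = 1, Platform_Instagram = 0,
              Platform_LinkedIn = 0, Platform_TikTok = 0)

p_mid_audience <- sigmoid(sum(coefs * campaign))

campaign["AudienceSize"] <- 0.9   # same campaign, larger audience
p_large_audience <- sigmoid(sum(coefs * campaign))

# Odds ratios: multiplicative change in the odds of success per one-unit increase
odds_ratios <- exp(coefs[-1])

cat("P(success), mid audience:  ", round(p_mid_audience, 3), "\n")
cat("P(success), large audience:", round(p_large_audience, 3), "\n")
print(round(odds_ratios, 3))
```

Unlike the neural network, every input here has a single coefficient with a fixed direction, which is what makes the model straightforward to interpret.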

This model confirms something most marketers already know: advertising on social media matters. Campaign success isn’t random; it’s tied to real inputs like budget, duration, and audience reach. Even if individual platforms vary, using paid digital campaigns, especially on social platforms, remains a measurable, data-driven way to influence marketing outcomes.

The model fit statistics show that adding predictors improves the model. The drop from null deviance (6328.8) to residual deviance (5280.5) indicates a better fit than an intercept-only model, and the AIC value (5296.5) provides a quick way to compare this model to others: lower is better.
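These fit statistics can be checked with a few lines of base R. The sketch below is an optional add-on rather than part of the original program: it runs a likelihood-ratio (chi-square) test on the drop in deviance and confirms how the reported AIC relates to the residual deviance for this model.

```r
# Values copied from the summary(log_model) output above
null_deviance     <- 6328.8; null_df     <- 7999
residual_deviance <- 5280.5; residual_df <- 7992

# Likelihood-ratio test: do the 7 predictors improve on an intercept-only model?
deviance_drop <- null_deviance - residual_deviance   # 1048.3
df_used       <- null_df - residual_df               # 7
p_value <- pchisq(deviance_drop, df = df_used, lower.tail = FALSE)

# With a 0/1 outcome, AIC = residual deviance + 2 * (number of estimated coefficients)
n_coefficients <- 8                                  # intercept + 7 predictors
aic <- residual_deviance + 2 * n_coefficients        # 5296.5

cat("Deviance drop:", deviance_drop, "on", df_used, "degrees of freedom\n")
cat("AIC:", aic, "\n")
```

A drop of about 1,048 on 7 degrees of freedom gives a vanishingly small p-value, so the predictors as a group clearly add information.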

Now let’s look at how the logistic regression model performed on the test data:

Logistic Regression Accuracy: 0.859
=== Logistic Regression Confusion Matrix ===
         Actual
Predicted    0    1
        0   14   33
        1  249 1704
Logistic Regression Precision: 0.873
Logistic Regression Recall: 0.981
Logistic Regression F1 Score: 0.924

These results are calculated the same way we explained earlier for the neural network: using the confusion matrix to derive precision, recall, and F1 score. The accuracy here is 85.9%, slightly lower than the neural network’s 86.7%, but still strong. Let’s compare the models side by side:
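One simple way to line the two models up is a small data frame built from the metrics already reported in this post. This is a sketch for convenience; the column names are arbitrary choices.

```r
# Metrics copied from the two result blocks reported in this post
comparison <- data.frame(
  Metric             = c("Accuracy", "Precision", "Recall", "F1 Score"),
  NeuralNetwork      = c(0.867, 0.869, 0.997, 0.929),
  LogisticRegression = c(0.859, 0.873, 0.981, 0.924)
)

# Positive values favor the neural network, negative values favor logistic regression
comparison$Difference <- comparison$NeuralNetwork - comparison$LogisticRegression

print(comparison)
```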

The neural network edges out logistic regression on accuracy and recall, meaning it’s better at identifying successful campaigns. Logistic regression slightly outperforms on precision, meaning it makes fewer false positive predictions (249 vs. 260). Overall, both models perform well, but the neural network may offer a small advantage in use cases where missing a successful campaign is more costly than flagging a few extra false positives.

When deciding which model to use, it comes down to your goals.

- Logistic regression is a great choice if you need a fast, interpretable model that clearly shows how each variable affects the outcome.

- Neural networks, on the other hand, can uncover more complex patterns and may perform better with larger or less linear datasets, especially when recall is a priority.

Either model can be improved further through feature engineering, hyperparameter tuning, or even incorporating additional data sources. This example is just the starting point, and a reminder that even simple models can provide meaningful insights.

Now that we’ve seen how both models perform in practice, let’s summarize what we’ve discovered.


Neural networks, once a niche academic topic, are now a practical tool for marketers. Even a relatively simple model with a single hidden layer was able to predict campaign success with high accuracy, demonstrating strong recall and an ability to pick up on subtle patterns in the data. While the model itself may be harder to interpret than traditional methods, the payoff is clear: neural networks offer a flexible, powerful approach for making data-driven marketing decisions.

Logistic regression, by contrast, gave us a solid and interpretable baseline. But when the stakes are higher, or when the goal is to maximize performance over simplicity, neural networks can provide that extra edge.

Whether you’re optimizing ad spend, refining audience targeting, or forecasting campaign outcomes, neural networks belong in your marketing analytics toolkit.

If you haven’t tried building a neural network yet, now is a great time. Start simple, experiment with your data, and see what insights emerge. The tools are accessible, the math is manageable, and the results might surprise you.

Blum, Adam. Neural Networks in C++: An Object-Oriented Framework for Building Connectionist Systems. Wiley, 1992.

Chapman, Chris, and Elea McDonnell Feit. R for Marketing Research and Analytics. 2nd ed., Springer, 2019, https://r-marketing.r-forge.r-project.org/

Chollet, François, with Tomasz Kalinowski and J. J. Allaire. Deep Learning with R. 2nd ed., Manning Publications, 2022.

Ciaburro, Giuseppe, and Balaji Venkateswaran. Neural Networks with R: Smart Models Using CNN, RNN, Deep Learning, and Artificial Intelligence Principles. Packt Publishing, 2017.

Domaleski, Joe. “I Own a Marketing Agency. Here’s How We Use (and Don’t Use) AI Like ChatGPT in 2025.” Marketing Data Science, 2 Feb. 2025, https://blog.marketingdatascience.ai/i-own-a-marketing-agency-heres-how-we-use-and-don-t-use-ai-like-chatgpt-in-2025-f85f40ca55d5.

Domaleski, Joe. “Reflecting Back on 45 Years of Personal Experience with Science, Technology, Engineering, and Math (STEM).” The Citizen, 30 Apr. 2024, https://thecitizen.com/2024/04/30/reflecting-back-on-45-years-of-personal-experience-with-stem/.

Domaleski, Joe. “UBCF vs. IBCF: Comparing Marketing Recommendation System Algorithms in R.” Marketing Data Science, 7 Apr. 2025, https://blog.marketingdatascience.ai/ubcf-vs-ibcf-comparing-marketing-recommendation-system-algorithms-in-r-38ff36bf05d3.

Domaleski, Joe. Neural Networks in Marketing Using R. GitHub, 2025, https://github.com/joedom99/neural-networks-in-marketing-using-r.

Fritsch, Stefan, et al. “neuralnet: Training of Neural Networks.” Version 1.44.2, 7 Feb. 2019, The Comprehensive R Archive Network (CRAN), https://CRAN.R-project.org/package=neuralnet.

James, Gareth, et al. An Introduction to Statistical Learning: With Applications in R. 2nd ed., Springer, 2021, https://www.statlearning.com/.

Kaplan, Jacob, and Benjamin Schlegel. “fastDummies: Fast Creation of Dummy (Binary) Columns and Rows from Categorical Variables.” Version 1.7.5, 20 Jan. 2025, The Comprehensive R Archive Network (CRAN), https://CRAN.R-project.org/package=fastDummies.

Khamaiseh, Samer Y., et al. Figure from “Adversarial Deep Learning: A Survey on Adversarial Attacks and Defense Mechanisms on Image Classification.” IEEE Access, vol. PP, no. 99, Jan. 2022, pp. 1–1. https://doi.org/10.1109/ACCESS.2022.3208131.

Pawlus, Michael, and Rodger Devine. Hands-On Deep Learning with R: A Practical Guide to Designing, Building, and Improving Neural Network Models Using R. Packt Publishing, 2020.

Rosenblatt, Frank. “The Perceptron: A Probabilistic Model for Information Storage and Organization in the Brain.” Psychological Review, vol. 65, no. 6, 1958, pp. 386–408, https://www.ling.upenn.edu/courses/cogs501/Rosenblatt1958.pdf

Smilkov, Daniel, et al. TensorFlow Playground. Google, https://playground.tensorflow.org/ Accessed: April 2025.