Hey there! I’m Pankaj Chouhan, a data enthusiast who spends way too much time tinkering with Python and datasets. If you’ve ever wondered how to make sense of a messy spreadsheet before jumping into fancy machine learning models, you’re in the right place. Today, I’m spilling the beans on Exploratory Data Analysis (EDA), the unsung hero of data science. It’s not glamorous, but it’s where the magic begins.

I’ve been playing with data for years, and EDA is my go-to first step. It’s like getting to know a new friend: figuring out their quirks, strengths, and what they’re hiding. In this guide, I’ll walk you through how I tackle EDA in Python, using a dataset I stumbled upon about student performance (students.csv). No fluff, just practical steps with code you can run yourself. Let’s dive in!

Imagine you get a huge box of puzzle pieces. You don’t start jamming them together right away; you dump them out, look at the shapes, and see what you’ve got. That’s EDA. It’s about exploring your data to understand it before doing anything fancy like building models.

For this guide, I’m using a dataset with records on 1,000 students: things like their gender, whether they took a test prep course, and their scores in math, reading, and writing. My goal? Get to know this data and clean it up so it’s ready for the next step.

Here’s how I tackle EDA, broken down into easy chunks:

- Check the Basics (Info & Shape): How big is it? What’s inside?

- Fix Missing Stuff: Are there any gaps?

- Spot Outliers: Any weird numbers?

- Look at Skewness: Is the data lopsided?

- Turn Words into Numbers (Encoding): Make categories model-friendly.

- Scale Numbers: Keep everything fair.

- Make New Features: Add something useful.

- Find Connections: See how things relate.

I’ll show you each one with our student data. Super simple!

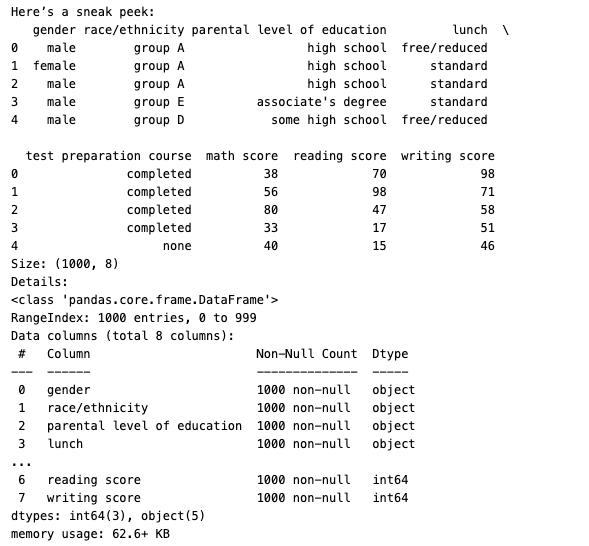

First, I load the data and take a quick peek. Here’s what I do:

import pandas as pd  # For handling data

import numpy as np  # For math stuff

import seaborn as sns  # For pretty charts

import matplotlib.pyplot as plt  # For drawing

# Load the student data

data = pd.read_csv('students.csv')

# See the first few rows

print("Here's a sneak peek:")

print(data.head())

# How many rows and columns?

print("Size:", data.shape)

# What's in there?

print("Details:")

data.info()

What I See:

The first few rows show columns like gender, lunch, and math score. The shape says 1,000 rows and 8 columns, nice and small. The info() output tells me there’s no missing data (yay!) and splits the columns into text (object columns like gender) and numbers (integer columns like math score). It’s like a quick hello from the data!
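If you want that text-versus-numbers split as actual lists of column names, `select_dtypes` does it. Here’s a minimal sketch; since students.csv isn’t bundled here, it builds a tiny stand-in DataFrame with some of the same column names and made-up values, so treat the rows as hypothetical.

```python
import pandas as pd

# Hypothetical stand-in for students.csv: same column names, made-up rows
data = pd.DataFrame({
    "gender": ["female", "male", "female"],
    "lunch": ["standard", "free/reduced", "standard"],
    "test preparation course": ["none", "completed", "none"],
    "math score": [65, 47, 90],
    "reading score": [70, 57, 95],
    "writing score": [68, 44, 93],
})

# Everything that isn't numeric is treated as categorical (text) here
categorical_cols = data.select_dtypes(exclude="number").columns.tolist()
numeric_cols = data.select_dtypes(include="number").columns.tolist()

print("Categorical:", categorical_cols)
print("Numeric:", numeric_cols)

# Summary stats for the numeric columns: count, mean, std, min, quartiles, max
print(data[numeric_cols].describe())
```

Using `exclude="number"` for the text side catches object columns as well as pandas’ dedicated string dtype, so the split doesn’t depend on how the CSV’s text columns were parsed.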

Missing data can mess things up, so I check:

print("Any gaps?")

print(data.isnull().sum())

What I See:

All zeros: no missing values! That’s lucky. If I found some, like blank math scores, I’d either drop those rows (data.dropna()) or fill them with the average (data['math score'] = data['math score'].fillna(data['math score'].mean())). Today, I’m off the hook.
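Here’s a small sketch of both options on a made-up frame with two gaps (the values are hypothetical). One detail worth knowing: fillna returns a new Series rather than changing the column in place, so the result has to be assigned back.

```python
import numpy as np
import pandas as pd

# Hypothetical scores with two gaps (NaN) in math score
data = pd.DataFrame({
    "math score": [72.0, np.nan, 90.0, 47.0, np.nan],
    "reading score": [72.0, 57.0, 95.0, 44.0, 80.0],
})

print(data.isnull().sum())  # missing values per column

# Option 1: drop every row that has at least one missing value
dropped = data.dropna()

# Option 2: fill gaps with the column mean (mean() skips NaN by default);
# fillna returns a new Series, so assign it back to the column
filled = data.copy()
filled["math score"] = filled["math score"].fillna(filled["math score"].mean())

print("Shape after dropna:", dropped.shape)
print(filled)
```

Dropping is simplest but throws away the reading scores in those rows too; mean-filling keeps every row at the cost of pulling the filled values toward the average.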

Outliers are numbers that stick out, like a kid scoring 0 when everyone else is around 70. I use a box plot to spot them:

plt.figure(figsize=(8, 5))

sns.boxplot(x=data['math score'])

plt.title('Math Scores - Any Odd Ones?')

plt.show()

What I See:

Most scores fall between 50 and 80, but there’s a dot way down at 0. Is that a mistake? Maybe not; someone might have bombed the test. If I wanted to remove it, I’d filter with the interquartile range (IQR) rule:

# Find the "normal" range

Q1 = data['math score'].quantile(0.25)

Q3 = data['math score'].quantile(0.75)

IQR = Q3 - Q1

# Keep only rows between Q1 - 1.5 * IQR and Q3 + 1.5 * IQR

data_clean = data[(data['math score'] >= Q1 - 1.5 * IQR) & (data['math score'] <= Q3 + 1.5 * IQR)]

print("Size after cleaning:", data_clean.shape)

But I’ll keep it; it feels real.
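The 1.5 * IQR fences are also what seaborn’s box plot uses by default (its whis=1.5 setting) to decide where the whiskers stop and the dots start. This sketch wraps the rule in a helper, `iqr_bounds` (a hypothetical name, not a pandas function), and flags outliers instead of deleting them, which fits the choice to keep that 0. The scores are made up.

```python
import pandas as pd


def iqr_bounds(values: pd.Series, k: float = 1.5) -> tuple[float, float]:
    """Return the (lower, upper) fences of the k * IQR outlier rule."""
    q1 = values.quantile(0.25)
    q3 = values.quantile(0.75)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


# Hypothetical math scores with one very low value
scores = pd.Series([0, 55, 60, 62, 65, 68, 70, 72, 75, 80])
low, high = iqr_bounds(scores)

# Flag (rather than drop) anything outside the fences
is_outlier = (scores < low) | (scores > high)
print(f"Fences: {low:.2f} to {high:.2f}")
print("Outliers:", scores[is_outlier].tolist())
```

Keeping a boolean flag lets you compare results with and without the outliers later, without losing the rows.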

Skewness is when data leans one way, with a longer tail on one side (for example, a handful of very low scores stretching out the left side). I check it for math score:

from scipy.stats import skew

print("Skewness (Math Score):", skew(data['math score']))

# Draw a picture

sns.histplot(data['math score'], bins=10, kde=True)

plt.title('How Math Scores Spread')

plt.show()

Skewness (Math Score): -0.033889641841880695

What I See:

Skewness is a little below zero: the low-score tail is slightly longer, but it’s not a big deal. The chart shows most scores between 60 and 80. If it were heavily skewed (say, 2.0), I’d tweak it with something like np.log1p(data['math score']). Here, it’s fine.
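To see what log1p does to a genuinely lopsided column, here’s a sketch on a hypothetical right-skewed feature (simulated “hours studied” drawn from a lognormal distribution; none of this comes from students.csv). log1p computes log(1 + x), so zeros stay defined. Note that it only tames right (positive) skew like the 2.0 example; on a left-skewed column it would make things more lopsided.

```python
import numpy as np
from scipy.stats import skew

# Hypothetical right-skewed feature: most values small, a few very large
rng = np.random.default_rng(42)
hours = rng.lognormal(mean=1.0, sigma=1.0, size=2000)

# log1p(x) = log(1 + x): compresses large values much more than small ones
hours_log = np.log1p(hours)

before = skew(hours)
after = skew(hours_log)
print(f"Skewness before: {before:.2f}, after log1p: {after:.2f}")
```
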

Computers don’t understand words like “male” or “female”; they need numbers. I fix gender first:

Install scikit-learn first (the % line is a Jupyter magic; in a terminal, run pip install scikit-learn instead):

%pip install scikit-learn

from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

data['gender_num'] = le.fit_transform(data['gender'])

print("Gender as Numbers:")

print(data[['gender', 'gender_num']].head())

What I See:

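female becomes 0 and male becomes 1 (LabelEncoder sorts the categories alphabetically). Easy! For a column like lunch (standard or free/reduced), I prefer one-hot encoding, which splits it into one column per option and also works for columns with more than two options: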

data = pd.get_dummies(data, columns=['lunch'], prefix='lunch')

Now I’ve got lunch_standard and lunch_free/reduced columns, nice for later.
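Here are both encodings side by side on a few hypothetical rows that reuse the dataset’s category values. One caveat: scikit-learn documents LabelEncoder for target labels (y); for input features, OrdinalEncoder or OneHotEncoder are the intended tools, although for a two-value column like gender the result is the same sorted 0/1 mapping.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Hypothetical rows using the same category values as the article
data = pd.DataFrame({
    "gender": ["female", "male", "male", "female"],
    "lunch": ["standard", "free/reduced", "standard", "standard"],
})

# Label encoding: classes are sorted alphabetically, so female -> 0, male -> 1
le = LabelEncoder()
data["gender_num"] = le.fit_transform(data["gender"])
print(dict(zip(le.classes_.tolist(), range(len(le.classes_)))))

# One-hot encoding: one indicator column per lunch option, named "lunch_<value>"
# (True/False in recent pandas versions, 0/1 in older ones)
data = pd.get_dummies(data, columns=["lunch"], prefix="lunch")
print(data.columns.tolist())
print(data)
```

get_dummies drops the original lunch column and appends the indicator columns at the end, which is why they show up after gender_num.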

Scores go from 0 to 100, but what if I add a feature on a much smaller scale, like “hours studied”? I scale the numbers to keep things fair:

from sklearn.preprocessing import MinMaxScaler

scaler = MinMaxScaler()

data['math_score_norm'] = scaler.fit_transform(data[['math score']])

print("Math Score (0 to 1):")

print(data['math_score_norm'].head())

Standardization (centered at 0):

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

data['math_score_std'] = scaler.fit_transform(data[['math score']])

print("Math Score (Standardized):")

print(data['math_score_std'].head())

What I See:

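Normalization squeezes scores into the range 0 to 1; since the math scores here span 0 to 100, 72 becomes 0.72. Standardization subtracts the mean and divides by the standard deviation, so values center around 0 (e.g., 72 becomes 0.39). I’d use standardization for most models; it’s my go-to.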
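Both scalers boil down to simple formulas: min-max is (x - min) / (max - min), and standardization is (x - mean) / std. This sketch computes them by hand on a few hypothetical scores and checks the results against scikit-learn. StandardScaler uses the population standard deviation (ddof=0), which is also NumPy’s default.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical math scores as a single-column 2-D array (scalers expect 2-D input)
scores = np.array([[0.0], [50.0], [72.0], [100.0]])

# Min-max normalization: (x - min) / (max - min)
manual_norm = (scores - scores.min()) / (scores.max() - scores.min())

# Standardization: (x - mean) / std, using the population std (ddof=0)
manual_std = (scores - scores.mean()) / scores.std()

sk_norm = MinMaxScaler().fit_transform(scores)
sk_std = StandardScaler().fit_transform(scores)

print(manual_norm.ravel())  # 72 maps to 0.72 because min is 0 and max is 100
print(np.allclose(manual_norm, sk_norm), np.allclose(manual_std, sk_std))
```

In a real modeling workflow you’d fit the scaler on the training split only and reuse it to transform the test split, so nothing about the test data leaks into training.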

Sometimes I combine columns to get more out of the data. I create an average_score:

data['average_score'] = (data['math score'] + data['reading score'] + data['writing score']) / 3

print("Average Score:")

print(data['average_score'].head())

What I See:

A kid with 72, 72, and 74 gets 72.67. It’s a quick way to see overall performance, pretty handy!
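A slightly more compact way to get the same average is a row-wise mean over the score columns. This sketch uses two hypothetical rows (the first reuses the 72/72/74 example). One difference from adding the columns by hand: mean(axis=1) skips missing values by default, while the + version returns NaN if any of the three scores is missing.

```python
import pandas as pd

# Hypothetical rows; the first matches the 72/72/74 example in the text
data = pd.DataFrame({
    "math score": [72, 60],
    "reading score": [72, 65],
    "writing score": [74, 70],
})

score_cols = ["math score", "reading score", "writing score"]

# Row-wise mean (axis=1) across the three score columns
data["average_score"] = data[score_cols].mean(axis=1)

print(data["average_score"].round(2))
```
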

Now I look for patterns. First, a heatmap of how the scores correlate:

correlation = data[['math score', 'reading score', 'writing score']].corr()

plt.figure(figsize=(8, 6))

sns.heatmap(correlation, annot=True, cmap='coolwarm')

plt.title('How Scores Connect')

plt.show()

What I See:

Numbers like 0.8 and 0.95: the scores move together. If you’re good at math, you’re likely good at reading too.
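corr() computes Pearson’s r by default, which measures how linearly two columns move together, from -1 to 1. Here’s a sketch that writes the formula out by hand on made-up scores and checks it against pandas. If you suspect a relationship that is monotonic but not linear, corr(method='spearman') is the rank-based alternative.

```python
import numpy as np
import pandas as pd

# Hypothetical paired scores for five students
math_scores = pd.Series([50, 60, 70, 80, 90], dtype=float)
reading_scores = pd.Series([55, 58, 75, 79, 92], dtype=float)

# Pearson r = sum(dx * dy) / sqrt(sum(dx^2) * sum(dy^2)), with dx, dy deviations from the mean
dx = math_scores - math_scores.mean()
dy = reading_scores - reading_scores.mean()
r_manual = (dx * dy).sum() / np.sqrt((dx ** 2).sum() * (dy ** 2).sum())

# pandas uses Pearson unless told otherwise
r_pandas = math_scores.corr(reading_scores)

print(f"manual r = {r_manual:.3f}, pandas r = {r_pandas:.3f}")
```
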

Then, a scatter plot:

plt.figure(figsize=(8, 6))

sns.scatterplot(x='math score', y='reading score', hue='lunch_standard', data=data)

plt.title('Math vs. Reading by Lunch')

plt.show()

What I See:

Kids with standard lunch (orange dots) tend to score higher. Maybe they’re eating better? The plot shows the pattern, not the reason behind it.
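To put a number on what the scatter plot suggests, group means work well. This sketch uses a few hypothetical rows with a True/False lunch_standard column, which is what get_dummies produces in recent pandas versions.

```python
import pandas as pd

# Hypothetical rows; lunch_standard is the indicator column from get_dummies
data = pd.DataFrame({
    "lunch_standard": [True, False, True, False, True, False],
    "math score": [72, 50, 80, 58, 66, 61],
    "reading score": [75, 55, 82, 60, 70, 63],
})

# Average math and reading score for each lunch group
group_means = data.groupby("lunch_standard")[["math score", "reading score"]].mean()
print(group_means)
```

A gap between the group averages is still just an association; it doesn’t say whether lunch itself, or something that travels with it, drives the difference.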

Finally, a box plot:

plt.figure(figsize=(8, 6))

sns.boxplot(x='test preparation course', y='math score', data=data)

plt.title('Math Scores with Test Prep')

plt.show()

What I See:

Kids who completed test prep have a higher median math score. Practice helps!
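The box plot draws each group’s quartiles and median, so you can also read those numbers off directly with groupby and describe. A sketch on hypothetical rows, assuming the column’s two values are completed and none:

```python
import pandas as pd

# Hypothetical rows for the two test prep groups
data = pd.DataFrame({
    "test preparation course": ["completed", "none", "none", "completed", "none", "completed"],
    "math score": [78, 60, 65, 82, 55, 70],
})

# describe() per group: the 25%, 50% (median) and 75% columns match the box edges and middle line
summary = data.groupby("test preparation course")["math score"].describe()
print(summary[["count", "25%", "50%", "75%"]])
```
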