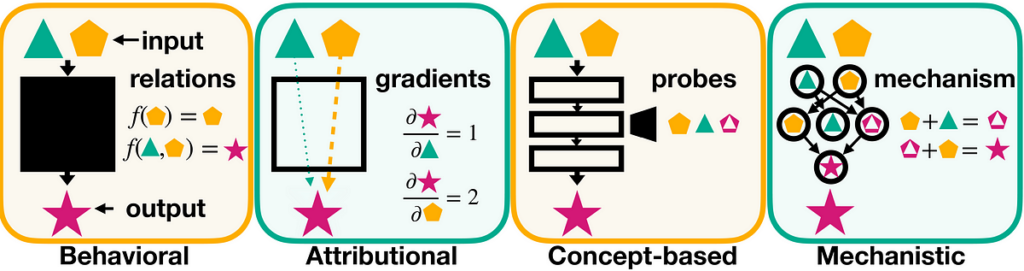

Mechanistic interpretability is an approach to understanding how machine learning models, particularly deep neural networks, process and represent information at a fundamental level. It seeks to go beyond black-box explanations and identify specific circuits, patterns, and structures within a model that contribute to its behavior.

Circuit Analysis

- Instead of treating models as a monolithic whole, researchers analyze how neurons and attention heads interact.

- This involves tracing the flow of information through layers, identifying modular components, and understanding how they contribute to specific predictions.

Feature Decomposition

- Breaking down how models represent concepts internally.

- In vision models, this could mean finding neurons that activate for specific textures, objects, or edges.

- In language models, this might involve neurons that detect grammatical structure or specific entities.
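
One common way to probe what a neuron represents is to rank inputs by how strongly they activate it. The sketch below is only an illustration: `edge_neuron` is a hypothetical hand-written stand-in for a real model neuron, and the four toy signals are invented for the example.

```python
def edge_neuron(signal):
    """Hypothetical neuron: ReLU of the largest rise between adjacent values."""
    largest_rise = max(b - a for a, b in zip(signal, signal[1:]))
    return max(0.0, largest_rise)

# Toy 1-D "images" (assumed data, not taken from any real dataset).
inputs = {
    "flat": [1.0, 1.0, 1.0, 1.0],
    "ramp": [0.0, 0.3, 0.6, 0.9],
    "step_up": [0.0, 0.0, 1.0, 1.0],
    "step_down": [1.0, 1.0, 0.0, 0.0],
}

# Rank inputs from most to least activating for this neuron.
ranked = sorted(inputs, key=lambda name: edge_neuron(inputs[name]), reverse=True)
print(ranked)
```

The sharp upward step ranks first and the gentle ramp second, which is the kind of evidence that would suggest the neuron responds to rising edges.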

Activation Patching & Ablations

- Activation patching: Replacing a component's activations with those recorded from a different run (for example, a different input) to see how behavior changes.

- Ablations: Disabling specific neurons or attention heads to test their importance.
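
Both interventions can be sketched on a toy network. Everything here is an assumption made for illustration: the weights `W1` and `W2`, the `forward` function with its `hook` argument, and the two inputs are hypothetical and do not come from any real model or library.

```python
def relu(values):
    return [max(0.0, v) for v in values]

def matvec(matrix, vector):
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

# Hypothetical toy weights: 2 inputs -> 3 hidden neurons -> 1 output.
W1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W2 = [[1.0, -1.0, 0.5]]

def forward(x, hook=None):
    """Run the toy model; `hook` may return edited hidden activations."""
    hidden = relu(matvec(W1, x))
    if hook is not None:
        hidden = hook(hidden)
    return matvec(W2, hidden)[0], hidden

def ablate(index):
    """Hook that zeroes one hidden neuron."""
    def hook(hidden):
        edited = list(hidden)
        edited[index] = 0.0
        return edited
    return hook

def patch_from(source_hidden, index):
    """Hook that copies one neuron's activation from another run."""
    def hook(hidden):
        edited = list(hidden)
        edited[index] = source_hidden[index]
        return edited
    return hook

clean_input = [2.0, 1.0]
corrupted_input = [0.0, 1.0]

clean_out, clean_hidden = forward(clean_input)
corrupted_out, _ = forward(corrupted_input)
# Ablation: switch off neuron 0 on the clean input.
ablated_out, _ = forward(clean_input, hook=ablate(0))
# Patching: run the corrupted input, but give neuron 0 its clean activation.
patched_out, _ = forward(corrupted_input, hook=patch_from(clean_hidden, 0))

print(clean_out, corrupted_out, ablated_out, patched_out)
```

In this toy setup, ablating neuron 0 lowers the clean output, and patching its clean activation into the corrupted run moves the output back toward the clean value. That is the pattern one would read as evidence that the neuron matters for the behavior.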

Sparse Coding & Superposition

- Many models don’t store features in a one-neuron-per-feature way.

- Instead, features are often entangled, meaning a single neuron contributes to multiple different concepts depending on context.

- Sparse coding techniques aim to disentangle these overlapping representations.
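
A toy picture of superposition: three features packed into two neurons. This is a minimal sketch under stated assumptions. The feature directions are hand-picked (120 degrees apart), and the projection-plus-ReLU readout in `decode` is a simple stand-in for illustration, not an actual sparse coding algorithm.

```python
import math

# Three hypothetical feature directions in a 2-neuron layer, 120 degrees apart.
angles = [0.0, 2 * math.pi / 3, 4 * math.pi / 3]
directions = [(math.cos(a), math.sin(a)) for a in angles]

def encode(features):
    """Mix feature strengths into 2 neuron activations."""
    return tuple(
        sum(strength * d[i] for strength, d in zip(features, directions))
        for i in range(2)
    )

def decode(neurons):
    """Project activations onto each feature direction, then apply ReLU."""
    return [
        max(0.0, sum(n * di for n, di in zip(neurons, d)))
        for d in directions
    ]

# Neuron 0 has a nonzero weight for every feature, so it is not "one concept".
neuron0_weights = [d[0] for d in directions]

# Sparse case: only feature 1 is active, and the readout recovers it.
sparse_recovered = decode(encode([0.0, 1.0, 0.0]))

# Denser case: features 0 and 1 are both active, so they interfere.
dense_recovered = decode(encode([1.0, 1.0, 0.0]))

print(neuron0_weights, sparse_recovered, dense_recovered)
```

When only one feature is active, the readout recovers it cleanly. When two are active at once, each is recovered at reduced strength, which illustrates why superposition depends on features being sparse.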

Automated Interpretability Methods

- Using tools like dictionary learning, causal scrubbing, and feature visualization to automate discovery of internal structures.

- For example, applying principal component analysis (PCA) or sparse autoencoders to understand the latent space of a model.
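
As a rough illustration of the PCA idea, the sketch below finds the top principal component of a set of activations using power iteration on the covariance matrix. The activations are synthetic (assumed data in which two of three neurons vary together), and `top_principal_component` is a hypothetical helper written for this example; in practice one would normally use a numerical library.

```python
import math
import random

def top_principal_component(rows, iterations=200):
    """Return the unit vector along which the rows vary the most."""
    n, dim = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(dim)]
    centered = [[r[j] - means[j] for j in range(dim)] for r in rows]
    # Sample covariance matrix of the centered activations.
    cov = [
        [sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(dim)]
        for i in range(dim)
    ]
    # Power iteration: repeatedly apply the covariance matrix and renormalize.
    v = [1.0] * dim
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(dim)) for i in range(dim)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v

rng = random.Random(0)
# Synthetic activations: neurons 0 and 1 move together, neuron 2 is mostly noise.
activations = []
for _ in range(200):
    shared = rng.gauss(0.0, 3.0)
    activations.append([
        shared + rng.gauss(0.0, 0.1),
        shared + rng.gauss(0.0, 0.1),
        rng.gauss(0.0, 0.1),
    ])

component = top_principal_component(activations)
print([round(c, 2) for c in component])
```

The recovered direction puts nearly equal weight on neurons 0 and 1 and almost none on neuron 2, matching how the synthetic data was built. On real activations the directions are rarely this clean, which is part of the motivation for sparse autoencoders.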

Think of a deep neural network like a brain. Mechanistic interpretability is about figuring out exactly how that brain processes information, rather than just knowing that it gets the right answer.

1. Neurons and Circuits = Brain Regions and Pathways

- In both the brain and neural networks, neurons process information.

- But neurons don’t act alone; they form circuits that work together to recognize patterns, make decisions, or predict outcomes.

- Mechanistic interpretability is like neuroscience for AI: we’re trying to map out these circuits and understand their function.

2. Activation Patching = Brain Lesions & Stimulation

- In neuroscience, scientists disable parts of the brain (lesions) or stimulate specific regions to see what happens.

- In AI, researchers do something similar: they turn off specific neurons or attention heads to see how the model changes.

- Example: In a vision model, disabling certain neurons might stop it from recognizing faces but not objects, just as brain damage in the fusiform gyrus can cause face blindness (prosopagnosia).

3. Feature Superposition = Multitasking Neurons

- In the brain, individual neurons can respond to multiple things: a single neuron in the hippocampus might fire for both your grandmother’s face and your childhood home.

- AI models do the same thing: neurons don’t always store one concept at a time; they multitask.

- Mechanistic interpretability tries to separate these entangled features, just as neuroscientists try to figure out how neurons encode memories and concepts.

4. Attention Heads = Selective Attention in the Brain

- In transformers (like GPT), attention heads focus on different words in a sentence to understand meaning.

- This is similar to how the prefrontal cortex directs attention: you don’t process every sound in a noisy room equally; your brain decides what to focus on.

- Researchers study which attention heads focus on what, just as neuroscientists study how the brain filters information.
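
To make "which head focuses on what" concrete, here is a minimal sketch of scaled dot-product attention weights for a single head. The query and key vectors are hand-picked assumptions rather than values from a trained model, and the causal mask that GPT-style models apply is left out for brevity.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(queries, keys):
    """Scaled dot-product attention weights: one row per query token."""
    scale = math.sqrt(len(keys[0]))
    rows = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        rows.append(softmax(scores))
    return rows

tokens = ["the", "cat", "sat"]
# Hypothetical per-token query and key vectors for one attention head.
queries = [[1.0, 0.0], [0.0, 1.0], [0.0, 4.0]]
keys = [[1.0, 0.0], [0.0, 4.0], [0.0, 1.0]]

weights = attention_weights(queries, keys)
# For each token, report the token it attends to most strongly.
most_attended = [tokens[row.index(max(row))] for row in weights]
print(most_attended)
```

Inspecting rows of `weights` like this, across many inputs, is one simple way to form a hypothesis about what a given head attends to before testing it with interventions.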

5. Interpretability Tools = Brain Imaging (fMRI, EEG, etc.)

- In neuroscience, we use fMRI, EEG, and single-neuron recordings to peek inside the brain.

- In AI, we use tools like activation visualization, circuit tracing, and causal interventions to see what’s happening inside models.

- Understanding how AI models work can make them safer, just as understanding the brain helps treat neurological disorders.

- It helps us debug AI systems and prevent errors, much like diagnosing brain disorders.

- It also teaches us more about intelligence itself, both artificial and biological.

- Debugging & Safety → Helps prevent adversarial attacks and unintended biases.

- Model Alignment → Helps verify that models behave as expected, which is crucial for AI alignment research.

- Theoretical Insights → Helps bridge deep learning with neuroscience and cognitive science.

- Efficiency & Optimization → Identifies redundant or unnecessary computations in a model, leading to better architectures.